The landscape of AI foundation models is evolving rapidly, but few entries have been as significant in 2025 as the arrival of Z.ai’s GLM-4.5 series: GLM-4.5 and its lighter sibling GLM-4.5-Air. Unveiled by Zhipu AI, these models set remarkably high standards for unified agentic capabilities and open access. They aim to bridge the gap between reasoning, coding, and intelligent agents, and to do so at both massive and manageable scales.

Model Architecture and Parameters

| Model | Total Parameters | Active Parameters | Notability |
|---|---|---|---|
| GLM-4.5 | 355B | 32B | Among the largest open-weight models, top benchmark performance |
| GLM-4.5-Air | 106B | 12B | Compact, efficient, targeting mainstream hardware compatibility |

GLM-4.5 is built on a Mixture of Experts (MoE) architecture with 355 billion total parameters, of which 32 billion are active for any given token. This model is built for cutting-edge performance and targets demanding reasoning and agentic applications. GLM-4.5-Air has 106B total and 12B active parameters. It offers similar capabilities with a dramatically reduced hardware and compute footprint.
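The article does not describe GLM-4.5's router in detail. What follows is therefore a minimal, generic sketch of top-k MoE gating in plain Python, not the model's actual implementation. It assumes a linear router, k = 2, and toy experts; all names, such as `moe_forward`, are hypothetical. The sketch shows why only a fraction of the parameters is "active" per token: experts outside the top k are never evaluated.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]

def softmax(xs: Sequence[float]) -> Vector:
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(
    token: Vector,
    experts: Sequence[Callable[[Vector], Vector]],
    router: Sequence[Vector],
    k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route one token to its top-k experts and mix their outputs."""
    # Router logit per expert: dot product of the token with that expert's router row.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router]
    # Keep the k highest-scoring experts; all other experts are skipped entirely.
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    weights = softmax([logits[i] for i in top])
    out = [0.0] * len(token)
    for w, i in zip(weights, top):
        y = experts[i](token)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, top

def active_fraction(total_b: float, active_b: float) -> float:
    # Share of parameters used per token, e.g. 32B of 355B for GLM-4.5.
    return active_b / total_b

# Toy setup: 8 hypothetical experts, expert i scales its input by (i + 1).
experts = [lambda x, s=i + 1: [s * v for v in x] for i in range(8)]
router = [[float(i), 0.0] for i in range(8)]  # expert i gets logit i for token [1, 0]
out, chosen = moe_forward([1.0, 0.0], experts, router, k=2)
print(chosen)  # [7, 6]
print(f"GLM-4.5 active share: {active_fraction(355, 32):.1%}")     # 9.0%
print(f"GLM-4.5-Air active share: {active_fraction(106, 12):.1%}")  # 11.3%
```

Production routers also add load-balancing objectives and batch tokens per expert. Those details are omitted here.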

Hybrid Reasoning: Two Modes in One Framework

Both models introduce a hybrid reasoning approach:

- Thinking Mode: Enables complex step-by-step reasoning, tool use, multi-turn planning, and autonomous agent tasks.

- Non-Thinking Mode: Optimized for instant, stateless responses, making the models versatile for conversational and quick-reaction use cases.

This dual-mode design serves both sophisticated multi-step workflows and low-latency interactive needs within a single model, a useful combination for building next-generation AI agents.
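Assume the hosted API lets callers pick the mode per request. In that case, an application can decide per task which mode to use; the sketch below builds such a request body. The `thinking` field with `"enabled"`/`"disabled"` values and the `glm-4.5` model id are assumptions modeled on an OpenAI-style chat API. Check them against Z.ai's current API reference. `choose_mode` is a hypothetical heuristic, not part of any SDK.

```python
import json

def choose_mode(task: str) -> bool:
    """Hypothetical heuristic: use Thinking Mode for multi-step or tool-heavy tasks."""
    markers = ("plan", "debug", "step by step", "use the tool")
    return any(m in task.lower() for m in markers)

def build_chat_request(prompt: str, thinking: bool, model: str = "glm-4.5") -> dict:
    # ASSUMED request schema; verify field names against the provider's docs.
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "thinking": {"type": "enabled" if thinking else "disabled"},
    }

task = "Plan a three-step migration of our billing database"
request = build_chat_request(task, thinking=choose_mode(task))
print(json.dumps(request, indent=2))
```

A quick question such as "What time is it?" would fall through to Non-Thinking Mode under this heuristic. A planning request like the one above enables Thinking Mode.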

Performance Benchmarks

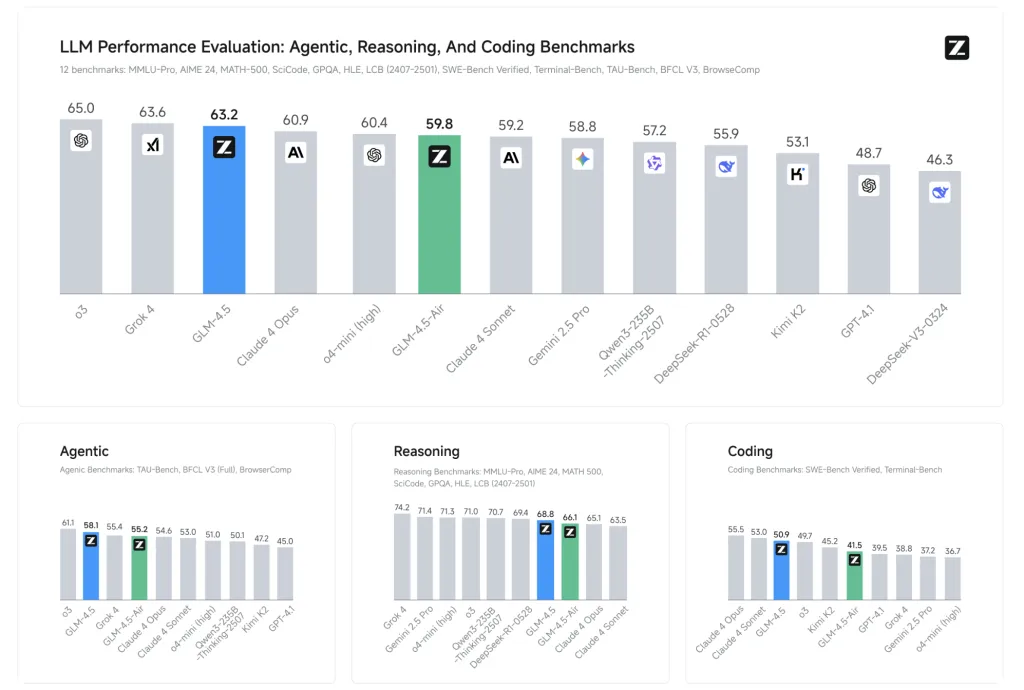

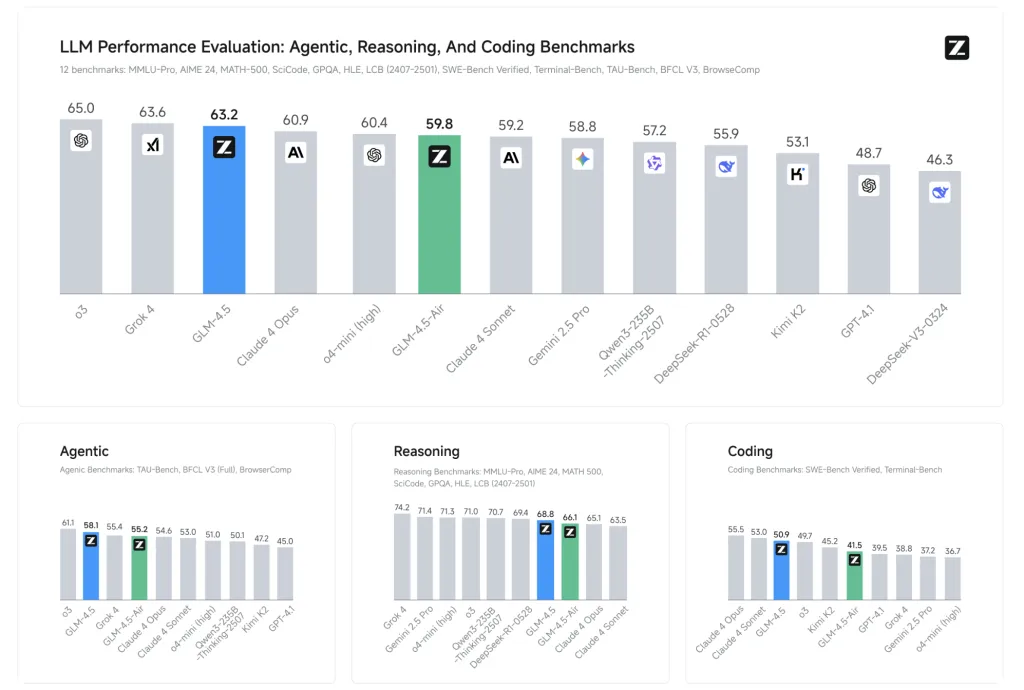

Z.ai benchmarked GLM-4.5 on 12 industry-standard tests (including MMLU, GSM8K, and HumanEval):

- GLM-4.5: Average benchmark score of 63.2, ranking third overall among all evaluated models and first among open-source models.

- GLM-4.5-Air: Delivers a competitive 59.8, establishing itself as the leader among ~100B-parameter models.

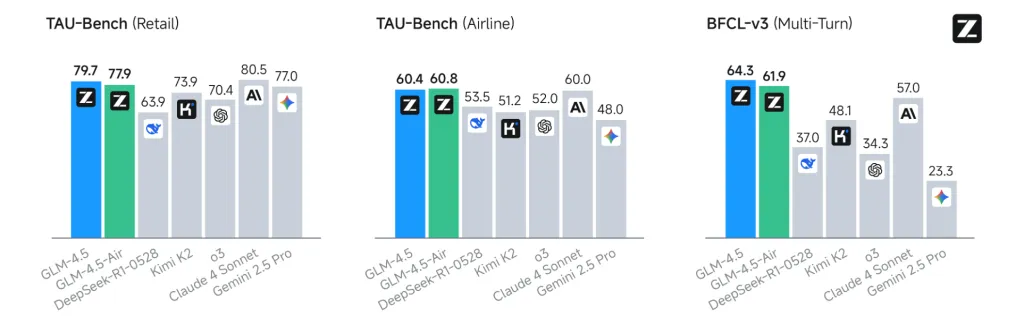

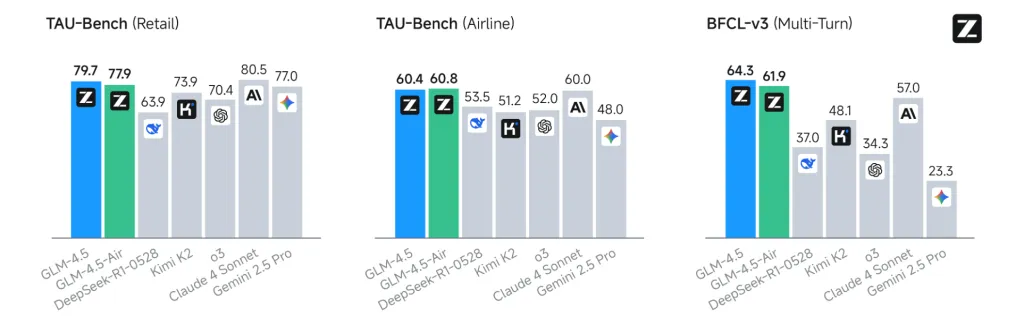

- GLM-4.5 outperforms notable competitors in specific areas, for example a tool-calling success rate of 90.6%, ahead of Claude 3.5 Sonnet and Kimi K2.

- Notably strong results on Chinese-language tasks and coding, with consistent SOTA results across open benchmarks.

Agentic Capabilities and Architecture

GLM-4.5 advances an “agent-native” design: core agentic functions (reasoning, planning, action execution) are built directly into the model rather than bolted on by an external framework. In practice, this covers:

- Multi-step task decomposition and planning

- Tool use and integration with external APIs

- Complex data visualization and workflow management

- Native support for reasoning and perception-action cycles

These capabilities enable end-to-end agentic applications that were previously limited to smaller, hard-coded frameworks or closed-source APIs.
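Tool use of this kind usually runs as a loop: the model emits a tool call, the application executes it, and the result is fed back until the model answers. Below is a minimal sketch of that loop. The message format, the `get_weather` tool, and `fake_model` (a stub standing in for GLM-4.5) are hypothetical. Real deployments use the model's own tool-call format, which the tool parsers integrated into frameworks such as vLLM and SGLang handle.

```python
import json
from typing import Callable, Dict, List

# Hypothetical tool registry: tool name -> Python function.
TOOLS: Dict[str, Callable[..., object]] = {
    "get_weather": lambda city: {"city": city, "temp_c": 21},
}

def dispatch(tool_call_json: str) -> str:
    """Execute one model-emitted tool call and return the result as JSON text."""
    call = json.loads(tool_call_json)
    fn = TOOLS.get(call.get("name"))
    if fn is None:
        return json.dumps({"error": f"unknown tool {call.get('name')!r}"})
    return json.dumps(fn(**call.get("arguments", {})))

def run_agent(model_step: Callable[[List[dict]], dict], user_msg: str, max_turns: int = 5):
    """Alternate model turns and tool executions until the model gives a final answer."""
    history: List[dict] = [{"role": "user", "content": user_msg}]
    for _ in range(max_turns):
        reply = model_step(history)
        history.append(reply)
        if "tool_call" not in reply:
            return reply["content"], history
        history.append({"role": "tool", "content": dispatch(reply["tool_call"])})
    raise RuntimeError("agent did not finish within max_turns")

def fake_model(history: List[dict]) -> dict:
    # Stub model: first request a tool, then answer from the tool result.
    last = history[-1]
    if last["role"] == "user":
        call = {"name": "get_weather", "arguments": {"city": "Beijing"}}
        return {"role": "assistant", "content": "", "tool_call": json.dumps(call)}
    result = json.loads(last["content"])
    return {"role": "assistant", "content": f"It is {result['temp_c']} C in {result['city']}."}

answer, history = run_agent(fake_model, "What's the weather in Beijing?")
print(answer)  # It is 21 C in Beijing.
```

The `max_turns` cap is a simple guard against a model that keeps requesting tools without converging.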

Efficiency, Speed, and Cost

- Speculative Decoding & Multi-Token Prediction (MTP): With features like MTP, GLM-4.5 achieves 2.5×–8× faster inference than previous models. Generation speeds exceed 100 tokens/sec on the high-speed API, and up to 200 tokens/sec is claimed in practice.

- Memory & Hardware: GLM-4.5-Air’s 12B-active design is compatible with consumer GPUs (32–64GB VRAM), and it can be quantized to fit a broader range of hardware. This lets advanced users run a high-performance LLM locally.

- Pricing: API calls start as low as $0.11 per million input tokens and $0.28 per million output tokens. Those are industry-leading prices for the scale and quality on offer.
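The speed-up from speculative decoding comes from a draft-and-verify loop, sketched below. In GLM-4.5 the MTP layer plays the drafter's role. Here `draft` and `target` are hypothetical toy next-token functions over integer tokens. Acceptance uses greedy exact matching, a simplification of the sampling-based acceptance rule. The key property is that the output is identical to plain greedy decoding with the target model, while the target verifies a whole block of drafted tokens per pass.

```python
from typing import Callable, List, Tuple

NextToken = Callable[[List[int]], int]  # greedy next-token function

def greedy_decode(model: NextToken, prompt: List[int], n_new: int) -> List[int]:
    """Reference: one target call per generated token."""
    seq = list(prompt)
    for _ in range(n_new):
        seq.append(model(seq))
    return seq[len(prompt):]

def speculative_decode(target: NextToken, draft: NextToken, prompt: List[int],
                       n_new: int, k: int = 4) -> Tuple[List[int], int]:
    """Greedy speculative decoding; returns tokens and the number of verify passes."""
    seq = list(prompt)
    passes = 0
    while len(seq) - len(prompt) < n_new:
        # 1. The cheap drafter proposes k tokens autoregressively.
        proposal, ctx = [], list(seq)
        for _ in range(k):
            t = draft(ctx)
            proposal.append(t)
            ctx.append(t)
        # 2. The target checks every drafted position (one batched pass in a real system).
        passes += 1
        accepted, ctx = [], list(seq)
        for t in proposal:
            expected = target(ctx)
            if t != expected:
                accepted.append(expected)  # target's token replaces the first mismatch
                break
            accepted.append(t)
            ctx.append(t)
        else:
            accepted.append(target(ctx))  # bonus token when all k drafts are accepted
        seq.extend(accepted)
    return seq[len(prompt):len(prompt) + n_new], passes

def target(ctx: List[int]) -> int:
    return (ctx[-1] + 1) % 10

def draft(ctx: List[int]) -> int:
    return 0 if ctx[-1] == 5 else (ctx[-1] + 1) % 10  # toy drafter, wrong after token 5

out, passes = speculative_decode(target, draft, [0], n_new=12, k=4)
print(out)     # [1, 2, 3, 4, 5, 6, 7, 8, 9, 0, 1, 2]
print(passes)  # 4
```

Twelve tokens here need 4 verification passes instead of 12 sequential target steps. The real gain depends on how often the drafter's guesses are accepted.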
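A back-of-the-envelope estimate shows why quantization matters for local use. In an MoE model, routing sends different tokens to different experts. As a result, all 106B of GLM-4.5-Air's weights normally have to be resident in GPU memory (or offloaded to CPU RAM). The 12B active parameters determine per-token compute, not weight storage. The sketch counts weights only (1 GB = 10^9 bytes) and ignores KV cache, activations, and quantization overhead, so real requirements are higher.

```python
# Approximate storage per parameter for common precisions.
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}

def weight_memory_gb(params_billion: float, dtype: str) -> float:
    """Approximate weight storage in GB (10**9 bytes); excludes KV cache and activations."""
    return params_billion * BYTES_PER_PARAM[dtype]

for label, params in (("GLM-4.5-Air total", 106), ("GLM-4.5-Air active", 12)):
    sizes = ", ".join(f"{d}: {weight_memory_gb(params, d):.0f} GB" for d in BYTES_PER_PARAM)
    print(f"{label} ({params}B) -> {sizes}")
```

Even at 4-bit precision, the full weight set comes to roughly 53 GB. Fitting the lower end of the 32–64GB range therefore implies more aggressive quantization, partial CPU offload, or both.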
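At the quoted entry-level rates, estimating the cost of a workload is simple arithmetic. The sketch hard-codes the "as low as" figures above, which may not apply to every model or tier. The token counts in the example are hypothetical.

```python
INPUT_USD_PER_M = 0.11   # quoted lowest input rate, USD per million tokens
OUTPUT_USD_PER_M = 0.28  # quoted lowest output rate, USD per million tokens

def api_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for a given number of prompt and generated tokens."""
    return (input_tokens / 1_000_000) * INPUT_USD_PER_M + (output_tokens / 1_000_000) * OUTPUT_USD_PER_M

# Hypothetical workload: 2M prompt tokens and 0.5M generated tokens.
print(f"${api_cost_usd(2_000_000, 500_000):.2f}")  # $0.36
```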

Open-Source Access & Ecosystem

A keystone of the GLM-4.5 series is its MIT open-source license. The base models, the hybrid (thinking/non-thinking) models, and FP8 versions are all released for unrestricted commercial use and secondary development. Code, tool parsers, and reasoning engines are integrated into major LLM frameworks, including transformers, vLLM, and SGLang, with detailed repositories available on GitHub and Hugging Face.

The models can be used through major inference engines, and fine-tuning and on-premise deployment are fully supported. This level of openness and flexibility contrasts sharply with the increasingly closed stance of Western competitors.

Key Technical Innovations

- A Multi-Token Prediction (MTP) layer for speculative decoding, dramatically boosting inference speed on CPUs and GPUs.

- A unified architecture for reasoning, coding, and multimodal perception-action workflows.

- Trained on 15 trillion tokens, with support for context windows of up to 128k input tokens and 96k output tokens.

- Immediate compatibility with research and production tooling, including instructions for tuning and adapting the models to new use cases.
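Applications built on these limits can clamp each output request before sending it; the sketch below shows one way. It treats 128k as a window shared by prompt and completion, with 96k as a separate cap on output, and it reads "k" as 1,000 tokens. Both readings are assumptions to check against the model card, since some specs use 131,072 for "128k".

```python
CONTEXT_WINDOW = 128_000     # assumed total tokens for prompt + completion
MAX_OUTPUT_TOKENS = 96_000   # assumed cap on generated tokens

def max_output_tokens(prompt_tokens: int, requested: int) -> int:
    """Largest completion length that respects both assumed limits."""
    if prompt_tokens >= CONTEXT_WINDOW:
        raise ValueError(f"prompt of {prompt_tokens} tokens leaves no room for output")
    return min(requested, MAX_OUTPUT_TOKENS, CONTEXT_WINDOW - prompt_tokens)

print(max_output_tokens(100_000, 50_000))  # 28000
print(max_output_tokens(1_000, 200_000))   # 96000
```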

In summary, GLM-4.5 and GLM-4.5-Air represent a major leap for open-source, agentic, and reasoning-focused foundation models. They set new standards for accessibility, performance, and unified reasoning and agentic capabilities. Together they provide a strong backbone for the next generation of intelligent agents and developer applications.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost. It stands out for in-depth coverage of machine learning and deep learning news that is both technically sound and easily understood by a wide audience. The platform draws over 2 million monthly views, illustrating its popularity among audiences.