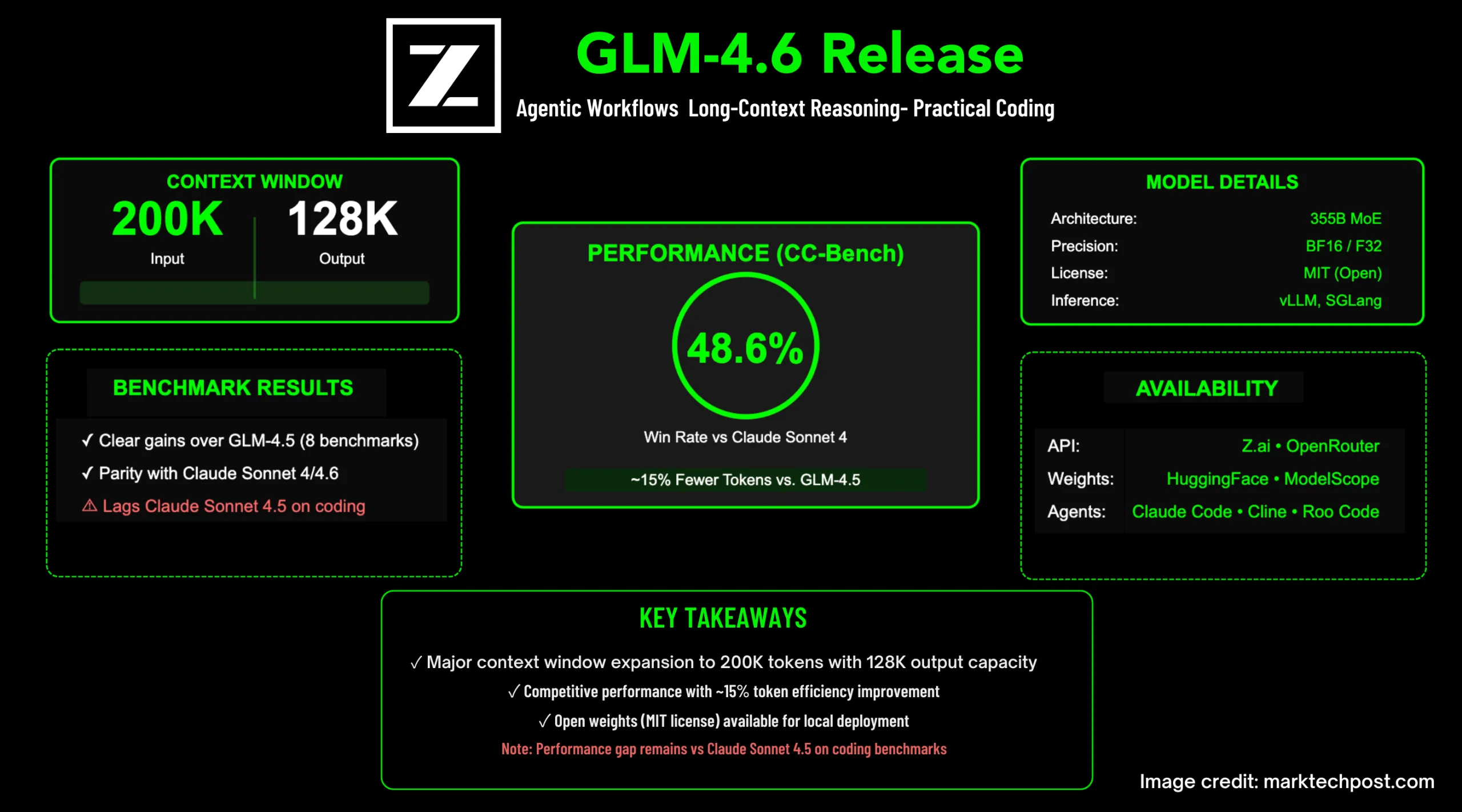

Zhipu AI has launched GLM-4.6, a major update to its GLM series focused on agentic workflows, long-context reasoning, and practical coding tasks. The model raises the input window to 200K tokens with a 128K maximum output, targets lower token consumption in applied tasks, and ships with open weights for local deployment.

So, what exactly is new?

- Context + output limits: 200K input context and 128K maximum output tokens.

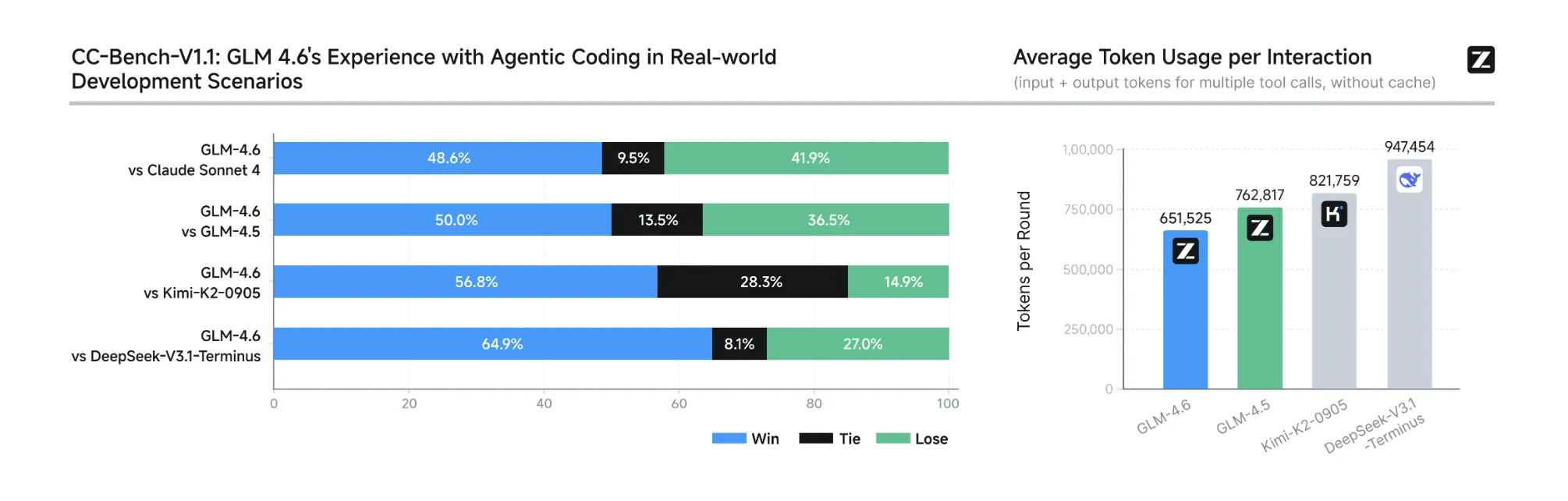

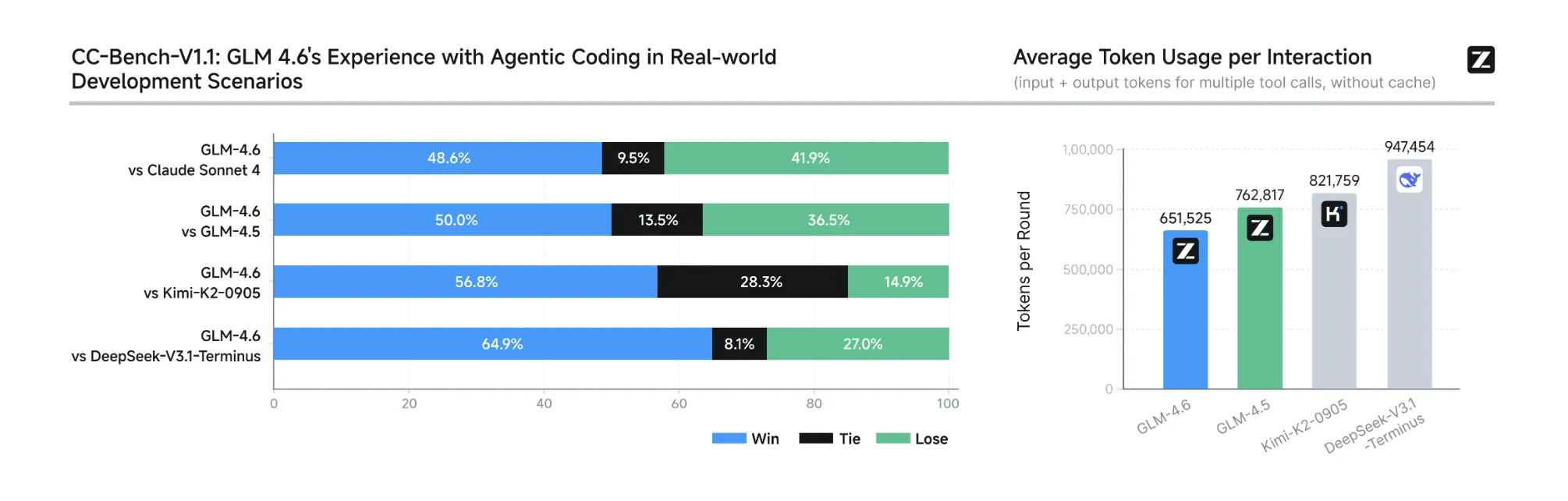

- Real-world coding results: On the extended CC-Bench (multi-turn tasks run by human evaluators in isolated Docker environments), GLM-4.6 is reported at near parity with Claude Sonnet 4 (48.6% win rate) and uses ~15% fewer tokens than GLM-4.5 to finish tasks. Task prompts and agent trajectories are published for inspection.

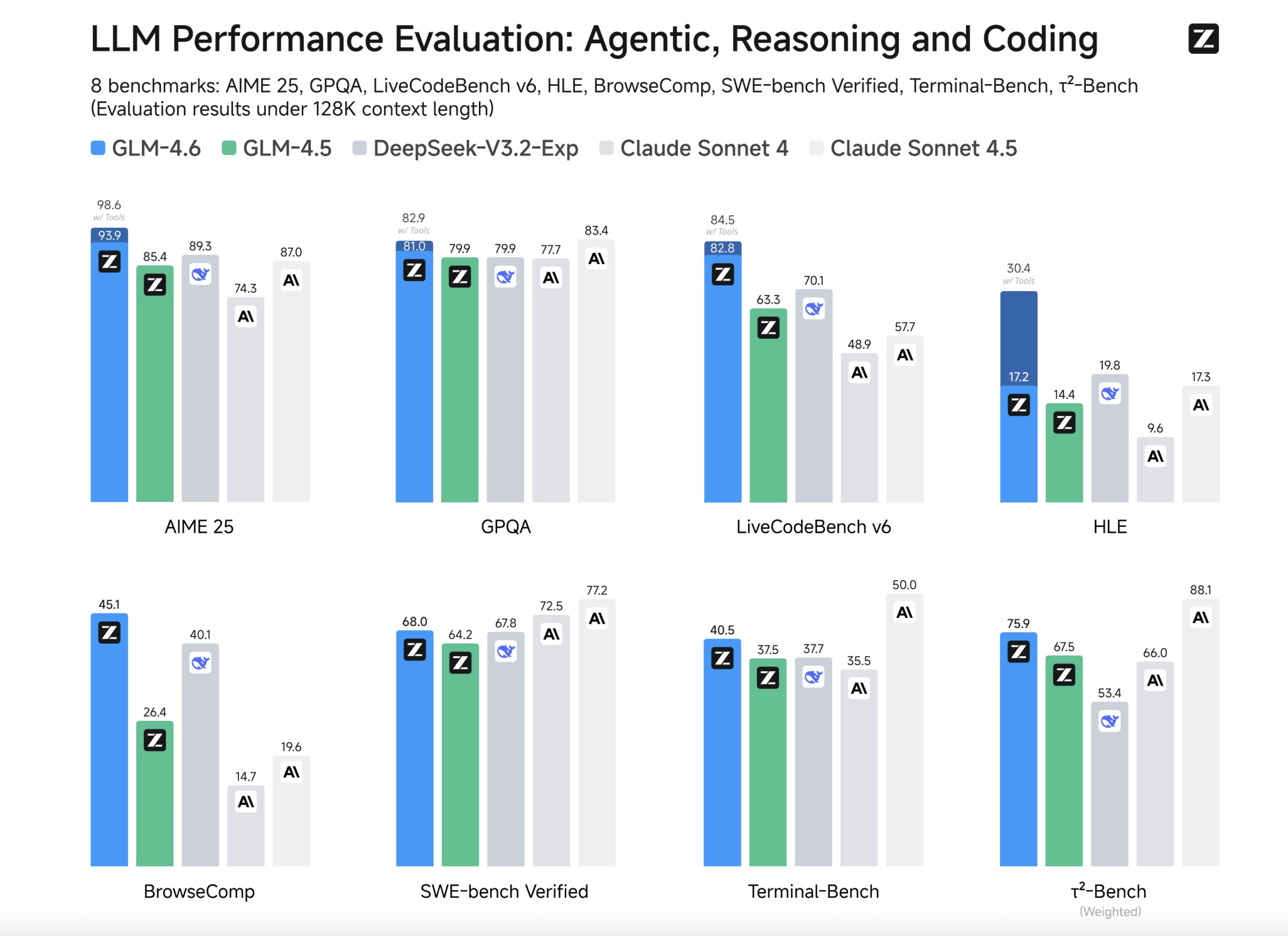

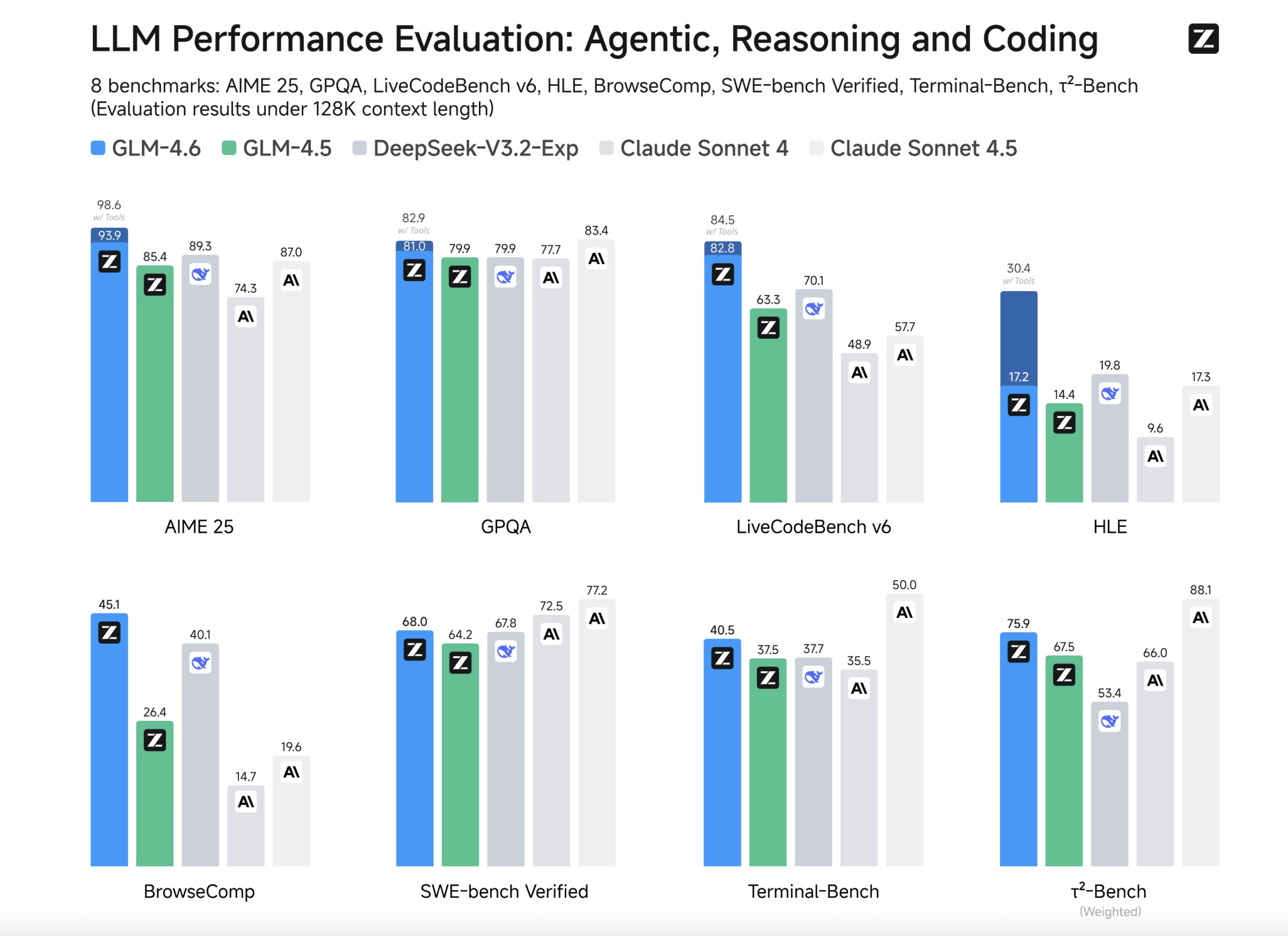

- Benchmark positioning: Zhipu summarizes “clear gains” over GLM-4.5 across eight public benchmarks and states parity with Claude Sonnet 4/4.6 on several; it also notes that GLM-4.6 still lags Sonnet 4.5 on coding, a useful caveat for model selection.

- Ecosystem availability: GLM-4.6 is available through the Z.ai API and OpenRouter; it integrates with popular coding agents (Claude Code, Cline, Roo Code, Kilo Code), and existing Coding Plan users can upgrade by switching the model name to glm-4.6.

- Open weights + license: The Hugging Face model card lists License: MIT and Model size: 355B params (MoE) with BF16/F32 tensors. (MoE “total parameters” are not equal to the parameters active per token; the card states no active-parameter figure for 4.6.)

- Local inference: vLLM and SGLang are supported for local serving; weights are on Hugging Face and ModelScope.
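The context and output limits listed above can be checked before a request is ever sent. Below is a minimal sketch, assuming "200K" and "128K" mean 200,000 and 128,000 tokens (the provider's exact values may differ slightly), that prompt tokens have already been counted with GLM-4.6's tokenizer, and, conservatively, that prompt and completion share the context window. The constants and the `check_request_budget` name are hypothetical, not part of any Zhipu or Z.ai API.

```python
# Hypothetical pre-flight check for GLM-4.6 request sizes.
# Assumption: "200K" / "128K" are read as 200_000 / 128_000 tokens;
# the provider's exact limits may differ slightly.
CONTEXT_WINDOW = 200_000
MAX_OUTPUT = 128_000


def check_request_budget(prompt_tokens: int, max_output_tokens: int) -> list[str]:
    """Return a list of problems; an empty list means the request fits.

    Conservatively assumes prompt and completion share the context window.
    """
    problems = []
    if prompt_tokens > CONTEXT_WINDOW:
        problems.append(f"prompt has {prompt_tokens} tokens, limit is {CONTEXT_WINDOW}")
    if max_output_tokens > MAX_OUTPUT:
        problems.append(f"max output {max_output_tokens} exceeds {MAX_OUTPUT}")
    if prompt_tokens + max_output_tokens > CONTEXT_WINDOW:
        problems.append("prompt + output would overflow the shared context window")
    return problems


print(check_request_budget(150_000, 32_000))  # → []
print(check_request_budget(150_000, 64_000))  # → ['prompt + output would overflow the shared context window']
```

Returning a list instead of raising on the first failure lets a caller report every violated limit at once.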

Summary

GLM-4.6 is an incremental but material step: a 200K context window, a ~15% token reduction on CC-Bench versus GLM-4.5, a near-parity task win rate with Claude Sonnet 4, and immediate availability through Z.ai, OpenRouter, and open-weight artifacts for local serving.

FAQs

1) What are the context and output token limits?

GLM-4.6 supports a 200K input context and 128K maximum output tokens.

2) Are open weights available, and under what license?

Yes. The Hugging Face model card lists open weights under License: MIT, with a 357B-parameter MoE configuration (BF16/F32 tensors).
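The difference between total and active parameters in an MoE model is easiest to see with a little arithmetic. The sketch below uses a generic top-k routing layout with made-up numbers. It does not describe GLM-4.6's actual architecture, whose active-parameter count is not stated on the model card. `MoEConfig`, `total_params`, and `active_params` are hypothetical names used for illustration only.

```python
from dataclasses import dataclass


@dataclass
class MoEConfig:
    """Toy mixture-of-experts layout (all numbers are hypothetical)."""
    shared_params: int      # attention, embeddings, etc., used by every token
    num_experts: int        # routed experts per MoE layer
    params_per_expert: int  # one expert's parameters, summed over all MoE layers
    top_k: int              # experts the router activates for each token


def total_params(cfg: MoEConfig) -> int:
    # Every expert is stored in the checkpoint, so all count toward the total.
    return cfg.shared_params + cfg.num_experts * cfg.params_per_expert


def active_params(cfg: MoEConfig) -> int:
    # Only the top_k routed experts run for a given token.
    return cfg.shared_params + cfg.top_k * cfg.params_per_expert


toy = MoEConfig(shared_params=10_000_000_000, num_experts=64,
                params_per_expert=5_000_000_000, top_k=4)
print(total_params(toy))   # → 330000000000
print(active_params(toy))  # → 30000000000
```

In this toy layout roughly a tenth of the stored weights do work for any single token, which is why a headline "total parameters" figure says little about per-token compute.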

3) How does GLM-4.6 compare to GLM-4.5 and Claude Sonnet 4 on applied tasks?

On the extended CC-Bench, GLM-4.6 uses ~15% fewer tokens than GLM-4.5 and reaches near parity with Claude Sonnet 4 (48.6% win rate).
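As a quick sanity check on what "~15% fewer tokens" means, the figure can be read as a relative reduction against the GLM-4.5 baseline. The per-task token counts below are invented for illustration and are not CC-Bench data. How Zhipu aggregated its numbers (summed across tasks, or averaged per task) is also an assumption here, and the `relative_reduction` helper is hypothetical.

```python
def relative_reduction(baseline_tokens: list[int], new_tokens: list[int]) -> float:
    """Fractional token savings of `new` vs. `baseline`, summed over paired tasks.

    Assumes both lists cover the same tasks in the same order.
    """
    if len(baseline_tokens) != len(new_tokens):
        raise ValueError("token lists must cover the same tasks")
    base, new = sum(baseline_tokens), sum(new_tokens)
    return (base - new) / base


# Hypothetical per-task token counts (not real benchmark numbers).
glm45 = [120_000, 80_000, 200_000]
glm46 = [102_000, 68_000, 170_000]
print(f"{relative_reduction(glm45, glm46):.1%}")  # → 15.0%
```

Summing before dividing weights long tasks more heavily than short ones. Averaging per-task ratios would weight every task equally and can give a different percentage on real data.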

4) Can I run GLM-4.6 locally?

Yes. Zhipu provides weights on Hugging Face and ModelScope and documents local inference with vLLM and SGLang; community quantizations are appearing for workstation-class hardware.
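Both vLLM and SGLang can expose an OpenAI-compatible HTTP endpoint, so a locally served GLM-4.6 can be queried using only the Python standard library. The sketch below only builds the request. It assumes a server is already running at `http://localhost:8000/v1` (vLLM's default port; SGLang defaults to 30000) and that the server exposes the model under the Hugging Face repo id `zai-org/GLM-4.6`. Check both the repo id and the launch details against the model card. `build_chat_request` is a hypothetical helper, not part of either project.

```python
import json
import urllib.request

# Assumptions: a local OpenAI-compatible server (vLLM default port 8000)
# serving the model under the name "zai-org/GLM-4.6".
BASE_URL = "http://localhost:8000/v1"
MODEL = "zai-org/GLM-4.6"


def build_chat_request(prompt: str, max_tokens: int = 1024,
                       base_url: str = BASE_URL,
                       model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) a POST to the /chat/completions endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_chat_request("Write a Python function that reverses a string.")
# With a server running, send the request and print the first choice:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

Keeping construction separate from sending makes the payload easy to inspect or log. Pointing `base_url` and `model` at a hosted OpenAI-compatible endpoint (and adding an authorization header) should reuse the same shape, though this sketch does not assume any specific hosted URL.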

Check out the GitHub Page, Hugging Face Model Card and Technical details.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.