Zhipu AI has formally launched and open-sourced GLM-4.5V, a next-generation vision-language mannequin (VLM) that considerably advances the state of open multimodal AI. Primarily based on Zhipu’s 106-billion parameter GLM-4.5-Air structure—with 12 billion energetic parameters by way of a Combination-of-Consultants (MoE) design—GLM-4.5V delivers robust real-world efficiency and unmatched versatility throughout visible and textual content material.

Key Options and Design Improvements

1. Complete Visible Reasoning

- Picture Reasoning: GLM-4.5V achieves superior scene understanding, multi-image evaluation, and spatial recognition. It might probably interpret detailed relationships in complicated scenes (reminiscent of distinguishing product defects, analyzing geographical clues, or inferring context from a number of pictures concurrently).

- Video Understanding: It processes lengthy movies, performing automated segmentation and recognizing nuanced occasions because of a 3D convolutional imaginative and prescient encoder. This permits functions like storyboarding, sports activities analytics, surveillance assessment, and lecture summarization.

- Spatial Reasoning: Built-in 3D Rotational Positional Encoding (3D-RoPE) offers the mannequin a strong notion of three-dimensional spatial relationships, essential for decoding visible scenes and grounding visible parts.

2. Superior GUI and Agent Duties

- Display screen Studying & Icon Recognition: The mannequin excels at studying desktop/app interfaces, localizing buttons and icons, and helping with automation—important for RPA (robotic course of automation) and accessibility instruments.

- Desktop Operation Help: Via detailed visible understanding, GLM-4.5V can plan and describe GUI operations, helping customers in navigating software program or performing complicated workflows.

3. Complicated Chart and Doc Parsing

- Chart Understanding: GLM-4.5V can analyze charts, infographics, and scientific diagrams inside PDFs or PowerPoint information, extracting summarized conclusions and structured information even from dense, lengthy paperwork.

- Lengthy Doc Interpretation: With help for as much as 64,000 tokens of multimodal context, it might parse and summarize prolonged, image-rich paperwork (reminiscent of analysis papers, contracts, or compliance reviews), making it excellent for enterprise intelligence and information extraction.

4. Grounding and Visible Localization

- Exact Grounding: The mannequin can precisely localize and describe visible parts—reminiscent of objects, bounding bins, or particular UI parts—utilizing world information and semantic context, not simply pixel-level cues. This permits detailed evaluation for high quality management, AR functions, and picture annotation workflows.

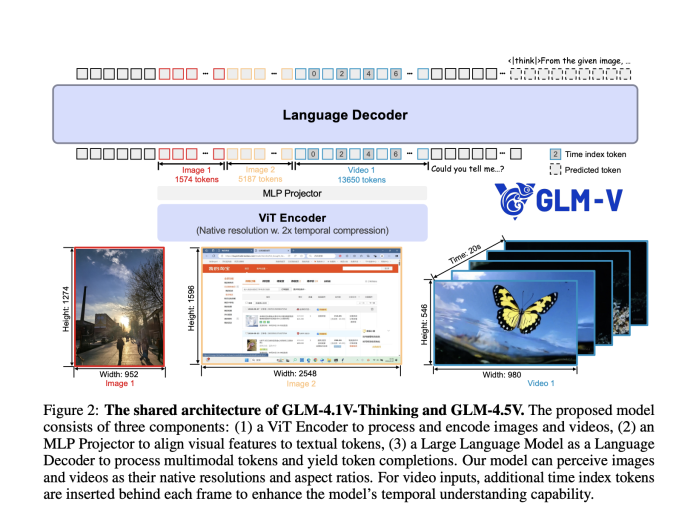

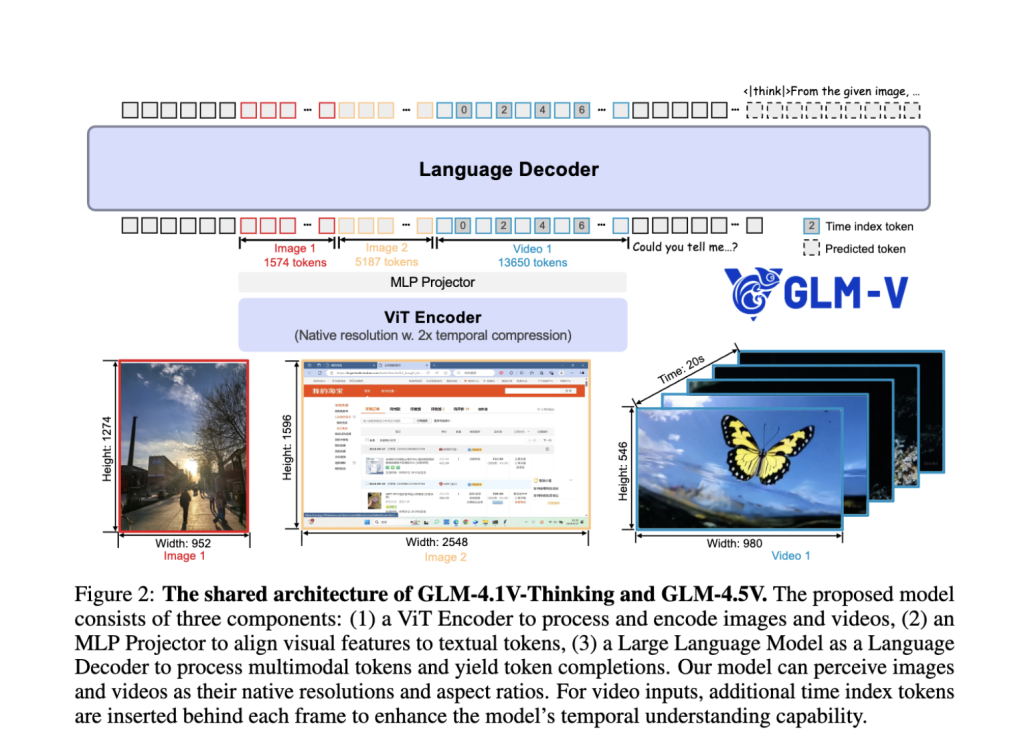

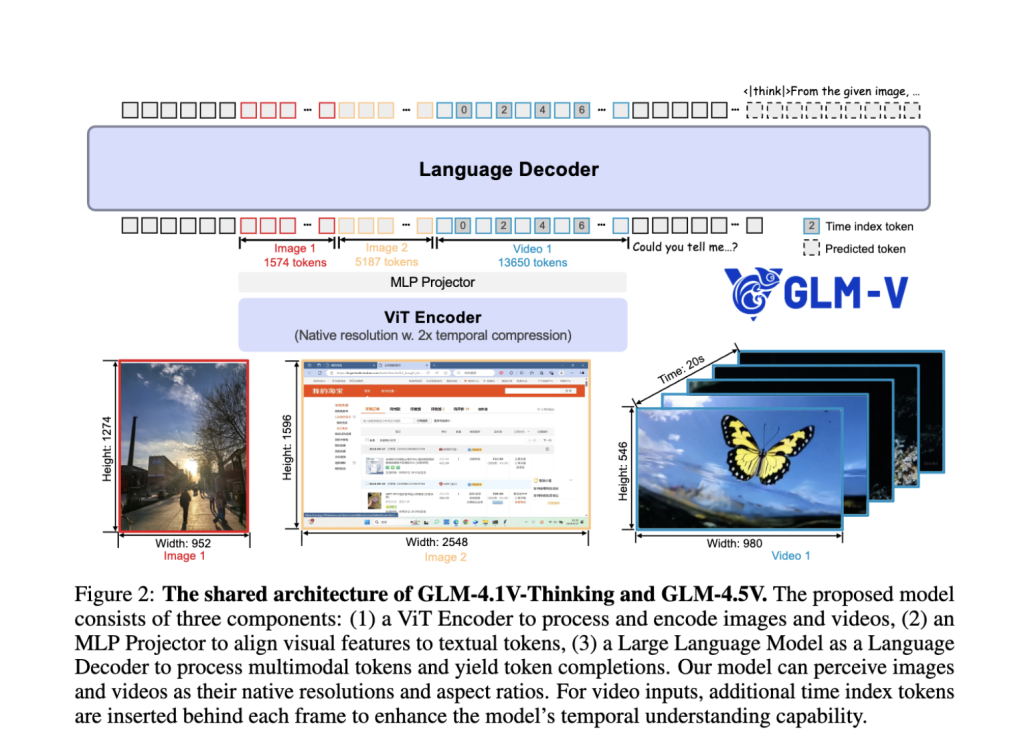

Architectural Highlights

- Hybrid Imaginative and prescient-Language Pipeline: The system integrates a robust visible encoder, MLP adapter, and a language decoder, permitting seamless fusion of visible and textual data. Static pictures, movies, GUIs, charts, and paperwork are all handled as first-class inputs.

- Combination-of-Consultants (MoE) Effectivity: Whereas housing 106B whole parameters, the MoE design prompts solely 12B per inference, guaranteeing excessive throughput and reasonably priced deployment with out sacrificing accuracy.

- 3D Convolution for Video & Pictures: Video inputs are processed utilizing temporal downsampling and 3D convolution, enabling the evaluation of high-resolution movies and native facet ratios, whereas sustaining effectivity.

- Adaptive Context Size: Helps as much as 64K tokens, permitting strong dealing with of multi-image prompts, concatenated paperwork, and prolonged dialogues in a single move.

- Progressive Pretraining and RL: The coaching regime combines large multimodal pretraining, supervised fine-tuning, and Reinforcement Studying with Curriculum Sampling (RLCS) for long-chain reasoning mastery and real-world process robustness.

“Considering Mode” for Tunable Reasoning Depth

A distinguished characteristic is the “Considering Mode” toggle:

- Considering Mode ON: Prioritizes deep, step-by-step reasoning, appropriate for complicated duties (e.g., logical deduction, multi-step chart or doc evaluation).

- Considering Mode OFF: Delivers sooner, direct solutions for routine lookups or easy Q&A. The consumer can management the mannequin’s reasoning depth at inference, balancing pace towards interpretability and rigor.

Benchmark Efficiency and Actual-World Affect

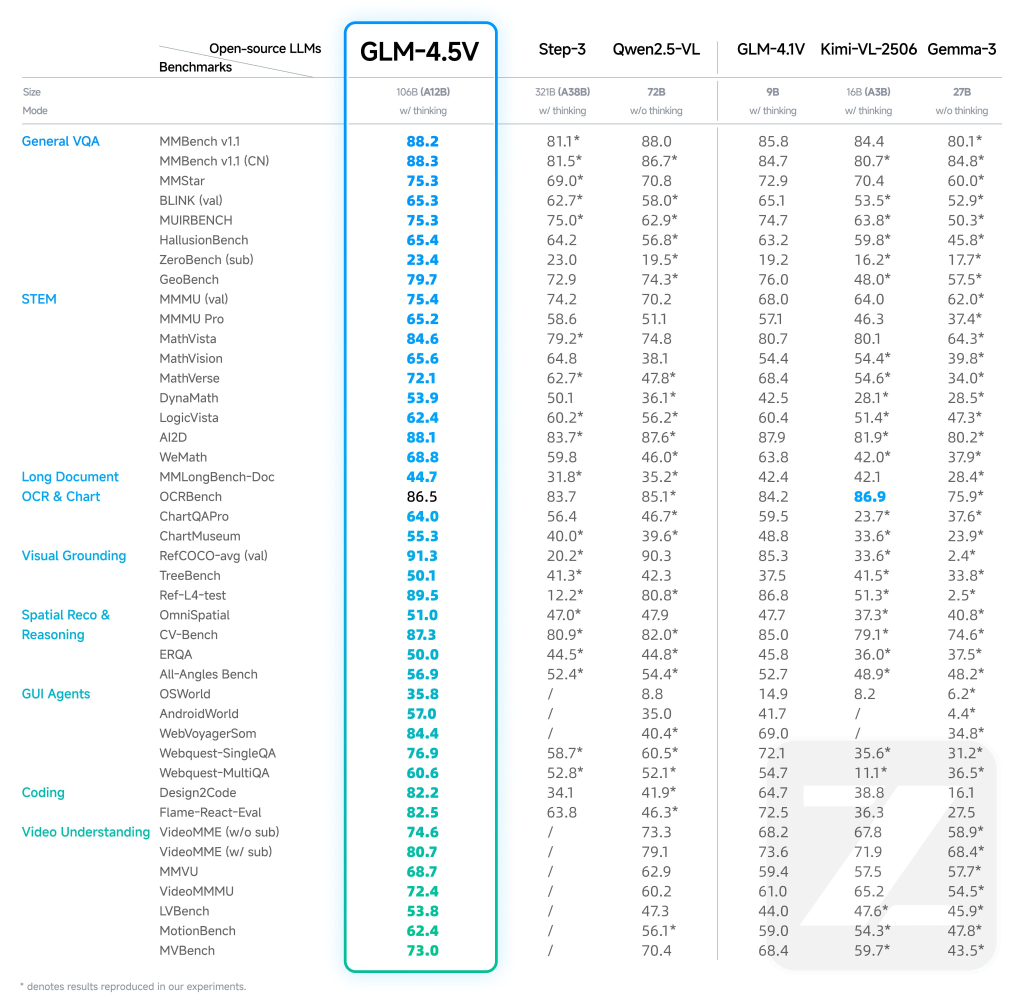

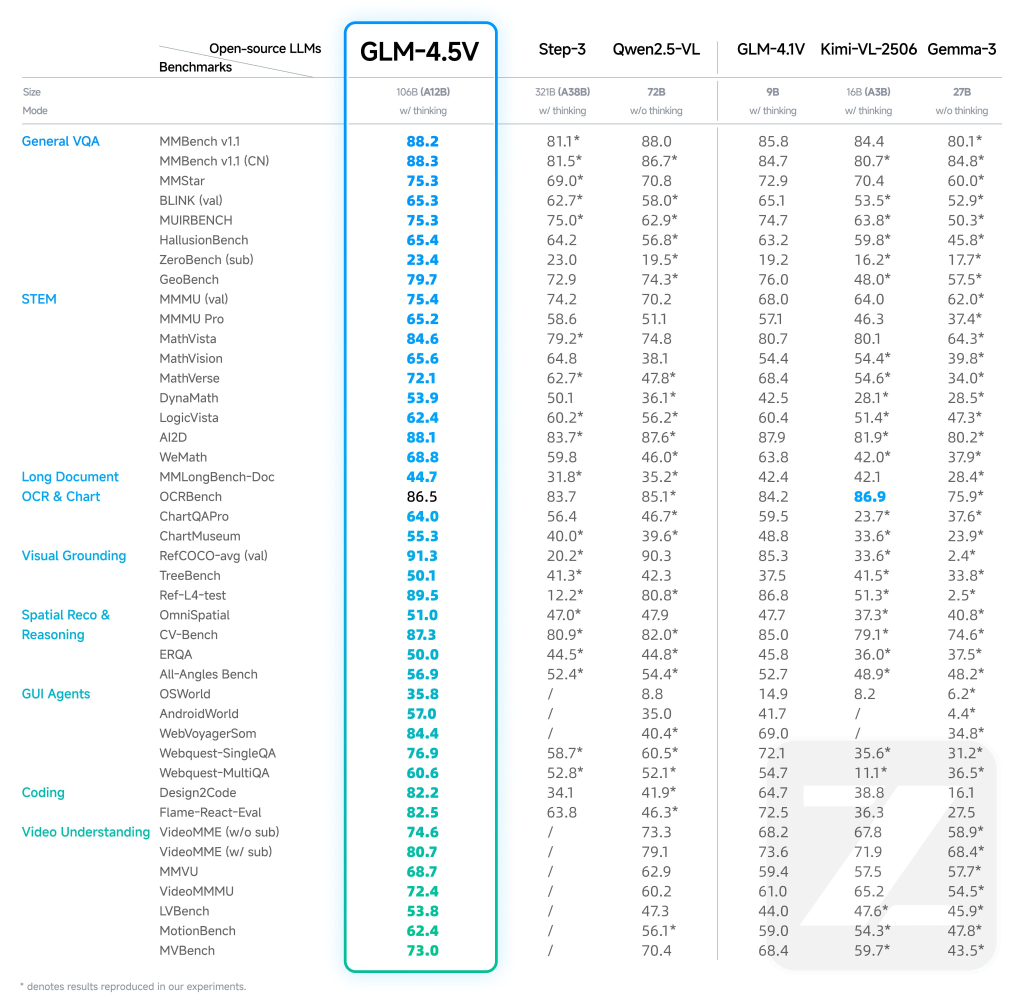

- State-of-the-Artwork Outcomes: GLM-4.5V achieves SOTA throughout 41–42 public multimodal benchmarks, together with MMBench, AI2D, MMStar, MathVista, and extra, outperforming each open and a few premium proprietary fashions in classes like STEM QA, chart understanding, GUI operation, and video comprehension.

- Sensible Deployments: Companies and researchers report transformative leads to defect detection, automated report evaluation, digital assistant creation, and accessibility know-how with GLM-4.5V.

- Democratizing Multimodal AI: Open-sourced underneath the MIT license, the mannequin equalizes entry to cutting-edge multimodal reasoning that was beforehand gated by unique proprietary APIs.

Instance Use Circumstances

| Function | Instance Use | Description |

|---|---|---|

| Picture Reasoning | Defect detection, content material moderation | Scene understanding, multiple-image summarization |

| Video Evaluation | Surveillance, content material creation | Lengthy video segmentation, occasion recognition |

| GUI Duties | Accessibility, automation, QA | Display screen/UI studying, icon location, operation suggestion |

| Chart Parsing | Finance, analysis reviews | Visible analytics, information extraction from complicated charts |

| Doc Parsing | Regulation, insurance coverage, science | Analyze & summarize lengthy illustrated paperwork |

| Grounding | AR, retail, robotics | Goal object localization, spatial referencing |

Abstract

GLM-4.5V by Zhipu AI is a flagship open-source vision-language mannequin setting new efficiency and usefulness requirements for multimodal reasoning. With its highly effective structure, context size, real-time “pondering mode”, and broad functionality spectrum, GLM-4.5V is redefining what’s doable for enterprises, researchers, and builders working on the intersection of imaginative and prescient and language.

Try the Paper, Mannequin on Hugging Face and GitHub Web page right here. Be at liberty to take a look at our GitHub Web page for Tutorials, Codes and Notebooks. Additionally, be at liberty to observe us on Twitter and don’t neglect to hitch our 100k+ ML SubReddit and Subscribe to our Publication.

Asif Razzaq is the CEO of Marktechpost Media Inc.. As a visionary entrepreneur and engineer, Asif is dedicated to harnessing the potential of Synthetic Intelligence for social good. His most up-to-date endeavor is the launch of an Synthetic Intelligence Media Platform, Marktechpost, which stands out for its in-depth protection of machine studying and deep studying information that’s each technically sound and simply comprehensible by a large viewers. The platform boasts of over 2 million month-to-month views, illustrating its reputation amongst audiences.