If you happen to’re drowning in paperwork (and let’s face it, who is not?), you’ve got in all probability realized that conventional OCR is like bringing a knife to a gunfight. Certain, it might probably learn textual content, however it has no clue that the quantity sitting subsequent to “Complete Due” might be extra essential than the one subsequent to “Web page 2 of 47.”

That is the place LayoutLM is available in – it’s the reply to the age-old query: “What if we taught AI to really perceive paperwork as a substitute of simply studying them like a confused first-grader?”

What makes LayoutLM totally different out of your legacy OCR

We have all been there. You feed a wonderfully good bill into an OCR system, and it spits again a textual content soup that may make alphabet soup jealous. The issue? Conventional OCR treats paperwork like they’re simply partitions of textual content, utterly ignoring that stunning spatial association that people use to make sense of data.

LayoutLM takes a basically totally different strategy. As an alternative of simply extracting textual content, it understands three crucial features of any doc:

- The precise textual content content material (what the phrases say)

- The spatial structure (the place issues are positioned)

- The visible options (how issues look)

Consider it this fashion: if conventional OCR is like studying a ebook along with your eyes closed, LayoutLM is like having a dialog with somebody who really understands doc design. It is aware of that in an bill, the massive daring quantity on the backside proper might be the entire, and people neat rows within the center? That is your line objects speaking.

In contrast to earlier text-only fashions like BERT, LayoutLM provides two essential items of data: 2D place (the place the textual content is) and visible cues (what the textual content seems to be like). Earlier than LayoutLM, AI would learn a doc as one lengthy string of phrases, utterly blind to the visible construction that offers the textual content its that means.

How LayoutLM really works

Think about how you learn an bill. You do not simply see a jumble of phrases; you see that the seller’s identify is in massive font on the prime, the road objects are in a neat desk, and the entire quantity is on the backside proper. The place is crucial. LayoutLM was the primary main mannequin designed to learn this fashion, efficiently combining textual content, structure, and picture info right into a singular mannequin.

Textual content embeddings

At its core, LayoutLM begins with BERT-based textual content embeddings. If BERT is new to you, consider it because the Shakespeare of language fashions – it understands context, nuance, and relationships between phrases. However whereas BERT stops at understanding language, LayoutLM is simply getting warmed up.

Spatial embeddings

This is the place issues get attention-grabbing. LayoutLM provides spatial embeddings that seize the 2D place of each single token on the web page. The LayoutLMv1 mannequin, particularly used the 4 nook coordinates of a phrase’s bounding field (x0,y0,x1,y1). The inclusion of width and peak as direct embeddings was an enhancement launched in LayoutLMv2.

Visible options

LayoutLMv2 and v3 took issues even additional by incorporating precise visible options. Utilizing both ResNet (v2) or patch embeddings just like Imaginative and prescient Transformers (v3), these fashions can actually “see” the doc. Daring textual content? Totally different fonts? Firm logos? Colour coding? LayoutLM notices all of it.

The LayoutLM trilogy and the AI universe it created

The unique LayoutLM sequence established the core expertise. By 2025, this has advanced into a brand new period of extra highly effective and common AI fashions.

The foundational trilogy (2020-2022):

- LayoutLMv1: The pioneer that first mixed textual content and structure info. It used a Masked Visible-Language Mannequin (MVLM) goal, the place it discovered to foretell masked phrases utilizing each the textual content and structure context.

- LayoutLMv2: The sequel that built-in visible options immediately into the pre-training course of. It additionally added new coaching targets like Textual content-Picture Alignment and Textual content-Picture Matching to create a tighter vision-language connection.

- LayoutLMv3: The ultimate act that streamlined the structure with a extra environment friendly design, reaching higher efficiency with much less complexity.

The brand new period (2023-2025):

After the LayoutLM trilogy, its rules grew to become normal, and the sphere exploded.

- Shift to common fashions: We noticed the emergence of fashions like Microsoft’s UDOP (Common Doc Processing), which unifies imaginative and prescient, textual content, and structure right into a single, highly effective transformer able to each understanding and producing paperwork.

- The rise of imaginative and prescient basis fashions: The sport modified once more with fashions like Microsoft’s Florence-2, a flexible imaginative and prescient mannequin that may deal with a large vary of duties—together with OCR, object detection, and sophisticated doc understanding—all via a unified, prompt-based interface.

- The affect of normal multimodal AI: Maybe the largest shift has been the arrival of large, general-purpose fashions like GPT-4, Claude, and Gemini. These fashions exhibit astounding “zero-shot” capabilities, the place you may present them a doc and easily ask a query to get a solution, typically with none specialised coaching.

The place can AI like LayoutLM be utilized?

The expertise underpinning LayoutLM is flexible and has been efficiently utilized to a variety of use instances, together with:

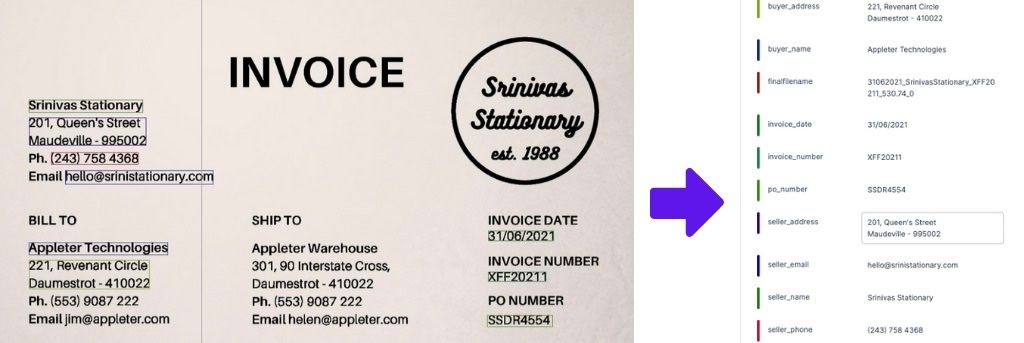

Bill processing

Keep in mind the final time you needed to manually enter bill knowledge? Yeah, we’re making an attempt to neglect too. With LayoutLM built-in into Nanonets’ bill processing resolution, we are able to mechanically extract:

- Vendor info (even when their brand is the scale of a postage stamp)

- Line objects (sure, even these pesky multi-line descriptions)

- Tax calculations (as a result of math is tough)

- Fee phrases (buried in that superb print you by no means learn)

One among our clients in procurement shared with us that they elevated their processing quantity from 50 invoices a day to 500. That is not a typo – that is the ability of understanding structure.

Receipt processing

Receipts are the bane of expense reporting. They’re crumpled, pale, and formatted by what looks as if a random quantity generator. However LayoutLM does not care. It could extract:

- Service provider particulars (even from that hole-in-the-wall restaurant)

- Particular person objects with costs (sure, even that difficult Starbucks order)

- Tax breakdowns (to your accounting workforce’s sanity)

- Fee strategies (company card vs. private)

Contract evaluation

Authorized paperwork are the place LayoutLM actually shines. It understands:

- Clause hierarchies (Part 2.3.1 is below Part 2.3, which is below Part 2)

- Signature blocks (who signed the place and when)

- Tables of phrases and circumstances

- Cross-references between sections

Kinds processing

Whether or not it is insurance coverage claims, mortgage functions, or authorities varieties, LayoutLM handles all of them. The mannequin understands:

- Checkbox states (checked, unchecked, or that bizarre half-check)

- Handwritten entries in type fields

- Multi-page varieties with continued sections

- Complicated desk constructions with merged cells

Why LayoutLM? The leap to multimodal understanding

How does a deep studying mannequin be taught to appropriately assign labels to textual content? Earlier than LayoutLM, a number of approaches existed, every with its personal limitations:

- Textual content-only fashions: Utilizing textual content embeddings from massive language fashions like BERT isn’t very efficient by itself, because it ignores the wealthy contextual clues offered by the doc’s structure.

- Picture-based fashions: Laptop imaginative and prescient fashions like Sooner R-CNN can use visible info to detect textual content blocks however do not totally make the most of the semantic content material of the textual content itself.

- Graph-based fashions: These fashions mix textual and locational info however typically neglect the visible cues current within the doc picture.

LayoutLM was one of many first fashions to efficiently mix all three dimensions of data—textual content, structure (location), and picture—right into a singular, highly effective framework. It achieved this by extending the confirmed structure of BERT to know not simply what the phrases are, however the place they’re on the web page and what they seem like.

LayoutLM tutorial: The way it works

This part breaks down the core parts of the unique LayoutLM mannequin.

1. OCR Textual content and Bounding Field extraction

Step one in any LayoutLM pipeline is to course of a doc picture with an OCR engine. This course of extracts two essential items of data: the textual content content material of the doc and the situation of every phrase, represented by a “bounding field.” A bounding field is a rectangle outlined by coordinates (e.g., top-left and bottom-right corners) that encapsulates a bit of textual content on the web page.

2. Language and placement embeddings

LayoutLM is constructed on the BERT structure, a strong Transformer mannequin. The important thing innovation of LayoutLM was including new sorts of enter embeddings to show this language mannequin the right way to perceive 2D house:

- Textual content Embeddings: Customary phrase embeddings that signify the semantic that means of every token (phrase or sub-word).

- 1D Place Embeddings: Customary positional embeddings utilized in BERT to know the sequence order of phrases.

- 2D Place Embeddings: That is the breakthrough function. For every phrase, its bounding field coordinates (x0,y0,x1,y1) are normalized to a 1000×1000 grid and handed via 4 separate embedding layers. These spatial embeddings are then added to the textual content and 1D place embeddings. This enables the mannequin’s self-attention mechanism to be taught that phrases which can be visually shut are sometimes semantically associated, enabling it to know constructions like varieties and tables with out specific guidelines.

3. Picture embeddings

To include visible and stylistic options like font sort, shade, or emphasis, LayoutLM additionally launched an non-compulsory picture embedding. This was generated by making use of a pre-trained object detection mannequin (Sooner R-CNN) to the picture areas corresponding to every phrase. Nevertheless, this function added important computational overhead and was discovered to have a restricted affect on some duties, so it was not at all times used.

4. Pre-training LayoutLM

To learn to fuse these totally different modalities, LayoutLM was pre-trained on the IIT-CDIP Take a look at Assortment 1.0, a large dataset of over 11 million scanned doc pictures from U.S. tobacco business lawsuits. This pre-training used two primary targets:

- Masked Visible-Language Mannequin (MVLM): Much like BERT’s Masked Language Mannequin, some textual content tokens are randomly masked. The mannequin should predict the unique token utilizing the encircling context. Crucially, the 2D place embedding of the masked phrase is saved, forcing the mannequin to be taught from each linguistic and spatial clues.

- Multi-label Doc Classification (MDC): An non-compulsory process the place the mannequin learns to classify paperwork into classes (e.g., “letter,” “memo”) utilizing the doc labels from the IIT-CDIP dataset. This was meant to assist the mannequin be taught document-level representations. Nevertheless, later work discovered this might typically damage efficiency on info extraction duties and it was typically omitted.

5. Positive-tuning for downstream duties

After pre-training, the LayoutLM mannequin could be fine-tuned for particular duties, the place it has set new state-of-the-art benchmarks:

- Type understanding (FUNSD dataset): This entails assigning labels (like query, reply, header) to textual content blocks.

- Receipt understanding (SROIE dataset): This focuses on extracting particular fields from scanned receipts. .

- Doc picture classification (RVL-CDIP dataset): This process entails classifying a whole doc picture into one among 16 classes.

Utilizing LayoutLM with Hugging Face

One of many main causes for LayoutLM’s reputation is its availability on the Hugging Face Hub, which makes it considerably simpler for builders to make use of. The transformers library offers pre-trained fashions, tokenizers, and configuration lessons for LayoutLM.

To fine-tune LayoutLM for a customized process, you sometimes must:

- Set up Libraries: Guarantee you’ve got torch and transformers put in.

- Put together Knowledge: Course of your paperwork with an OCR engine to get phrases and their normalized bounding packing containers (scaled to a 0-1000 vary).

- Tokenize and Align: Use the LayoutLMTokenizer to transform textual content into tokens. A key step is to make sure that every token is aligned with the right bounding field from the unique phrase.

- Positive-tune: Use the LayoutLMForTokenClassification class for duties like NER (labeling textual content) or LayoutLMForSequenceClassification for doc classification. The mannequin takes input_ids, attention_mask, token_type_ids, and the essential bbox tensor as enter.

It is essential to notice that the bottom Hugging Face implementation of the unique LayoutLM doesn’t embrace the visible function embeddings from the Sooner R-CNN mannequin; that functionality was extra deeply built-in in LayoutLMv2.

How LayoutLM is used: Positive-tuning for downstream duties

LayoutLM’s true energy is unlocked when it is fine-tuned for particular enterprise duties. As a result of it is accessible on Hugging Face, builders can get began comparatively simply. The principle duties embrace:

- Type understanding (textual content labeling): This entails linking a label, like “Bill Quantity,” to a selected piece of textual content in a doc. That is handled as a token classification process.

- Doc picture classification: This entails categorizing a whole doc (e.g., as an “bill” or “buy order”) primarily based on its mixed textual content, structure, and picture options.

For builders trying to see how this works in follow, listed here are some examples utilizing the Hugging Face transformers library.

Instance: LayoutLM for textual content labeling (type understanding)

To assign labels to totally different components of a doc, you employ the LayoutLMForTokenClassification class. The code beneath reveals the essential setup.

from transformers import LayoutLMTokenizer, LayoutLMForTokenClassification

import torch

tokenizer = LayoutLMTokenizer.from_pretrained("microsoft/layoutlm-base-uncased")

mannequin = LayoutLMForTokenClassification.from_pretrained("microsoft/layoutlm-base-uncased")

phrases = ["Hello", "world"]

# Bounding packing containers have to be normalized to a 0-1000 scale

normalized_word_boxes = [[637, 773, 693, 782], [698, 773, 733, 782]]

token_boxes = []

for phrase, field in zip(phrases, normalized_word_boxes):

word_tokens = tokenizer.tokenize(phrase)

token_boxes.lengthen([box] * len(word_tokens))

# Add bounding packing containers for particular tokens

token_boxes = [[0, 0, 0, 0]] + token_boxes + [[1000, 1000, 1000, 1000]]

encoding = tokenizer(" ".be part of(phrases), return_tensors="pt")

input_ids = encoding["input_ids"]

attention_mask = encoding["attention_mask"]

bbox = torch.tensor([token_boxes])

# Instance labels (e.g., 1 for a subject, 0 for not a subject)

token_labels = torch.tensor([1, 1, 0, 0]).unsqueeze(0)

outputs = mannequin(

input_ids=input_ids,

bbox=bbox,

attention_mask=attention_mask,

labels=token_labels,

)

loss = outputs.loss

logits = outputs.logits

Instance: LayoutLM for doc classification

To categorise a whole doc, you employ the LayoutLMForSequenceClassification class, which makes use of the ultimate illustration of the [CLS] token for its prediction.

from transformers import LayoutLMTokenizer, LayoutLMForSequenceClassification

import torch

tokenizer = LayoutLMTokenizer.from_pretrained("microsoft/layoutlm-base-uncased")

mannequin = LayoutLMForSequenceClassification.from_pretrained("microsoft/layoutlm-base-uncased")

phrases = ["Hello", "world"]

normalized_word_boxes = [[637, 773, 693, 782], [698, 773, 733, 782]]

token_boxes = []

for phrase, field in zip(phrases, normalized_word_boxes):

word_tokens = tokenizer.tokenize(phrase)

token_boxes.lengthen([box] * len(word_tokens))

token_boxes = [[0, 0, 0, 0]] + token_boxes + [[1000, 1000, 1000, 1000]]

encoding = tokenizer(" ".be part of(phrases), return_tensors="pt")

input_ids = encoding["input_ids"]

attention_mask = encoding["attention_mask"]

bbox = torch.tensor([token_boxes])

# Instance doc label (e.g., 1 for "bill")

sequence_label = torch.tensor([1])

outputs = mannequin(

input_ids=input_ids,

bbox=bbox,

attention_mask=attention_mask,

labels=sequence_label,

)

loss = outputs.loss

logits = outputs.logits

Why a strong mannequin (and code) is simply the start line

As you may see from the code, even a easy instance requires important setup: OCR, bounding field normalization, tokenization, and managing tensor shapes. That is simply the tip of the iceberg. If you attempt to construct a real-world enterprise resolution, you run into even greater challenges that the mannequin itself cannot remedy.

Listed below are the lacking items:

- Automated import: The code does not fetch paperwork for you. You want a system that may mechanically pull invoices from an e mail inbox, seize buy orders from a shared Google Drive, or connect with a SharePoint folder.

- Doc classification: Your inbox does not simply comprise invoices. An actual workflow must mechanically kind invoices from buy orders and contracts earlier than you may even run the appropriate mannequin.

- Knowledge validation and approvals: A mannequin will not know your organization’s enterprise guidelines. You want a workflow that may mechanically flag duplicate invoices, test if a PO quantity matches your database, or route any bill over $5,000 to a supervisor for guide approval.

- Seamless export & integration: The extracted knowledge is barely helpful if it will get into your different methods. An entire resolution requires pre-built integrations to push clear, structured knowledge into your ERP (like SAP), accounting software program (like QuickBooks or Salesforce), or inside databases.

- A usable interface: Your finance and operations groups cannot work with Python scripts. They want a easy, intuitive interface to view extracted knowledge, make fast corrections, and approve paperwork with a single click on.

Nanonets: The entire resolution constructed for enterprise

Nanonets offers the complete end-to-end workflow platform, utilizing the best-in-class AI fashions below the hood so that you get the enterprise final result with out the technical complexity. We constructed Nanonets as a result of we noticed this actual hole between highly effective AI and a sensible, usable enterprise resolution.

Right here’s how we remedy the entire downside:

- We’re mannequin agnostic: We summary away the complexity of the AI panorama. You need not fear about selecting between LayoutLMv3, Florence-2, or one other mannequin; our platform mechanically makes use of the very best software for the job to ship the very best accuracy to your particular paperwork.

- Zero-fuss workflow automation: Our no-code platform allows you to construct the precise workflow you want in minutes. For our shopper Hometown Holdings, this meant a totally automated course of from ingesting utility payments through e mail to exporting knowledge into Hire Supervisor, saving them 4,160 worker hours yearly.

- On the spot studying & reliability: Our platform learns from each consumer correction. When a brand new doc format arrives, you simply appropriate it as soon as, and the mannequin learns immediately. This was essential for our shopper Suzano, who needed to course of buy orders from over 70 clients in a whole bunch of various templates. Nanonets diminished their processing time from 8 minutes to simply 48 seconds per doc.

- Area-specific efficiency: Current analysis reveals that pre-training fashions on domain-relevant paperwork considerably improves efficiency and reduces errors. That is precisely what the Nanonets platform facilitates via steady, real-time studying in your particular paperwork, making certain the mannequin is at all times optimized to your distinctive wants.

Getting began with LayoutLM (with out the PhD in machine studying)

If you happen to’re able to implement LayoutLM however do not wish to construct all the pieces from scratch, Nanonets affords a whole doc AI platform that makes use of LayoutLM and different state-of-the-art fashions below the hood. You get:

- Pre-trained fashions for widespread doc varieties

- No-code interface for coaching customized fashions

- API entry for builders

- Human-in-the-loop validation when wanted

- Integrations along with your current instruments

The very best half? You can begin with a free trial and course of your first paperwork in minutes, not months.

Continuously Requested Questions

1. What’s the distinction between LayoutLM and conventional OCR?

Conventional OCR (Optical Character Recognition) converts a doc picture into plain textual content. It extracts what the textual content says however has no understanding of the doc’s construction. LayoutLM goes a step additional by combining that textual content with its visible structure info (the place the textual content is on the web page). This enables it to know context, like figuring out {that a} quantity is a “Complete Quantity” due to its place, which conventional OCR can not do.

2. How do I take advantage of LayoutLM with Hugging Face Transformers?

Implementing LayoutLM with Hugging Face requires loading the mannequin (e.g., LayoutLMForTokenClassification) and its tokenizer. The important thing step is offering bbox (bounding field) coordinates for every token together with the input_ids. You could first use an OCR software like Tesseract to get the textual content and coordinates, then normalize these coordinates to a 0-1000 scale earlier than passing them to the mannequin.

3. What accuracy can I anticipate from LayoutLM and newer fashions?

Accuracy relies upon closely on the doc sort and high quality. For well-structured paperwork like receipts, LayoutLM can obtain F1-scores as much as 95%. On extra complicated varieties, it scores round 79%. Newer fashions can supply larger accuracy, however efficiency nonetheless varies. Probably the most essential components are the standard of the scanned doc and the way intently your paperwork match the mannequin’s coaching knowledge. Current research present that fine-tuning on domain-specific paperwork is a key consider reaching the very best accuracy.

4. How does this expertise enhance bill processing?

LayoutLM and comparable fashions automate bill processing by intelligently extracting key info like vendor particulars, bill numbers, line objects, and totals. In contrast to older, template-based methods, these fashions adapt to totally different layouts mechanically by leveraging each textual content and positioning. This functionality dramatically reduces guide knowledge entry time (we’ve introduced down time required from 20 minutes per doc to below 10 seconds), improves accuracy, and allows straight-through processing for the next proportion of invoices.

5. How do you fine-tune LayoutLM for customized doc varieties?

Positive-tuning LayoutLM requires a labeled dataset of your customized paperwork, together with each the textual content and exact bounding field coordinates for every token. This knowledge is used to coach the pre-trained mannequin to acknowledge the particular patterns in your paperwork. It is a highly effective however resource-intensive course of, requiring knowledge assortment, annotation, and important computational energy for coaching.

6. How do I implement LayoutLM with Hugging Face Transformers for doc processing?

Implementing LayoutLM with Hugging Face Transformers requires putting in the transformers, datasets, and PyTorch packages, then loading each the LayoutLMTokenizerFast and LayoutLMForTokenClassification fashions from the “microsoft/layoutlm-base-uncased” checkpoint.

The important thing distinction from normal NLP fashions is that LayoutLM requires bounding field coordinates for every token alongside the textual content, which have to be normalized to a 0-1000 scale utilizing the doc’s width and peak.

After preprocessing your doc knowledge to incorporate input_ids, attention_mask, token_type_ids, and bbox coordinates, you may run inference by passing these inputs to the mannequin, which is able to return logits that may be transformed to predicted labels for duties like named entity recognition, doc classification, or info extraction.

7. What’s the distinction between LayoutLM and conventional OCR fashions?

LayoutLM basically differs from conventional OCR fashions by combining visible structure understanding with language comprehension, whereas conventional OCR focuses solely on character recognition and textual content extraction.

Conventional OCR converts pictures to plain textual content with out understanding doc construction, context, or spatial relationships between parts, making it appropriate for primary textual content digitization however restricted for complicated doc understanding duties. LayoutLM integrates each textual content material and spatial positioning info (bounding packing containers) to know not simply what textual content says, however the place it seems on the web page and the way totally different parts relate to one another structurally.

This twin understanding allows LayoutLM to carry out subtle duties like type subject extraction, desk evaluation, and doc classification that think about each semantic that means and visible structure, making it considerably extra highly effective for clever doc processing in comparison with conventional OCR’s easy textual content conversion capabilities.

8. What accuracy charges can I anticipate from LayoutLM on totally different doc varieties?

LayoutLM achieves various accuracy charges relying on doc construction and complexity, with efficiency sometimes starting from 85-95% for well-structured paperwork like varieties and invoices, and 70-85% for extra complicated or unstructured paperwork.

The mannequin performs exceptionally properly on the FUNSD dataset (type understanding), reaching round 79% F1 rating, and on the SROIE dataset (receipt understanding), reaching roughly 95% accuracy for key info extraction duties. Doc classification duties usually see larger accuracy charges (90-95%) in comparison with token-level duties like named entity recognition (80-90%), whereas efficiency can drop considerably for poor high quality scanned paperwork, handwritten textual content, or paperwork with uncommon layouts that differ considerably from coaching knowledge.

Elements affecting accuracy embrace doc picture high quality, consistency of structure construction, textual content readability, and the way intently the goal paperwork match the mannequin’s coaching distribution.

9. How can LayoutLM enhance bill processing automation for companies?

LayoutLM transforms bill processing automation by intelligently extracting key info like vendor particulars, bill numbers, line objects, totals, and dates whereas understanding the spatial relationships between these parts, enabling correct knowledge seize even when bill layouts fluctuate considerably between distributors.

In contrast to conventional template-based methods that require guide configuration for every bill format, LayoutLM adapts to totally different layouts mechanically by leveraging each textual content material and visible positioning to establish related fields no matter their actual location on the web page. This functionality dramatically reduces guide knowledge entry time from hours to minutes, improves accuracy by eliminating human transcription errors, and allows straight-through processing for the next proportion of invoices with out human intervention.

The expertise additionally helps complicated situations like multi-line merchandise extraction, tax calculation verification, and buy order matching, whereas offering confidence scores that permit companies to implement automated approval workflows for high-confidence extractions and route solely unsure instances for guide overview.

5. What are the steps to fine-tune LayoutLM for customized doc varieties?

Positive-tuning LayoutLM for customized doc varieties begins with amassing and annotating a consultant dataset of your particular paperwork, together with each the textual content content material and exact bounding field coordinates for every token, together with labels for the goal process, resembling entity varieties for NER or classes for classification.

Put together the info by normalizing bounding packing containers to the 0-1000 scale, tokenizing textual content utilizing the LayoutLM tokenizer, and making certain correct alignment between tokens and their corresponding spatial coordinates and labels. Configure the coaching course of by loading a pre-trained LayoutLM mannequin, setting applicable hyperparameters (studying charge round 5e-5, batch dimension 8-16 relying on GPU reminiscence), and implementing knowledge loaders that deal with the multi-modal enter format combining textual content, structure, and label info.

Execute coaching utilizing normal PyTorch or Hugging Face coaching loops whereas monitoring validation metrics to stop overfitting, then consider the fine-tuned mannequin on a held-out take a look at set to make sure it generalizes properly to unseen paperwork of your goal sort earlier than deploying to manufacturing.