xAI launched Grok-4-Fast, a cost-optimized successor to Grok-4 that merges “reasoning” and “non-reasoning” behaviors into a single set of weights controllable via system prompts. The model targets high-throughput search, coding, and Q&A with a 2M-token context window and native tool-use RL that decides when to browse the web, execute code, or call tools.

Architecture note

Earlier Grok releases split long-chain “reasoning” and short “non-reasoning” responses across separate models. Grok-4-Fast’s unified weight space reduces end-to-end latency and token usage by steering behavior via system prompts, which matters for real-time applications (search, assistive agents, and interactive coding) where switching models penalizes both latency and cost.
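Because both API SKUs sit on the same weights, choosing between them becomes a per-request setting rather than a hand-off to a separate model. The sketch below shows one way a client might route queries. It assumes xAI's OpenAI-compatible chat-completions request body (`model`, `messages`) and uses the two SKU names from the announcement; the keyword heuristic `needs_reasoning` and its hint list are hypothetical and not part of xAI's API.

```python
# SKU names as listed in xAI's announcement. The request shape (model + messages)
# assumes an OpenAI-compatible chat-completions API.
REASONING_SKU = "grok-4-fast-reasoning"
NON_REASONING_SKU = "grok-4-fast-non-reasoning"

# Hypothetical hints that a query would benefit from long-chain reasoning.
REASONING_HINTS = ("prove", "derive", "debug", "step by step", "optimize")


def needs_reasoning(query: str) -> bool:
    """Hypothetical router: send 'hard-looking' queries to the reasoning SKU."""
    q = query.lower()
    return any(hint in q for hint in REASONING_HINTS)


def build_request(query: str, system_prompt: str = "You are a helpful assistant.") -> dict:
    """Build a chat-completions style payload targeting one of the two SKUs."""
    model = REASONING_SKU if needs_reasoning(query) else NON_REASONING_SKU
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": query},
        ],
    }


if __name__ == "__main__":
    print(build_request("What is the capital of France?")["model"])
    print(build_request("Debug this stack trace step by step")["model"])
```

A keyword router is deliberately crude; in practice the routing signal could come from the application itself (for example, an "interactive coding" mode always using the reasoning SKU).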

Search and agentic use

Grok-4-Fast was trained end-to-end with tool-use reinforcement learning and shows gains on search-centric agent benchmarks: BrowseComp 44.9%, SimpleQA 95.0%, Reka Research 66.0%, plus higher scores on Chinese variants (e.g., BrowseComp-zh 51.2%). xAI also cites private battle-testing on LMArena, where grok-4-fast-search (codename “menlo”) ranks #1 in the Search Arena with 1163 Elo, and the text variant (codename “tahoe”) sits at #8 in the Text Arena, roughly on par with grok-4-0709.

Performance and efficiency deltas

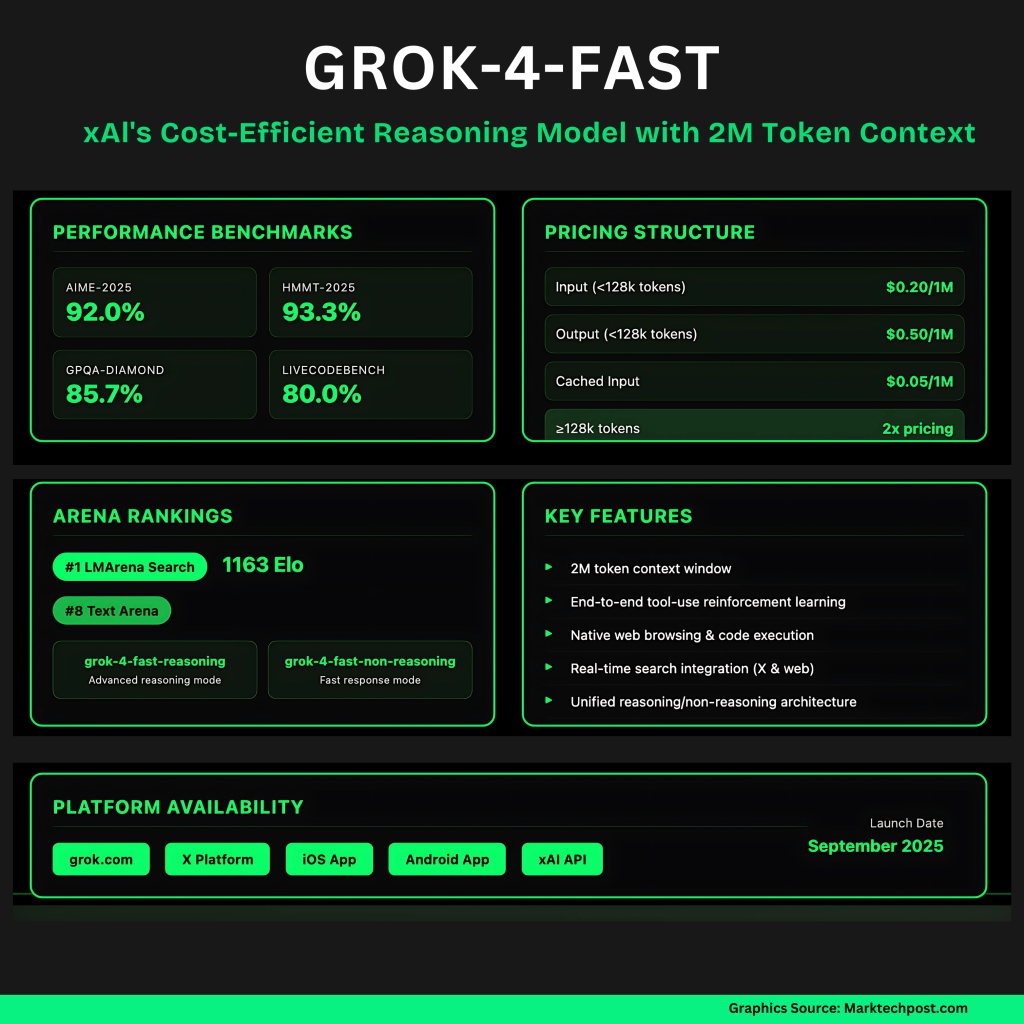

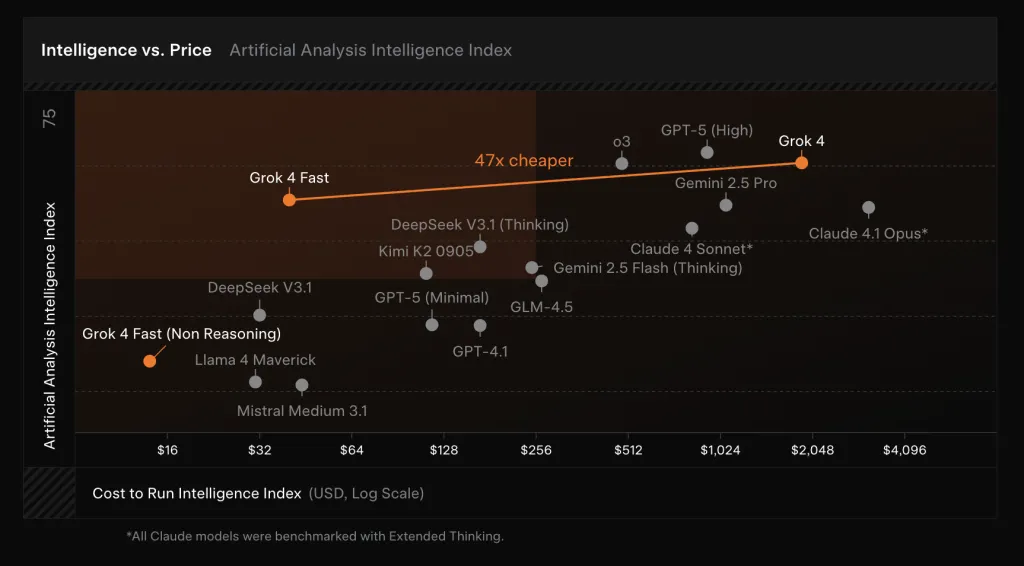

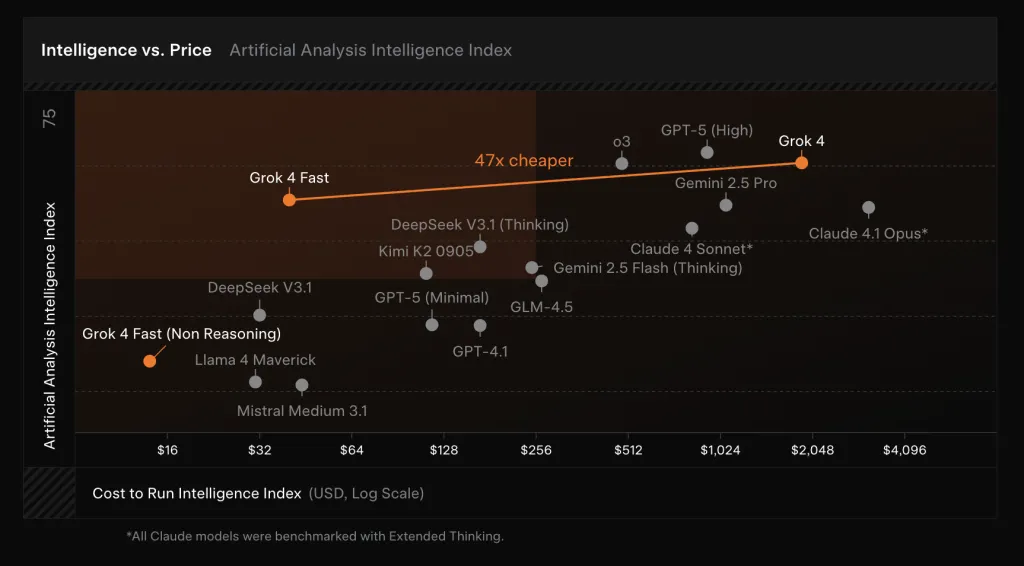

On internal and public benchmarks, Grok-4-Fast posts frontier-class scores while cutting token usage. xAI reports pass@1 results of 92.0% (AIME 2025, no tools), 93.3% (HMMT 2025, no tools), 85.7% (GPQA Diamond), and 80.0% (LiveCodeBench Jan–May), approaching or matching Grok-4 while using ~40% fewer “thinking” tokens on average. The company frames this as “intelligence density,” claiming a ~98% reduction in the price of reaching the same benchmark performance as Grok-4 once the lower token count and the new per-token pricing are combined.
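The two effects multiply: relative cost ≈ (token ratio) × (per-token price ratio). The back-of-envelope sketch below shows how ~40% fewer tokens could combine with a lower per-token price to reach ~98%. It assumes Grok-4's output price of $15 per 1M tokens (a figure not given in this article), treats “thinking” tokens as output tokens billed at the $0.50 per 1M rate quoted for the API, and ignores input tokens; xAI's own methodology may differ.

```python
# Assumption: Grok-4 output price of $15.00 per 1M tokens (not stated in this article).
GROK4_OUTPUT_PER_M = 15.00
# Grok-4-Fast output price per 1M tokens (<128k tier), as quoted for the xAI API.
GROK4_FAST_OUTPUT_PER_M = 0.50
# ~40% fewer "thinking" tokens on average, per xAI.
TOKEN_RATIO = 0.60


def relative_cost(token_ratio: float, price_ratio: float) -> float:
    """Cost of the new model relative to the old one for the same task."""
    return token_ratio * price_ratio


price_ratio = GROK4_FAST_OUTPUT_PER_M / GROK4_OUTPUT_PER_M  # 1/30
rel = relative_cost(TOKEN_RATIO, price_ratio)
print(f"relative cost: {rel:.3f}, reduction: {1 - rel:.0%}")
```

Under these assumptions the script prints `relative cost: 0.020, reduction: 98%`, i.e. 0.6 × (1/30) = 0.02, which is consistent with the headline figure.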

Deployment and pricing

The model is generally available to all users in Grok’s Fast and Auto modes across web and mobile; Auto will select Grok-4-Fast for difficult queries to improve latency without losing quality, and, for the first time, free users get access to xAI’s latest model tier. For developers, xAI exposes two SKUs, grok-4-fast-reasoning and grok-4-fast-non-reasoning, both with 2M context. Pricing (xAI API) is $0.20 / 1M input tokens (<128k), $0.40 / 1M input tokens (≥128k), $0.50 / 1M output tokens (<128k), $1.00 / 1M output tokens (≥128k), and $0.05 / 1M cached input tokens.
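The tiered rates above can be turned into a per-request estimate. This is a minimal sketch under assumptions the article does not spell out: the tier is chosen from the total prompt size (input including cached tokens), the chosen tier applies to every token in that request, “128k” means 128,000 tokens, and cached input is billed at the flat $0.05 rate in both tiers. The function name `request_cost` is hypothetical; check xAI's pricing documentation before relying on it.

```python
# USD per 1M tokens, as listed for the xAI API: (<128k tier, >=128k tier).
RATES = {
    "input": (0.20, 0.40),
    "output": (0.50, 1.00),
}
CACHED_INPUT_PER_M = 0.05  # assumption: flat rate regardless of tier
THRESHOLD = 128_000        # assumption: "128k" read as 128,000 prompt tokens


def request_cost(input_tokens: int, output_tokens: int, cached_tokens: int = 0) -> float:
    """Estimate the USD cost of one request (hypothetical helper).

    Assumes cached_tokens is a subset of input_tokens, the tier is picked by
    total prompt size, and that tier applies to all tokens in the request.
    """
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    tier = 1 if input_tokens >= THRESHOLD else 0
    uncached = input_tokens - cached_tokens
    cost_per_m = (
        uncached * RATES["input"][tier]
        + cached_tokens * CACHED_INPUT_PER_M
        + output_tokens * RATES["output"][tier]
    )
    return cost_per_m / 1_000_000


if __name__ == "__main__":
    print(round(request_cost(10_000, 2_000), 6))
    print(round(request_cost(200_000, 1_000), 6))
    print(round(request_cost(100_000, 0, cached_tokens=80_000), 6))
```

For example, a 10,000-token prompt with 2,000 output tokens comes to about $0.003, while a 200,000-token prompt with 1,000 output tokens lands in the upper tier at about $0.081. Heavy prompt caching matters most for long, repeated contexts: 80,000 cached tokens cost a quarter of what they would at the base input rate.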

5 Technical Takeaways:

- Unified model + 2M context. Grok-4-Fast uses a single weight space for “reasoning” and “non-reasoning,” steered by prompts, with a 2,000,000-token window across both SKUs.

- Pricing for scale. API pricing starts at $0.20/M input and $0.50/M output, with cached input at $0.05/M and higher rates only beyond 128K context.

- Efficiency claims. xAI reports ~40% fewer “thinking” tokens at comparable accuracy vs Grok-4, yielding a ~98% lower price to match Grok-4 performance on frontier benchmarks.

- Benchmark profile. Reported pass@1: AIME-2025 92.0%, HMMT-2025 93.3%, GPQA-Diamond 85.7%, LiveCodeBench (Jan–May) 80.0%.

- Agentic/search use. Post-trained with tool-use RL; positioned for browsing/search workflows, with documented search-agent metrics and live-search billing in the docs.

Summary

Grok-4-Fast packages Grok-4-level capability into a single, prompt-steerable model with a 2M-token window, tool-use RL, and pricing tuned for high-throughput search and agent workloads. Early public signals (LMArena #1 in Search, competitive Text placement) align with xAI’s claim of comparable accuracy using ~40% fewer “thinking” tokens, which translates to lower latency and unit cost in production.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.