Retrieval-Augmented Technology is altering the way in which LLMs faucet into exterior data. The issue is that quite a lot of builders misunderstand what RAG really does. They deal with the doc sitting within the vector retailer and assume the magic begins and ends with retrieving it. However indexing and retrieval aren’t the identical factor in any respect.

Indexing is about the way you select to signify data. Retrieval is about what elements of that data the mannequin will get to see. When you acknowledge that hole, the entire image shifts. You begin to understand how a lot management you even have over the mannequin’s reasoning, velocity, and grounding.

This information breaks down what RAG indexing actually means and walks via sensible methods to design indexing methods that really assist your system assume higher, not simply fetch textual content.

What’s RAG indexing?

RAG indexing is the premise of retrieval. It’s the course of of reworking uncooked data into numerical knowledge that may then be searched by way of similarity queries. This numerical knowledge is known as embeddings, and embeddings captures which means, relatively than simply floor stage textual content.

Think about this like constructing a searchable semantic map of your data base. Every chunk, abstract, or variant of a question turns into some extent alongside the map. The extra organized this map is, the higher your retriever can determine related data when a consumer asks a query.

In case your indexing is off, resembling in case your chunks are too massive, the embeddings are capturing noise, or your illustration of the information doesn’t signify consumer intent, then no LLM will make it easier to very a lot. The standard of retrieval will all the time depend upon how successfully the information is listed, not how nice your machine studying mannequin is.

Why it Issues?

You aren’t constrained to retrieving solely what you index. The facility of your RAG system is how successfully your index displays which means and never textual content. Indexing articulates the body via which your retriever sees the data.

Once you match your indexing technique to your knowledge and your consumer want, retrieval will get sharper, fashions will hallucinate much less, and consumer will get correct completions. A well-designed index turns RAG from a retrieval pipeline into an actual semantic reasoning engine.

RAG Indexing Methods That Truly Work

Suppose now we have a doc about Python programming:

Doc = """ Python is a flexible programming language broadly utilized in knowledge science, machine studying, and net growth. It helps a number of paradigms and has a wealthy ecosystem of libraries like NumPy, pandas, and TensorFlow. """ Now, let’s discover when to make use of every RAG indexing technique successfully and implement it for such content material to construct a performant retrieval system.

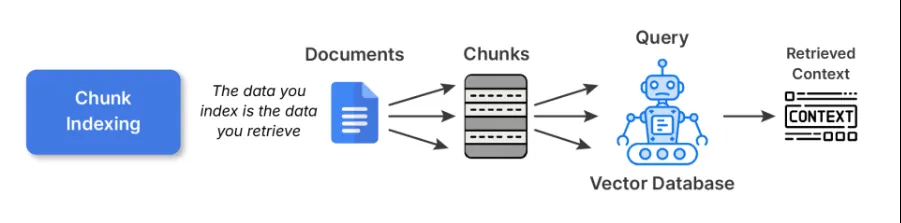

1. Chunk Indexing

That is the place to begin for many RAG pipelines. You break up giant paperwork into smaller, semantically coherent chunks and embed each utilizing some embedding mannequin. These embeddings are then saved in a vector database.

Instance Code:

# 1. Chunk Indexing

def chunk_indexing(doc, chunk_size=100):

phrases = doc.break up()

chunks = []

current_chunk = []

current_len = 0

for phrase in phrases:

current_len += len(phrase) + 1 # +1 for house

current_chunk.append(phrase)

if current_len >= chunk_size:

chunks.append(" ".be part of(current_chunk))

current_chunk = []

current_len = 0

if current_chunk:

chunks.append(" ".be part of(current_chunk))

chunk_embeddings = [embed(chunk) for chunk in chunks]

return chunks, chunk_embeddings

chunks, chunk_embeddings = chunk_indexing(doc_text, chunk_size=50)

print("Chunks:n", chunks)Greatest Practices:

- All the time hold the chunks round 200-400 tokens for brief kind textual content or 500-800 for lengthy kind technical content material.

- Make certain to keep away from splitting mid sentences or mid paragraph, use logical, semantic breaking factors for higher chunking.

- Good to make use of overlapping home windows (20-30%) in order that context at boundaries isn’t misplaced.

Commerce-offs: Chunk indexing is easy and general-purpose indexing. Nonetheless, greater chunks can hurt retrieval precision, whereas smaller chunks can fragment context and overwhelm the LLM with items that don’t match collectively.

Learn extra: Construct RAG Pipeline utilizing LlamaIndex

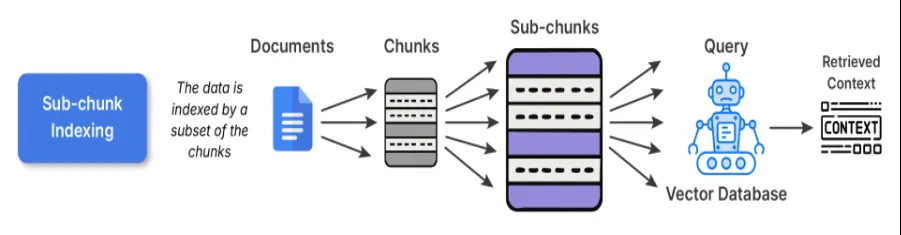

2. Sub-chunk Indexing

Sub-chunk indexing serves as a layer of refinement on high of chunk indexing. When embedding the traditional chunks, you additional divide the chunk into smaller sub-chunks. Once you wish to retrieve, you evaluate the sub-chunks to the question, and as soon as that sub-chunk matches your question, the complete dad or mum chunk is enter into the LLM.

Why this works:

The sub-chunks afford you the power to look in a extra pinpointed, refined, and actual manner, whereas retaining the big context that you simply wanted for reasoning. For instance, you’ll have a protracted analysis article, and the sub-chunk on one piece of content material in that article stands out as the rationalization of 1 method in a single lengthy paragraph, thus bettering each precision and interpretability.

Instance Code:

# 2. Sub-chunk Indexing

def sub_chunk_indexing(chunk, sub_chunk_size=25):

phrases = chunk.break up()

sub_chunks = []

current_sub_chunk = []

current_len = 0

for phrase in phrases:

current_len += len(phrase) + 1

current_sub_chunk.append(phrase)

if current_len >= sub_chunk_size:

sub_chunks.append(" ".be part of(current_sub_chunk))

current_sub_chunk = []

current_len = 0

if current_sub_chunk:

sub_chunks.append(" ".be part of(current_sub_chunk))

return sub_chunks

# Sub-chunks for first chunk (as instance)

sub_chunks = sub_chunk_indexing(chunks[0], sub_chunk_size=30)

sub_embeddings = [embed(sub_chunk) for sub_chunk in sub_chunks]

print("Sub-chunks:n", sub_chunks)

When to make use of: This could be advantageous for datasets that include a number of distinct concepts in every paragraph; for instance, when you contemplate data bases-like textbooks, analysis articles, and so on., this might be ideally suited.

Commerce-off: The fee is barely greater for preprocessing and storage because of the overlapping embeddings, nevertheless it has considerably higher alignment between question and content material.

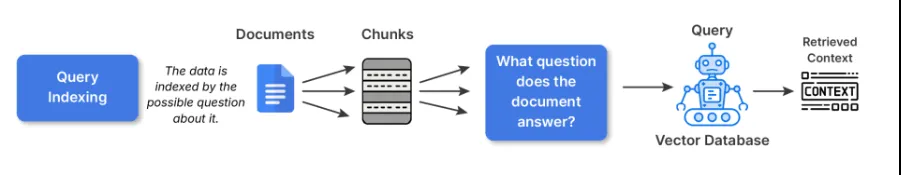

3. Question Indexing

Within the case of question indexing, the uncooked textual content isn’t instantly embedded. As an alternative, we create a number of imagined questions that every chunk may reply, then embeds that textual content. That is partly achieved to bridge the semantic hole of how customers ask and the way your paperwork describe issues.

For instance, in case your chunk says:

“LangChain has utilities for constructing RAG pipelines”

The mannequin would generate queries like:

- How do I construct a RAG pipeline in LangChain?

- What instruments for retrieval does LangChain have?

Then, when any actual consumer asks an identical query, the retrieval will hit a kind of listed queries instantly.

Instance Code:

# 3. Question Indexing - generate artificial queries associated to the chunk

def generate_queries(chunk):

# Easy artificial queries for demonstration

queries = [

"What is Python used for?",

"Which libraries does Python support?",

"What paradigms does Python support?"

]

query_embeddings = [embed(q) for q in queries]

return queries, query_embeddings

queries, query_embeddings = generate_queries(doc_text)

print("Artificial Queries:n", queries)Greatest Practices:

- When writing index queries, I might recommend utilizing LLMs to provide 3-5 queries per chunk.

- You can even deduplicate or cluster all questions which can be like make the precise index smaller.

When to make use of:

- Q&A methods, or a chatbot the place most consumer interactions are pushed by pure language questions.

- Search expertise the place the consumer is more likely to ask for what, how, or why sort inquiries.

Commerce-off: Whereas artificial enlargement provides preprocessing time and house, it supplies a significant enhance in retrieval relevance for consumer going through methods.

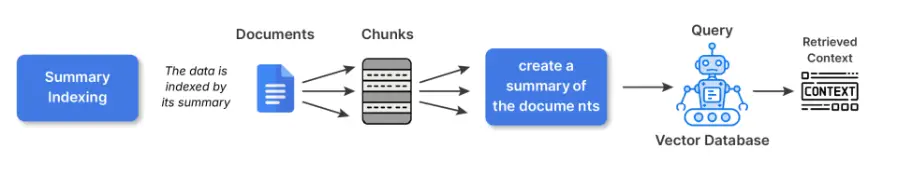

4. Abstract Indexing

Abstract indexing permits you to reframe items of fabric into smaller summaries previous to embedding. You keep the entire content material in one other location, after which retrieval is completed on the summarized variations.

Why that is helpful:

Constructions, dense or repetitive supply supplies (assume spreadsheets, coverage paperwork, technical manuals) usually are supplies that embedding instantly from the uncooked textual content model captures noise. Summarizing abstracts away the much less related floor particulars and is extra semantically significant to embeddings.

For Instance:

The unique textual content says: “Temperature readings from 2020 to 2025 ranged from 22 to 42 diploma Celsius, with anomalies attributed to El Nino”

The abstract can be: Annual temperature traits (2020-2025) with El Nino associated anomalies.

The abstract illustration supplies deal with the idea.

Instance Code:

# 4. Abstract Indexing

def summarize(textual content):

# Easy abstract for demonstration (exchange with an precise summarizer for actual use)

if "Python" in textual content:

return "Python: versatile language, utilized in knowledge science and net growth with many libraries."

return textual content

abstract = summarize(doc_text)

summary_embedding = embed(abstract)

print("Abstract:", abstract)

When to make use of it:

- With structured knowledge (tables, CSVs, log information)

- Technical or verbose content material the place embeddings will underperform utilizing uncooked textual content embeddings.

Commerce off: Summaries can danger shedding nuance/factual accuracy if summaries turn out to be too summary. For essential to area analysis, notably authorized, finance, and so on. hyperlink to the unique textual content for grounding.

5. Hierarchical Indexing

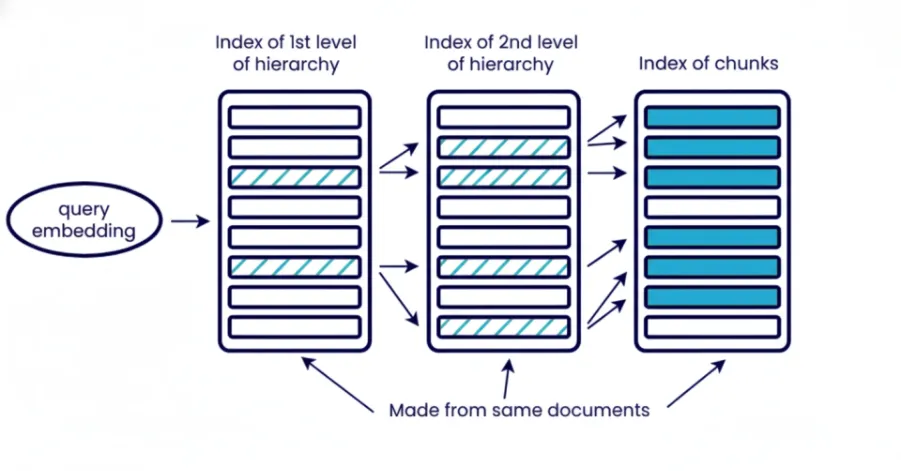

Hierarchical indexing organizes data into quite a lot of totally different ranges, paperwork, part, paragraph, sub-paragraph. You retrieve in phases beginning with broad introduce to slim right down to particular context. The highest stage for part retrieves sections of related paperwork and the following layer retrieve paragraph or sub-paragraph on particular context inside these retrieved part of final paperwork.

What does this imply?

Hierarchical retrieval reduces noise to the system and is helpful if you want to management the context dimension. That is particularly helpful when working with a big corpus of paperwork and you may’t pull it . It additionally enhance interpretability for subsequent evaluation as you may know which doc with which part contributed to to the ultimate reply.

Instance Code:

# 5. Hierarchical Indexing

# Arrange doc into ranges: doc -> chunks -> sub-chunks

hierarchical_index = {

"doc": doc_text,

"chunks": chunks,

"sub_chunks": {chunk: sub_chunk_indexing(chunk) for chunk in chunks}

}

print("Hierarchical index instance:")

print(hierarchical_index)Greatest Practices:

Use a number of embedding ranges or mixture of embedding and key phrases search. For instance, initially retrieve paperwork solely with BM25 after which extra exactly retrieve these related chunks or elements with embedding.

When to make use of it:

- Enterprise scale RAG with 1000’s of paperwork.

- Retrieving from lengthy kind sources resembling books, authorized archives or technical pdf’s.

Commerce off: Elevated complexity as a consequence of a number of retrievals ranges desired. Additionally requires further storage and preprocessing for metadata/summaries. Will increase question latency due to multi-step retrieval and never nicely suited to giant unstructured knowledge.

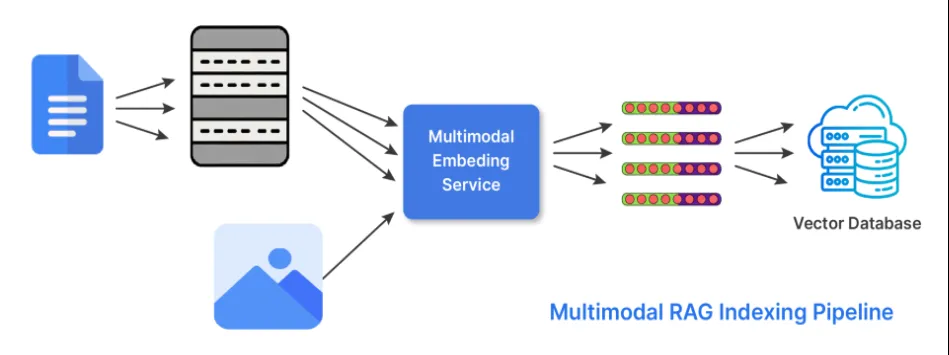

6. Hybrid Indexing (Multi-Modal)

Information isn’t simply in textual content. In its hybrid indexing kind, RAG does two issues to have the ability to work with a number of types of knowledge or modality’s. The retriever makes use of embeddings it generates from totally different encoders specialised or tuned for every of the attainable modalities. And the fetches outcomes from every of the related embeddings and combines them to generate a response utilizing scoring methods or late-fusion approaches.

Right here’s an instance of its use:

- Use CLIP or BLIP for pictures and textual content captions.

- Use CodeBERT or StarCoder embeddings to course of code.

Instance Code:

# 6. Hybrid Indexing (instance with textual content + picture)

# Instance textual content and dummy picture embedding (exchange embed_image with precise mannequin)

def embed_image(image_data):

# Dummy instance: picture knowledge represented as size of string (exchange with CLIP/BLIP encoder)

return [len(image_data) / 1000]

text_embedding = embed(doc_text)

image_embedding = embed_image("image_bytes_or_path_here")

print("Textual content embedding dimension:", len(text_embedding))

print("Picture embedding dimension:", len(image_embedding))

When to make use of hybrid indexing:

- When working with technical manuals or documentation that has pictures or charts.

- Multi-modal documentation or assist articles.

- Product catalogues or e-commerce.

Commerce-off: It’s a extra sophisticated logic and storage mannequin for retrieval, however a lot richer contextual understanding within the response and better flexibility within the area.

Conclusion

Profitable RAG methods depend upon applicable indexing methods for the kind of knowledge and inquiries to be answered. Indexing guides what the retriever finds and what the language mannequin will floor on, making it a essential basis past retrieval. The kind of indexing you’d use could also be chunk, sub-chunk, question, abstract, hierarchical, or hybrid indexing, and that indexing ought to comply with the construction current in your knowledge, which can add to relevance, and remove noise. Effectively-designed indexing processes will decrease hallucinations and supply an correct, reliable system.

Continuously Requested Questions

A. Indexing encodes data into embeddings, whereas retrieval selects which encoded items the mannequin sees to reply a question.

A. They form how exactly the system can match queries and the way a lot context the mannequin will get for reasoning.

A. Use it when your data base mixes textual content, pictures, code, or different modalities and also you want the retriever to deal with all of them.

Login to proceed studying and revel in expert-curated content material.

![What’s RAG Indexing? [6 Strategies for Smarter AI Retrieval] What’s RAG Indexing? [6 Strategies for Smarter AI Retrieval]](https://i3.wp.com/cdn.analyticsvidhya.com/wp-content/uploads/2025/11/RAG-Indexing-.png?w=696&resize=696,0&ssl=1)