Over the past few months, we’ve launched exciting updates to Lakeflow Jobs (formerly known as Databricks Workflows) to improve data orchestration and optimize workflow performance.

For newcomers, Lakeflow Jobs is the built-in orchestrator for Lakeflow, a unified and intelligent solution for data engineering with streamlined ETL development and operations, built on the Data Intelligence Platform. Lakeflow Jobs is the most trusted orchestrator for the Lakehouse and production-grade workloads, with over 14,600 customers, 187,000 weekly users, and 100 million jobs run every week.

From UI improvements to more advanced workflow control, check out the latest in Databricks’ native data orchestration solution and discover how data engineers can streamline their end-to-end data pipeline experience.

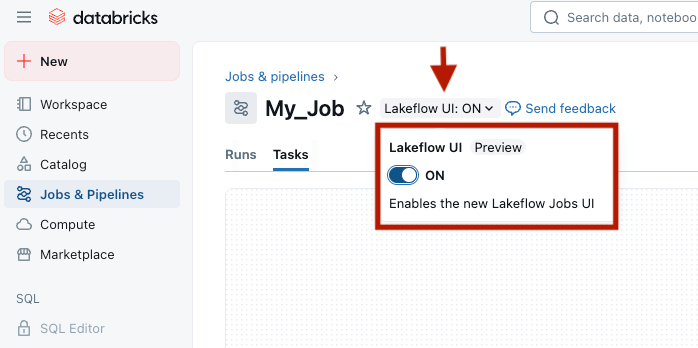

Refreshed UI for a more focused user experience

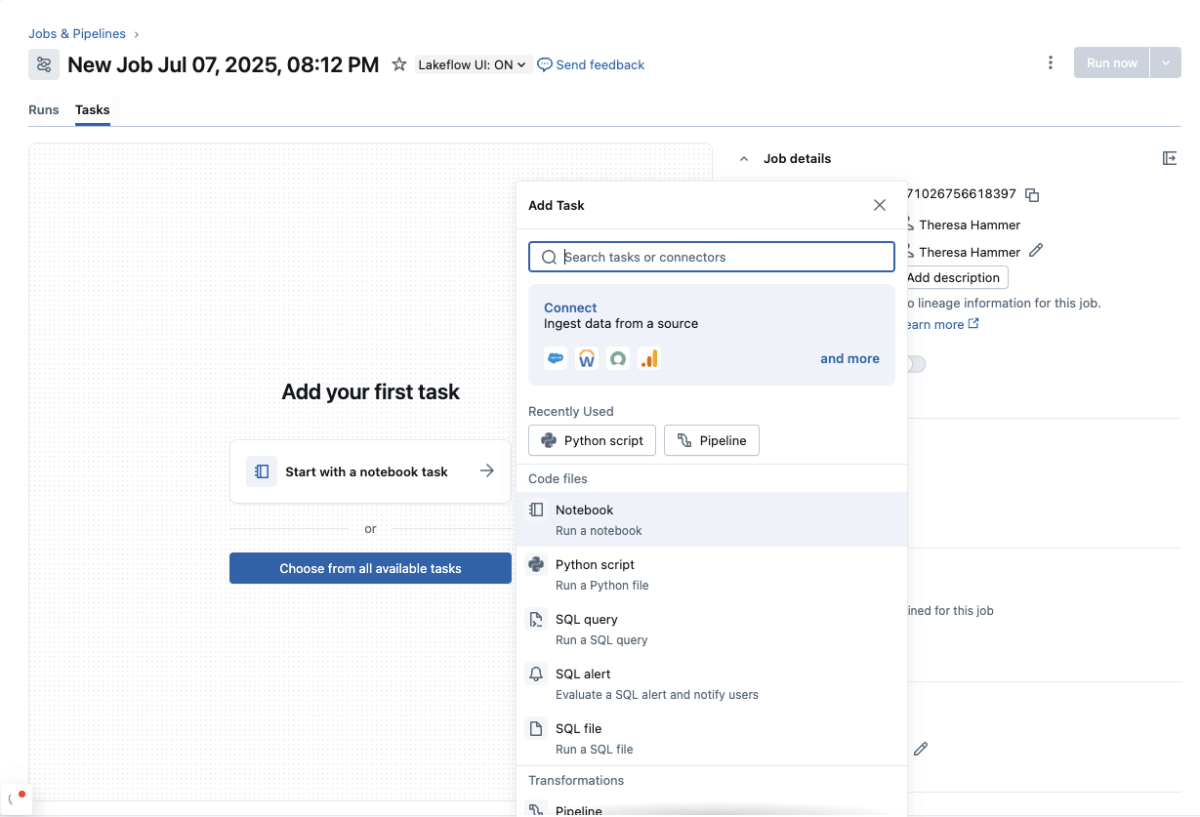

We’ve redesigned our interface to give Lakeflow Jobs a fresh and modern look. The new compact layout allows for a more intuitive orchestration journey. Users will enjoy a task palette that now offers shortcuts and a search button to help them find and access their tasks more easily, whether it’s a Lakeflow Pipeline, an AI/BI dashboard, a notebook, SQL, or more.

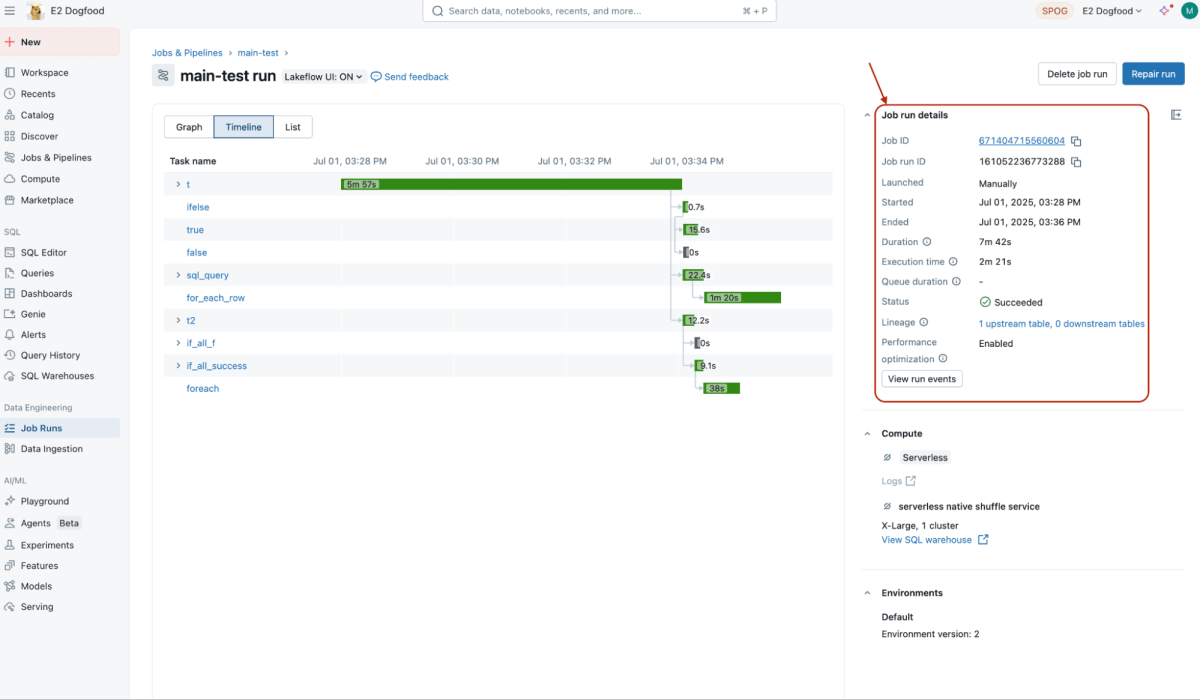

For monitoring, customers can now find information on their jobs’ execution times in the right panel under Job and Task run details, allowing them to monitor processing times and quickly identify any data pipeline issues.

We’ve also improved the sidebar by letting users choose which sections (Job details, Schedules & Triggers, Job parameters, etc.) to hide or keep open, making their orchestration interface cleaner and more relevant.

Overall, Lakeflow Jobs users can expect a more streamlined, focused, and simplified orchestration workflow. The new layout is currently available to users who’ve opted into the preview and enabled the toggle on the Jobs page.

More controlled and efficient data flows

Our orchestrator is constantly being enhanced with new features. The latest update introduces advanced controls for data pipeline orchestration, giving users greater command over their workflows for more efficiency and optimized performance.

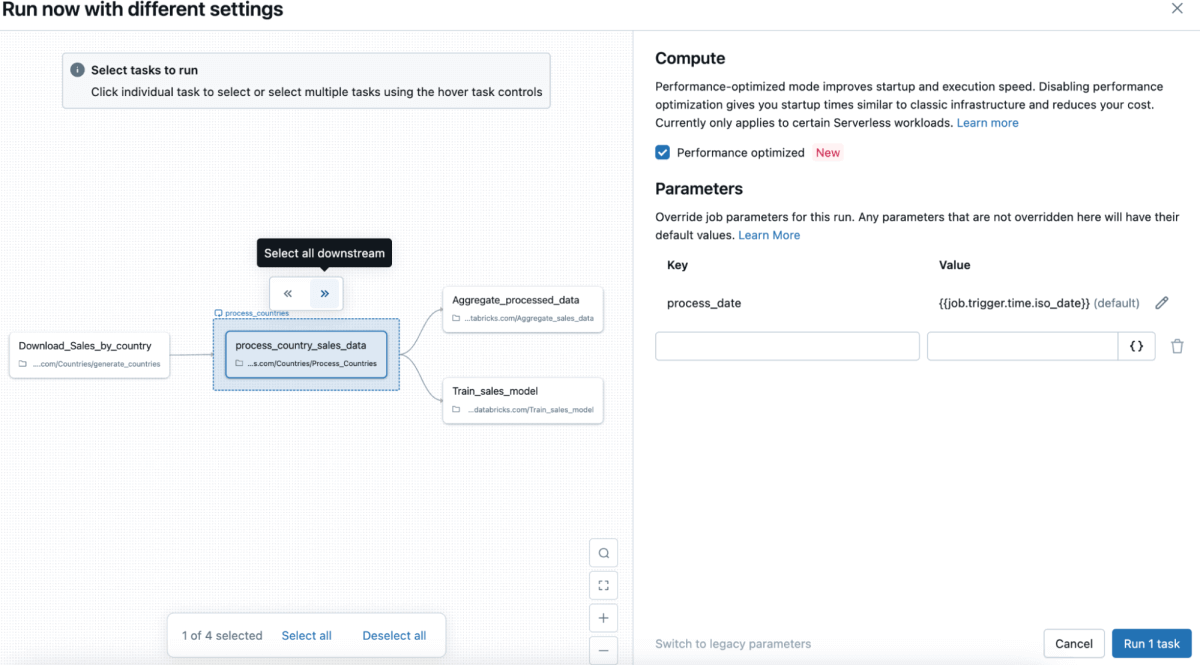

Partial runs allow users to select which tasks to execute without affecting others. Previously, testing individual tasks required running the entire job, which could be computationally intensive, slow, and costly. Now, on the Jobs & Pipelines page, users can select “Run now with different settings” and choose specific tasks to execute without impacting others, avoiding wasted compute and high costs. Similarly, Partial repairs enable faster debugging by allowing users to fix individual failed tasks without rerunning the entire job.

With more control over their run and repair flows, customers can speed up development cycles, improve job uptime, and reduce compute costs. Both Partial runs and repairs are generally available in the UI and the Jobs API.
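For the Jobs API route, a partial run or partial repair comes down to naming the tasks in the request body. The sketch below only builds those bodies and sends nothing. It assumes the `only` field on the run-now request and the `rerun_tasks` field on the repair request; the job ID, run ID, and task keys are hypothetical, so check the Jobs API reference for your workspace before relying on the field names.

```python
import json


def build_partial_run_payload(job_id, task_keys):
    """Build a run-now request body that limits the run to the given tasks."""
    if not task_keys:
        raise ValueError("task_keys must name at least one task")
    # Assumption: "only" is the field that restricts a run to a subset of tasks.
    return {"job_id": job_id, "only": list(task_keys)}


def build_partial_repair_payload(run_id, failed_task_keys):
    """Build a repair request body that reruns only the given failed tasks."""
    if not failed_task_keys:
        raise ValueError("failed_task_keys must name at least one task")
    # Assumption: "rerun_tasks" lists the task keys to rerun in an existing run.
    return {"run_id": run_id, "rerun_tasks": list(failed_task_keys)}


if __name__ == "__main__":
    # Hypothetical IDs and task keys, for illustration only.
    print(json.dumps(build_partial_run_payload(123, ["transform_orders"]), indent=2))
    print(json.dumps(build_partial_repair_payload(456, ["load_orders"]), indent=2))
```

Keeping the payload construction separate from the HTTP call makes it easy to review which tasks a run will touch before anything is submitted.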

To all SQL fans out there, we have some good news for you! In this latest round of updates, customers can use the outputs of SQL queries as parameters in Lakeflow Jobs to orchestrate their data. This makes it easier for SQL developers to pass parameters between tasks and share context within a job, resulting in more cohesive and unified data pipeline orchestration. This feature is also now generally available.
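As a rough illustration of passing a SQL result downstream, the sketch below builds a parameter value that refers to one column of an upstream SQL task’s first result row. The reference syntax `{{tasks.<task_key>.output.first_row.<column>}}` is an assumption about how these values are written, and the task key, column, and parameter names are hypothetical; confirm the exact form in the Lakeflow Jobs documentation.

```python
def sql_output_reference(task_key, column):
    """Return a reference to one column of a SQL task's first result row."""
    for name, value in (("task_key", task_key), ("column", column)):
        # Accept only simple identifiers (letters, digits, underscores).
        if not value or not value.replace("_", "").isalnum():
            raise ValueError(f"{name} must be a non-empty identifier, got {value!r}")
    # Assumed reference syntax; verify against the product documentation.
    return "{{tasks." + task_key + ".output.first_row." + column + "}}"


# Hypothetical downstream task parameters fed by an upstream SQL task.
downstream_parameters = {
    "max_order_date": sql_output_reference("latest_orders", "max_order_date"),
}
print(downstream_parameters)
```

The downstream task then receives the resolved value at run time, so the SQL task and its consumers stay decoupled apart from the agreed column name.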

Quick-start with Lakeflow Connect in Jobs

In addition to these improvements, we’re also making it fast and easy to ingest data into Lakeflow Jobs by more tightly integrating Jobs with Lakeflow Connect, Databricks Lakeflow’s managed and reliable data ingestion solution with built-in connectors.

Customers can already orchestrate ingestion pipelines that originate from Lakeflow Connect, using any of the fully managed connectors (e.g., Salesforce, Workday, etc.) or directly from notebooks. Now, with Lakeflow Connect in Jobs, customers can create an ingestion pipeline directly from two entry points in their Jobs interface, all within a point-and-click environment. Since ingestion is often the first step in ETL, this integration with Lakeflow Connect enables customers to consolidate and streamline their data engineering experience, from end to end.

Lakeflow Connect in Jobs is now generally available for customers. Learn more about this and other recent Lakeflow Connect releases.

A single orchestrator for all your workloads

We’re continuously innovating on Lakeflow Jobs to offer our customers a modern and centralized orchestration experience for all their data needs across the organization. More features are coming to Jobs: we’ll soon unveil a way for users to trigger jobs based on table updates, provide support for system tables, and expand our observability capabilities, so stay tuned!

For those who want to keep learning about Lakeflow Jobs, check out our on-demand sessions from our Data+AI Summit and explore Lakeflow in a variety of use cases, demos, and more!