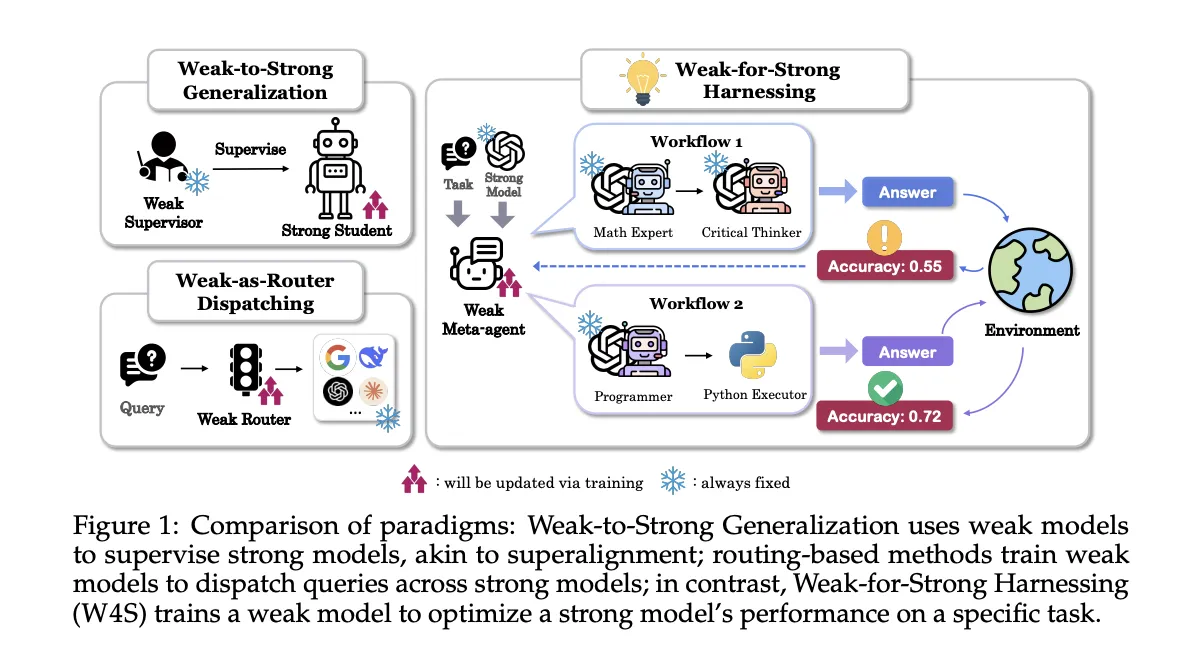

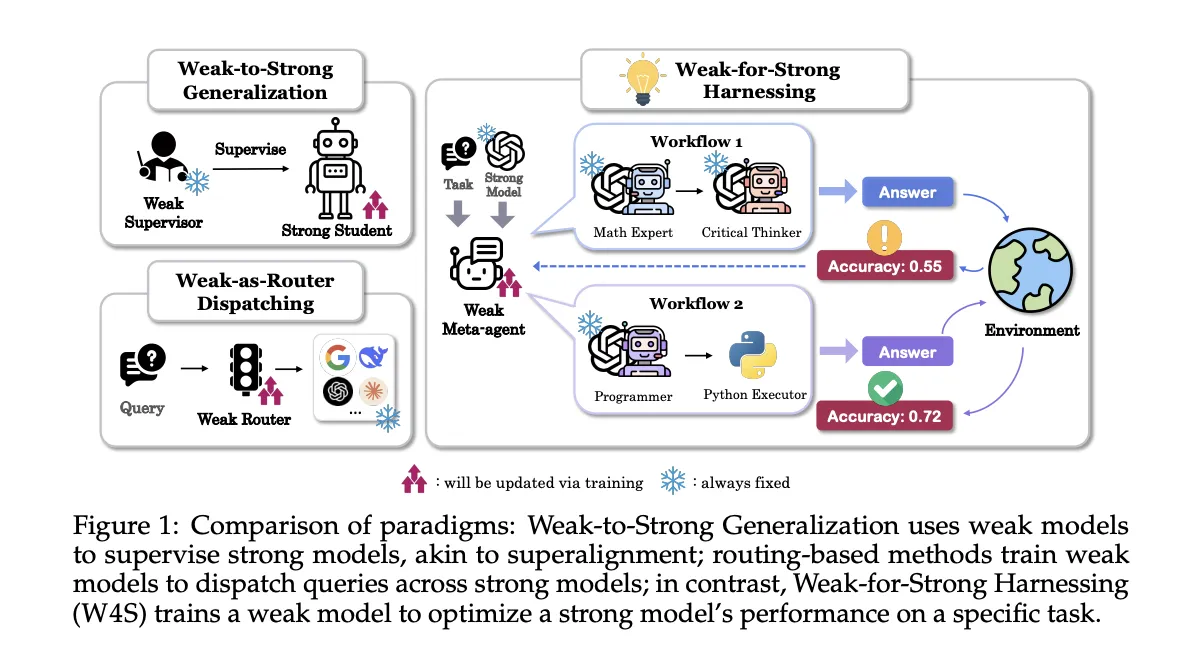

Researchers from Stanford, EPFL, and UNC introduce Weak-for-Strong Harnessing (W4S), a new reinforcement learning (RL) framework that trains a small meta-agent to design and refine code workflows that call a stronger executor model. The meta-agent does not fine-tune the strong model; it learns to orchestrate it. W4S formalizes workflow design as a multi-turn Markov decision process and trains the meta-agent with a method called Reinforcement Learning for Agentic Workflow Optimization (RLAO). The research team reports consistent gains across 11 benchmarks with a 7B meta-agent trained for about 1 GPU hour.

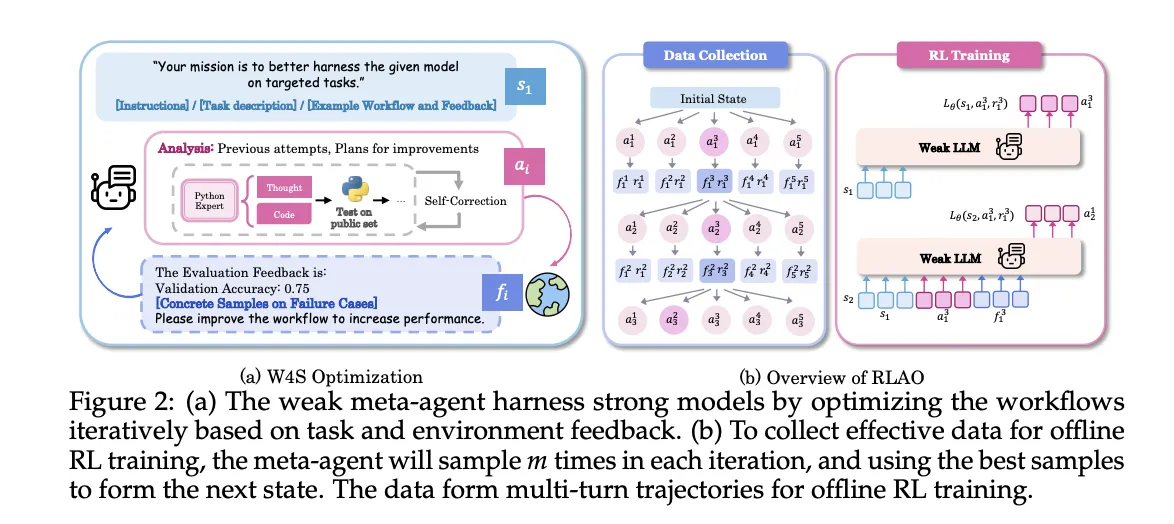

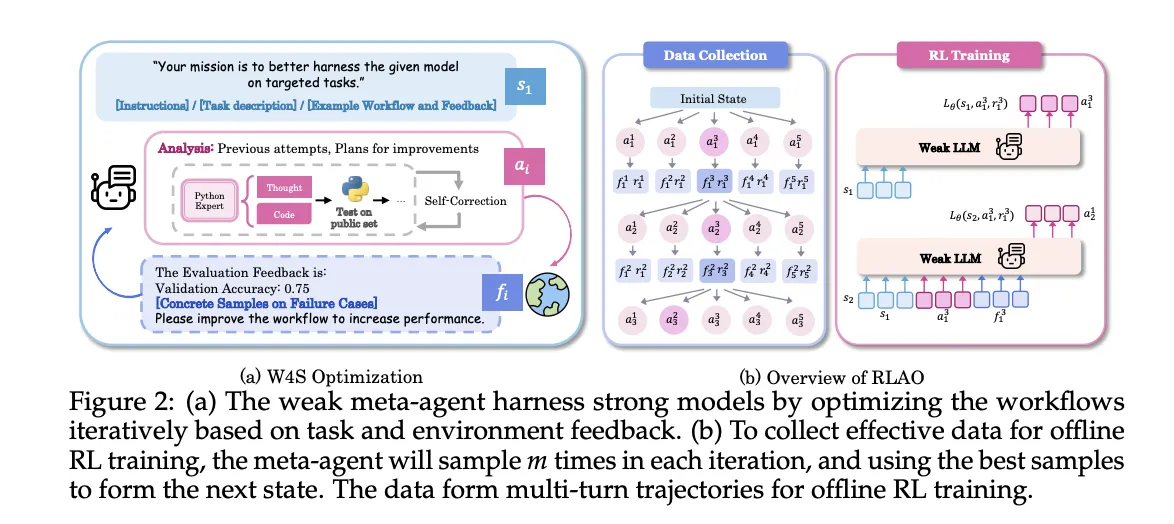

W4S operates in turns. The state contains task instructions, the current workflow program, and feedback from prior executions. An action has 2 parts: an analysis of what to change, and new Python workflow code that implements those changes. The environment executes the code on validation items, returns accuracy and failure cases, and provides a new state for the next turn. The meta-agent can run a quick self-check on one sample; if errors arise it attempts up to 3 repairs, and if errors persist the action is skipped. This loop provides a learning signal without touching the weights of the strong executor.
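A minimal sketch of the state, the action, and the self-check with bounded repairs may make the turn structure concrete. The names `State`, `Action`, `self_check`, `run_on_sample`, and `repair` are hypothetical and chosen for illustration; they are not taken from the W4S code base.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class State:
    instructions: str           # task instructions
    workflow_code: str          # current workflow program
    feedback: List[dict] = field(default_factory=list)  # results of prior executions


@dataclass
class Action:
    analysis: str               # what to change and why
    workflow_code: str          # new Python workflow implementing the change


def self_check(
    action: Action,
    run_on_sample: Callable[[str], Optional[str]],   # returns an error message or None
    repair: Callable[[Action, str], Action],         # hypothetical repair step
    max_repairs: int = 3,
) -> Optional[Action]:
    """Run the workflow on one sample; repair up to max_repairs times.

    Returns a runnable action, or None if errors persist (the action is skipped).
    """
    for attempt in range(max_repairs + 1):
        error = run_on_sample(action.workflow_code)
        if error is None:
            return action
        if attempt < max_repairs:
            action = repair(action, error)
    return None
```

In this sketch, a `None` result corresponds to the skipped action described above, so a broken workflow never reaches full validation.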

W4S runs as an iterative loop

- Workflow generation: The weak meta-agent writes a new workflow that leverages the strong model, expressed as executable Python code.

- Execution and feedback: The strong model executes the workflow on validation samples, then returns accuracy and error cases as feedback.

- Refinement: The meta-agent uses the feedback to update the analysis and the workflow, then repeats the loop.
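The three steps above can be sketched as a short loop. This is an illustration under stated assumptions, not the authors' implementation: `propose` stands in for the meta-agent, `evaluate` stands in for running the workflow with the strong executor on validation data, and returning the best workflow seen is an assumption of this sketch.

```python
from typing import Callable, List, Tuple


def optimize_workflow(
    propose: Callable[[str, List[dict]], Tuple[str, str]],   # (code, feedback) -> (analysis, new_code)
    evaluate: Callable[[str], Tuple[float, List[str]]],      # code -> (accuracy, failure cases)
    initial_code: str,
    turns: int = 10,
) -> Tuple[str, float, List[float]]:
    """Generate, execute, and refine a workflow for a fixed number of turns."""
    code = initial_code
    feedback: List[dict] = []
    best_code, best_acc = initial_code, evaluate(initial_code)[0]
    history = [best_acc]

    for _ in range(turns):
        # Workflow generation: the meta-agent writes new workflow code.
        analysis, new_code = propose(code, feedback)
        # Execution and feedback: run on validation samples.
        acc, failures = evaluate(new_code)
        feedback.append({"analysis": analysis, "accuracy": acc, "failures": failures})
        history.append(acc)
        # Refinement: the next turn starts from the new workflow and its feedback.
        if acc > best_acc:
            best_code, best_acc = new_code, acc
        code = new_code

    return best_code, best_acc, history
```

A toy call with stub functions shows the shape of the result: `optimize_workflow(lambda c, f: ("add a step", c + "+"), lambda c: (min(c.count("+"), 3) / 4, []), "", turns=4)`.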

Reinforcement Learning for Agentic Workflow Optimization (RLAO)

RLAO is an offline reinforcement learning procedure over multi-turn trajectories. At each iteration, the system samples multiple candidate actions, keeps the best-performing action to advance the state, and stores the others for training. The policy is optimized with reward-weighted regression. The reward is sparse and compares current validation accuracy to history: a higher weight is given when the new result beats the previous best, and a smaller weight is given when it beats the last iteration. This objective favors steady progress while controlling exploration cost.
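The reward rule and the weighted objective can be sketched in a few lines. The specific values `high=1.0` and `low=0.5` are assumptions made for illustration, as is weighting each log-probability directly by its reward; exponentiated rewards are another common choice in reward-weighted regression. The function names are hypothetical.

```python
from typing import List, Tuple


def rlao_reward(acc: float, best_so_far: float, last_acc: float,
                high: float = 1.0, low: float = 0.5) -> float:
    """Sparse reward comparing current validation accuracy to history."""
    if acc > best_so_far:      # beats the previous best: higher weight
        return high
    if acc > last_acc:         # only beats the last iteration: smaller weight
        return low
    return 0.0                 # no improvement


def pick_and_store(candidates: List[Tuple[str, float, float]],
                   buffer: List[Tuple[str, float, float]]) -> Tuple[str, float, float]:
    """candidates are (action, accuracy, reward) tuples.

    The best-performing candidate advances the state; the others are
    stored in the offline buffer for training.
    """
    best = max(candidates, key=lambda c: c[1])
    buffer.extend(c for c in candidates if c is not best)
    return best


def reward_weighted_loss(log_probs: List[float], rewards: List[float]) -> float:
    """Reward-weighted negative log-likelihood, normalized by total reward."""
    total = sum(rewards)
    if total == 0:
        return 0.0
    return -sum(r * lp for r, lp in zip(rewards, log_probs)) / total
```

Under this weighting, actions with zero reward contribute nothing to the loss, so the policy is pulled only toward actions that improved on history.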

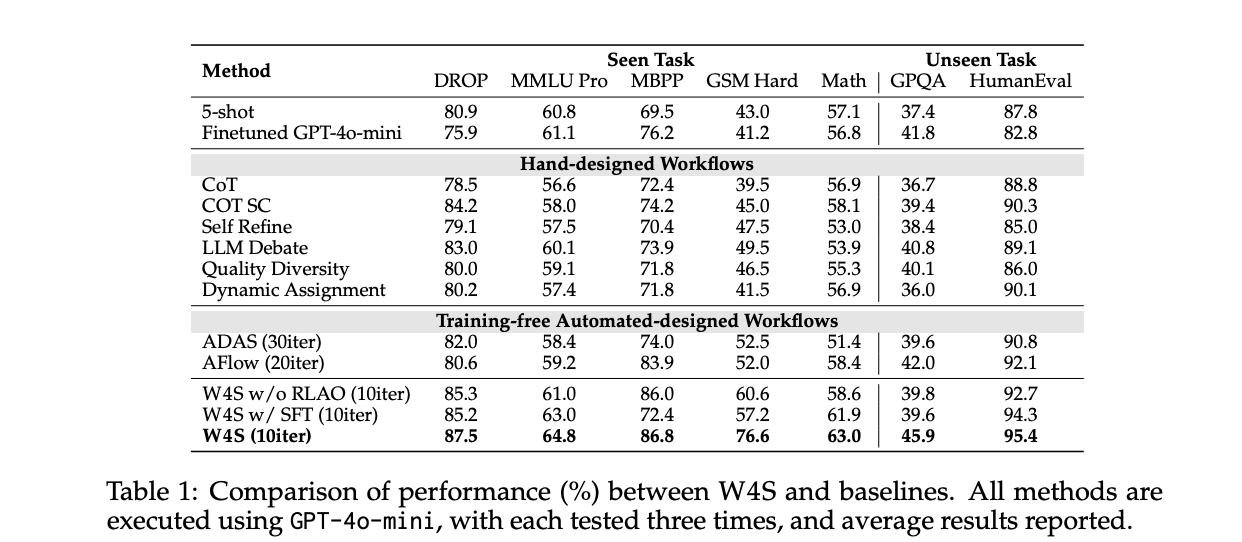

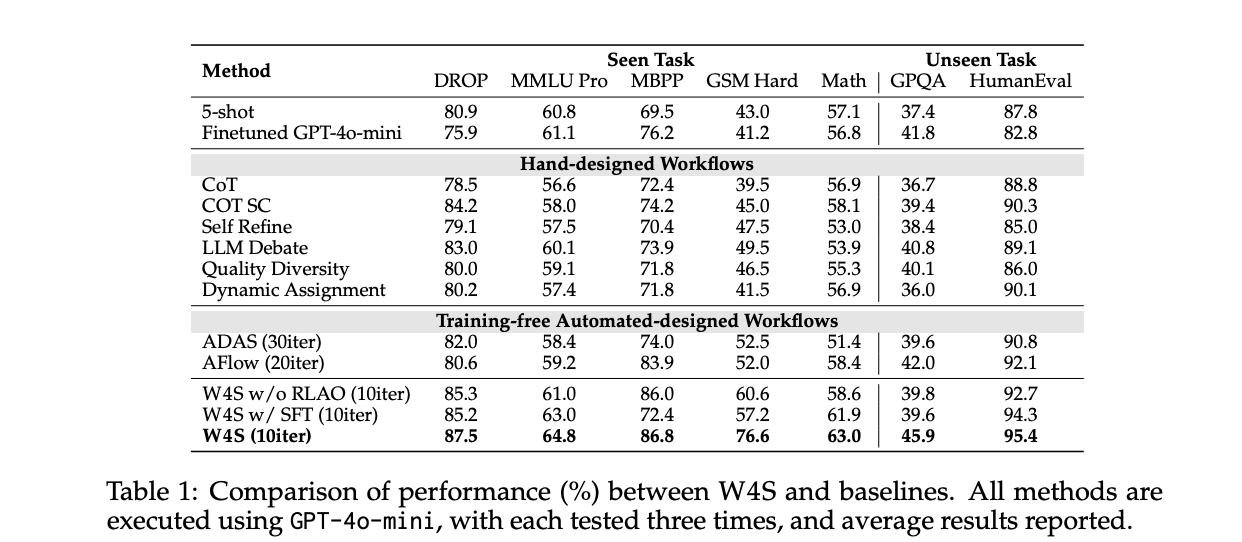

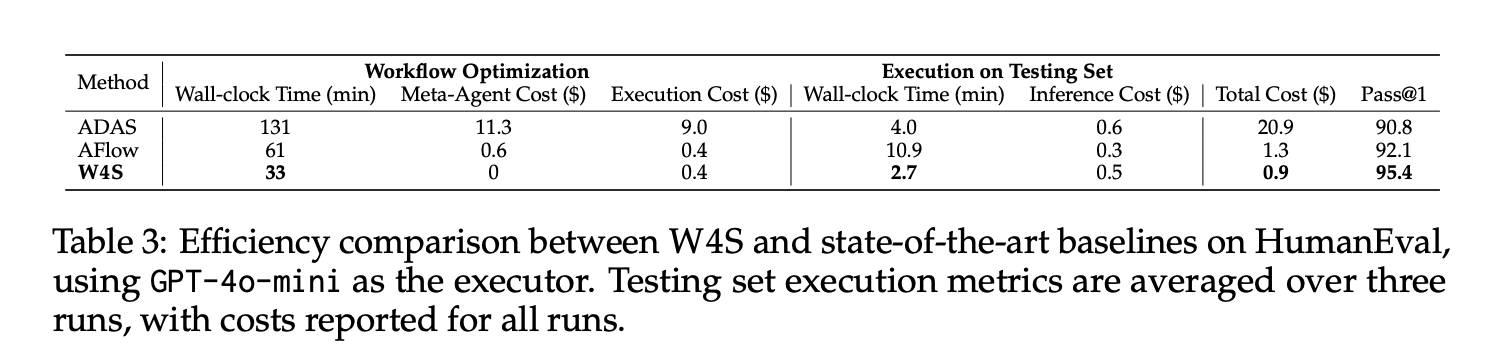

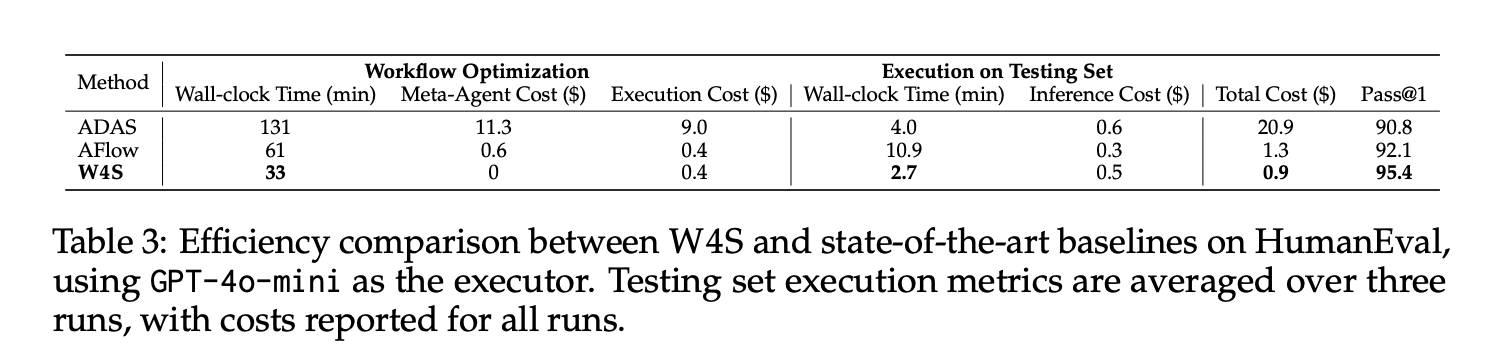

Understanding the Results

On HumanEval with GPT-4o-mini as executor, W4S achieves Pass@1 of 95.4, with about 33 minutes of workflow optimization, zero meta-agent API cost, an optimization execution cost of about 0.4 dollars, and about 2.7 minutes to execute the test set at about 0.5 dollars, for a total of about 0.9 dollars. Under the same executor, AFlow and ADAS trail this number. The reported average gains against the strongest automated baseline range from 2.9% to 24.6% across 11 benchmarks.
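The cost breakdown can be checked with simple arithmetic. The figures below are the approximate values reported above; the variable names are illustrative, and the combined wall-clock time is derived here rather than reported.

```python
# Approximate HumanEval figures with GPT-4o-mini as executor (US dollars, minutes).
meta_agent_api_cost = 0.0      # the meta-agent incurs no API cost
optimization_exec_cost = 0.4   # executor calls during workflow optimization
test_exec_cost = 0.5           # executor calls on the test set

total_cost = round(meta_agent_api_cost + optimization_exec_cost + test_exec_cost, 1)

optimization_minutes = 33.0
test_minutes = 2.7
total_minutes = round(optimization_minutes + test_minutes, 1)  # derived, not reported

print(f"total cost: about {total_cost} dollars, total time: about {total_minutes} minutes")
```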

On math transfer, the meta-agent is trained on GSM Plus and MGSM with GPT-3.5-Turbo as executor, then evaluated on GSM8K, GSM Hard, and SVAMP. The paper reports 86.5 on GSM8K and 61.8 on GSM Hard, both above automated baselines. This indicates that the learned orchestration transfers to related tasks without retraining the executor.

Across seen tasks with GPT-4o-mini as executor, W4S surpasses training-free automated methods that do not learn a planner. The study also runs ablations where the meta-agent is trained by supervised fine-tuning rather than RLAO; the RLAO agent yields better accuracy under the same compute budget. The research team also includes a GRPO baseline on a 7B weak model for GSM Hard; W4S outperforms it under limited compute.

Iteration budgets matter. The research team sets W4S to about 10 optimization turns in the main tables, while AFlow runs about 20 turns and ADAS runs about 30 turns. Despite fewer turns, W4S achieves higher accuracy. This suggests that learned planning over code, combined with validation feedback, makes the search more sample efficient.

Key Takeaways

- W4S trains a 7B weak meta-agent with RLAO to write Python workflows that harness stronger executors, modeled as a multi-turn MDP.

- On HumanEval with GPT-4o-mini as executor, W4S reaches Pass@1 of 95.4, with about 33 minutes of optimization and about 0.9 dollars total cost, beating automated baselines under the same executor.

- Across 11 benchmarks, W4S improves over the strongest baseline by 2.9% to 24.6%, while avoiding fine-tuning of the strong model.

- The method runs an iterative loop: it generates a workflow, executes it on validation data, then refines it using feedback.

- ADAS and AFlow also program or search over code workflows; W4S differs by training a planner with offline reinforcement learning.

W4S targets orchestration, not model weights, and trains a 7B meta-agent to program workflows that call stronger executors. W4S formalizes workflow design as a multi-turn MDP and optimizes the planner with RLAO using offline trajectories and reward-weighted regression. Reported results show Pass@1 of 95.4 on HumanEval with GPT-4o-mini, average gains of 2.9% to 24.6% across 11 benchmarks, and about 1 GPU hour of training for the meta-agent. The framing compares cleanly with ADAS and AFlow, which search agent designs or code graphs, while W4S fixes the executor and learns the planner.
