TwinMind, a California-based Voice AI startup, has unveiled its Ear-3 speech-recognition model, claiming state-of-the-art performance on several key metrics and expanded multilingual support. The release positions Ear-3 as a competitive offering against existing ASR (Automatic Speech Recognition) solutions from providers like Deepgram, AssemblyAI, Eleven Labs, Otter, Speechmatics, and OpenAI.

Key Metrics

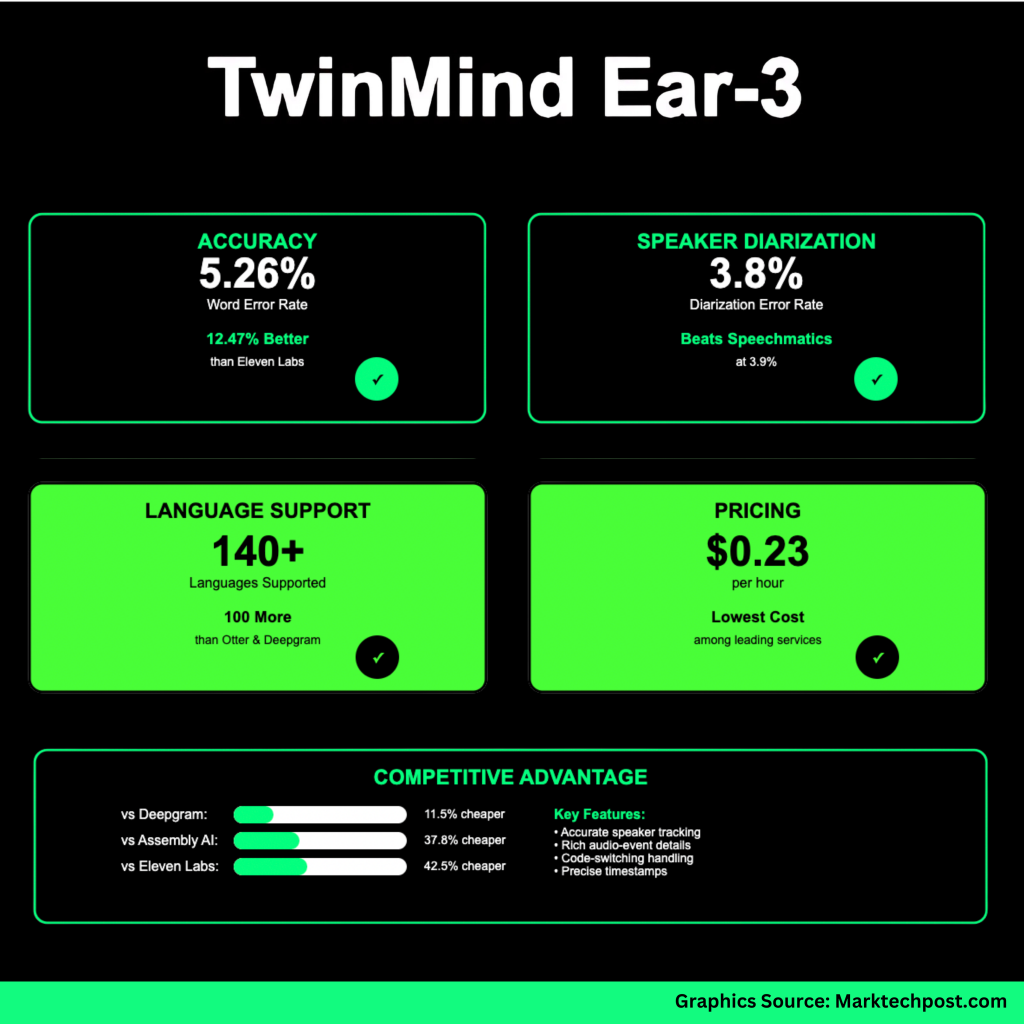

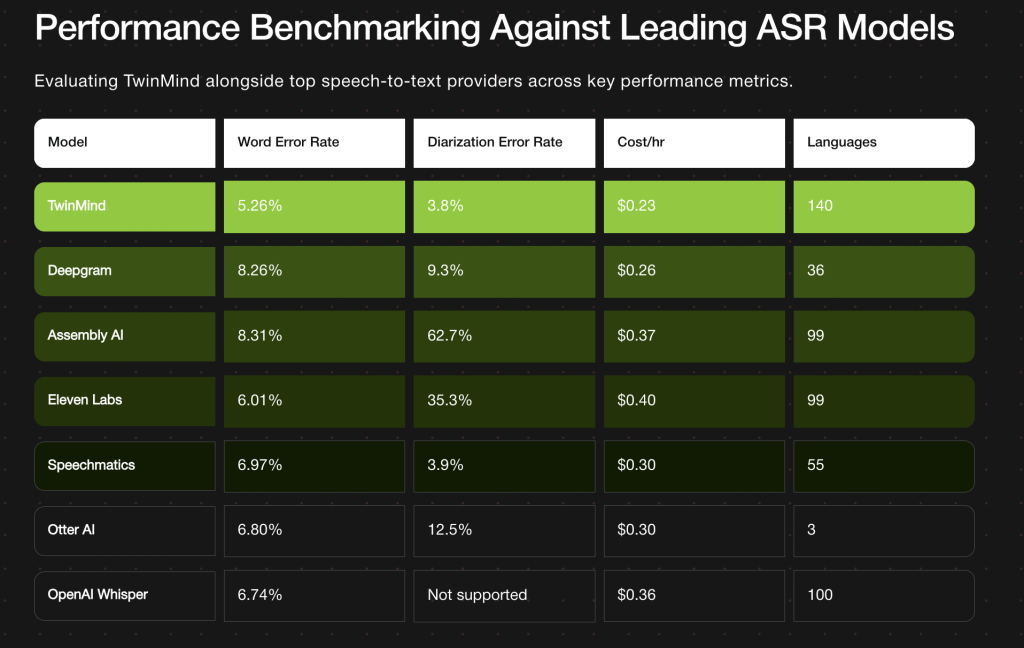

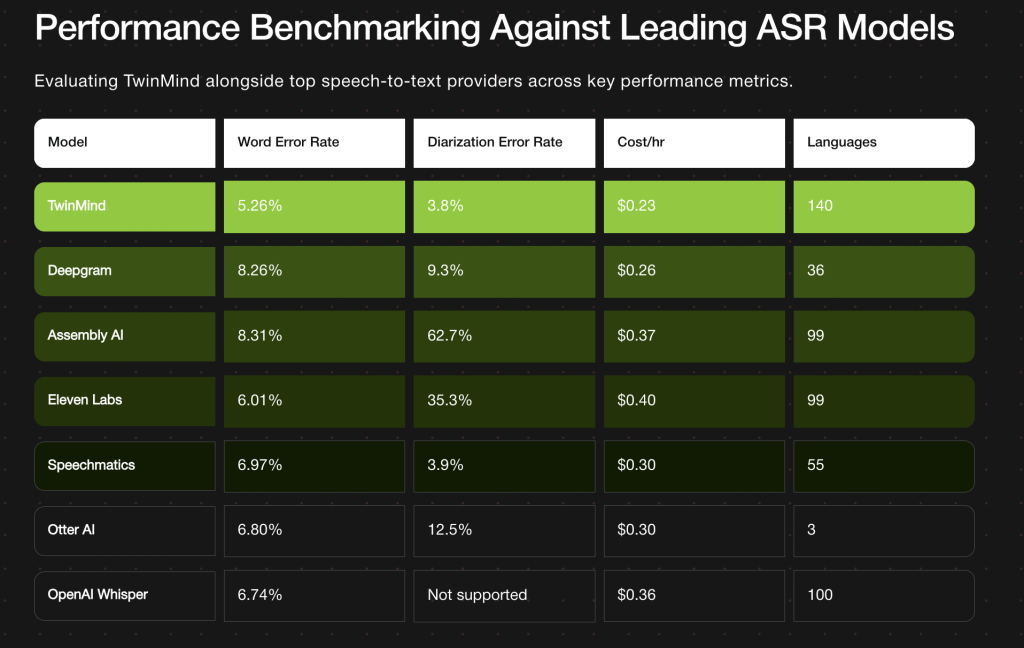

| Metric | TwinMind Ear-3 Result | Comparisons / Notes |
|---|---|---|
| Word Error Rate (WER) | 5.26% | Significantly lower than many competitors: Deepgram ~8.26%, AssemblyAI ~8.31%. |
| Speaker Diarization Error Rate (DER) | 3.8% | Slight improvement over the previous best from Speechmatics (~3.9%). |
| Language Support | 140+ languages | Over 40 more languages than many leading models; aims for “true global coverage.” |
| Cost per Hour of Transcription | US$0.23/hr | Positioned as the lowest among major services. |
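For readers unfamiliar with the metric, WER is conventionally computed as the word-level edit distance between a reference transcript and the system output, divided by the number of reference words. The following is a minimal sketch of that standard definition, not TwinMind's evaluation code; the function names and the sample sentences are illustrative assumptions.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] is the edit distance between the reference words seen so far
    # (before the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # substitution, or match when cost is 0
            )
        prev = curr
    return prev[-1] / len(ref)


def relative_reduction(baseline: float, candidate: float) -> float:
    """Fraction by which `candidate` improves on `baseline` (both error rates)."""
    return (baseline - candidate) / baseline


if __name__ == "__main__":
    # Hypothetical transcripts: one substitution and one dropped word.
    print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
    # Reported figures from the table above (Deepgram vs. Ear-3).
    print(relative_reduction(8.26, 5.26))
```

Applied to the figures in the table, `relative_reduction(8.26, 5.26)` comes out to roughly 0.36, i.e. about a 36% relative reduction in WER against the reported Deepgram number, assuming the two results were measured on comparable test sets.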

Technical Approach & Positioning

- TwinMind indicates Ear-3 is a “fine-tuned blend of several open-source models,” trained on a curated dataset containing human-annotated audio sources such as podcasts, videos, and films.

- Diarization and speaker labeling are improved via a pipeline that includes audio cleaning and enhancement before diarization, plus “precise alignment checks” to refine speaker boundary detection.

- The model handles code-switching and mixed scripts, which are typically difficult for ASR systems due to varied phonetics, accent variance, and linguistic overlap.

Trade-offs & Operational Details

- Ear-3 requires cloud deployment. Because of its model size and compute load, it cannot run fully offline. TwinMind’s Ear-2 (its previous model) remains the fallback when connectivity is lost.

- Privacy: TwinMind claims audio is not stored long-term; only transcripts are stored locally, with optional encrypted backups. Audio recordings are deleted “on the fly.”

- Platform integration: API access for the model is planned in the coming weeks for developers and enterprises. For end users, Ear-3 functionality will be rolled out to TwinMind’s iPhone, Android, and Chrome apps over the next month for Pro users.

Comparative Analysis & Implications

Ear-3’s WER and DER metrics put it ahead of many established models. Lower WER translates to fewer transcription errors (misrecognitions, dropped words, etc.), which is crucial for domains like legal, medical, lecture transcription, or archival of sensitive content. Similarly, lower DER (i.e. better speaker separation and labeling) matters for meetings, interviews, and podcasts: anything with multiple participants.
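DER is conventionally defined as the share of reference speech time that is missed, falsely detected, or attributed to the wrong speaker. The sketch below applies that standard formula; it is not TwinMind's scoring code, and the durations in the example are made-up values chosen only to land on a 3.8% rate.

```python
def diarization_error_rate(
    missed_speech: float,
    false_alarm: float,
    speaker_confusion: float,
    total_reference_speech: float,
) -> float:
    """DER = (missed + false alarm + confusion) / total reference speech.

    All arguments are durations in the same unit (e.g. seconds).
    """
    if total_reference_speech <= 0:
        raise ValueError("total_reference_speech must be positive")
    errors = missed_speech + false_alarm + speaker_confusion
    return errors / total_reference_speech


if __name__ == "__main__":
    # Hypothetical breakdown over 1000 s of reference speech.
    der = diarization_error_rate(
        missed_speech=12.0,
        false_alarm=8.0,
        speaker_confusion=18.0,
        total_reference_speech=1000.0,
    )
    print(f"DER: {der:.1%}")
```

Because false alarms count toward the numerator but not the denominator, DER can exceed 100% on very noisy audio.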

The price point of US$0.23/hr makes high-accuracy transcription more economically feasible for long-form audio (e.g. hours of meetings, lectures, recordings). Combined with support for over 140 languages, there is a clear push to make this usable in global settings, not just English-centric or well-resourced language contexts.
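To make the pricing concrete, here is a small back-of-the-envelope calculator. It assumes simple linear billing at the reported US$0.23 per audio hour; actual billing granularity, minimums, and volume tiers are not stated in the announcement, and the function name is illustrative.

```python
EAR3_RATE_USD_PER_HOUR = 0.23  # rate reported in the article


def transcription_cost(
    audio_hours: float,
    rate_per_hour: float = EAR3_RATE_USD_PER_HOUR,
) -> float:
    """Estimated cost in USD, assuming cost scales linearly with audio length."""
    if audio_hours < 0:
        raise ValueError("audio_hours must be non-negative")
    return round(audio_hours * rate_per_hour, 2)


if __name__ == "__main__":
    # Example workloads (hypothetical): a 100-hour lecture archive and
    # a 1000-hour meeting backlog.
    for hours in (100, 1000):
        print(f"{hours} h -> US${transcription_cost(hours):.2f}")
```

Under that assumption, a 100-hour archive would cost about US$23 to transcribe.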

However, cloud dependency could be a limitation for users needing offline or edge-device capabilities, or where data privacy or latency concerns are stringent. The complexity of supporting 140+ languages (accent drift, dialects, code-switching) may expose weaker areas under adverse acoustic conditions. Real-world performance may differ from results obtained under controlled benchmarking.

Conclusion

TwinMind’s Ear-3 model represents a strong technical claim: high accuracy, speaker diarization precision, extensive language coverage, and aggressive cost reduction. If the benchmarks hold in real usage, this could shift expectations for what “premium” transcription services should deliver.
