Spoken Dialogue Fashions (SDMs) are on the frontier of conversational AI, enabling seamless spoken interactions between people and machines. But, as SDMs develop into integral to digital assistants, good gadgets, and customer support bots, evaluating their true potential to deal with the real-world intricacies of human dialogue stays a big problem. A brand new analysis paper from China launched C3 benchmark immediately addresses this hole, offering a complete, bilingual analysis suite for SDMs—emphasizing the distinctive difficulties inherent in spoken conversations.

The Unexplored Complexity of Spoken Dialogue

Whereas text-based Giant Language Fashions (LLMs) have benefited from intensive benchmarking, spoken dialogues current a definite set of challenges:

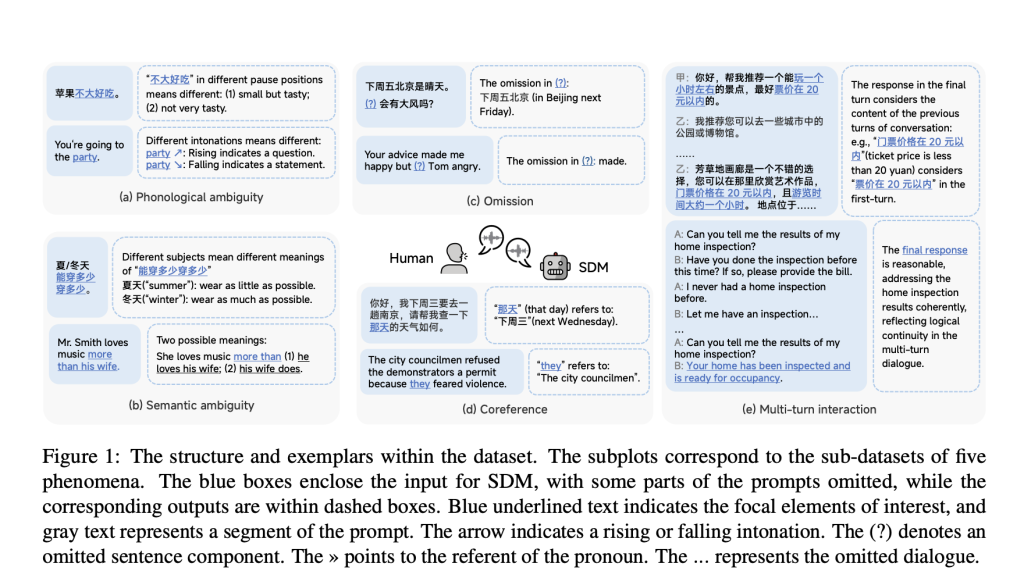

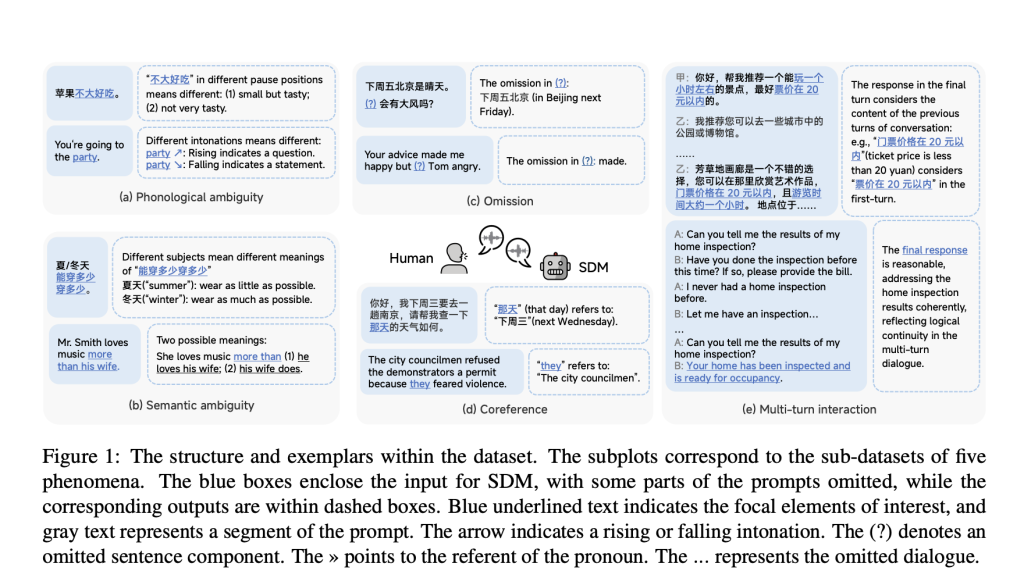

- Phonological Ambiguity: Variations in intonation, stress, pauses, and homophones can completely alter which means, particularly throughout languages with tonal components equivalent to Chinese language.

- Semantic Ambiguity: Phrases and sentences with a number of meanings (lexical and syntactic ambiguity) demand cautious disambiguation.

- Omission and Coreference: Audio system typically omit phrases or use pronouns, counting on context for understanding—a recurring problem for AI fashions.

- Multi-turn Interplay: Pure dialogue isn’t one-shot; understanding typically accumulates over a number of conversational turns, requiring strong reminiscence and coherent historical past monitoring.

Present benchmarks for SDMs are sometimes restricted to a single language, restricted to single-turn dialogues, and infrequently deal with ambiguity or context-dependency, leaving massive analysis gaps.

C3 Benchmark: Dataset Design and Scope

C3—“A Bilingual Benchmark for Spoken Dialogue Fashions Exploring Challenges in Advanced Conversations”—introduces:

- 1,079 situations throughout English and Chinese language, deliberately spanning 5 key phenomena:

- Phonological Ambiguity

- Semantic Ambiguity

- Omission

- Coreference

- Multi-turn Interplay

- Audio-text paired samples enabling true spoken dialogue analysis (with 1,586 pairs as a consequence of multi-turn settings).

- Cautious guide quality control: Audio is regenerated or human-voiced to make sure uniform timbre and take away background noise.

- Process-oriented directions crafted for every kind of phenomenon, urging SDMs to detect, interpret, resolve, and generate appropriately.

- Balanced protection of each languages, with Chinese language examples emphasizing tone and distinctive referential buildings not current in English.

Analysis Methodology: LLM-as-a-Decide and Human Alignment

The analysis crew introduces an revolutionary LLM-based automated analysis technique—utilizing robust LLMs (GPT-4o, DeepSeek-R1) to evaluate SDM responses, with outcomes carefully correlating with impartial human analysis (Pearson and Spearman > 0.87, p

- Automated Analysis: For many duties, output audio is transcribed and in comparison with reference solutions by the LLM. For phenomena solely discernible in audio (e.g., intonation), people annotate responses.

- Process-specific Metrics: For omission and coreference, each detection and determination accuracy are measured.

- Reliability Testing: A number of human raters and strong statistical validation verify that automated and human judges are extremely constant.

Benchmark Outcomes: Mannequin Efficiency and Key Findings

Outcomes from evaluating six state-of-the-art end-to-end SDMs throughout English and Chinese language reveal:

| Mannequin | Prime Rating (English) | Prime Rating (Chinese language) |

|---|---|---|

| GPT-4o-Audio-Preview | 55.68% | 29.45% |

| Qwen2.5-Omni | 51.91percent2 | 40.08% |

Evaluation by Phenomena:

- Ambiguity is More durable than Context-Dependency: SDMs rating considerably decrease on phonological and semantic ambiguity than on omission, coreference, or multi-turn duties—particularly in Chinese language, the place semantic ambiguity drops under 4% accuracy.

- Language Issues: All SDMs carry out higher on English than Chinese language in most classes. The hole persists even amongst fashions designed for each languages.

- Mannequin Variation: Some fashions (like Qwen2.5-Omni) excel at multi-turn and context monitoring, whereas others (like GPT-4o-Audio-Preview) dominate ambiguity decision in English.

- Omission and Coreference: Detection is normally simpler than decision/completion—demonstrating that recognizing an issue is distinct from addressing it.

Implications for Future Analysis

C3 conclusively demonstrates that:

- Present SDMs are removed from human-level in difficult conversational phenomena.

- Language-specific options (particularly tonal and referential points of Chinese language) require tailor-made modeling and analysis.

- Benchmarking should transfer past single-turn, ambiguity-free settings.

The open-source nature of C3, together with its strong bilingual design, gives the muse for the following wave of SDMs—enabling researchers and engineers to isolate and enhance on probably the most difficult points of spoken AI.2507.22968v1.pdf

Conclusion

The C3 benchmark marks an essential development in evaluating SDMs, pushing conversations past easy scripts towards the real messiness of human interplay. By rigorously exposing fashions to phonological, semantic, and contextual complexity in each English and Chinese language, C3 lays the groundwork for future techniques that may actually perceive—and take part in—advanced spoken dialogue.

Try the Paper and GitHub Web page. Be at liberty to take a look at our GitHub Web page for Tutorials, Codes and Notebooks. Additionally, be at liberty to comply with us on Twitter and don’t neglect to affix our 100k+ ML SubReddit and Subscribe to our E-newsletter.

Nikhil is an intern advisor at Marktechpost. He’s pursuing an built-in twin diploma in Supplies on the Indian Institute of Expertise, Kharagpur. Nikhil is an AI/ML fanatic who’s at all times researching functions in fields like biomaterials and biomedical science. With a robust background in Materials Science, he’s exploring new developments and creating alternatives to contribute.