In the early days of the AI shift, AI applications were largely built as thin layers on top of off-the-shelf foundation models. But as developers began tackling more complex use cases, they quickly ran into the limitations of simply layering retrieval-augmented generation (RAG) on top of off-the-shelf models. While this approach offered a fast path to production, it often fell short of the accuracy, reliability, efficiency, and engagement that more sophisticated use cases require.

However, this dynamic is shifting. As AI moves from assistive copilots to autonomous co-workers, the architecture behind these systems must evolve. Autonomous workflows, powered by real-time feedback and continuous learning, are becoming essential for productivity and decision-making. AI applications that incorporate continuous learning through real-time feedback loops, which we refer to as the ‘signals loop’, are emerging as the key to building differentiation that grows more adaptive and resilient over time.

Building truly effective AI apps and agents requires more than access to powerful LLMs. It demands a rethinking of AI architecture, one that places continuous learning and adaptation at its core. The signals loop centers on capturing user interactions and product usage data in real time, then systematically feeding that feedback back in to refine model behavior and evolve product features, so that applications get better over time.
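The capture-and-integrate cycle described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Microsoft's implementation: the `InteractionSignal` schema, the `SignalsLoop` class, and the prompt/completion example format are all hypothetical, chosen only to show how raw usage signals (accepts, rejects, user corrections) might be turned into fine-tuning data.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class InteractionSignal:
    """One captured user interaction (hypothetical schema)."""
    prompt: str
    model_output: str
    accepted: bool                   # did the user keep the output as-is?
    user_edit: Optional[str] = None  # the user's corrected version, if any


@dataclass
class SignalsLoop:
    """Collects interaction signals and turns them into fine-tuning examples."""
    signals: List[InteractionSignal] = field(default_factory=list)

    def capture(self, signal: InteractionSignal) -> None:
        # Step 1: record each user interaction as it happens.
        self.signals.append(signal)

    def to_training_examples(self) -> List[dict]:
        # Step 2: convert captured feedback into supervised fine-tuning pairs
        # (the prompt/completion format here is an assumption).
        examples = []
        for s in self.signals:
            if s.user_edit:
                # A user correction is the strongest signal: train toward it.
                examples.append({"prompt": s.prompt, "completion": s.user_edit})
            elif s.accepted:
                # An accepted output is kept as a positive example.
                examples.append({"prompt": s.prompt, "completion": s.model_output})
            # Rejected outputs with no correction are dropped in this sketch.
        return examples


if __name__ == "__main__":
    loop = SignalsLoop()
    loop.capture(InteractionSignal("Summarize the ticket", "Draft A", accepted=True))
    loop.capture(InteractionSignal("Write a reply", "Draft B", accepted=False, user_edit="Edited reply"))
    loop.capture(InteractionSignal("Suggest a title", "Draft C", accepted=False))
    print(len(loop.to_training_examples()))  # prints 2
```

The remaining step of the loop, fine-tuning on these examples and redeploying, depends on the training platform and is deliberately left out; a production system would also filter, deduplicate, and de-identify signals before training.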

As the rise of open-source frontier models democratizes access to model weights, fine-tuning (including reinforcement learning) is becoming more accessible, and building these loops is becoming more feasible. Capabilities like memory are also increasing the value of signals loops. These technologies enable AI systems to retain context and learn from user feedback, driving greater personalization and improving customer retention. And as the use of agents continues to grow, ensuring accuracy becomes even more critical, underscoring the growing importance of fine-tuning and of implementing a robust signals loop.

At Microsoft, we’ve seen the power of the signals loop approach firsthand. First-party products like Dragon Copilot and GitHub Copilot exemplify how signals loops can drive rapid product improvement, increased relevance, and long-term user engagement.

Implementing signals loops for continuous AI improvement: Insights from Dragon Copilot and GitHub Copilot

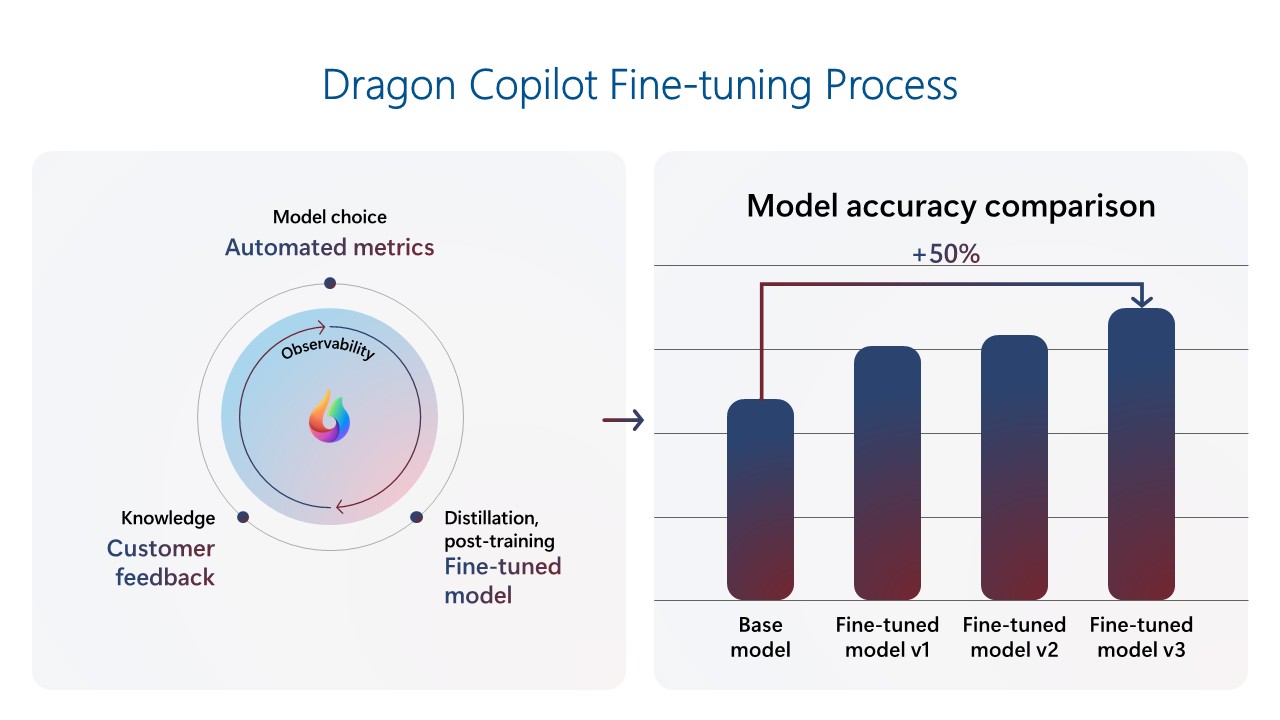

Dragon Copilot is a healthcare Copilot that helps doctors become more productive and deliver better patient care. The Dragon Copilot team has built a signals loop to drive continuous product improvement. The team built a fine-tuned model using a repository of clinical data, which performed much better than the base foundation model with prompting alone. As the product has gained usage, the team has used customer feedback telemetry to continuously refine the model. When new foundation models are released, they are evaluated with automated metrics to benchmark performance, and the production model is updated if they show significant gains. This loop creates compounding improvements with every model generation, which is especially important in a field where the demand for precision is extremely high. The latest models now outperform base foundation models by ~50%. This high performance helps clinicians focus on patients, capture the full patient story, and improve care quality by producing accurate, comprehensive documentation efficiently and consistently.
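The "evaluate new models and update only on significant gains" step above can be sketched as a simple promotion gate. This is a hypothetical illustration, not the Dragon Copilot team's actual pipeline: the function name `should_adopt`, the metric names, the assumption that higher scores are better, and the 5% relative-gain threshold are all placeholders chosen for the example.

```python
from statistics import mean
from typing import Dict, List


def should_adopt(
    current_scores: Dict[str, List[float]],
    candidate_scores: Dict[str, List[float]],
    min_relative_gain: float = 0.05,
) -> bool:
    """Return True only if the candidate model beats the current model on
    every benchmark metric by at least `min_relative_gain`.

    Assumptions (hypothetical): both dicts map the same metric names to
    per-example scores on a fixed evaluation set, and higher is better.
    """
    for metric, current in current_scores.items():
        current_mean = mean(current)
        candidate_mean = mean(candidate_scores[metric])
        # Require a meaningful relative improvement, not just any improvement,
        # so the production model is not churned for noise-level gains.
        if candidate_mean < current_mean * (1 + min_relative_gain):
            return False
    return True


if __name__ == "__main__":
    current = {"accuracy": [0.70, 0.72, 0.74], "completeness": [0.80, 0.80]}
    candidate = {"accuracy": [0.80, 0.82, 0.84], "completeness": [0.85, 0.87]}
    print(should_adopt(current, candidate))  # prints True
```

Requiring a gain on every metric is a conservative design choice that blocks a model which improves one dimension while regressing another; a real gate would more likely add statistical significance tests and per-metric thresholds.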

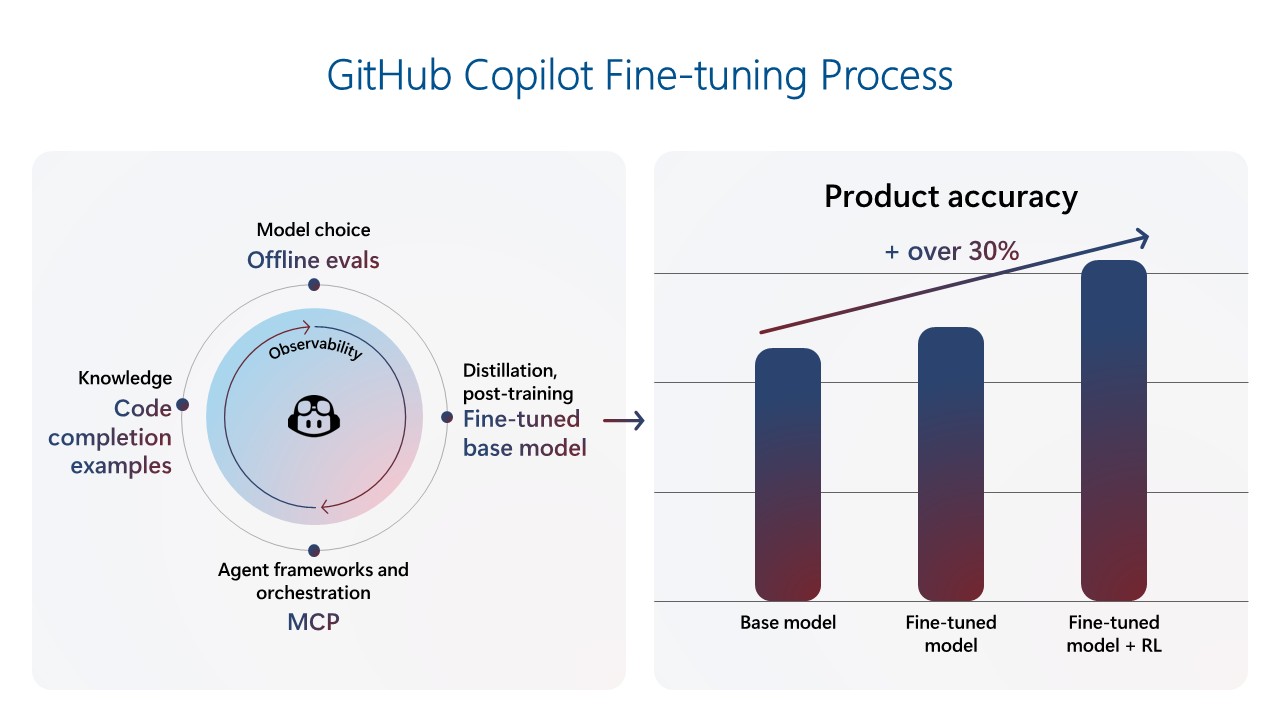

GitHub Copilot was the first Microsoft Copilot, capturing widespread attention and setting the standard for what AI-powered assistance could look like. In its first year, it rapidly grew to over a million users, and it has now reached more than 20 million users. As expectations for code suggestion quality and relevance continue to rise, the GitHub Copilot team has shifted its focus to building a robust mid-training and post-training environment, enabling a signals loop that delivers Copilot innovations through continuous fine-tuning. The latest code completions model was trained on over 400,000 real-world samples from public repositories and further tuned via reinforcement learning using hand-crafted, synthetic training data. Alongside this new model, the team introduced several client-side and UX changes, achieving an improvement of over 30% in retained code for completions and a 35% improvement in speed. These improvements allow GitHub Copilot to anticipate developer needs and act as a proactive coding partner.

Key implications for the future of AI: Fine-tuning, feedback loops, and speed matter

The experiences of Dragon Copilot and GitHub Copilot underscore a fundamental shift in how differentiated AI products will be built and scaled going forward. A few key implications emerge:

- Fine-tuning is not optional but strategically important: Fine-tuning is no longer a niche technique but a core capability that unlocks significant performance improvements. Across our products, fine-tuning has led to dramatic gains in accuracy and feature quality. As open-source models democratize access to foundational capabilities, the ability to fine-tune for specific use cases will increasingly define product excellence.

- Feedback loops can generate continuous improvement: As foundation models become increasingly commoditized, the long-term defensibility of AI products will come not from the model alone but from how effectively those models learn from usage. The signals loop, powered by real-world user interactions and fine-tuning, enables teams to deliver high-performing experiences that keep improving over time.

- Companies must evolve to support iteration at scale, and speed will be key: Building a system that supports frequent model updates requires adjusting data pipelines, fine-tuning, evaluation loops, and team workflows. Engineering and product organizations must align around fast iteration and fine-tuning, telemetry analysis, synthetic data generation, and automated evaluation frameworks to keep up with user needs and model capabilities. Organizations that evolve their systems and tools to rapidly incorporate signals, from telemetry to human feedback, will be best positioned to lead. Azure AI Foundry provides the essential components needed to support this continuous model and product improvement.

- Agents require intentional design and continuous adaptation: Building agents goes beyond model selection. It demands thoughtful orchestration of memory, reasoning, and feedback mechanisms. Signals loops enable agents to evolve from reactive assistants into proactive co-workers that learn from interactions and improve over time. Azure AI Foundry provides the infrastructure to support this evolution, helping teams design agents that act, adapt dynamically, and deliver sustained value.

In the early days of AI, fine-tuning was not economical and required a lot of time and effort. The rise of open-source frontier models and techniques like LoRA (low-rank adaptation) and distillation has made tuning cheaper, and tools have become easier to use. As a result, fine-tuning is accessible to more organizations than ever before. While out-of-the-box models have a role to play in horizontal workloads like knowledge search or customer service, organizations are increasingly experimenting with fine-tuning for industry- and domain-specific scenarios, adding their domain-specific data to their products and models.
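A short worked example helps show why LoRA makes tuning cheaper. LoRA freezes a pretrained weight matrix $W_0 \in \mathbb{R}^{d \times k}$ and trains only a low-rank update $BA$. The sizes below ($d = k = 4096$, rank $r = 8$) are illustrative assumptions for a single weight matrix, not figures from the products discussed above:

```latex
\begin{aligned}
W &= W_0 + \Delta W = W_0 + BA,
  \quad B \in \mathbb{R}^{d \times r},\ A \in \mathbb{R}^{r \times k},\ r \ll \min(d, k) \\
\text{full fine-tuning: } dk &= 4096 \times 4096 = 16{,}777{,}216 \text{ trainable parameters} \\
\text{LoRA: } r(d + k) &= 8 \times (4096 + 4096) = 65{,}536 \text{ trainable parameters} \\
\frac{dk}{r(d + k)} &= \frac{16{,}777{,}216}{65{,}536} = 256
\end{aligned}
```

Under these assumptions, LoRA trains 256 times fewer parameters for that matrix, which shrinks gradient and optimizer-state memory accordingly; the frozen base weights still have to be loaded for the forward pass.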

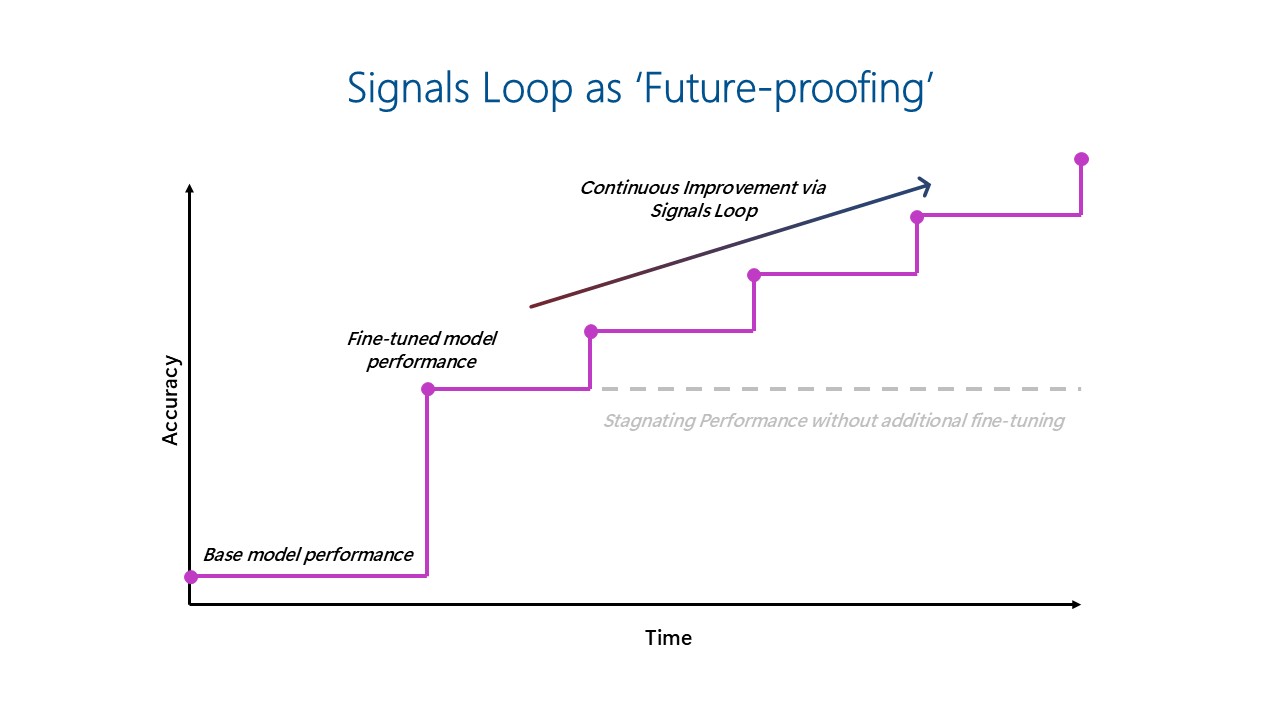

The signals loop ‘future-proofs’ AI investments by enabling models to keep improving over time as usage data is fed back into the fine-tuned model, preventing performance from stagnating.

Build adaptive AI experiences with Azure AI Foundry

To simplify the implementation of fine-tuning feedback loops, Azure AI Foundry offers industry-leading fine-tuning capabilities through a unified platform that streamlines the entire AI lifecycle, from model selection to deployment, while embedding enterprise-grade compliance and governance. This empowers teams to build, adapt, and scale AI solutions with confidence and control.

Here are four key reasons why fine-tuning on Azure AI Foundry stands out:

- Model choice: Access a broad portfolio of open and proprietary models from leading providers, with the flexibility to choose between serverless and managed compute options.

- Reliability: Rely on 99.9% availability for Azure OpenAI models and benefit from latency guarantees with provisioned throughput units (PTUs).

- Unified platform: Use an end-to-end environment that brings together models, training, evaluation, deployment, and performance metrics, all in one place.

- Scalability: Start small with a cost-effective Developer Tier for experimentation and scale seamlessly to production workloads using PTUs.

Join us in building the future of AI, where copilots become co-workers and workflows become self-improving engines of productivity.
