Data preprocessing removes errors, fills in missing data, and standardizes data so that algorithms can find real patterns instead of being confused by noise or inconsistencies.

Any algorithm needs properly cleaned data, organized in a structured format, before it can learn from that data. Data preprocessing is a fundamental step in the machine learning process: it keeps models accurate, effective, and dependable.

Good preprocessing turns basic data collections into meaningful insights and reliable outcomes for every machine learning project. This article walks you through the key steps of data preprocessing for machine learning, from cleaning and transforming data to real-world tools, challenges, and tips to improve model performance.

Understanding Raw Data

Raw data is the starting point for any machine learning project, and understanding its nature is fundamental.

Working with raw data can be messy. It often comes with noise: irrelevant or misleading entries that can skew results.

Missing values are another problem, especially when sensors fail or inputs are skipped. Inconsistent formats also show up often: date fields may use different styles, or categorical data might be entered in various ways (e.g., “Yes,” “Y,” “1”).

Recognizing and addressing these issues is essential before feeding the data into any machine learning algorithm. Clean input leads to smarter output.
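A minimal pandas sketch of spotting these issues, assuming a small hypothetical table (the column names and the yes/no mapping are illustrative, not from a real dataset): it counts missing values and collapses “Yes,” “Y,” and “1” into a single canonical value.

```python
import numpy as np
import pandas as pd

# Hypothetical raw records: one failed sensor reading and one answer
# recorded three different ways.
raw = pd.DataFrame({
    "sensor_reading": [21.5, np.nan, 22.1, 21.9],
    "subscribed": ["Yes", "Y", "1", "No"],
})

# Count missing values per column before deciding how to handle them.
missing_counts = raw.isna().sum()

# Map inconsistent spellings onto one canonical boolean value.
# This mapping is an assumption about what each code means.
canonical = {"yes": True, "y": True, "1": True, "no": False, "n": False, "0": False}
raw["subscribed"] = raw["subscribed"].str.strip().str.lower().map(canonical)

print(missing_counts["sensor_reading"])  # 1
print(raw["subscribed"].tolist())        # [True, True, True, False]
```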

Data Preprocessing in Data Mining vs Machine Learning

While both data mining and machine learning rely on preprocessing to prepare data for analysis, their goals and processes differ.

In data mining, preprocessing focuses on making large, unstructured datasets usable for pattern discovery and summarization. This includes cleaning, integration, and transformation, as well as formatting data for querying, clustering, or association rule mining, tasks that don’t always require model training.

Unlike machine learning, where preprocessing often centers on improving model accuracy and reducing overfitting, data mining aims for interpretability and descriptive insights. Feature engineering is less about prediction and more about discovering meaningful trends.

Additionally, data mining workflows may include discretization and binning more frequently, particularly for categorizing continuous variables. While ML preprocessing may stop once the training dataset is ready, data mining may loop back into iterative exploration.

Thus, the preprocessing goals (insight extraction versus predictive performance) set the tone for how the data is shaped in each field.

Core Steps in Data Preprocessing

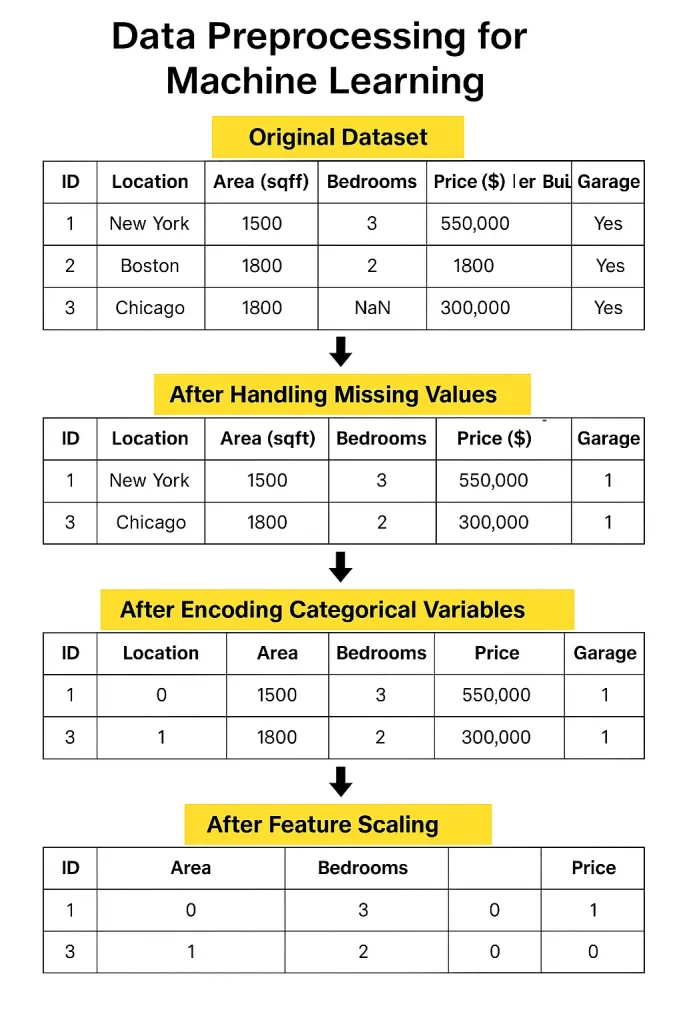

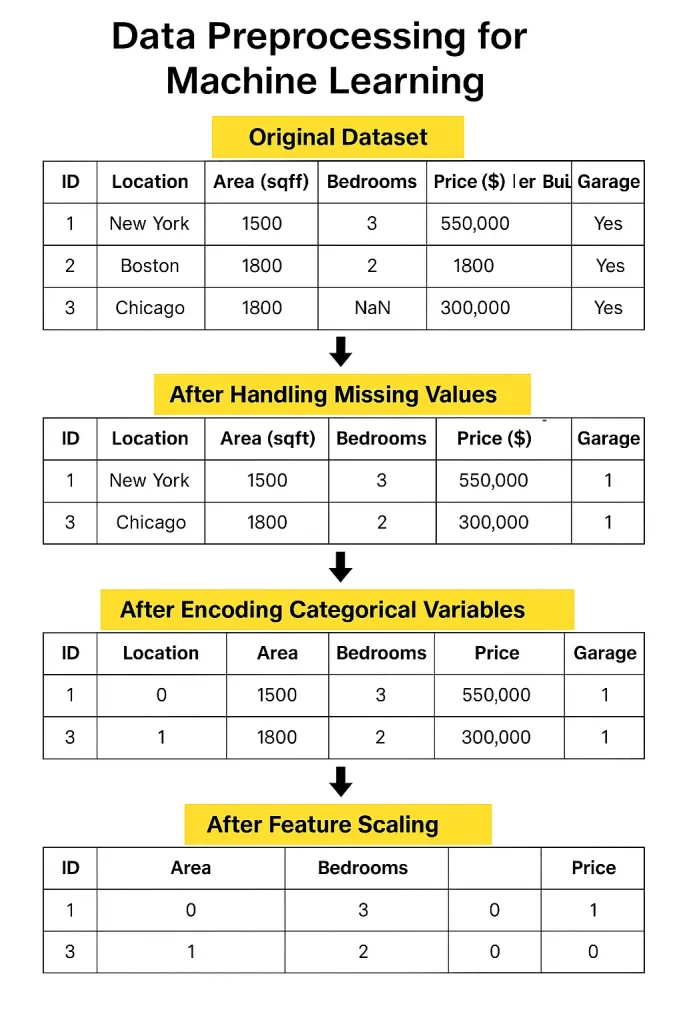

1. Data Cleaning

Real-world data often comes with missing values, blanks in your spreadsheet that must be filled in or carefully removed.

Then there are duplicates, which can unfairly weight your results. And don’t forget outliers: extreme values that can pull your model in the wrong direction if left unchecked.

These can throw off your model, so you may need to cap, transform, or exclude them.
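The three cleaning steps can be sketched in pandas as follows. The table is hypothetical, median imputation is only one of several reasonable fill strategies, and the 1.5 × IQR capping rule is an assumed threshold rather than a universal standard.

```python
import numpy as np
import pandas as pd

# Hypothetical data: a duplicated row, a missing value, and an extreme outlier.
df = pd.DataFrame({
    "customer_id": [1, 2, 2, 3, 4, 5],
    "monthly_spend": [40.0, 55.0, 55.0, np.nan, 48.0, 900.0],
})

# 1. Drop exact duplicate rows so no record is counted twice.
df = df.drop_duplicates()

# 2. Fill the missing value with the column median (dropping the row is the alternative).
df["monthly_spend"] = df["monthly_spend"].fillna(df["monthly_spend"].median())

# 3. Cap outliers at 1.5 * IQR beyond the first and third quartiles.
q1, q3 = df["monthly_spend"].quantile([0.25, 0.75])
iqr = q3 - q1
df["monthly_spend"] = df["monthly_spend"].clip(lower=q1 - 1.5 * iqr, upper=q3 + 1.5 * iqr)

print(df["monthly_spend"].tolist())  # [40.0, 55.0, 51.5, 48.0, 65.5]
```

Capping keeps the row but limits its influence; excluding the row instead is equally valid when the outlier is a known data-entry error.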

2. Data Transformation

Once the data is cleaned, you need to format it. If your numbers vary wildly in range, normalization or standardization helps scale them consistently.
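As a rough illustration with scikit-learn’s `StandardScaler` and `MinMaxScaler`, assuming two hypothetical features on very different scales: standardization gives each column mean 0 and unit variance, while min-max normalization rescales each column to the range [0, 1].

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical features: age in years and income in dollars.
X = np.array([[25, 40_000], [35, 60_000], [45, 80_000]], dtype=float)

# Standardization: subtract each column's mean, divide by its standard deviation.
X_std = StandardScaler().fit_transform(X)

# Normalization (min-max scaling): map each column's min to 0 and max to 1.
X_minmax = MinMaxScaler().fit_transform(X)
```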

Categorical data, like country names or product types, must be converted into numbers through encoding.
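One common encoding is one-hot encoding, sketched here with scikit-learn’s `OneHotEncoder` on a hypothetical country column. Each category becomes its own 0/1 column, and `handle_unknown="ignore"` encodes a category never seen during fitting as all zeros instead of raising an error.

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

# Hypothetical single categorical column (2-D, as scikit-learn expects).
countries = np.array([["France"], ["Japan"], ["France"], ["Brazil"]])

encoder = OneHotEncoder(handle_unknown="ignore")
# fit_transform returns a sparse matrix by default; toarray() makes it dense.
encoded = encoder.fit_transform(countries).toarray()

# Categories are sorted: Brazil, France, Japan -> one column each.
print(encoded)
```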

And for some datasets, it helps to group similar values into bins to reduce noise and highlight patterns.
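Binning can be sketched with `pandas.cut`. The age bin edges and labels below are hand-picked assumptions for illustration; in practice they should reflect your domain.

```python
import pandas as pd

ages = pd.Series([23, 37, 45, 61, 70, 18])

# right=False makes each bin include its left edge, so 30 falls into "30-49".
age_group = pd.cut(
    ages,
    bins=[0, 30, 50, 65, 120],
    labels=["<30", "30-49", "50-64", "65+"],
    right=False,
)

print(age_group.astype(str).tolist())  # ['<30', '30-49', '30-49', '50-64', '65+', '<30']
```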

3. Data Integration

Often, your data will come from different places: files, databases, or online tools. Merging it all can be tricky, especially if the same piece of information looks different in each source.

Schema conflicts, where the same column has different names or formats in different sources, are common and need careful resolution.
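A minimal pandas sketch of resolving a schema conflict before merging, assuming two hypothetical sources that store the same customer key under different names and types. `indicator=True` adds a `_merge` column showing which rows matched, which is a quick way to catch keys that failed to line up.

```python
import pandas as pd

# Hypothetical sources: the key is "CustomerID" (zero-padded text) in one
# and "cust_id" (integer) in the other.
crm = pd.DataFrame({"CustomerID": ["001", "002", "003"], "Country": ["FR", "JP", "BR"]})
billing = pd.DataFrame({"cust_id": [1, 2, 4], "amount": [40.0, 55.0, 30.0]})

# Align the key's name and dtype in both sources.
crm = crm.rename(columns={"CustomerID": "customer_id"})
crm["customer_id"] = crm["customer_id"].astype(int)
billing = billing.rename(columns={"cust_id": "customer_id"})

# Outer join keeps unmatched rows from both sides; _merge labels their origin.
merged = crm.merge(billing, on="customer_id", how="outer", indicator=True)
print(merged[["customer_id", "_merge"]])
```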

4. Data Reduction

Big data can overwhelm models and increase processing time. Selecting only the most useful features, reducing dimensions with techniques like PCA, or working with a representative sample makes your model faster and often more accurate.
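Two of these reduction techniques can be sketched with scikit-learn on its bundled Iris dataset (chosen here only as a small example): `SelectKBest` keeps the features most associated with the target, while `PCA` projects standardized features onto a smaller number of components. Keeping two of four features or components is an arbitrary choice for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)  # 150 rows, 4 numeric features

# Feature selection: keep the 2 features with the highest ANOVA F-score.
X_selected = SelectKBest(score_func=f_classif, k=2).fit_transform(X, y)

# Dimensionality reduction: standardize, then project onto 2 principal components.
X_scaled = StandardScaler().fit_transform(X)
pca = PCA(n_components=2)
X_pca = pca.fit_transform(X_scaled)

# Fraction of the original variance the 2 components retain.
retained = pca.explained_variance_ratio_.sum()
print(f"Variance retained: {retained:.1%}")
```

Feature selection keeps original, interpretable columns; PCA creates new combined columns, which can compress more information but are harder to explain.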

Tools and Libraries for Preprocessing

- Scikit-learn is great for most basic preprocessing tasks. It has built-in tools to fill missing values, scale features, encode categories, and select important features. It’s a solid, beginner-friendly library with everything you need to get started.

- Pandas is another essential library. It’s extremely useful for exploring and manipulating data.

- TensorFlow Data Validation can be helpful when you’re working on large-scale projects. It checks for data issues and ensures your input follows the correct structure, something that’s easy to overlook.

- DVC (Data Version Control) is great when your project grows. It keeps track of the different versions of your data and preprocessing steps so you don’t lose your work or break things during collaboration.
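To show how scikit-learn’s building blocks fit together, here is a sketch that combines imputation, scaling, and one-hot encoding in a single `ColumnTransformer`. The column names and data are hypothetical, and the median and most-frequent imputation strategies are assumptions you would tune for your own data.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical column names; adapt them to your own dataset.
numeric_cols = ["age", "income"]
categorical_cols = ["country"]

preprocess = ColumnTransformer([
    # Numeric columns: fill gaps with the median, then standardize.
    ("num", Pipeline([
        ("impute", SimpleImputer(strategy="median")),
        ("scale", StandardScaler()),
    ]), numeric_cols),
    # Categorical columns: fill gaps with the most frequent value, then one-hot encode.
    ("cat", Pipeline([
        ("impute", SimpleImputer(strategy="most_frequent")),
        ("encode", OneHotEncoder(handle_unknown="ignore")),
    ]), categorical_cols),
])

df = pd.DataFrame({
    "age": [25, np.nan, 47, 35],
    "income": [40_000, 52_000, np.nan, 61_000],
    "country": ["FR", "JP", np.nan, "FR"],
})

# 2 scaled numeric columns + 2 one-hot columns (FR, JP).
X = preprocess.fit_transform(df)
```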

Common Challenges

One of the biggest challenges today is managing large-scale data. When you have millions of rows arriving from different sources every day, organizing and cleaning them all becomes a serious task.

Tackling these challenges requires good tools, solid planning, and constant monitoring.
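One common technique for data that doesn’t fit in memory is processing it in chunks. The sketch below uses the `chunksize` parameter of `pandas.read_csv`, with an in-memory string standing in for a large file (an assumption for illustration); it accumulates summary statistics without loading every row at once.

```python
import io

import pandas as pd

# Stand-in for a very large CSV; in practice you would pass a file path.
csv_data = io.StringIO("id,value\n1,10\n2,\n3,30\n4,\n5,50\n")

total_rows = 0
missing_values = 0
value_sum = 0.0
# chunksize makes read_csv yield DataFrames of at most 2 rows each,
# so the whole file never has to sit in memory at once.
for chunk in pd.read_csv(csv_data, chunksize=2):
    total_rows += len(chunk)
    missing_values += int(chunk["value"].isna().sum())
    value_sum += chunk["value"].sum()  # Series.sum() skips NaN by default

print(total_rows, missing_values, value_sum)  # 5 2 90.0
```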

Another significant issue is automating preprocessing pipelines. In theory, it sounds great: just set up a flow to clean and prepare your data automatically.

But in reality, datasets vary, and rules that work for one might break down for another. You still need a human eye to check edge cases and make judgment calls. Automation helps, but it’s not always plug-and-play.

Even if you start with clean data, things change: formats shift, sources update, and errors sneak in. Without regular checks, your once-perfect data can slowly degrade, leading to unreliable insights and poor model performance.
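Regular checks can start as simple as a function run on every incoming batch. The sketch below is a hypothetical `validate_batch` helper, not part of any library; the expected columns, dtypes, and 5% missing-value limit are assumptions. Dedicated tools such as TensorFlow Data Validation cover the same idea at scale.

```python
import pandas as pd

# Hypothetical expectations for incoming batches; adjust to your own data.
EXPECTED_COLUMNS = {"customer_id": "int64", "monthly_spend": "float64"}
MAX_MISSING_FRACTION = 0.05


def validate_batch(df: pd.DataFrame) -> list[str]:
    """Return a list of problems found in a batch (an empty list means it passed)."""
    problems = []
    # Schema check: every expected column is present with the expected dtype.
    for col, dtype in EXPECTED_COLUMNS.items():
        if col not in df.columns:
            problems.append(f"missing column: {col}")
        elif str(df[col].dtype) != dtype:
            problems.append(f"{col}: expected {dtype}, got {df[col].dtype}")
    # Completeness check: the share of missing values stays under the limit.
    for col in df.columns.intersection(list(EXPECTED_COLUMNS)):
        frac = df[col].isna().mean()
        if frac > MAX_MISSING_FRACTION:
            problems.append(f"{col}: {frac:.0%} missing")
    # Range check: spending should never be negative.
    if "monthly_spend" in df.columns and pd.api.types.is_numeric_dtype(df["monthly_spend"]):
        if (df["monthly_spend"] < 0).any():
            problems.append("monthly_spend: negative values")
    return problems


good = pd.DataFrame({"customer_id": [1, 2], "monthly_spend": [10.0, 20.0]})
print(validate_batch(good))  # []
```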

Best Practices

Here are a few best practices that can make a big difference in your model’s success. Let’s break them down and look at how they play out in real-world situations.

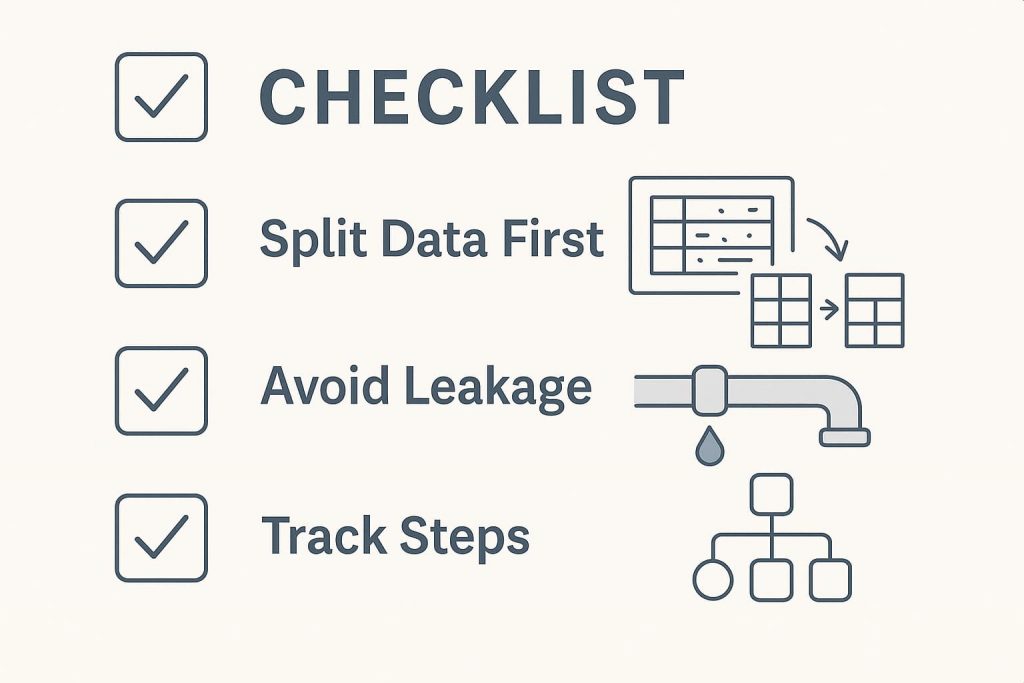

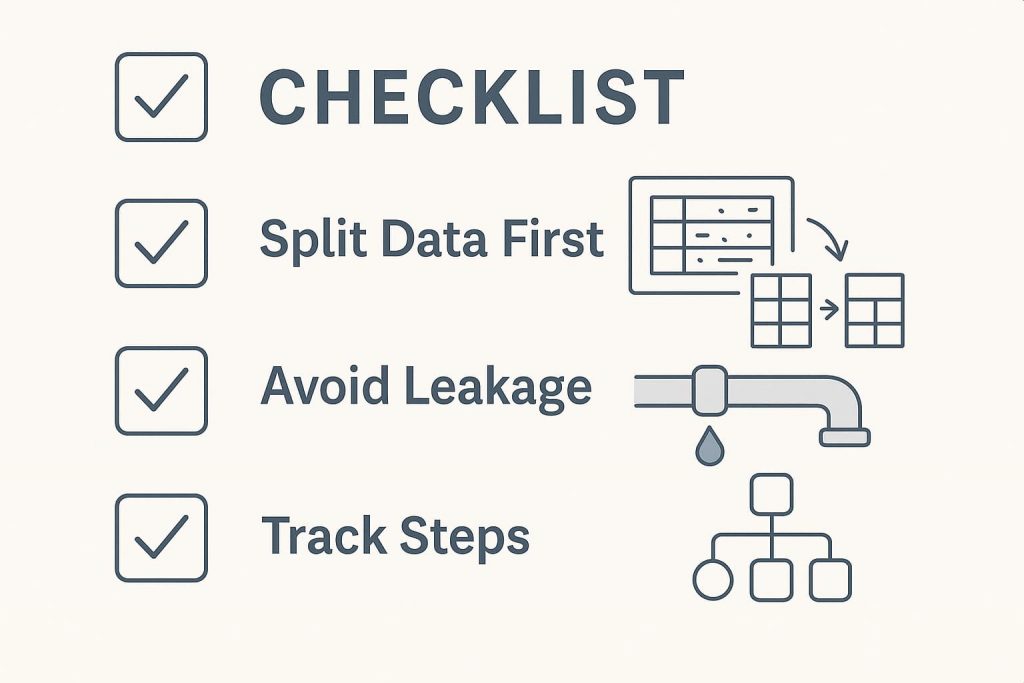

1. Start With a Proper Data Split

A mistake many beginners make is doing all the preprocessing on the full dataset before splitting it into training and test sets. This approach can accidentally introduce bias.

For example, if you scale or normalize the entire dataset before the split, information from the test set can bleed into the training process. This is called data leakage.

Always split your data first, then fit your preprocessing steps on the training set only. Afterward, transform the test set using the same learned parameters (like the mean and standard deviation). This keeps things fair and ensures your evaluation is honest.
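Here is a minimal scikit-learn sketch of this order of operations on synthetic data. The scaler learns its mean and standard deviation from the training rows only and then reuses those values on the test rows.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
X = rng.normal(loc=50, scale=10, size=(200, 3))  # synthetic features
y = rng.integers(0, 2, size=200)                 # synthetic labels

# 1. Split first, so the test rows play no part in fitting anything.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# 2. Fit the scaler on the training set only.
scaler = StandardScaler().fit(X_train)

# 3. Apply the same learned mean and standard deviation to both sets.
X_train_scaled = scaler.transform(X_train)
X_test_scaled = scaler.transform(X_test)
```

The test set will usually not have a mean of exactly zero after scaling. That is expected, because it mirrors how unseen data behaves in production.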

2. Avoiding Data Leakage

Data leakage is sneaky and one of the fastest ways to ruin a machine learning model. It happens when the model learns from information it wouldn’t have access to in a real-world situation, which amounts to cheating.

Common causes include using target labels in feature engineering or letting future data influence current predictions. The key is to always think about what information your model would realistically have at prediction time and limit it to that.
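Two scikit-learn habits help guard against both causes, sketched here on synthetic, time-ordered data. Wrapping preprocessing in a `Pipeline` means it is refit inside every cross-validation fold, and `TimeSeriesSplit` only ever validates on rows that come after the training rows. The Ridge model and its `alpha` are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import TimeSeriesSplit, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic, time-ordered data: row i is observed before row i + 1.
rng = np.random.default_rng(0)
X = rng.normal(size=(120, 3))
y = X @ np.array([1.5, -2.0, 0.5]) + rng.normal(scale=0.1, size=120)

# The scaler is refit on each training fold, so validation-fold
# statistics never leak into training.
model = make_pipeline(StandardScaler(), Ridge(alpha=1.0))

# Each split trains on earlier rows and validates on later ones.
cv = TimeSeriesSplit(n_splits=3)
scores = cross_val_score(model, X, y, cv=cv)  # R^2 per fold
print(scores.round(3))
```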

3. Track Every Step

As you move through your preprocessing pipeline (handling missing values, encoding variables, scaling features), keeping track of your actions is essential, not just for your own memory but also for reproducibility.

Documenting every step ensures others (or future you) can retrace your path. Tools like DVC (Data Version Control) or a simple Jupyter notebook with clear annotations can make this easier. This kind of tracking also helps when your model performs unexpectedly: you can go back and figure out what went wrong.
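One lightweight way to make preprocessing reproducible is to save the fitted pipeline itself alongside a readable summary of its steps. This sketch uses `joblib` (installed as a scikit-learn dependency) and writes to a temporary folder that stands in for a versioned project directory; the file names are hypothetical. Files like these are what a tool such as DVC would then version.

```python
import json
import tempfile
from pathlib import Path

import joblib
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X_train = np.array([[1.0, 200.0], [np.nan, 220.0], [3.0, np.nan], [4.0, 260.0]])

pipeline = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
]).fit(X_train)

out_dir = Path(tempfile.mkdtemp())  # stand-in for a versioned project folder

# Save the fitted pipeline so the exact same transformations can be reapplied.
joblib.dump(pipeline, out_dir / "preprocess.joblib")

# Record each step's name and the class it uses in a human-readable file.
steps = {name: type(step).__name__ for name, step in pipeline.steps}
(out_dir / "preprocess_steps.json").write_text(json.dumps(steps, indent=2))

# Later (or on another machine): reload and reuse the identical preprocessing.
reloaded = joblib.load(out_dir / "preprocess.joblib")
```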

Real-World Examples

To see how much of a difference preprocessing makes, consider a case study involving customer churn prediction at a telecom company. Initially, their raw dataset included missing values, inconsistent formats, and redundant features. The first model trained on this messy data barely reached 65% accuracy.

After applying proper preprocessing (imputing missing values, encoding categorical variables, normalizing numerical features, and removing irrelevant columns), accuracy rose to over 80%. The improvement came not from the algorithm but from the data quality.

Another great example comes from healthcare. A team working on predicting heart disease used a public dataset that included mixed data types and missing fields.

They applied binning to age groups, handled outliers using RobustScaler, and one-hot encoded several categorical variables. After preprocessing, the model’s accuracy improved from 72% to 87%, showing that how you prepare your data often matters more than which algorithm you choose.
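The following example is not the team’s code, but it illustrates why `RobustScaler` suits outlier-heavy columns. It uses hypothetical cholesterol-style readings with one extreme value. `RobustScaler` centers on the median and divides by the interquartile range, while `StandardScaler` uses the mean and standard deviation, both of which the outlier inflates.

```python
import numpy as np
from sklearn.preprocessing import RobustScaler, StandardScaler

# Hypothetical readings with one extreme outlier (600).
values = np.array([[180.0], [190.0], [200.0], [210.0], [220.0], [600.0]])

# Median = 205, IQR = 217.5 - 192.5 = 25, so 180 -> -1.0 and 220 -> 0.6.
robust = RobustScaler().fit_transform(values)

# The outlier drags the mean and standard deviation up, squeezing
# the five typical values into a narrow band.
standard = StandardScaler().fit_transform(values)

print(robust.ravel().round(2))
print(standard.ravel().round(2))
```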

In short, preprocessing is the foundation of any machine learning project. Follow best practices, keep things clean, and don’t underestimate its impact. When done right, it can take your model from average to exceptional.

Frequently Asked Questions (FAQs)

1. Is preprocessing different for deep learning?

Yes, but only slightly. Deep learning still needs clean data, but it relies less on manually engineered features.

2. How much preprocessing is too much?

If it removes meaningful patterns or hurts model accuracy, you’ve likely overdone it.

3. Can preprocessing be skipped with enough data?

No. More data helps, but poor-quality input still leads to poor results.

4. Do all models need the same preprocessing?

No. Each algorithm has different sensitivities. What works for one may not suit another.

5. Is normalization always necessary?

Mostly, yes, especially for distance-based algorithms like KNN or SVMs. Tree-based models are a common exception, because their splits don’t depend on feature scale.

6. Can you fully automate preprocessing?

Not completely. Tools help, but human judgment is still needed for context and validation.

7. Why track preprocessing steps?

It ensures reproducibility and helps identify what’s improving or hurting performance.

Conclusion

Data preprocessing isn’t just a preliminary step; it’s the bedrock of good machine learning. Clean, consistent data leads to models that are not only accurate but also trustworthy. From removing duplicates to choosing the right encoding, every step matters. Skipping or mishandling preprocessing often leads to noisy results or misleading insights.

And as data challenges evolve, a solid grasp of theory and tools becomes even more valuable. Many hands-on learning paths today, like those found in comprehensive data science

If you’re looking to build strong, real-world data science skills, including hands-on experience with preprocessing techniques, consider exploring the Master Data Science & Machine Learning in Python program by Great Learning. It’s designed to bridge the gap between theory and practice, helping you apply these concepts confidently in real projects.