Tool-as-Interface, a two-camera, video-only method that teaches robots tool use, achieves a high average success rate and cuts data-collection time.

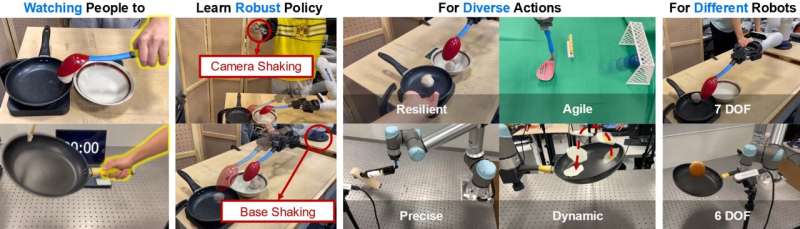

A research team from the University of Illinois, in collaboration with Columbia University and UT Austin, has unveiled a framework that trains robots to use tools by learning directly from ordinary human videos. The approach reports a higher success rate on the target tasks and faster data collection than teleoperation-based baselines, pointing to a lower-cost route for teaching dynamic skills.

In the method, called Tool-as-Interface, the robot learns from data collected by two RGB camera views of a person performing a task. A 3D reconstruction model (MASt3R) builds the scene geometry, and 3D Gaussian splatting synthesizes additional views to improve robustness.
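MASt3R is a learned model that regresses 3D geometry directly from image pairs, so the snippet below is not its method. As a classical stand-in that shows why two views are enough to recover depth, here is a minimal NumPy sketch of linear (DLT) triangulation of a single point. It assumes calibrated pinhole cameras with known intrinsics `K` and poses; the camera setup and all function names are hypothetical.

```python
import numpy as np

def projection_matrix(K, R, t):
    """Build the 3x4 pinhole projection matrix P = K [R | t]."""
    return K @ np.hstack([R, np.reshape(t, (3, 1))])

def project(P, X):
    """Project a 3D point X (shape (3,)) to pixel coordinates (u, v)."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

def triangulate(P1, P2, uv1, uv2):
    """Recover a 3D point from its pixel coordinates in two views (linear DLT)."""
    u1, v1 = uv1
    u2, v2 = uv2
    # Each view contributes two linear constraints on the homogeneous point X.
    A = np.stack([
        u1 * P1[2] - P1[0],
        v1 * P1[2] - P1[1],
        u2 * P2[2] - P2[0],
        v2 * P2[2] - P2[1],
    ])
    # The least-squares solution is the right singular vector
    # associated with the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]

# Hypothetical setup: two identical cameras, the second shifted 20 cm along x.
K = np.array([[600.0, 0.0, 320.0],
              [0.0, 600.0, 240.0],
              [0.0, 0.0, 1.0]])
P1 = projection_matrix(K, np.eye(3), np.zeros(3))
P2 = projection_matrix(K, np.eye(3), np.array([-0.2, 0.0, 0.0]))

X_true = np.array([0.1, -0.05, 1.5])  # a point on the tool, in metres
X_est = triangulate(P1, P2, project(P1, X_true), project(P2, X_true))
# With noise-free pixels, X_est matches X_true up to floating-point error.
```

With real images the pixel matches are noisy, which is one reason learned reconstructors such as MASt3R are attractive: they avoid hand-built matching and calibration.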

The real magic happens when the human is removed from the video. Using Grounded-SAM, which combines an open-set object detector with the Segment Anything Model (SAM), the system tracks only the tool and its interaction with the scene, ignoring the person.
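The masking step can be illustrated with plain boolean arrays. The sketch below assumes a segmenter (such as Grounded-SAM) has already produced per-frame masks for the person and the tool; it does not call Grounded-SAM itself, and the helper names are hypothetical. Pixels labelled human are blanked unless they also belong to the tool, and a mask centroid serves as a crude track point. The actual pipeline may fill or process the human region differently.

```python
import numpy as np

def remove_human(frame, human_mask, tool_mask, fill_value=0):
    """Blank out pixels labelled as human, keeping any pixel also labelled as tool.

    frame:      (H, W, 3) image array
    human_mask: (H, W) boolean mask of the person (e.g. hands and arms)
    tool_mask:  (H, W) boolean mask of the tool
    """
    human_only = human_mask & ~tool_mask
    out = frame.copy()
    out[human_only] = fill_value  # broadcasts over all colour channels
    return out

def tool_centroid(tool_mask):
    """Pixel centroid (row, col) of the tool mask, a crude per-frame track point."""
    coords = np.argwhere(tool_mask)
    if coords.size == 0:
        return None  # tool not visible in this frame
    return coords.mean(axis=0)

# Toy 4x4 frame: the "hand" covers the top-left 2x2 block and grips the tool at (1, 1).
frame = np.full((4, 4, 3), 255, dtype=np.uint8)
human = np.zeros((4, 4), dtype=bool)
human[:2, :2] = True
tool = np.zeros((4, 4), dtype=bool)
tool[1:, 1] = True
clean = remove_human(frame, human, tool)
```

Giving the tool mask priority where the two overlap matters because the hand grips the tool, so a naive "erase all human pixels" would also cut into the object the robot needs to track.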

The system then estimates the tool's 6-DoF (six-degree-of-freedom) pose for the robot to imitate and learns a tool-centric policy. Because the policy is expressed in terms of the tool rather than a particular arm, it can transfer across robots.
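One way to see why a tool-centric policy can transfer across robots: if the policy outputs a target tool pose, each robot only needs its own fixed grasp transform to turn that into an end-effector command. The sketch below assumes 4x4 homogeneous poses and a rigid grasp described by a constant `T_ee_tool` (tool pose in the end-effector frame); this is an illustrative assumption, not the authors' published code, and the grasp offsets are hypothetical.

```python
import numpy as np

def make_pose(R, p):
    """Assemble a 4x4 homogeneous transform from rotation R and translation p."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = p
    return T

def rot_z(theta):
    """Rotation matrix about the z axis by theta radians."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def invert_pose(T):
    """Invert a rigid transform using R^T instead of a general matrix inverse."""
    R, p = T[:3, :3], T[:3, 3]
    return make_pose(R.T, -R.T @ p)

def tool_to_ee_target(T_world_tool, T_ee_tool):
    """End-effector pose that places the tool at T_world_tool,
    assuming the tool is rigidly fixed to the gripper (constant T_ee_tool)."""
    return T_world_tool @ invert_pose(T_ee_tool)

# One tool pose from the policy, two robots with different (hypothetical) grasps.
T_world_tool = make_pose(rot_z(0.3), [0.5, 0.1, 0.2])
T_ee_tool_a = make_pose(np.eye(3), [0.0, 0.0, 0.15])
T_ee_tool_b = make_pose(rot_z(np.pi / 2), [0.0, 0.0, 0.10])

ee_a = tool_to_ee_target(T_world_tool, T_ee_tool_a)
ee_b = tool_to_ee_target(T_world_tool, T_ee_tool_b)
```

The two robots receive different end-effector targets, but each places the tool in the same world pose, which is what the learned policy actually specifies.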

The team validated the framework on five tasks that require speed or precision: hammering a nail, scooping a meatball, flipping food in a pan, balancing a wine bottle, and kicking a soccer ball into a goal. Across these tasks, the method achieved a 71% higher average success rate than diffusion policies trained on teleoperation data and reduced data-collection time by 77%. Some tasks were solved only by this framework in the reported tests.

The data pipeline uses commodity cameras and requires neither robot-side operators nor motion-capture rigs. This reduces setup complexity and could let data collection scale to demonstrations recorded outside the lab.

Limitations remain. The current system assumes a rigid tool fixed to the gripper and can suffer from pose-estimation errors; novel-view synthesis can also degrade under large viewpoint changes. These constraints set the next round of engineering goals.
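Why pose-estimation errors hurt precision tasks such as hammering can be shown with a back-of-the-envelope calculation (not taken from the source): an orientation error at the grasp is amplified along the tool, so a tip at distance $L$ moves by $2L\sin(\theta/2)$ for an angular error $\theta$. The minimal sketch below rotates a tip point to confirm this; the tool length and error values are hypothetical.

```python
import numpy as np

def tip_error(tool_length_m, angle_error_deg):
    """Displacement of a tool tip at distance tool_length_m from the grasp point
    when the estimated orientation is off by angle_error_deg about an axis
    perpendicular to the tool shaft."""
    theta = np.deg2rad(angle_error_deg)
    tip = np.array([tool_length_m, 0.0, 0.0])
    c, s = np.cos(theta), np.sin(theta)
    R = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])  # rotation about z
    return float(np.linalg.norm(R @ tip - tip))

def tip_error_closed_form(tool_length_m, angle_error_deg):
    """Chord length 2 L sin(theta / 2) for the same geometry."""
    return 2.0 * tool_length_m * np.sin(np.deg2rad(angle_error_deg) / 2.0)

# A hypothetical 30 cm hammer with a 2-degree orientation error.
err = tip_error(0.3, 2.0)
```

For that hypothetical hammer the head lands about 1 cm off target, already a meaningful miss when the goal is a nail head.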