Summary

With the rise of connected cars, the automotive industry is experiencing an explosion of time series data. Hundreds of Electronic Control Units (ECUs) continuously stream data across in-vehicle networks at high frequencies (1Hz-100Hz). This data offers immense potential for predictive analytics and innovation, but extracting knowledge at petabyte scale presents major technical, financial, and sustainability challenges.

In this blog post, we introduce a novel hierarchical semantic data model tailored for large-scale time series data. Leveraging the latest features (e.g. Liquid Clustering) introduced in the Databricks Intelligence Platform, it enables scalable and cost-efficient analytics, transforming raw automotive measurement data into actionable insights that drive vehicle development, performance tuning, and predictive maintenance.

Additionally, we share benchmarks based on real-world data from Mercedes-Benz and compare state-of-the-art data optimization techniques to evaluate performance across key industry use cases.

Introduction

Time series analysis in the automotive industry isn't just number crunching; it is like reading the pulse of every vehicle on the road. Each data point tells a story, from the subtle vibrations of an engine to the split-second decisions of autonomous driving systems and even driver-vehicle interactions. As these data points coalesce into trends and patterns, they reveal insights that can revolutionize vehicle development, enhance safety features, and even predict maintenance needs before a single warning light flashes on a dashboard.

However, the sheer volume of this data presents a formidable challenge. Modern vehicles, equipped with hundreds of ECUs, generate a massive amount of time series data. While collecting and storing this wealth of information is crucial, the real challenge (and opportunity) lies in harnessing its power to move beyond simple reporting to forward-looking predictive analytics using ML & AI.

At the heart of this challenge is the need for a universally applicable, efficient, and scalable model for representing time series data, one that supports both well-defined and emerging use cases. To meet this need, we introduce a novel hierarchical semantic data model that addresses the complexity of automotive time series analysis, transforming raw measurement data into a strategic asset.

In developing this data model, we focused on three critical aspects:

- Cost-efficient and Scalable Data Access: A data model should be designed to support common query patterns in time series data analysis, enabling rapid and resource-efficient processing of large datasets.

- Usability: Ease of use for data practitioners as well as domain experts is critical. Working with the data should be straightforward and intuitive regardless of scale, so that insights can be gained quickly without spending hours writing queries.

- Data Discoverability & Data Governance: Keeping the data model for time series data with up to thousands or millions of different signals and contextual metadata minimal is crucial for governance and maintainability. Data from an arbitrary number of car fleets can be easily registered in a small number of Unity Catalog tables, and users can securely discover, access, and collaborate on trusted data.

In collaboration with Mercedes-Benz AG, one of the largest premium vehicle manufacturers, based in Stuttgart, Germany, we enhanced the data model based on ASAM standards to support Mercedes-Benz in developing the most desirable car leveraging the power of the Mercedes-Benz Operating System (MB.OS). Like the Mercedes-Benz Vision EQXX concept car, which sets new benchmarks for electric range and efficiency, we are pushing analytics performance and efficiency to an entirely new level by using cutting-edge technologies.

In this blog post, we showcase production data analytics use cases and real-world data to demonstrate the capabilities of our extended data model across various setups. Additionally, we conducted scientific research on different optimization techniques and performed systematic benchmarks on Z-Ordering and Liquid Clustering data layouts.

A hierarchical semantic data model for addressing the three critical aspects

This data model can represent time series data of tens of thousands of signals in a single table and includes a hierarchical representation of contextual metadata. Our model therefore offers the following advantages:

- Efficient Filtering: The hierarchical structure allows for rapid filtering across multiple dimensions, enabling analysts to quickly narrow down their search space.

- Semantic Relationships: By incorporating semantic relationships between samples and contextual metadata, the model facilitates more intuitive and powerful querying capabilities.

- Scalability: The hierarchical nature of the model supports efficient data organization as the volume grows to petabyte scale.

- Contextual Integration: The semantic layer allows for seamless integration of contextual metadata, enhancing the possible depth of analysis.

The Core Data Model

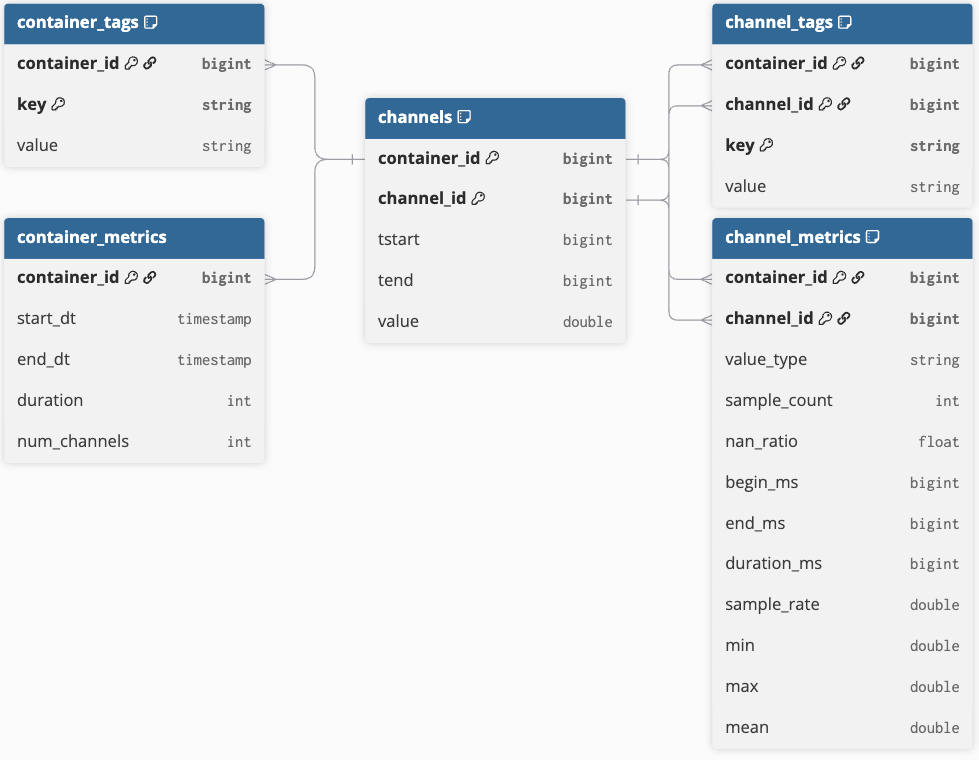

The core model consists of five tables that efficiently represent time series data and contextual metadata (see Figure 1 for the Entity Relationship diagram). Central to the model is the samples table, which contains time series data in a narrow format with two identifier columns: container_id and channel_id. The container_id serves as a unique identifier for a collection of time series objects, while channel_id uniquely identifies each time series (or channel) within that container. This structure enables distributed analysis of the underlying time series data.

In the automotive context, a container consists of predefined channels recorded by car data loggers during a test drive and stored in a single file. However, multiple measurement files can be grouped into a single container if the measurements from one trip are split due to size constraints. This concept also applies to continuous time series data streams (e.g., from IoT devices), where container boundaries can be defined by time (e.g., hourly or daily) or by process information, such as splitting streams based on production steps or batches.
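For continuous streams, one way to picture such a boundary is a small function that derives a container_id from the stream and the hour (or production batch) a sample belongs to. The following is a minimal Python sketch; the `<stream_id>/<hour>` id format and the function names are hypothetical and not part of the data model described here.

```python
from datetime import datetime, timezone


def hourly_container_id(stream_id: str, ts: datetime) -> str:
    """Assign a sample of a continuous stream to an hourly container.

    Hypothetical id format "<stream_id>/<YYYY-MM-DDTHH>". `ts` must be
    timezone-aware; it is normalized to UTC so that all producers agree on
    the hour boundaries.
    """
    if ts.tzinfo is None:
        raise ValueError("timestamp must be timezone-aware")
    return f"{stream_id}/{ts.astimezone(timezone.utc):%Y-%m-%dT%H}"


def batch_container_id(stream_id: str, batch_no: int) -> str:
    """Alternative boundary based on process information (a production batch)."""
    return f"{stream_id}/batch-{batch_no:06d}"
```

Samples from 10:05 and 10:59 UTC on the same day land in the same container, while a sample at 11:00 starts a new one.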

All sample data is stored using run-length encoding (RLE), merging consecutive samples with the same value into a single row defined by a start time (“tstart”), an end time (“tend”), and the recorded value. The end time is exclusive, marking the transition to the next value. RLE is a simple compression method that facilitates efficient analysis, such as calculating histograms by bucketing values and summing the duration (tend - tstart). Each row is indexed by container_id, channel_id, and the active time range. This core samples table is kept simple to minimize storage size and improve query performance.
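The RLE representation and the duration-weighted histogram can be sketched in a few lines of plain Python. This is an illustration under simplifying assumptions (the samples of one channel are given as sorted `(timestamp, value)` pairs, and the last run is closed by an explicit `end_time`); it is not the conversion code used in the benchmarks.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class RleRow(NamedTuple):
    tstart: float  # inclusive start of the run
    tend: float    # exclusive end: the time at which the next value starts
    value: float


def rle_encode(samples: list[tuple[float, float]], end_time: float) -> list[RleRow]:
    """Merge consecutive (timestamp, value) samples of one channel with equal values.

    `samples` must be sorted by timestamp; `end_time` closes the final run.
    """
    rows: list[RleRow] = []
    for t, v in samples:
        if rows and rows[-1].value == v:
            continue  # unchanged value: the current run simply continues
        if rows:
            rows[-1] = rows[-1]._replace(tend=t)  # close the previous run at the change
        rows.append(RleRow(t, end_time, v))
    return rows


def duration_histogram(rows: Iterable[RleRow], bucket_width: float) -> dict[float, float]:
    """Time spent per value bucket: bucket each value and sum (tend - tstart)."""
    hist = defaultdict(float)
    for r in rows:
        bucket = (r.value // bucket_width) * bucket_width
        hist[bucket] += r.tend - r.tstart
    return dict(hist)
```

For a channel that is already logged only on value change, consecutive samples always differ, so `rle_encode` returns one row per sample; RLE then saves nothing for that channel.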

In addition to the samples table, we have four tables to represent the contextual metadata:

- “container_metrics” and “container_tags” are indexed by their “container_id”.

- “channel_metrics” and “channel_tags” are additionally identified by the corresponding “channel_id”.

- Both metrics tables have a static schema that contains useful information for pruning queries.

- Both tags tables are used as a simple key-value store that can hold any kind of metadata.

Some metadata can be extracted directly from measurement files; tags can also be enriched from external metadata sources to give context to the associated containers and signals.
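To make the five tables concrete, here is a hypothetical Python rendering of their rows, plus a helper showing how per-channel statistics can prune a query before any samples are read. Only container_id, channel_id, tstart, tend, and the value come from the description above; the metrics columns (`min_value`, `max_value`, and the container time range) and the tag field names are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Sample:            # samples: one RLE row per channel and time interval
    container_id: str
    channel_id: str
    tstart: float        # inclusive
    tend: float          # exclusive
    value: float


@dataclass(frozen=True)
class ContainerMetric:   # container_metrics (static schema; columns assumed)
    container_id: str
    tstart: float
    tend: float


@dataclass(frozen=True)
class ContainerTag:      # container_tags: free-form key-value pairs
    container_id: str
    key: str
    value: str


@dataclass(frozen=True)
class ChannelMetric:     # channel_metrics (static schema; columns assumed)
    container_id: str
    channel_id: str
    min_value: float
    max_value: float


@dataclass(frozen=True)
class ChannelTag:        # channel_tags: free-form key-value pairs
    container_id: str
    channel_id: str
    key: str
    value: str


def candidate_channels(metrics: Iterable[ChannelMetric], lo: float, hi: float) -> set[tuple[str, str]]:
    """(container_id, channel_id) pairs whose [min, max] range can overlap [lo, hi].

    Only these channels need to be looked up in the samples table.
    """
    return {
        (m.container_id, m.channel_id)
        for m in metrics
        if m.max_value >= lo and m.min_value <= hi
    }
```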

Mercedes-Benz Implementation

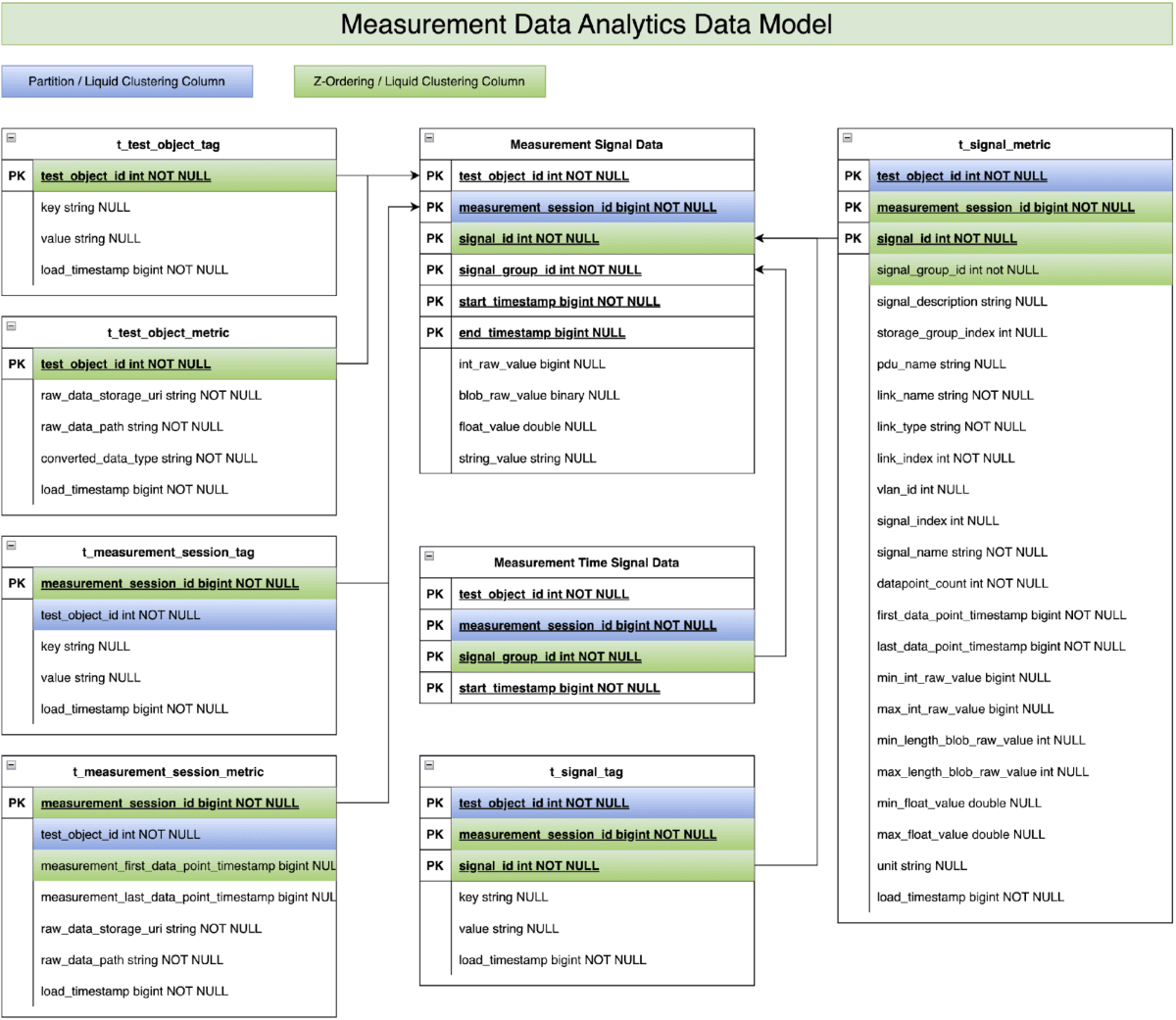

As a member of the Association for Standardization of Automation and Measuring Systems (ASAM) (status as of August 2025), Mercedes-Benz has long used various technologies to analyze collected measurement data. Through our collaboration with Databricks, we recognized the immense potential of the aforementioned time series data model to support Mercedes-Benz vehicle development. Consequently, we leveraged our vehicle development expertise to enhance the data model based on the ASAM MDF standard (see Figure 2). We contributed production measurement data from development vehicles and adapted real data analytics use cases. This allowed us to validate the data model concept and demonstrate its feasibility in improving the vehicle development process and quality.

Our focus now shifts to demonstrating how this enhanced data model performs with Mercedes-Benz development vehicle measurement data:

- Level 1 filtering via “t_test_object_metric” & “t_test_object_tag”: These two tables store business information and statistics at the test object level (e.g. test vehicle). Examples include vehicle type, vehicle series, model year, vehicle configuration, etc. In the first step, this information allows the data analytics use cases to focus on specific test objects among hundreds of test objects.

- Level 2 filtering via “t_measurement_session_metric” & “t_measurement_session_tag”: These two tables store business information and statistics at the measurement session level. Examples include test events, time zone information, and measurement start/end timestamps. In the second step, the measurement start/end timestamps help the data analytics scripts narrow down millions of measurement sessions to the relevant few hundred.

- Level 3 filtering via “t_signal_metric” & “t_signal_tag”: These two tables store business information and statistics at the signal key level. Examples include vehicle speed, road type, weather condition, drive pilot signals, etc. In the final step, the data analytics scripts use this information to identify the signals relevant to the underlying query among thousands of available signals.

- Analytics scripts on measurement signal data tables: The actual analytics logic is executed on the measurement signal data tables, which store the time series data collected from test vehicles. Because the three levels of filtering described above have already been applied, typically only a small fraction of the original raw time series data needs to be processed and analyzed.

Mercedes-Benz Example Use Case for working with the metadata tables

By introducing different levels of metric and tag tables as core metadata, data analytics performance has improved significantly compared to existing solutions at Mercedes-Benz. To illustrate how the core metadata enhances analytics performance, we use the Automatic Lane Change (ALC) system readiness detection as an example.

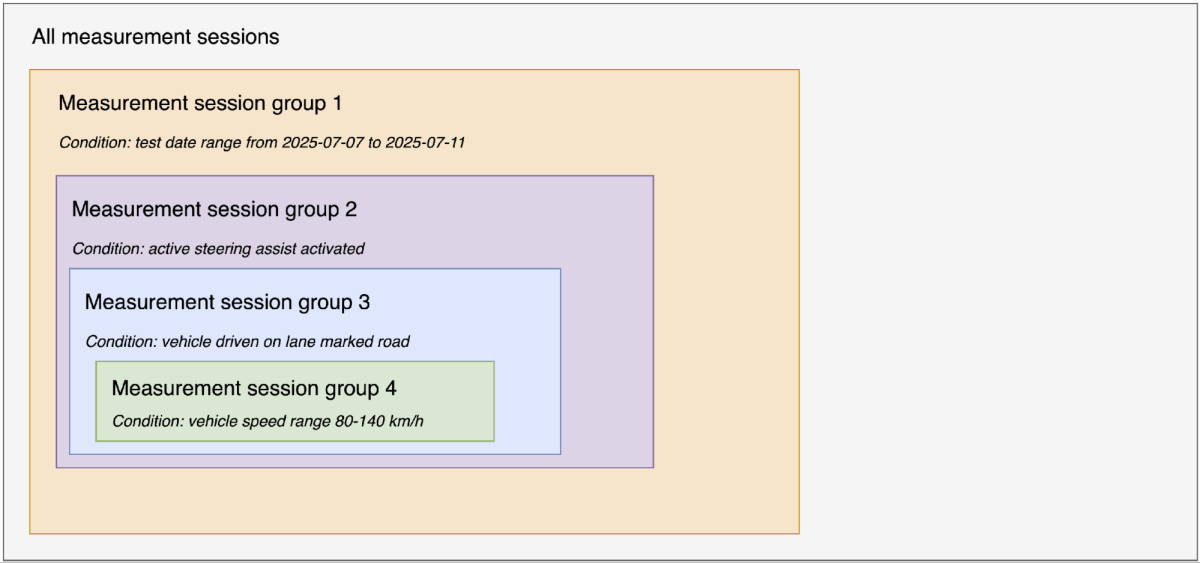

As highlighted in Mercedes-Benz innovation, the ALC function is an integral part of Active Distance Assist DISTRONIC with Active Steering Assist. If a slower vehicle is driving ahead, the vehicle can initiate a lane change by itself in the speed range of 80-140 km/h and overtake completely automatically if lane markings are detected and sufficient clearance is available. The prerequisites are a road with a speed limit and a vehicle equipped with MBUX Navigation. The sophisticated system requires no further input from the driver to execute the automatic lane change. These three preconditions (activated Active Steering Assist, detected lane markings, and the 80-140 km/h speed range) help the analytics script filter the relevant sessions from thousands of sessions. For clarity, we present our methodology in a logical, sequential manner (see Figure 3); note that the actual implementation can be carried out in parallel.

- Filter sessions by the test drive date range between 2025-07-07 and 2025-07-11 from all generated measurement sessions to create session group 1. In this step, we use the measurement_first_data_point_timestamp and measurement_end_data_point_timestamp columns in the “t_measurement_session_metric” table to identify the relevant sessions among all recorded sessions of the vehicle.

- Filter sessions within session group 1 that contain activated Active Steering Assist to create session group 2. In this step, we select the sessions where max_int_raw_value > 0 (assuming the integer raw value of the signal for activated Active Steering Assist is 1) in the “t_signal_metric” table to identify the relevant sessions from session group 1.

- Filter sessions within session group 2 where the vehicle is driven on a lane-marked road to create session group 3. In this step, we select the sessions where max_int_raw_value > 2 (assuming the integer raw value of the signal for the lane-marked road type is 3) in the “t_signal_metric” table to identify the relevant sessions from session group 2.

- Filter sessions within session group 3 that contain a vehicle speed in the range of 80-140 km/h to create session group 4. In this step, we select the sessions whose recorded speed range in the “t_signal_metric” table overlaps this interval (max_float_value >= 80 and min_float_value <= 140) to identify the relevant sessions from session group 3.

- Filter the necessary signal IDs within session group 4. In this step, we use the combination of pdu_name, link_name, vlan_id, and signal_name to find the signal IDs of the relevant signals.

- Use the filtered signal IDs and measurement session IDs from session group 4 to join the measurement signal data point table and identify the ALC system readiness.
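The session filtering steps (session groups 1 to 4) can be sketched as successive set reductions over the two metric tables. This is an in-memory illustration, not the production query: the signal names, the dictionary row layout, and the half-open date range are assumptions, and a real implementation would resolve signals through pdu_name, link_name, vlan_id, and signal_name and run the filters as joins in Spark SQL.

```python
from datetime import datetime
from typing import Callable

# Hypothetical signal names; the real tables identify a signal by the
# combination of pdu_name, link_name, vlan_id, and signal_name.
STEERING_ASSIST = "ActiveSteeringAssist"
ROAD_TYPE = "RoadType"
VEHICLE_SPEED = "VehicleSpeed"


def alc_candidate_sessions(session_metrics: list[dict], signal_metrics: list[dict],
                           start: datetime, end: datetime) -> list[str]:
    """Session IDs in session group 4; [start, end) is a half-open date range."""
    # Session group 1: the recording interval overlaps the date range.
    group = {
        s["session_id"]
        for s in session_metrics
        if s["measurement_first_data_point_timestamp"] < end
        and s["measurement_end_data_point_timestamp"] >= start
    }
    stats = {(m["session_id"], m["signal_name"]): m for m in signal_metrics}

    def keep(ids: set, signal: str, predicate: Callable[[dict], bool]) -> set:
        # Sessions without metrics for `signal` are dropped as well.
        return {sid for sid in ids if (sid, signal) in stats and predicate(stats[(sid, signal)])}

    # Session group 2: Active Steering Assist activated (raw value 1 assumed).
    group = keep(group, STEERING_ASSIST, lambda m: m["max_int_raw_value"] > 0)
    # Session group 3: lane-marked road type observed (raw value 3 assumed).
    group = keep(group, ROAD_TYPE, lambda m: m["max_int_raw_value"] > 2)
    # Session group 4: recorded speed range overlaps 80-140 km/h.
    group = keep(group, VEHICLE_SPEED,
                 lambda m: m["max_float_value"] >= 80 and m["min_float_value"] <= 140)
    return sorted(group)
```

Each stage only touches small metadata tables; the samples table is read afterwards, and only for the surviving sessions and signal IDs.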

Selecting the optimal data layout through real-world data & use case benchmarking

To demonstrate the performance and scalability of the described data model, we systematically benchmarked it with real-world measurement data and use cases. In our benchmark study, we evaluated various combinations of data layouts and optimization techniques. The benchmarks were designed to optimize for:

- Data layout & optimization techniques: We tested different data layout approaches, such as partitioning schemes, RLE, non-RLE, Z-Ordering, and Liquid Clustering, to optimize query performance.

- Scalability: We focused on solutions capable of handling the ever-growing volume of measurement data while maintaining efficiency.

- Cost-efficiency: We considered both storage costs and query performance to identify the most cost-effective approach for long-term data retention and analysis.

Because the benchmark results are crucial for selecting the future measurement data schema and format at Mercedes-Benz, we used production data and analytics scripts to evaluate the different options.

In practice, even minor optimizations can unlock major savings at scale, enabling thousands of engineers to extract insights securely and cost-efficiently. Benchmarking is key to validating the efficiency of a proposed solution and should be repeated whenever larger changes are made to the system.

Benchmark Setup

The benchmark dataset contains measurement data from 21 distinct test vehicles, each equipped with modern car loggers to collect the measurement data. The collection features between 30,000 and 60,000 recorded signals per vehicle, offering a wide range of data points for analysis. In total, the dataset represents 40,000 hours of recordings, of which 12,500 hours capture data while the vehicles were live (ignition on). This dataset enables the study of various aspects of automotive behavior and performance across different vehicles and operating conditions.

The following four analytical query categories were executed as part of the benchmark:

- Signal Distribution Analysis – We generated one-dimensional histograms for key signals (e.g. Vehicle Speed) to assess data distribution and frequency patterns.

- Signal Arithmetic Operations – We performed basic calculations (e.g. subtraction, ratios) on anywhere from a few to thousands of signals.

- Test Case Identification – The queries identify and validate predefined operational scenarios within the dataset, defined by a sequence of events occurring in a given order.

- Detection of Readiness of the Automatic Lane Change Assist System – This query makes extensive use of the metadata tables before the actual underlying time series data is queried.
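Test case identification boils down to checking whether a sequence of conditions is satisfied in a given order. A minimal sketch follows, assuming that events have already been derived from the samples (e.g. one event per RLE row at its tstart) and that the conditions are simple Python predicates; the helper name and event layout are hypothetical.

```python
from typing import Callable, Optional, Sequence

# (timestamp, signal_name, value), e.g. one event per RLE row at its tstart.
Event = tuple[float, str, float]
Condition = Callable[[str, float], bool]


def find_ordered_scenario(events: Sequence[Event],
                          steps: Sequence[Condition]) -> Optional[list[float]]:
    """Timestamps at which `steps` are first satisfied one after another, or None.

    Greedy earliest matching: each step is matched by the earliest event that
    follows the previous match; if any ordered match exists, this finds one.
    Events with equal timestamps are visited in sort order.
    """
    if not steps:
        return []
    matched: list[float] = []
    for ts, name, value in sorted(events):
        if steps[len(matched)](name, value):
            matched.append(ts)
            if len(matched) == len(steps):
                return matched
    return None
```

A scenario such as "driving faster than 20 km/h, then braking, then standstill" becomes three predicates on hypothetical signal names, for example `lambda n, v: n == "speed" and v > 20`.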

Please note that in this blog post we only present the results for categories 1 and 4, as the other categories yield similar performance results and do not provide additional insights.

To benchmark the scalability of the solution, we used four different cluster sizes. The memory-optimized Standard_E8d_v4 node type was chosen because of its Delta cache feature and its larger memory to hold the core metadata. As for the Databricks runtime, 15.4 LTS was the latest available long-term support runtime. In our previous investigations, Photon proved to be more cost-efficient despite its higher DBU cost, so Photon was used in all benchmarks. Table 1 provides details of the selected Databricks clusters.

| T-Shirt Size | Node Type | DBR | #Nodes (driver + workers) | Photon |
|---|---|---|---|---|
| X-Small | Standard_E8d_v4 | 15.4 LTS | 1 + 2 | yes |
| Small | Standard_E8d_v4 | 15.4 LTS | 1 + 4 | yes |
| Medium | Standard_E8d_v4 | 15.4 LTS | 1 + 8 | yes |
| Large | Standard_E8d_v4 | 15.4 LTS | 1 + 16 | yes |

Table 1: The benchmark cluster setups

Benchmark Results

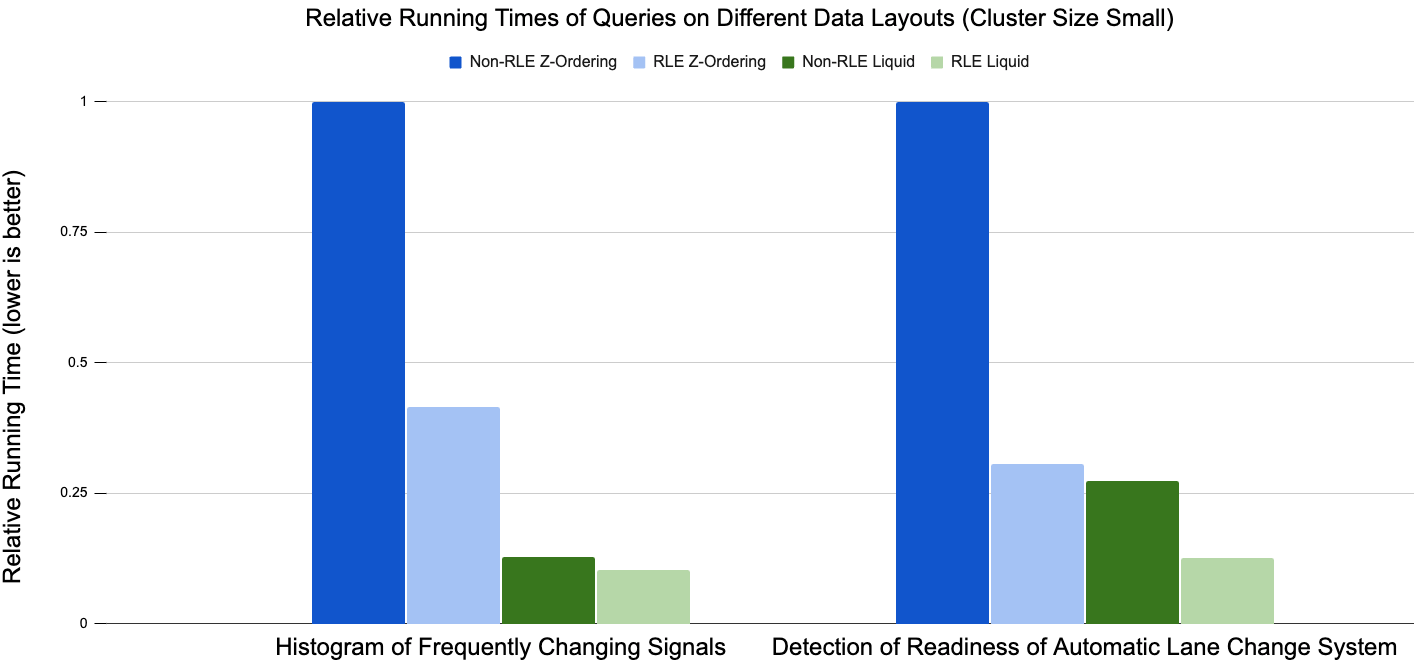

The benchmark was executed on two main versions of the data model. The first version stores run-length encoded (RLE) samples data (see section The Core Data Model), while the second version does not use RLE. Additionally, we applied two different data layout optimizations to both versions of the data model. In the first optimization, we used hive-style partitioning to partition the measurement signal data table by the measurement_session_id column and applied Z-Ordering on the signal_id column. In the second optimization, we used Liquid Clustering to cluster the measurement signal data table by measurement_session_id and signal_id.

Runtime Performance

Due to the large differences in absolute running times among the benchmarked setups, we visualize relative running times, using the non-RLE Z-Ordering results as the baseline. In general, across all tests we performed, Liquid Clustering (green bars) outperforms hive-style partitioning + Z-Ordering (blue bars). For the histogram of frequently changing signals, the RLE optimization reduces the runtime by approximately 60% for Z-Ordering, but by less than 10% for Liquid Clustering.

In the second use case, detection of readiness of the automatic lane change system, RLE reduced the runtime by nearly 70% for Z-Ordering and by more than 50% for Liquid Clustering. Overall, the results of the demonstrated use cases indicate that the combination of RLE and Liquid Clustering performs best on this data model.
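The normalization behind the relative running times, and the reduction percentages quoted above, is plain arithmetic. The helpers below illustrate it; the setup labels and runtimes are placeholder values chosen only to exercise the code, not the measured benchmark results.

```python
def relative_runtimes(runtimes: dict[str, float], baseline: str) -> dict[str, float]:
    """Each setup's runtime divided by the baseline setup's runtime (baseline = 1.0)."""
    base = runtimes[baseline]
    return {setup: t / base for setup, t in runtimes.items()}


def runtime_reduction(before: float, after: float) -> float:
    """Fraction of runtime saved, e.g. 0.6 means a 60% reduction."""
    return 1.0 - after / before


# Placeholder values in seconds, NOT the measured benchmark results.
runtimes = {"Z-Ordering": 200.0, "Z-Ordering + RLE": 80.0,
            "Liquid Clustering": 50.0, "Liquid Clustering + RLE": 46.0}
rel = relative_runtimes(runtimes, baseline="Z-Ordering")
```

Note that a reduction percentage is always relative to its own non-RLE variant, so a small percentage for Liquid Clustering can coexist with the lowest absolute runtime.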

Scalability

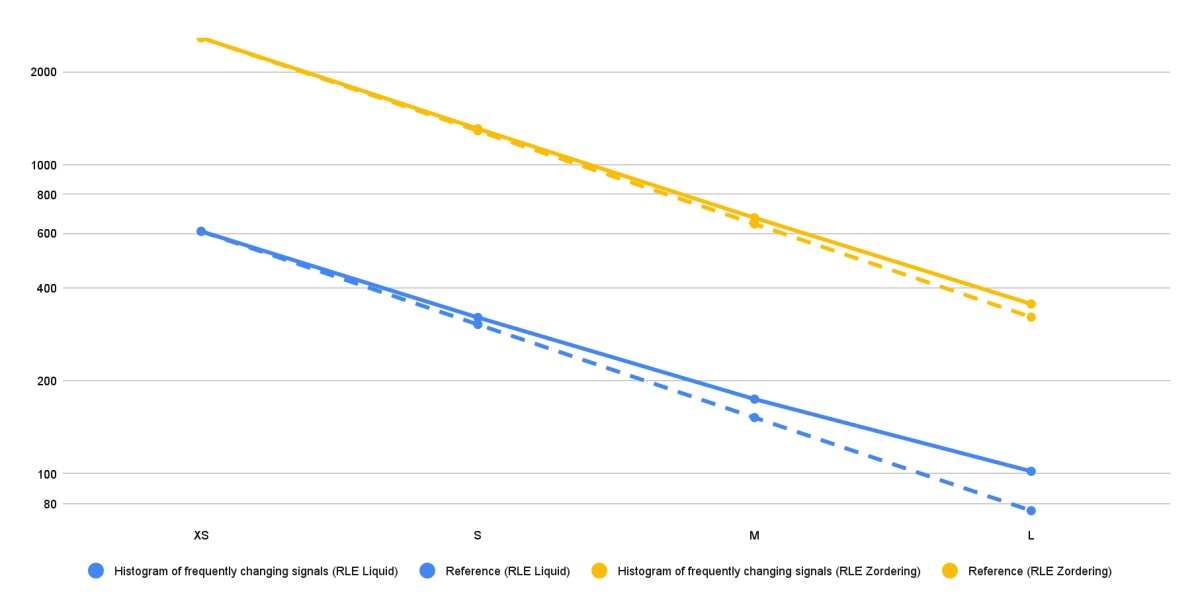

To evaluate the scalability of the solution, we executed all four analytical queries on a static dataset using different cluster sizes. In each benchmarking run, we doubled the number of worker nodes compared to the previous run (see Table 1). Ideally, for a solution that scales perfectly, the runtime of a query should decrease by a factor of two with every doubling of the cluster size. However, technical limitations often prevent perfect scaling.

Figure 5 shows the results as absolute running times (seconds) for the various benchmark setups for one use case; we observed the same pattern across all other use cases. The reference lines (yellow and blue dashed lines) represent the lower bound of running times (perfect scaling) for the two different benchmark setups. For the use case shown, the running time generally decreases almost perfectly as the cluster size increases from X-Small to Large. This indicates that the data model and optimization techniques are scalable, benefiting from additional nodes and processing power.
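The perfect-scaling reference lines follow from halving the runtime with each doubling of workers. A small sketch, assuming runtime is compared against the worker count from Table 1 only (the driver node is ignored) and using placeholder runtimes rather than the measured values in Figure 5:

```python
# Worker nodes per cluster size, taken from Table 1 (driver not counted).
WORKERS = {"X-Small": 2, "Small": 4, "Medium": 8, "Large": 16}


def perfect_scaling_reference(base_runtime: float, base_workers: int, workers: int) -> float:
    """Runtime expected if it were inversely proportional to the number of workers."""
    return base_runtime * base_workers / workers


def scaling_efficiency(runtimes: dict[str, float], base_size: str = "X-Small") -> dict[str, float]:
    """Perfect-scaling reference divided by measured runtime (1.0 means perfect)."""
    base = runtimes[base_size]
    return {
        size: perfect_scaling_reference(base, WORKERS[base_size], WORKERS[size]) / t
        for size, t in runtimes.items()
    }
```

An efficiency that falls below 1.0 at the larger sizes corresponds to a measured line drifting above its dashed reference line.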

On the other hand, the running times of the RLE Liquid Clustering solution (blue line) begin to drift away from the perfect scaling reference line starting with the Medium cluster size, and this gap becomes even more pronounced with the Large cluster size. It is important to note, however, that the absolute running times of the RLE Liquid Clustering solution are significantly lower than those of RLE Z-Ordering. It is therefore expected that the RLE Liquid Clustering solution shows diminishing scalability improvements at larger cluster sizes, as its running time is already exceptionally low at that stage.

Storage Size

Our benchmark data was generated from 64.55 TB of proprietary MDF files, collected from 21 Mercedes-Benz MB.OS test vehicles over a five-month test period. To maximize query performance while maintaining an acceptable storage size, we use zstd compression for the Parquet files and set the Delta target file size to 32 MB, based on the results of previous investigations. Small file sizes are desirable in this scenario to avoid storing too many signals in the same physical file, which makes dynamic file pruning more efficient for highly selective queries.
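Why small files help highly selective queries can be illustrated with per-file min/max statistics, which Delta Lake uses for data skipping. The sketch below is a simplified model, not Delta's implementation: rows are `(session, signal)` pairs sorted as a clustered layout would sort them, and file size is measured in rows rather than megabytes.

```python
from typing import NamedTuple


class FileStats(NamedTuple):
    min_session: int
    max_session: int
    min_signal: int
    max_signal: int
    num_rows: int


def write_files(rows: list[tuple[int, int]], rows_per_file: int) -> list[FileStats]:
    """Sort rows by (session, signal) and cut them into files, keeping min/max stats."""
    ordered = sorted(rows)
    files = []
    for i in range(0, len(ordered), rows_per_file):
        chunk = ordered[i:i + rows_per_file]
        sessions = [s for s, _ in chunk]
        signals = [g for _, g in chunk]
        files.append(FileStats(min(sessions), max(sessions), min(signals), max(signals), len(chunk)))
    return files


def rows_scanned(files: list[FileStats], session: int, signal: int) -> int:
    """Rows read for `session = ? AND signal = ?` after skipping non-overlapping files."""
    return sum(
        f.num_rows
        for f in files
        if f.min_session <= session <= f.max_session and f.min_signal <= signal <= f.max_signal
    )
```

With 4 sessions x 10 signals cut into 5-row files, a query for one signal in one session reads a single 5-row file; with 20-row files, the one matching file holds 20 rows, so four times as much data is read for the same result.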

All data layouts resulted in Delta tables comparable in size to the proprietary MDF data (see Table 2). In general, the compression rate from the raw file format to Delta tables depends heavily on the characteristics of the MF4 files. The underlying dataset contains up to 60,000 signals per vehicle, and many of them were recorded on value change only. For these signals, compression methods like RLE have no effect. For other datasets with only thousands of signals that were recorded continuously, we found that the storage size was reduced by more than 50% compared to the raw MDF files.

Our results showed that the Liquid Clustering tables were significantly larger than the Z-Ordered tables (+14% for the RLE data layouts). However, considering the running time benchmark results presented above, the additional storage required by the RLE Liquid Clustering layout is justified by its superior performance.

| Format | Proprietary MDF File | RLE Z-Ordering | RLE Liquid Clustering |
|---|---|---|---|
| Storage size [TB] | 64.55 | 67.43 | 77.05 |

Table 2: Storage sizes of raw data and of the different RLE data layouts
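The relative storage overheads behind the +14% figure can be recomputed directly from the sizes in Table 2; this is only a worked check of that arithmetic.

```python
SIZES_TB = {  # from Table 2
    "Proprietary MDF File": 64.55,
    "RLE Z-Ordering": 67.43,
    "RLE Liquid Clustering": 77.05,
}


def overhead(size: float, reference: float) -> float:
    """Relative size difference, e.g. 0.14 means 14% larger than the reference."""
    return size / reference - 1.0


for layout in ("RLE Z-Ordering", "RLE Liquid Clustering"):
    # prints +4.5% for RLE Z-Ordering and +19.4% for RLE Liquid Clustering
    print(f"{layout} vs MDF: {overhead(SIZES_TB[layout], SIZES_TB['Proprietary MDF File']):+.1%}")

# prints +14.3%
print(f"Liquid Clustering vs Z-Ordering: "
      f"{overhead(SIZES_TB['RLE Liquid Clustering'], SIZES_TB['RLE Z-Ordering']):+.1%}")
```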

Conclusion

We developed a hierarchical semantic data model to efficiently store and analyze petabyte-scale time series data from connected vehicles on the Databricks Intelligence Platform. Designed for cost-efficient and scalable access, usability, and strong governance, the model makes it possible to turn raw telemetry into actionable insights.

Using real-world Mercedes-Benz data, we showed how hierarchical metadata tables improve analytics performance via multi-level filtering. In the Automatic Lane Change readiness example, this structure enabled rapid identification of relevant sessions and signals, drastically reducing processing time.

Benchmarking revealed that combining Run-Length Encoding (RLE) with Liquid Clustering delivered the best performance across analytical query types, outperforming RLE with Z-Ordering, especially in runtime. Although it required more storage, the trade-off was justified by significant query speed gains. Scalability tests on a static dataset confirmed that runtimes decrease substantially as the cluster size grows.

In the future, the Databricks team will publish solutions on 1) how to convert MDF files into the newly introduced data model with Databricks Jobs, 2) how to use Unity Catalog to govern complex data sets containing large fleets or other assets, allowing easy discovery while maintaining privacy and security as complexity grows, and 3) a framework that lets engineers without a strong SQL or Python background efficiently gain insights from data by themselves.

In summary, the hierarchical semantic data model with RLE and Liquid Clustering offers a powerful, governed, and scalable solution for automotive time series analytics, accelerating development at Mercedes-Benz and fostering data-driven collaboration toward a more sustainable, efficient future.