Introduction: Analytics and operations are converging

Applications today can’t rely on raw events alone. They need curated, contextual, and actionable data from the lakehouse to power personalization, automation, and intelligent user experiences.

Delivering that data reliably and with low latency has been a challenge, often requiring complex pipelines and custom infrastructure.

Lakebase, recently announced by Databricks, addresses this problem. It pairs a high-performance Postgres database with native lakehouse integration, making reverse ETL simple and reliable.

What is reverse ETL?

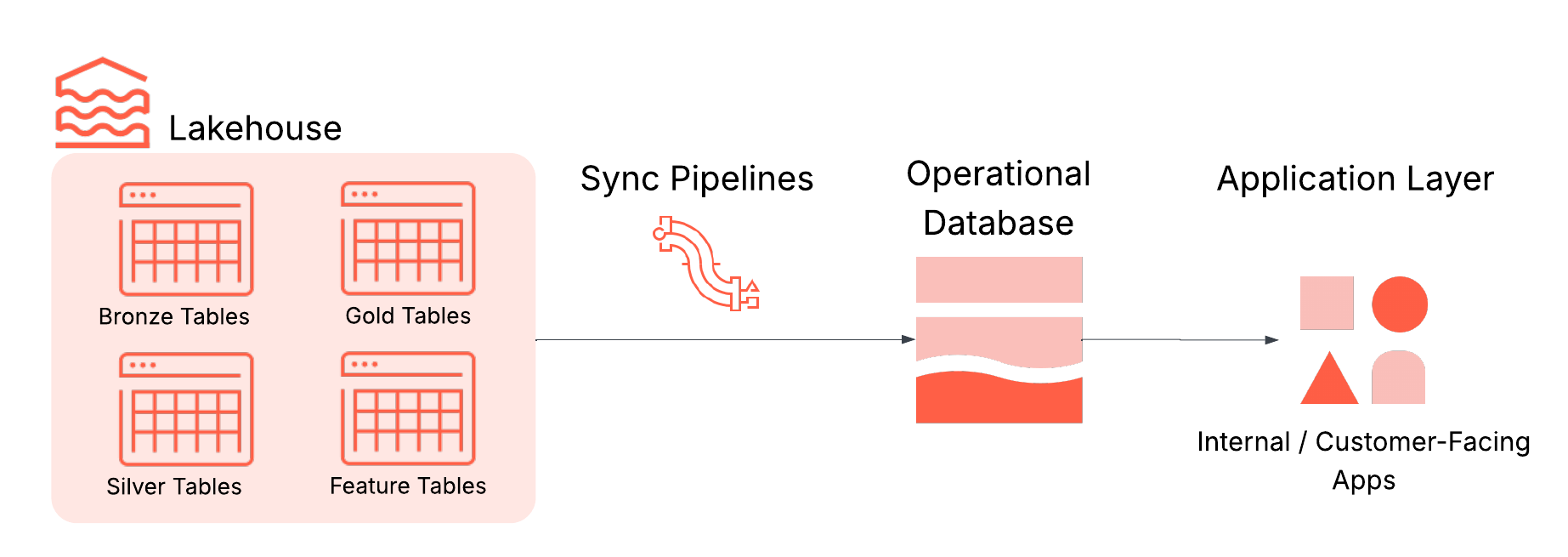

Reverse ETL syncs high-quality data from a lakehouse into the operational systems that power applications. This ensures that trusted datasets and AI-driven insights flow directly into the applications behind personalization, recommendations, fraud detection, and real-time decisioning.

Without reverse ETL, insights stay in the lakehouse and never reach the applications that need them. The lakehouse is where data gets cleaned, enriched, and turned into analytics, but it isn’t built for low-latency app interactions or transactional workloads. That’s where Lakebase comes in, delivering trusted lakehouse data directly into the tools where it drives action, without custom pipelines.

In practice, reverse ETL typically involves four key components, all integrated into Lakebase:

- Lakehouse: Stores curated, high-quality data used to drive decisions, such as business-level aggregate tables (aka “gold tables”), engineered features, and ML inference outputs.

- Syncing pipelines: Move relevant data into operational stores with scheduling, freshness guarantees, and monitoring.

- Operational database: Optimized for high concurrency, low latency, and ACID transactions.

- Applications: The final destination where insights become action, whether in customer-facing applications, internal tools, APIs, or dashboards.
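To make the four components concrete, here is a toy sketch that walks one batch of rows through them. Python's built-in `sqlite3` stands in for the operational Postgres database so the example runs anywhere. The table name `customer_scores`, the sample rows, and the helpers `sync_rows` and `lookup_score` are hypothetical illustrations, not Lakebase APIs.

```python
import sqlite3

# Lakehouse: curated "gold" rows, e.g. ML inference outputs (hypothetical data).
GOLD_ROWS = [
    {"customer_id": 1, "churn_score": 0.82, "next_best_action": "offer_discount"},
    {"customer_id": 2, "churn_score": 0.11, "next_best_action": "none"},
]


def create_operational_db() -> sqlite3.Connection:
    """Operational database: an in-memory SQLite stand-in for Postgres."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customer_scores ("
        " customer_id INTEGER PRIMARY KEY,"
        " churn_score REAL NOT NULL,"
        " next_best_action TEXT NOT NULL)"
    )
    return conn


def sync_rows(conn: sqlite3.Connection, rows: list) -> int:
    """Syncing pipeline: upsert each source row, matched on the primary key."""
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT INTO customer_scores (customer_id, churn_score, next_best_action)"
            " VALUES (:customer_id, :churn_score, :next_best_action)"
            " ON CONFLICT(customer_id) DO UPDATE SET"
            " churn_score = excluded.churn_score,"
            " next_best_action = excluded.next_best_action",
            rows,
        )
    return len(rows)


def lookup_score(conn: sqlite3.Connection, customer_id: int):
    """Application: a low-latency point lookup by primary key."""
    return conn.execute(
        "SELECT churn_score, next_best_action FROM customer_scores"
        " WHERE customer_id = ?",
        (customer_id,),
    ).fetchone()


conn = create_operational_db()
sync_rows(conn, GOLD_ROWS)
print(lookup_score(conn, 1))  # (0.82, 'offer_discount')
```

Upserting on a primary key makes repeated syncs idempotent. Re-running the sync with fresher rows updates them in place rather than duplicating them.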

Challenges of reverse ETL today

Reverse ETL looks simple, but in practice most teams run into the same challenges:

- Brittle, custom-built ETL pipelines: These pipelines often require streaming infrastructure, schema management, error handling, and orchestration. They’re brittle and resource-intensive to maintain.

- Multiple, disconnected systems: Separate stacks for analytics and operations mean more infrastructure to manage, more authentication layers, and more chances for format mismatches.

- Inconsistent governance models: Analytical and operational systems usually live in different policy domains, making it hard to apply consistent quality control and audit policies.

These challenges create friction for both developers and the business, slowing down efforts to reliably activate data and deliver intelligent, real-time applications.

Lakebase: Integrated by default for easy reverse ETL

Lakebase removes these barriers and turns reverse ETL into a fully managed, integrated workflow. It combines a high-performance Postgres engine, deep lakehouse integration, and built-in data synchronization so that fresh insights flow into applications without extra infrastructure.

These Lakebase capabilities are especially valuable for reverse ETL:

- Deep lakehouse integration: Sync data from lakehouse tables to Lakebase on a snapshot, scheduled, or continuous basis, without building or managing external ETL jobs. This replaces the complexity of custom pipelines, retries, and monitoring with a native, managed experience.

- Fully managed Postgres: Built on open source Postgres, Lakebase supports ACID transactions, indexes, joins, and extensions such as PostGIS and pgvector. You can connect with existing drivers and tools like pgAdmin or JDBC, avoiding the need to learn new database technologies or maintain separate OLTP infrastructure.

- Scalable, resilient architecture: Lakebase separates compute and storage for independent scaling, delivering sub-10 ms query latency and thousands of QPS. Enterprise-grade features include multi-AZ high availability, point-in-time recovery, and encrypted storage, removing the scaling and resiliency challenges of self-managed databases.

- Integrated security and governance: Register Lakebase with Unity Catalog to bring operational data into your centralized governance framework, covering audit trails and permissions at the catalog level. Access over the Postgres protocol still uses native Postgres roles and permissions, preserving true transactional security while fitting into your broader Databricks governance model.

- Cloud-agnostic architecture: Deploy Lakebase alongside your lakehouse in your preferred cloud environment without re-architecting your workflows.
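The practical difference between a snapshot sync and an incremental (scheduled or continuous) sync can be modeled in a few lines. This is a conceptual sketch only; it does not describe how Lakebase implements syncing internally. The `(op, id, value)` change records are a hypothetical simplification of a change feed, and `sqlite3` again stands in for Postgres.

```python
import sqlite3


def make_target() -> sqlite3.Connection:
    """Create an in-memory target table keyed on a primary key."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE target (id INTEGER PRIMARY KEY, value TEXT NOT NULL)")
    return conn


def snapshot_sync(conn, source_rows):
    """Snapshot mode: replace the whole target with the current source state."""
    with conn:  # the delete and reload commit (or roll back) together
        conn.execute("DELETE FROM target")
        conn.executemany("INSERT INTO target (id, value) VALUES (?, ?)", source_rows)


def incremental_sync(conn, changes):
    """Incremental mode: apply only rows that changed since the last sync.

    Each change is a hypothetical (op, id, value) tuple, op being 'upsert' or 'delete'.
    """
    with conn:  # an invalid change rolls back the whole batch
        for op, row_id, value in changes:
            if op == "upsert":
                conn.execute(
                    "INSERT INTO target (id, value) VALUES (?, ?)"
                    " ON CONFLICT(id) DO UPDATE SET value = excluded.value",
                    (row_id, value),
                )
            elif op == "delete":
                conn.execute("DELETE FROM target WHERE id = ?", (row_id,))
            else:
                raise ValueError(f"unknown op: {op!r}")


def contents(conn):
    return conn.execute("SELECT id, value FROM target ORDER BY id").fetchall()


conn = make_target()
snapshot_sync(conn, [(1, "a"), (2, "b"), (3, "c")])
incremental_sync(conn, [("upsert", 2, "b2"), ("delete", 3, None), ("upsert", 4, "d")])
print(contents(conn))  # [(1, 'a'), (2, 'b2'), (4, 'd')]
```

A snapshot is the simplest model but rewrites every row on each run. Incremental modes touch only the keys that changed, which is why they depend on a primary key to match source rows to target rows.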

With these capabilities in the Databricks Data Intelligence Platform, Lakebase replaces the fragmented reverse ETL setup that relies on custom pipelines, standalone OLTP systems, and separate governance. It delivers an integrated, high-performance, and secure service, so analytical insights flow into applications faster, with less operational effort, and with governance preserved.

Sample Use Case: Building an Intelligent Support Portal with Lakebase

As a practical example, let’s walk through how to build an intelligent support portal powered by Lakebase. This interactive portal helps support teams triage incoming incidents using ML-driven insights from the lakehouse, such as predicted escalation risk and recommended actions. It also lets users assign ownership, track status, and leave comments on each ticket.

Lakebase makes this possible by syncing predictions into Postgres while also storing updates from the app. The result is a support portal that combines analytics with live operations. The same approach applies to many other use cases, including personalization engines and ML-driven dashboards.

Step 1: Sync Predictions from the Lakehouse to Lakebase

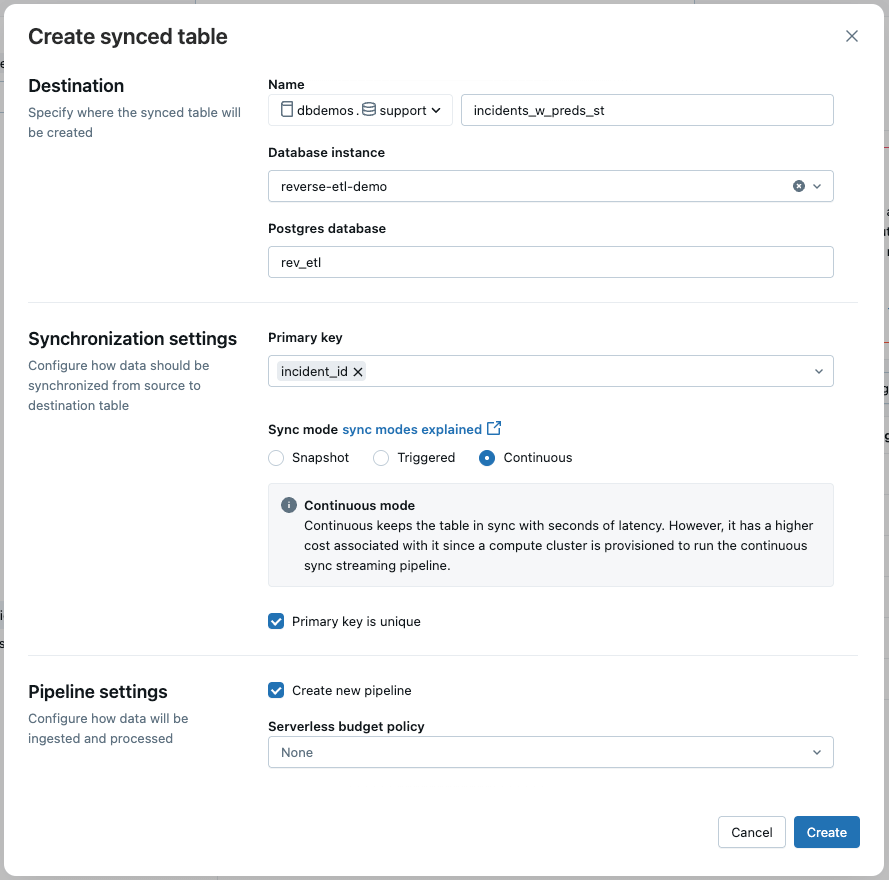

The incident data, enriched with ML predictions, lives in a Delta table and is updated in near real time by a streaming pipeline. To power the support app, we use Lakebase reverse ETL to continuously sync this Delta table to a Postgres table.

In the UI, we select:

- Sync Mode: Continuous, for low-latency updates

- Primary Key: incident_id

This ensures the app reflects the latest data with minimal delay.

Note: You can also create the sync pipeline programmatically using the Databricks SDK.
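Rows are matched on the selected primary key, so it can help to confirm before creating the sync that `incident_id` is non-null and unique in the source. The sketch below assumes such a requirement, as primary-key-based upserts generally have one. It is a hypothetical pre-flight check over plain Python dicts. In practice you would run an equivalent aggregate query against the Delta table, and the function name and sample rows are illustrative only.

```python
from collections import Counter
from typing import Iterable, Mapping


def check_primary_key(rows: Iterable[Mapping], key: str = "incident_id") -> dict:
    """Report null and duplicate values of `key` across the source rows."""
    values = [row.get(key) for row in rows]
    nulls = sum(1 for v in values if v is None)
    counts = Counter(v for v in values if v is not None)
    duplicates = sorted(v for v, n in counts.items() if n > 1)
    return {
        "rows": len(values),
        "nulls": nulls,
        "duplicates": duplicates,
        "ok": nulls == 0 and not duplicates,  # safe to use as a sync key
    }


# Hypothetical source rows with one duplicate key and one missing key.
sample = [
    {"incident_id": "INC-1", "escalation_risk": 0.91},
    {"incident_id": "INC-2", "escalation_risk": 0.12},
    {"incident_id": "INC-2", "escalation_risk": 0.15},
    {"incident_id": None, "escalation_risk": 0.40},
]
report = check_primary_key(sample)
print(report)  # {'rows': 4, 'nulls': 1, 'duplicates': ['INC-2'], 'ok': False}
```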

Step 2: Create a State Table for User Inputs

The support app also needs a table to store user-entered data such as ownership, status, and comments. Because this data is written by the app, it should go into a separate table in Lakebase rather than into the synced table.

Here’s the schema:

This design keeps reverse ETL unidirectional (Lakehouse → Lakebase) while still allowing interactive updates through the app.
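One possible shape for such app-owned state is sketched below. It uses hypothetical column names rather than the portal's actual schema. Owner and status live in one row per incident keyed by the same `incident_id`, and comments go into an append-only table. The names `incident_state`, `incident_comments`, `owner`, `status`, and the allowed status values are all assumptions, and `sqlite3` stands in for Lakebase's Postgres so the example runs anywhere.

```python
import sqlite3
from datetime import datetime, timezone

ALLOWED_STATUSES = ("open", "in_progress", "resolved")  # hypothetical values

SCHEMA = """
-- App-owned state, one row per incident (hypothetical columns).
CREATE TABLE incident_state (
    incident_id TEXT PRIMARY KEY,
    owner       TEXT,
    status      TEXT NOT NULL DEFAULT 'open'
                CHECK (status IN ('open', 'in_progress', 'resolved')),
    updated_at  TEXT NOT NULL
);
-- Append-only comment log (hypothetical).
CREATE TABLE incident_comments (
    comment_id  INTEGER PRIMARY KEY AUTOINCREMENT,
    incident_id TEXT NOT NULL,
    author      TEXT NOT NULL,
    body        TEXT NOT NULL,
    created_at  TEXT NOT NULL
);
"""


def now_iso() -> str:
    return datetime.now(timezone.utc).isoformat()


def set_state(conn, incident_id, owner, status):
    """Upsert owner/status for an incident; the synced table is never written."""
    if status not in ALLOWED_STATUSES:
        raise ValueError(f"invalid status: {status!r}")
    with conn:
        conn.execute(
            "INSERT INTO incident_state (incident_id, owner, status, updated_at)"
            " VALUES (?, ?, ?, ?)"
            " ON CONFLICT(incident_id) DO UPDATE SET"
            " owner = excluded.owner, status = excluded.status,"
            " updated_at = excluded.updated_at",
            (incident_id, owner, status, now_iso()),
        )


def add_comment(conn, incident_id, author, body):
    """Append a comment; existing comments are never modified."""
    with conn:
        conn.execute(
            "INSERT INTO incident_comments (incident_id, author, body, created_at)"
            " VALUES (?, ?, ?, ?)",
            (incident_id, author, body, now_iso()),
        )


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
set_state(conn, "INC-1", "alice", "in_progress")
set_state(conn, "INC-1", "bob", "resolved")
add_comment(conn, "INC-1", "bob", "Root cause was a bad deploy.")
print(conn.execute("SELECT owner, status FROM incident_state").fetchall())
# [('bob', 'resolved')]
```

In Postgres you would more likely use `TIMESTAMPTZ` for the timestamps and an identity column for `comment_id`. The design point carries over unchanged: the app writes only to these tables, and the synced table stays under the control of the sync pipeline.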

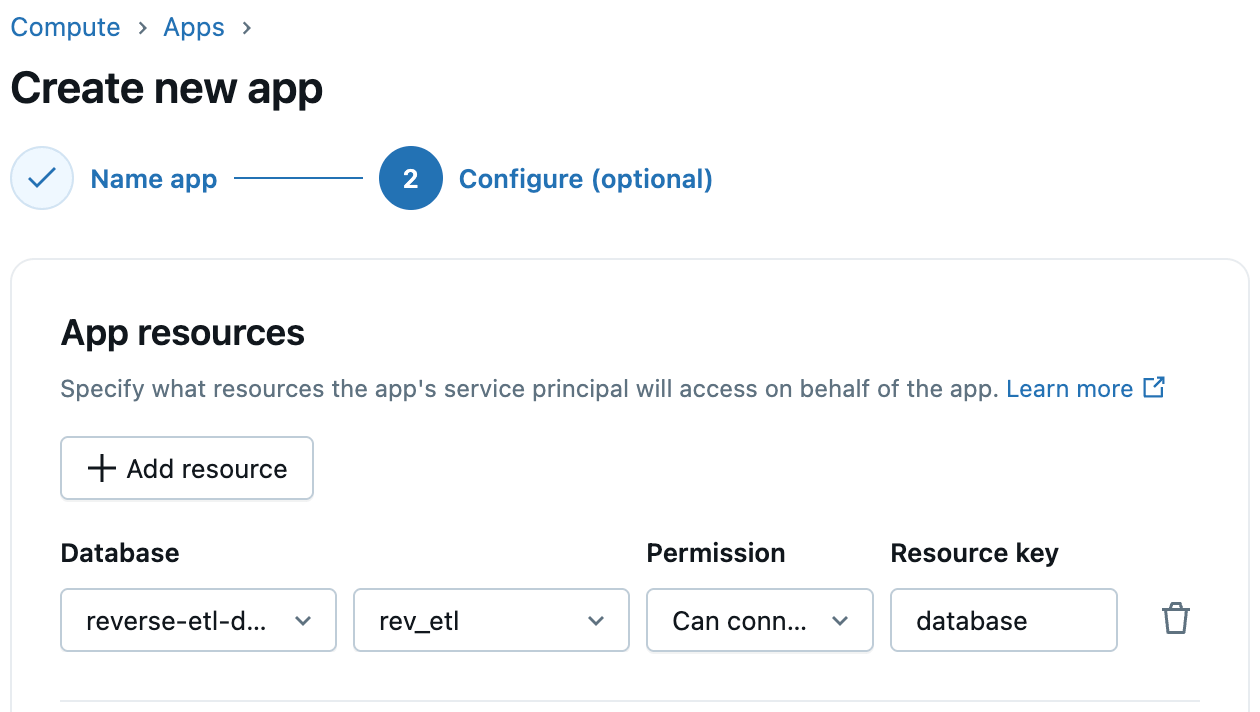

Step 3: Configure Lakebase Access in Databricks Apps

Databricks Apps support first-class integration with Lakebase. When creating your app, simply add Lakebase as an app resource and select the Lakebase instance and database. Databricks automatically provisions a corresponding Postgres role for the app’s service principal, streamlining app-to-database connectivity. You can then grant this role the required database, schema, and table permissions.
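The grants for that role might follow a least-privilege pattern like the statements generated below. The app gets read-only access to the synced predictions table and read/write access to the app-owned state tables. This is a hypothetical helper that only builds standard Postgres `GRANT` statements as strings. The role, database, schema, and table names are placeholders; substitute the role Databricks provisions for your app's service principal.

```python
def quote_ident(name: str) -> str:
    """Quote a Postgres identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def grant_statements(role, database, schema, read_tables, write_tables):
    """Build least-privilege GRANTs: SELECT on synced tables, DML on state tables."""
    r, s = quote_ident(role), quote_ident(schema)
    stmts = [
        f"GRANT CONNECT ON DATABASE {quote_ident(database)} TO {r};",
        f"GRANT USAGE ON SCHEMA {s} TO {r};",
    ]
    # Synced tables are maintained by the sync pipeline, so the app only reads them.
    for table in read_tables:
        stmts.append(f"GRANT SELECT ON TABLE {s}.{quote_ident(table)} TO {r};")
    # App-owned tables need read and write access.
    for table in write_tables:
        stmts.append(
            f"GRANT SELECT, INSERT, UPDATE ON TABLE {s}.{quote_ident(table)} TO {r};"
        )
    return stmts


# Placeholder names; replace with your app's role, database, and tables.
stmts = grant_statements(
    role="app-service-principal",
    database="support_db",
    schema="public",
    read_tables=["incidents_synced"],
    write_tables=["incident_state", "incident_comments"],
)
for stmt in stmts:
    print(stmt)
```

You would run the generated statements with a role that owns these objects, for example from `psql` or another Postgres client. Quoting identifiers matters here because service-principal role names often contain hyphens.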

Step 4: Deploy Your App Code

With your data synced and permissions in place, you can now deploy the Flask app that powers the support portal. The app connects to Lakebase over Postgres and serves a rich dashboard with charts, filters, and interactivity.
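The data-access layer behind such a dashboard can stay small. Below is a hedged sketch of one possible read path, not the portal's actual code. A parameterized query LEFT JOINs the synced predictions with the app-owned state, so incidents nobody has touched yet still appear with a default status. All table and column names (`incidents_synced`, `escalation_risk`, `recommended_action`, `incident_state`) are hypothetical. `sqlite3` stands in for Postgres, and with a Postgres driver such as psycopg the placeholders would be `%s` instead of `?`.

```python
import sqlite3

DDL = """
CREATE TABLE incidents_synced (   -- maintained by the sync; read-only to the app
    incident_id TEXT PRIMARY KEY,
    title TEXT NOT NULL,
    escalation_risk REAL NOT NULL,
    recommended_action TEXT
);
CREATE TABLE incident_state (     -- written by the app
    incident_id TEXT PRIMARY KEY,
    owner TEXT,
    status TEXT NOT NULL
);
"""

BASE_QUERY = """
SELECT i.incident_id, i.title, i.escalation_risk, i.recommended_action,
       s.owner, COALESCE(s.status, 'open') AS status
FROM incidents_synced AS i
LEFT JOIN incident_state AS s ON s.incident_id = i.incident_id
WHERE i.escalation_risk >= ?
"""


def list_incidents(conn, min_risk=0.0, status=None, limit=50):
    """Return dashboard rows as dicts, highest predicted escalation risk first."""
    sql, params = BASE_QUERY, [min_risk]
    if status is not None:  # only fixed SQL text is appended; values stay parameters
        sql += " AND COALESCE(s.status, 'open') = ?"
        params.append(status)
    sql += " ORDER BY i.escalation_risk DESC LIMIT ?"
    params.append(limit)
    cur = conn.execute(sql, params)
    cols = [d[0] for d in cur.description]  # DB-API column names
    return [dict(zip(cols, row)) for row in cur.fetchall()]


conn = sqlite3.connect(":memory:")
conn.executescript(DDL)
conn.executemany(
    "INSERT INTO incidents_synced VALUES (?, ?, ?, ?)",
    [
        ("INC-1", "Checkout errors", 0.91, "page_oncall"),
        ("INC-2", "Slow search", 0.35, "monitor"),
        ("INC-3", "Login failures", 0.78, "escalate_tier2"),
    ],
)
conn.execute("INSERT INTO incident_state VALUES ('INC-3', 'alice', 'in_progress')")
for row in list_incidents(conn, min_risk=0.5):
    print(row["incident_id"], row["status"], row["owner"])
# INC-1 open None
# INC-3 in_progress alice
```

A Flask route would call a function like `list_incidents` with filter values taken from the request. The state-table updates from the previous steps would feed back into the same join on the next page load.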

Conclusion

Bringing analytical insights into operational applications no longer has to be a complex, brittle process. With Lakebase, reverse ETL becomes a fully managed, integrated capability. It combines the performance of a Postgres engine, the reliability of a scalable architecture, and the governance of the Databricks Platform.

Whether you’re powering an intelligent support portal or building other real-time, data-driven experiences, Lakebase reduces engineering overhead and speeds up the path from insight to action.

To learn more about how to create synced tables in Lakebase, check out our documentation and get started today.