Large language models are often refined after pretraining using either supervised fine-tuning (SFT) or reinforcement fine-tuning (RFT), each with distinct strengths and limitations. SFT is effective at teaching instruction-following through example-based learning, but it can lead to rigid behavior and poor generalization. RFT, on the other hand, optimizes models for task success using reward signals, which can improve performance but also introduces instability and a reliance on a strong starting policy. Although these methods are often applied sequentially, their interaction remains poorly understood. This raises an important question: how can we design a unified framework that combines SFT's structure with RFT's goal-driven learning?

Research at the intersection of RL and LLM post-training has gained momentum, particularly for training reasoning-capable models. Offline RL, which learns from fixed datasets, often yields suboptimal policies because of the limited diversity of the data. This has sparked interest in combining offline and online RL approaches to improve performance. In LLMs, the dominant strategy is to first apply SFT to teach desirable behaviors, then use RFT to optimize outcomes. However, the dynamics between SFT and RFT are still not well understood, and finding effective ways to integrate them remains an open research challenge.

Researchers from the University of Edinburgh, Fudan University, Alibaba Group, StepFun, and the University of Amsterdam propose a unified framework that combines supervised and reinforcement fine-tuning in an approach called Prefix-RFT. The method guides exploration with partial demonstrations, letting the model continue generating solutions flexibly. On math reasoning tasks, Prefix-RFT consistently outperforms standalone SFT, RFT, and mixed-policy methods. It integrates easily into existing frameworks and proves robust to changes in demonstration quality and quantity. The results suggest that blending demonstration-based learning with exploration can lead to more effective and adaptive training of large language models.

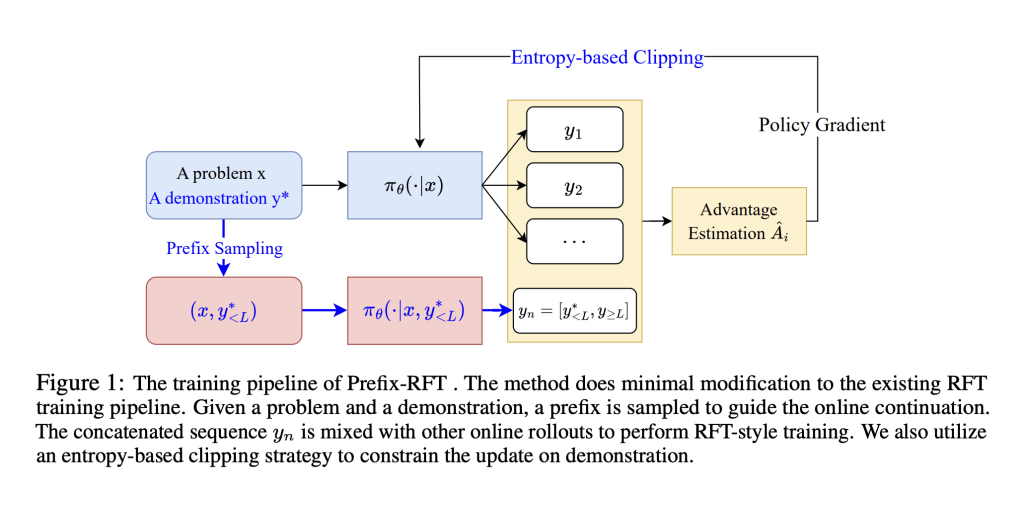

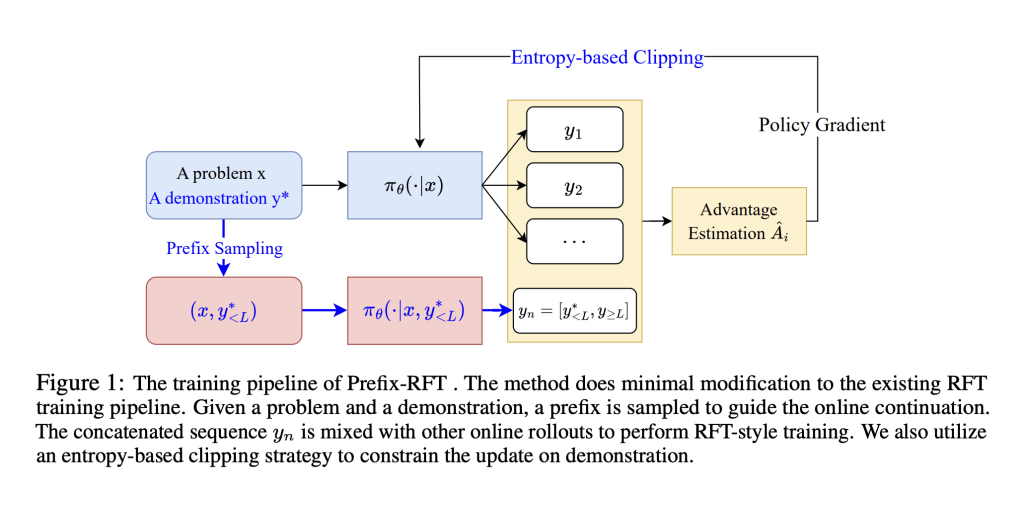

The study presents Prefix Reinforcement Fine-Tuning (Prefix-RFT) as a way to combine the strengths of SFT and RFT. SFT offers stability by imitating expert demonstrations, while RFT encourages exploration through reward signals. Prefix-RFT bridges the two by feeding the model a partial demonstration (a prefix) and letting it generate the rest. This guides learning without relying too heavily on full supervision. The method also uses entropy-based clipping and a cosine decay scheduler to keep training stable and efficient. Compared with prior methods, Prefix-RFT offers a more balanced and adaptive fine-tuning strategy.
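The prefix mechanism can be illustrated with a minimal Python sketch. It assumes token IDs are plain integer lists, that a `generate` callable stands in for the policy's sampler, and that the prefix is a deterministic cut at the current ratio. The paper may sample prefix lengths differently. `PrefixRollout`, `cosine_prefix_ratio`, and `build_prefix_rollout` are hypothetical names, not the authors' code. The cosine form below is an assumption that simply interpolates between the 95% and 5% endpoints reported for training.

```python
import math
from dataclasses import dataclass
from typing import Callable, List

Tokens = List[int]


@dataclass
class PrefixRollout:
    """Hypothetical container: demonstration prefix plus model continuation."""
    prompt: Tokens
    prefix: Tokens        # copied from the expert demonstration (off-policy)
    continuation: Tokens  # sampled from the current policy (on-policy)

    def demo_mask(self) -> List[bool]:
        # True marks demonstration tokens, False marks model-generated tokens.
        return [True] * len(self.prefix) + [False] * len(self.continuation)


def cosine_prefix_ratio(step: int, total_steps: int,
                        start: float = 0.95, end: float = 0.05) -> float:
    """Assumed cosine decay of the prefix fraction from `start` to `end`."""
    if total_steps <= 0:
        return end
    progress = min(max(step / total_steps, 0.0), 1.0)
    return end + 0.5 * (start - end) * (1.0 + math.cos(math.pi * progress))


def build_prefix_rollout(prompt: Tokens, demonstration: Tokens, ratio: float,
                         generate: Callable[[Tokens], Tokens]) -> PrefixRollout:
    """Keep the first `ratio` share of the demonstration; the policy writes the rest."""
    cut = int(min(max(ratio, 0.0), 1.0) * len(demonstration))
    prefix = demonstration[:cut]
    continuation = generate(prompt + prefix)
    return PrefixRollout(prompt, prefix, continuation)


if __name__ == "__main__":
    # Toy "policy" that appends two tokens; a real setup would sample from the LLM.
    toy_generate = lambda ids: [ids[-1] + 1, ids[-1] + 2]
    demo = [10, 11, 12, 13, 14, 15, 16, 17, 18, 19]
    for step in (0, 500, 1000):
        r = cosine_prefix_ratio(step, total_steps=1000)
        rollout = build_prefix_rollout([1, 2], demo, r, toy_generate)
        print(step, round(r, 3), rollout.prefix, rollout.continuation)
```

Early in training the model sees almost the whole demonstration and only completes the tail. Late in training it sees little or none and must explore on its own. The `demo_mask` output is what a loss function would use to treat imitated and generated tokens differently.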

Prefix-RFT is a reinforcement fine-tuning method that improves performance using high-quality offline math datasets, such as OpenR1-Math-220K (46k filtered problems). It was tested on Qwen2.5-Math-7B, Qwen2.5-Math-1.5B, and LLaMA-3.1-8B and evaluated on benchmarks including AIME 2024/25, AMC, MATH500, Minerva, and OlympiadBench. Prefix-RFT achieved the highest avg@32 and pass@1 scores across tasks, outperforming RFT, SFT, ReLIFT, and LUFFY. Training used Dr. GRPO and updated only the top 20% highest-entropy prefix tokens, while the prefix length decayed from 95% to 5%. Its SFT loss stayed at an intermediate level, indicating a good balance between imitation and exploration, particularly on difficult problems (Trainhard).
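Selecting which prefix tokens receive gradient can be sketched as follows. This sketch assumes per-token predictive distributions are available as probability lists and that model-generated tokens keep their normal update. The 20% fraction comes from the article. `token_entropy`, `high_entropy_update_mask`, and `apply_mask` are illustrative names, and this is not the authors' Dr. GRPO implementation.

```python
import math
from typing import List, Sequence


def token_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of one token's predictive distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def high_entropy_update_mask(entropies: Sequence[float],
                             is_prefix: Sequence[bool],
                             top_frac: float = 0.2) -> List[bool]:
    """Keep only the top `top_frac` highest-entropy prefix tokens for updates.

    Assumption: model-generated (non-prefix) tokens are always updated.
    """
    mask = [True] * len(entropies)
    prefix_idx = [i for i, flag in enumerate(is_prefix) if flag]
    if not prefix_idx:
        return mask
    k = max(1, math.ceil(top_frac * len(prefix_idx)))
    # Rank prefix positions by entropy, highest first.
    ranked = sorted(prefix_idx, key=lambda i: entropies[i], reverse=True)
    keep = set(ranked[:k])
    for i in prefix_idx:
        mask[i] = i in keep
    return mask


def apply_mask(per_token_loss: Sequence[float], mask: Sequence[bool]) -> List[float]:
    """Zero out loss terms for tokens excluded from the update."""
    return [loss if m else 0.0 for loss, m in zip(per_token_loss, mask)]


if __name__ == "__main__":
    # Five demonstration-prefix tokens followed by two model-generated tokens.
    dists = [[0.97, 0.01, 0.01, 0.01], [0.25, 0.25, 0.25, 0.25],
             [0.9, 0.05, 0.05, 0.0], [0.6, 0.2, 0.1, 0.1],
             [0.99, 0.01, 0.0, 0.0], [0.5, 0.5, 0.0, 0.0], [0.8, 0.2, 0.0, 0.0]]
    is_prefix = [True] * 5 + [False] * 2
    ents = [token_entropy(d) for d in dists]
    print(high_entropy_update_mask(ents, is_prefix))
    # [False, True, False, False, False, True, True]
```

The intuition behind this choice is that low-entropy demonstration tokens are ones the model already predicts confidently, so imitating them adds little signal. Restricting updates to the uncertain "fork" tokens keeps the off-policy prefix from dominating the gradient.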

In conclusion, Prefix-RFT combines the strengths of SFT and RFT by using sampled demonstration prefixes to guide learning. Despite its simplicity, it consistently outperforms SFT, RFT, and hybrid baselines across various models and datasets. Even with only 1% of the training data (450 prompts), it maintains strong performance (avg@32 drops only from 40.8 to 37.6), demonstrating data efficiency and robustness. Its top-20% entropy-based token update strategy proves the most effective, achieving the highest benchmark scores with shorter outputs. Moreover, a cosine decay scheduler for prefix length improves stability and learning dynamics compared with a uniform strategy, particularly on complex tasks such as AIME.
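The avg@32 figures can be read with a short helper. This assumes avg@k means per-problem accuracy over k sampled answers, averaged across problems. That is a common convention, but the paper's exact evaluation script may differ. The same snippet works through the data-efficiency arithmetic: the drop from 40.8 to 37.6 is 3.2 points, about 7.8% relative to the full-data score. `avg_at_k` and `relative_drop` are illustrative names.

```python
from typing import Sequence


def avg_at_k(correct: Sequence[Sequence[bool]]) -> float:
    """Per-problem accuracy over k samples, averaged over problems, in percent."""
    if not correct:
        raise ValueError("need at least one problem")
    per_problem = [sum(samples) / len(samples) for samples in correct]
    return 100.0 * sum(per_problem) / len(per_problem)


def relative_drop(full: float, reduced: float) -> float:
    """Fractional decrease from `full` to `reduced`."""
    return (full - reduced) / full


if __name__ == "__main__":
    # Two toy problems with k = 4 samples each: 3/4 and 0/4 correct.
    print(avg_at_k([[True, False, True, True], [False] * 4]))  # 37.5
    # Full-data vs. 1%-data avg@32 reported in the article.
    print(f"{relative_drop(40.8, 37.6):.1%}")  # 7.8%
```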
