The ERNIE open-source model family has been dormant for a while, but it is here to make the wait worthwhile. This latest release came out quietly, yet it is poised to make a big impact. With a “Thinking with Images” mode in a model that uses only 3B active parameters, a lot is on offer. This article serves as a guide to ERNIE-4.5-VL, testing it against the performance claims made at its release.

What’s ERNIE-4.5-VL?

ERNIE-4.5-VL-28B-A3B-Thinking might be the longest model name in history, but what it offers more than makes up for it. Built upon the powerful ERNIE-4.5-VL-28B-A3B architecture, it’s a leap forward in multimodal reasoning capabilities. With a mere 3B active parameters, it claims better performance than Gemini-2.5-Pro and GPT-5-High across various benchmarks in document and chart understanding. But that isn’t all! The most interesting part of this reveal is its “Thinking with Images” feature, which allows zooming in and out of images to capture finer details.

How to Access?

The easiest way to access the model is by using it on HuggingFace Spaces.

With the transformers library, you can also load the model locally using the usual boilerplate code.
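
Here is a minimal sketch of what that boilerplate might look like. The model ID is taken from the name used in this article, and the message layout (a list of typed content parts) is an assumption modeled on the common multimodal chat format, so check both against the model card before relying on them. The helper names `build_messages` and `load_model_and_processor` are hypothetical. The preprocessing and generation steps are left out on purpose, since they depend on the custom code shipped with the model.

```python
from typing import Any

# Model ID as named in this article; confirm it on the Hugging Face Hub.
MODEL_ID = "baidu/ERNIE-4.5-VL-28B-A3B-Thinking"


def build_messages(question: str, image_url: str) -> list[dict[str, Any]]:
    """Build a single-turn chat payload with one image and one question.

    Assumption: the model accepts the widely used "typed content parts"
    layout. The model card may prescribe a different structure.
    """
    return [
        {
            "role": "user",
            "content": [
                {"type": "image_url", "image_url": {"url": image_url}},
                {"type": "text", "text": question},
            ],
        }
    ]


def load_model_and_processor(model_id: str = MODEL_ID):
    """Load the model and its processor (downloads the weights on first use)."""
    # Imported lazily so build_messages works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoProcessor

    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        device_map="auto",       # spreads layers across devices; needs accelerate
        torch_dtype="auto",      # use the dtype stored in the checkpoint
        trust_remote_code=True,  # assumption: the repo ships custom model code
    )
    processor = AutoProcessor.from_pretrained(model_id, trust_remote_code=True)
    return model, processor


if __name__ == "__main__":
    # Only builds the payload; loading a 28B-parameter model is left to the reader.
    messages = build_messages(
        "How many fingers are there in the image?",
        "https://example.com/hand.png",  # placeholder URL
    )
    print(messages[0]["content"][1]["text"])
```

Although only 3B parameters are active per token, all 28B have to be loaded, so plan memory accordingly.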

Let’s Try ERNIE 4.5

To see how well ERNIE-4.5…Thinking performs against its contemporaries, we put it to the test on vision tasks against Gemini-2.5-Pro. We’ll be testing the two across the following tasks:

- Object Detection

- Dense Image Understanding

These two tasks were chosen because they have posed a high level of difficulty for earlier models. I’ll be testing the model through the HuggingFace Spaces interface: https://huggingface.co/spaces/baidu/ERNIE-4.5-VL-28B-A3B-Thinking

Object Detection

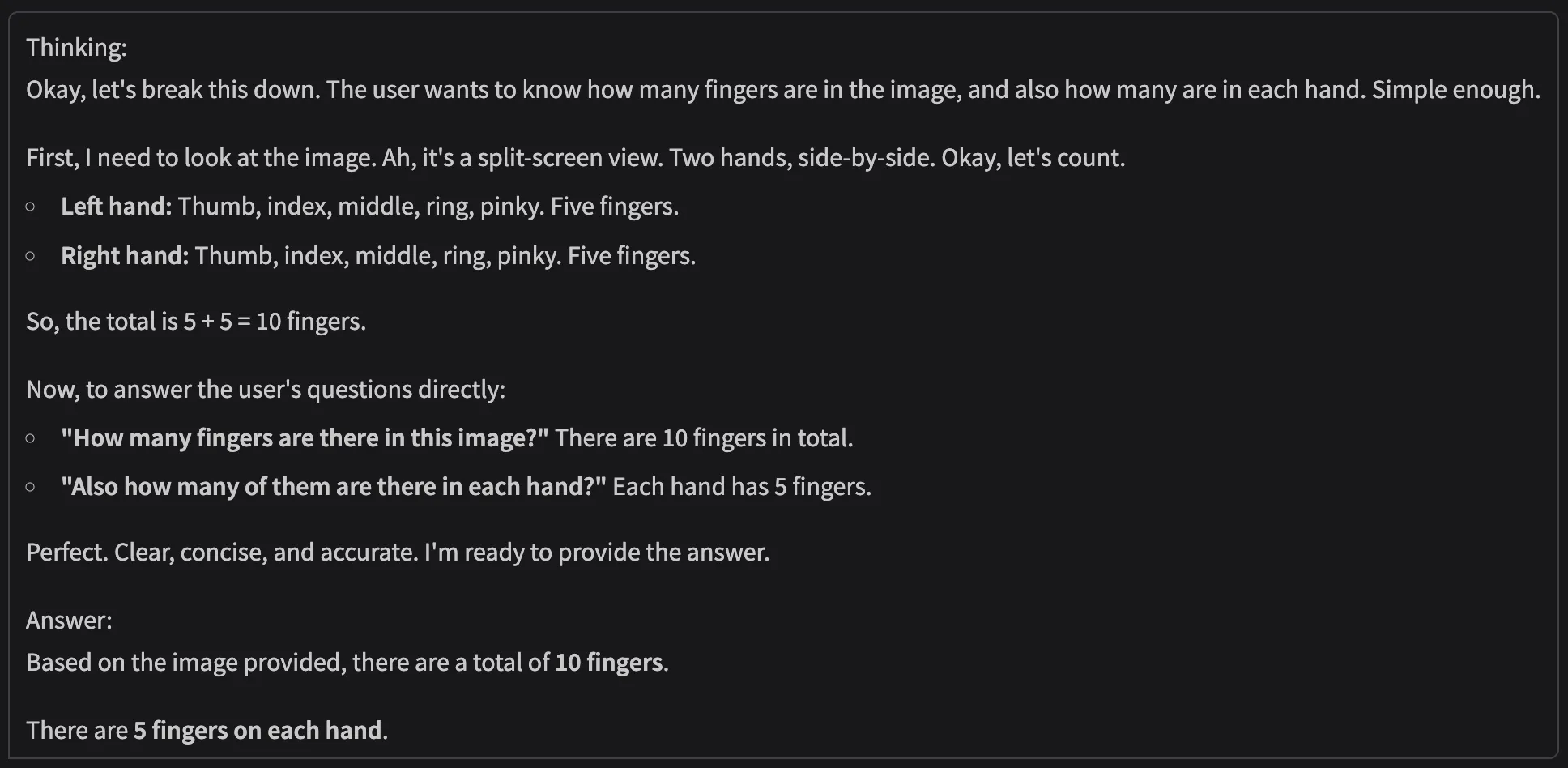

For this task, I’ll be using the notorious finger problem. To date, models struggle to answer the simplest of questions:

Query: “How many fingers are there in the image?”

Response:

Review: Wrong response! Looking at the model’s thinking, it’s as if the model didn’t even consider the possibility of a human hand having more than 5 fingers. That assumption may be the best-case scenario under most circumstances, but for people with more than 5 fingers, it would be biased and wrong. I was wondering how well Gemini-2.5-Pro performs on the same task, so I put it to the test:

Even it failed to answer this elusive question: how many fingers are there?

Dense Image Understanding

For this task, I’ll be using a heavy and dense image (12528 × 8352 pixels and over 7 megabytes in size) packed with details about money across different parts of the world. Models tend to struggle with images that are this jam-packed.
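
To put those dimensions in perspective, here is a small back-of-the-envelope sketch. It assumes the image decodes to 8-bit RGB (3 bytes per pixel), which is an assumption and not something stated about the test image; the function names are illustrative.

```python
def pixel_count(width: int, height: int) -> int:
    """Total number of pixels in an image of the given dimensions."""
    return width * height


def raw_rgb_bytes(width: int, height: int, channels: int = 3) -> int:
    """Uncompressed size in bytes, assuming one byte per channel per pixel."""
    return pixel_count(width, height) * channels


WIDTH, HEIGHT = 12528, 8352  # dimensions quoted in the article

megapixels = pixel_count(WIDTH, HEIGHT) / 1e6
raw_mib = raw_rgb_bytes(WIDTH, HEIGHT) / 2**20

print(f"{megapixels:.1f} megapixels")
print(f"{raw_mib:.0f} MiB when decoded to raw RGB")
```

That works out to roughly 104.6 megapixels, so even though the file is only about 7 megabytes on disk, the decoded image is far larger than what most vision models process at native resolution.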

Query: “What can you find from this image? Give me the exact figures and details that are present there.”

Response:

Review: The model was able to recognize a lot of the dense content of the image. It was able to make out several details, although some of them were inaccurate.

The figures that were wrong could be attributed to incorrect detection during the OCR process. But the fact that it was able to process and (to some extent) understand the contents is, in and of itself, significant progress. This is especially so considering that other models like Gemini-2.5 Pro, when given the same image, outright fail to even try:

A model with 3 billion active parameters being able to eclipse Gemini-2.5 Pro. They were right!

Performance

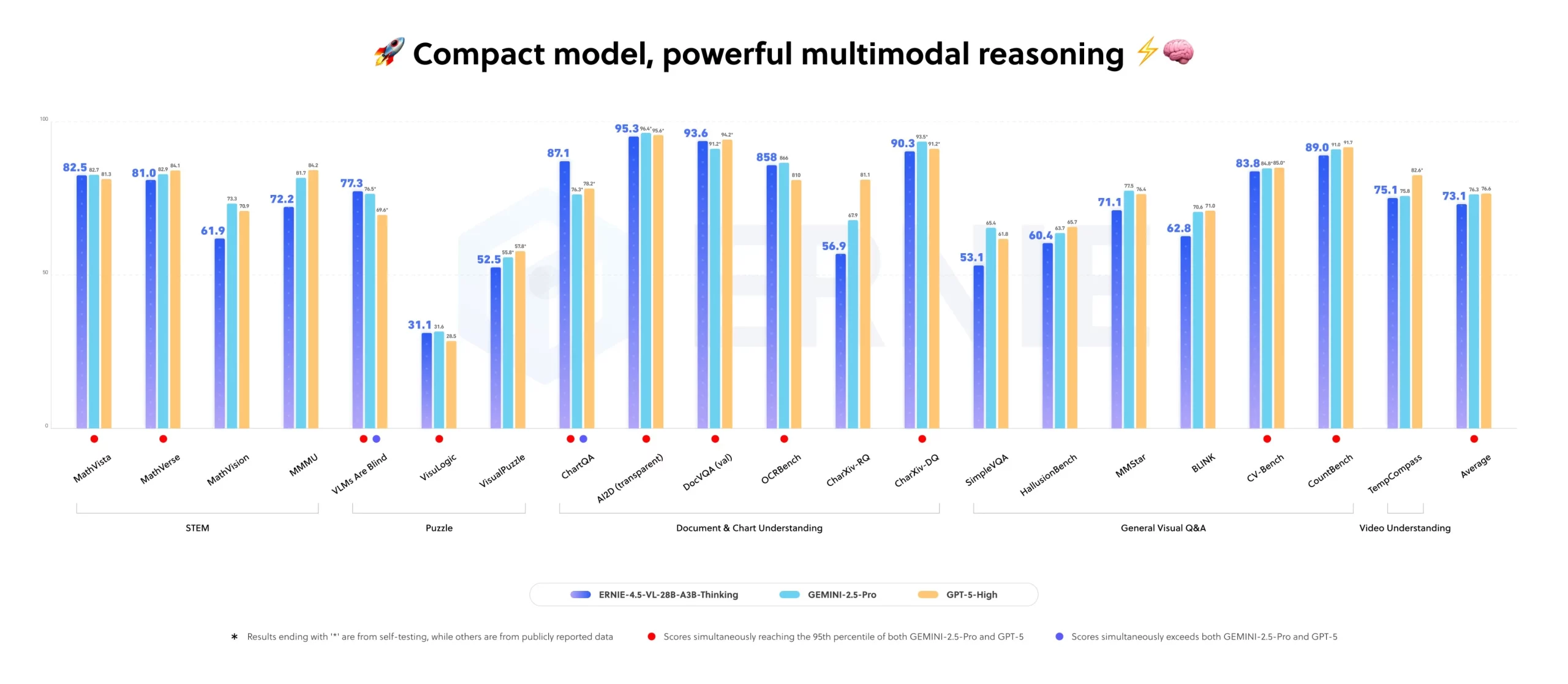

I can’t fully test the model across every dimension it could be tested on. So the official benchmark results are here to help with this:

A clear lead in ChartQA is present, which explains the company’s claim of “better performance in document and chart understanding”. That said, the illustration is a bit cryptic to follow.

Conclusion

The ERNIE folks aren’t tapping out, despite all the releases from other Chinese labs. We need diversity in LLMs, and the ERNIE models that I’ve evaluated have been quite promising. The long absence of ERNIE proved fruitful, considering the results. And there is more to follow in the upcoming days, based on Baidu’s latest tweet. The statement “More parameters doesn’t necessarily mean better models” is on display with the latest Baidu models.

Frequently Asked Questions

A. It’s Baidu’s latest multimodal model with 3B active parameters, designed for advanced reasoning across text and images, outperforming models like Gemini-2.5-Pro in document and chart understanding.

A. Its “Thinking with Images” ability allows interactive zooming within images, helping it capture fine details and outperform larger models in dense visual reasoning.

A. Not necessarily. Many researchers now believe the future lies in optimizing architectures and efficiency rather than endlessly scaling parameter counts.

A. Because bigger models are costly, slow, and energy-intensive. Smarter training and parameter-efficient methods deliver similar or better results with fewer resources.
