Pondering Machines has launched Tinker, a Python API that lets researchers and engineers write coaching loops regionally whereas the platform executes them on managed distributed GPU clusters. The pitch is slim and technical: hold full management of information, targets, and optimization steps; hand off scheduling, fault tolerance, and multi-node orchestration. The service is in non-public beta with a waitlist and begins free, transferring to usage-based pricing “within the coming weeks.”

Alright, however inform me what it’s?

Tinker exposes low-level primitives—not high-level “prepare()” wrappers. Core calls embody forward_backward, optim_step, save_state, and pattern, giving customers direct management over gradient computation, optimizer stepping, checkpointing, and analysis/inference inside customized loops. A typical workflow: instantiate a LoRA coaching consumer in opposition to a base mannequin (e.g., Llama-3.2-1B), iterate forward_backward/optim_step, persist state, then get hold of a sampling consumer to guage or export weights.

Key Options

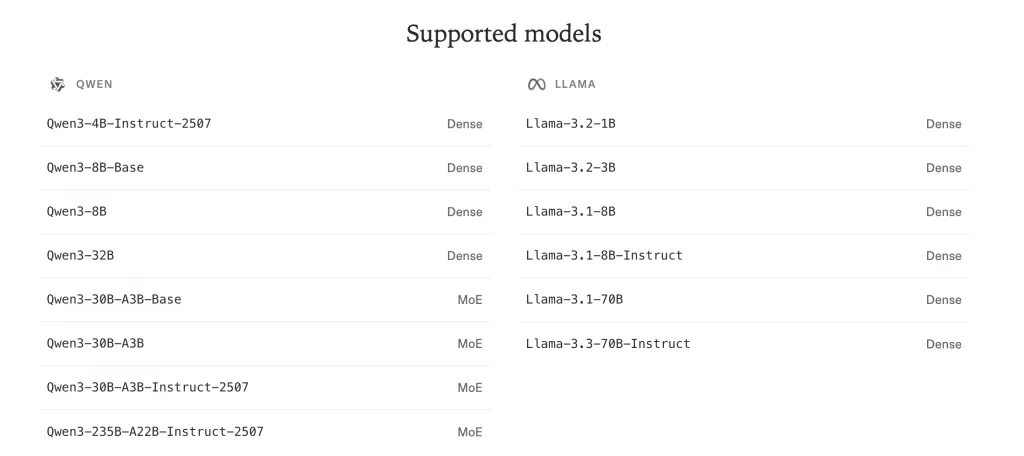

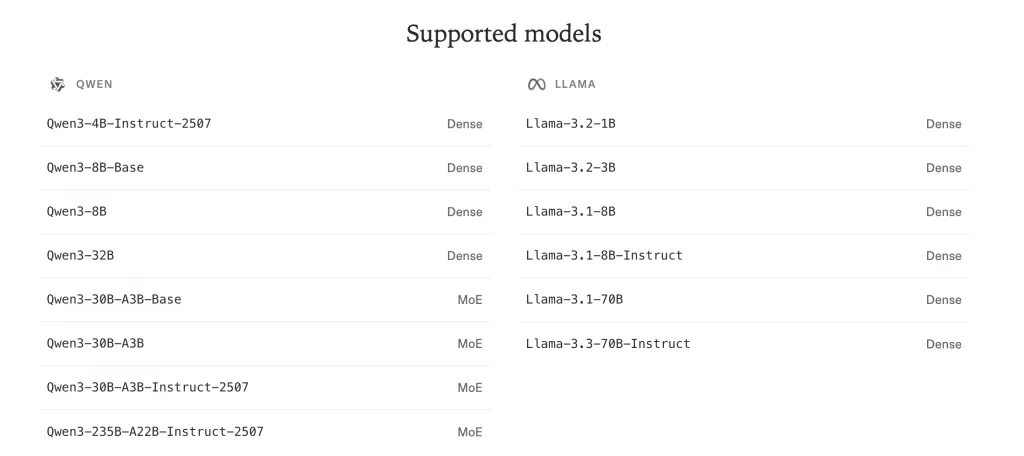

- Open-weights mannequin protection. Effective-tune households comparable to Llama and Qwen, together with giant mixture-of-experts variants (e.g., Qwen3-235B-A22B).

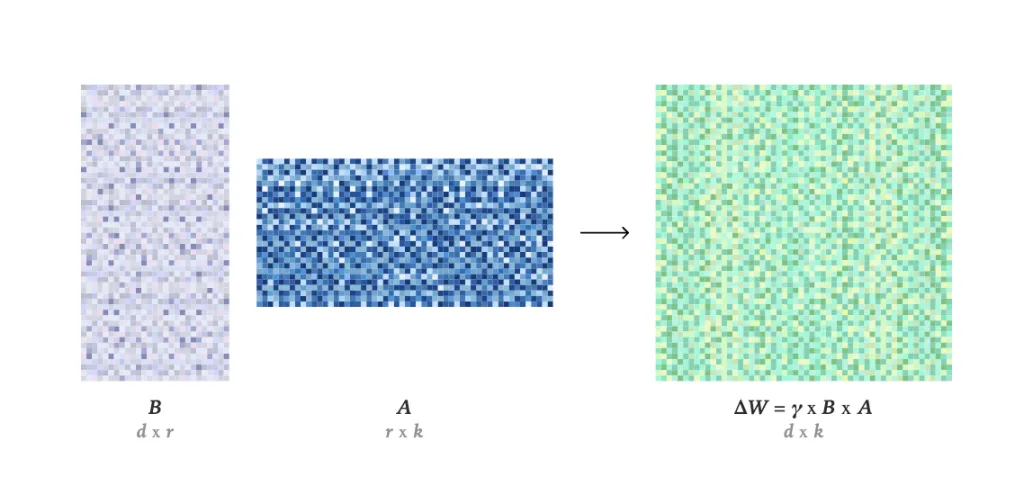

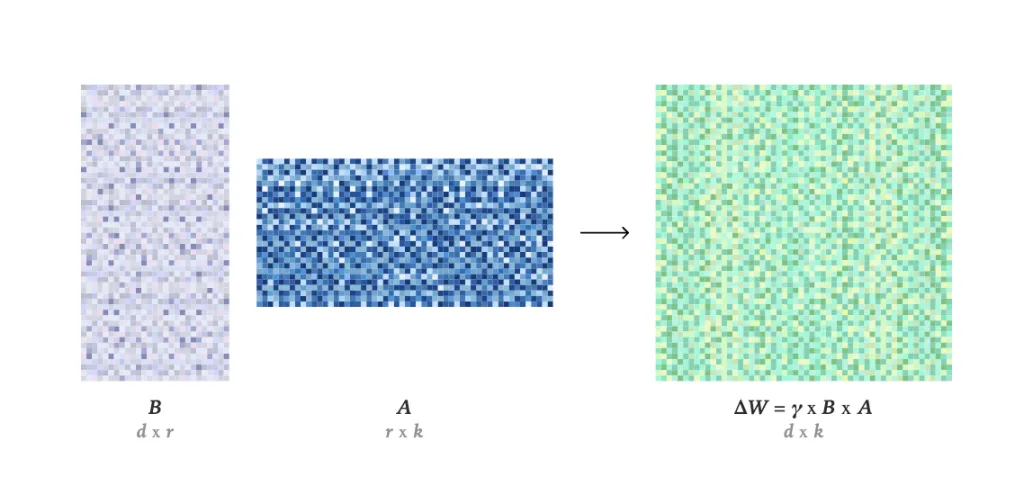

- LoRA-based post-training. Tinker implements Low-Rank Adaptation (LoRA) somewhat than full fine-tuning; their technical word (“LoRA With out Remorse”) argues LoRA can match full FT for a lot of sensible workloads—particularly RL—beneath the precise setup.

- Transportable artifacts. Obtain educated adapter weights to be used outdoors Tinker (e.g., together with your most well-liked inference stack/supplier).

What runs on it?

The Pondering Machines staff positions Tinker as a managed post-training platform for open-weights fashions from small LLMs as much as giant mixture-of-experts techniques, a very good instance could be Qwen-235B-A22B as a supported mannequin. Switching fashions is deliberately minimal—change a string identifier and rerun. Beneath the hood, runs are scheduled on Pondering Machines’ inside clusters; the LoRA strategy allows shared compute swimming pools and decrease utilization overhead.

Tinker Cookbook: Reference Coaching Loops and Put up-Coaching Recipes

To cut back boilerplate whereas conserving the core API lean, the staff revealed the Tinker Cookbook (Apache-2.0). It comprises ready-to-use reference loops for supervised studying and reinforcement studying, plus labored examples for RLHF (three-stage SFT → reward modeling → coverage RL), math-reasoning rewards, tool-use / retrieval-augmented duties, immediate distillation, and multi-agent setups. The repo additionally ships utilities for LoRA hyperparameter calculation and integrations for analysis (e.g., InspectAI).

Who’s already utilizing it?

Early customers embody teams at Princeton (Gödel prover staff), Stanford (Rotskoff Chemistry), UC Berkeley (SkyRL, async off-policy multi-agent/tool-use RL), and Redwood Analysis (RL on Qwen3-32B for management duties).

Tinker is non-public beta as of now with waitlist sign-up. The service is free to begin, with usage-based pricing deliberate shortly; organizations are requested to contact the staff straight for onboarding.

I like that Tinker exposes low-level primitives (forward_backward, optim_step, save_state, pattern) as an alternative of a monolithic prepare()—it retains goal design, reward shaping, and analysis in my management whereas offloading multi-node orchestration to their managed clusters. The LoRA-first posture is pragmatic for price and turnaround, and their very own evaluation argues LoRA can match full fine-tuning when configured appropriately, however I’d nonetheless need clear logs, deterministic seeds, and per-step telemetry to confirm reproducibility and drift. The Cookbook’s RLHF and SL reference loops are helpful beginning factors, but I’ll choose the platform on throughput stability, checkpoint portability, and guardrails for knowledge governance (PII dealing with, audit trails) throughout actual workloads.

Total I want Tinker’s open, versatile API: it lets me customise open-weight LLMs by way of specific training-loop primitives whereas the service handles distributed execution. In contrast with closed techniques, this preserves algorithmic management (losses, RLHF workflows, knowledge dealing with) and lowers the barrier for brand spanking new practitioners to experiment and iterate.

Take a look at the Technical particulars and Join our waitlist right here. In the event you’re a college or group searching for vast scale entry, contact [email protected].

Be happy to take a look at our GitHub Web page for Tutorials, Codes and Notebooks. Additionally, be happy to observe us on Twitter and don’t neglect to hitch our 100k+ ML SubReddit and Subscribe to our Publication. Wait! are you on telegram? now you possibly can be part of us on telegram as nicely.