As more organizations adopt lakehouse architectures, migrating from legacy data warehouses like Oracle to modern platforms like Databricks has become a common priority. The benefits (better scalability, performance, and cost efficiency) are clear, but the path to get there isn’t always straightforward.

In this post, I’ll share practical strategies for navigating the migration from Oracle to Databricks, including tips for avoiding common pitfalls and setting your project up for long-term success.

Understanding the key differences

Before discussing migration strategies, it’s important to understand the core differences between Oracle and Databricks, not just in technology but also in architecture.

Oracle’s relational model vs. Databricks’ lakehouse architecture

Oracle data warehouses follow a traditional relational model optimized for structured, transactional workloads. Like Oracle and other database management systems, Databricks is well suited to hosting data warehouse workloads, regardless of the data model used. The difference is that Databricks is built on a lakehouse architecture, which merges the flexibility of data lakes with the performance and reliability of data warehouses.

This shift changes how data is stored, processed, and accessed, but it also unlocks entirely new possibilities. With Databricks, organizations can:

- Support modern use cases like machine learning (ML), traditional AI, and generative AI

- Leverage the separation of storage and compute, enabling multiple teams to spin up independent warehouses while accessing the same underlying data

- Break down data silos and reduce the need for redundant ETL pipelines

SQL dialects and processing differences

Both platforms support SQL, but there are differences in syntax, built-in functions, and how queries are optimized. These differences must be addressed during the migration to ensure compatibility and performance.

Data processing and scaling

Oracle uses a row-based, vertically scaled architecture (with limited horizontal scaling via Real Application Clusters). Databricks, on the other hand, uses Apache Spark™’s distributed model, which supports both horizontal and vertical scaling across large datasets.

Databricks also works natively with Delta Lake and Apache Iceberg, table formats built on columnar storage and optimized for high-performance, large-scale analytics. These formats support features like ACID transactions, schema evolution, and time travel, which are critical for building resilient and scalable pipelines.

Pre-migration steps (common to all data warehouse migrations)

Regardless of your source system, a successful migration starts with a few critical steps:

- Inventory your environment: Begin by cataloging all database objects, dependencies, usage patterns, and ETL or data integration workflows. This provides the foundation for understanding scope and complexity.

- Analyze workflow patterns: Identify how data flows through your current system. This includes batch vs. streaming workloads, workload dependencies, and any platform-specific logic that may require redesign.

- Prioritize and phase your migration: Avoid a “big bang” approach. Instead, break your migration into manageable phases based on risk, business impact, and readiness. Collaborate with Databricks teams and certified integration partners to build a realistic, low-risk plan that aligns with your goals and timelines.

Data migration strategies

Successful data migration requires a thoughtful approach that addresses both the technical differences between platforms and the unique characteristics of your data assets. The following strategies will help you plan and execute an efficient migration process while maximizing the benefits of Databricks’ architecture.

Schema translation and optimization

Avoid copying Oracle schemas directly without rethinking their design for Databricks. For example, Oracle’s NUMBER data type supports greater precision than what Databricks’ DECIMAL type allows (maximum precision and scale of 38). In such cases, it may be more appropriate to use DOUBLE types instead of trying to retain exact matches. Because DOUBLE is a floating-point type, first confirm that approximate values are acceptable for the column.

Translating schemas thoughtfully ensures compatibility and avoids performance or data accuracy issues down the line.
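As a rough illustration of the NUMBER example above, the following sketch suggests a Databricks type for an Oracle NUMBER column. The helper name `map_oracle_number` and the INT/BIGINT thresholds are assumptions made for this example, not part of any Databricks or Oracle tool; treat the result as a starting point to review per column.

```python
from typing import Optional

# Databricks DECIMAL supports at most 38 digits of precision.
MAX_DECIMAL_PRECISION = 38


def map_oracle_number(precision: Optional[int], scale: Optional[int]) -> str:
    """Suggest a Databricks type for an Oracle NUMBER(precision, scale) column.

    Hypothetical helper: the INT/BIGINT cut-offs are illustrative choices.
    """
    # NUMBER declared without precision can hold values that do not fit in
    # DECIMAL(38, s), so fall back to DOUBLE (an approximate type).
    if precision is None:
        return "DOUBLE"
    scale = scale or 0
    # A negative scale, or a scale larger than the precision, has no DECIMAL
    # equivalent on the Databricks side.
    if precision > MAX_DECIMAL_PRECISION or scale < 0 or scale > precision:
        return "DOUBLE"
    if scale == 0:
        if precision <= 9:    # every 9-digit integer fits in a 32-bit INT
            return "INT"
        if precision <= 18:   # every 18-digit integer fits in a 64-bit BIGINT
            return "BIGINT"
    return f"DECIMAL({precision},{scale})"
```

For instance, `map_oracle_number(12, 2)` suggests `DECIMAL(12,2)`, while an unconstrained NUMBER (`map_oracle_number(None, None)`) falls back to `DOUBLE`.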

For more details, check out the Oracle to Databricks Migration Guide.

Data extraction and loading approaches

Oracle migrations often involve moving data from on-premises databases to Databricks, where bandwidth and extraction time can become bottlenecks. Your extraction strategy should align with data volume, update frequency, and tolerance for downtime.

Common options include:

- JDBC connections – useful for smaller datasets or low-volume transfers

- Lakehouse Federation – for replicating data marts directly to Databricks

- Azure Data Factory or AWS Database Migration Service – for orchestrated data movement at scale

- Oracle-native export tools:

  - DBMS_CLOUD.EXPORT_DATA (available on Oracle Cloud)

  - SQL Developer unload (for on-premises or local usage)

  - Manual setup of DBMS_CLOUD on Oracle 19.9+ on-premises deployments

- Bulk transfer options – such as AWS Snowball or Microsoft Data Box, to move large historical tables into the cloud

Choosing the right tool depends on your data size, connectivity limits, and recovery needs.
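For the JDBC option, a minimal sketch of the reader configuration might look like the following. It only builds the option dictionary for Spark’s JDBC data source; the function name, host, service, and table names are hypothetical, and the partition bounds would need to come from your own data.

```python
def oracle_jdbc_options(host, port, service, table, user, password,
                        partition_column=None, lower_bound=None,
                        upper_bound=None, num_partitions=8, fetch_size=10000):
    """Build options for Spark's JDBC data source (hypothetical helper)."""
    options = {
        # Oracle thin-driver URL using a service name.
        "url": f"jdbc:oracle:thin:@//{host}:{port}/{service}",
        "driver": "oracle.jdbc.OracleDriver",
        "dbtable": table,
        "user": user,
        "password": password,
        # Rows fetched per round trip; larger values reduce network chatter.
        "fetchsize": str(fetch_size),
    }
    if partition_column is not None:
        if lower_bound is None or upper_bound is None:
            raise ValueError("partitioned reads need lower_bound and upper_bound")
        # These four options make Spark split the read into parallel range queries.
        options.update({
            "partitionColumn": partition_column,
            "lowerBound": str(lower_bound),
            "upperBound": str(upper_bound),
            "numPartitions": str(num_partitions),
        })
    return options


# Usage inside a Spark session (not executed here):
#   opts = oracle_jdbc_options("db.example.com", 1521, "ORCLPDB1", "SALES.ORDERS",
#                              user, password, partition_column="ORDER_ID",
#                              lower_bound=1, upper_bound=1_000_000)
#   df = spark.read.format("jdbc").options(**opts).load()
```

Partitioning the read on a numeric or date column lets several executors pull ranges in parallel, which is often what makes JDBC workable beyond the smallest tables. In practice the credentials would come from a secret store rather than plain variables.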

Optimize for performance

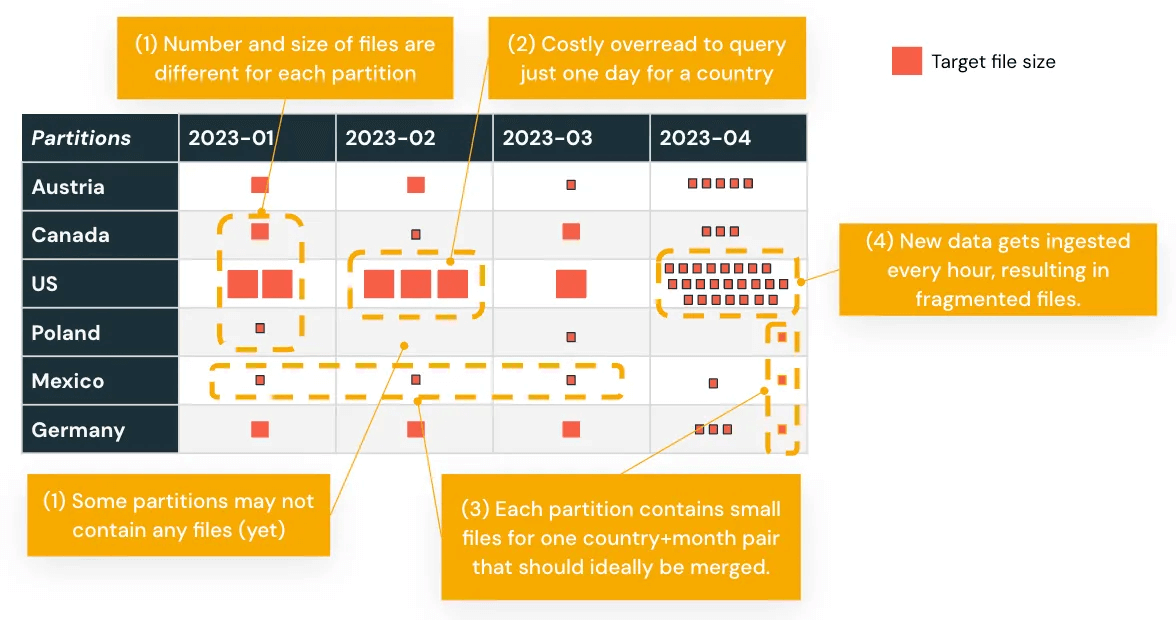

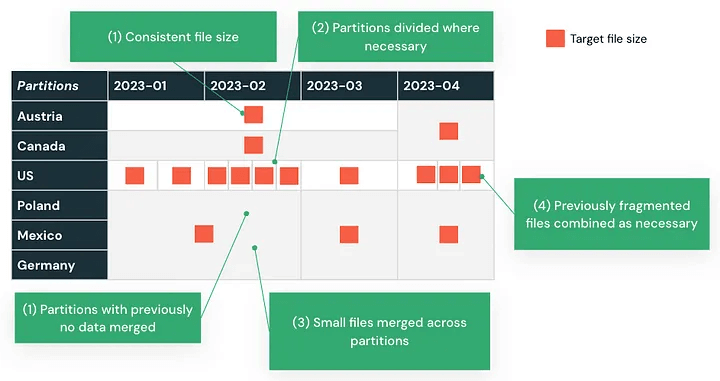

Migrated data often needs to be reshaped to perform well in Databricks. This starts with rethinking how data is partitioned.

If your Oracle data warehouse used static or unbalanced partitions, those strategies may not translate well. Analyze your query patterns and restructure partitions accordingly. Databricks offers several techniques to improve performance:

- Automatic Liquid Clustering for ongoing optimization without manual tuning

- Z-Ordering for clustering on frequently filtered columns

- Liquid Clustering to dynamically organize data

Additionally:

- Compact small files to reduce overhead

- Separate hot and cold data to optimize cost and storage efficiency

- Avoid over-partitioning, which can slow down scans and increase metadata overhead

For example, partitioning based on transaction dates that results in uneven data distribution can be rebalanced using Automatic Liquid Clustering, improving performance for time-based queries.

Designing with Databricks’ processing model in mind ensures that your workloads scale efficiently and remain maintainable post-migration.
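To make the rebalancing example concrete, the sketch below generates the Databricks SQL one might run to rebuild a date-partitioned table as a liquid-clustered one. The helper and table names are hypothetical. It assumes the target is a Delta table, that the table is rewritten because liquid clustering does not combine with partitioning, and that `CLUSTER BY AUTO` is available in your workspace (it depends on runtime version and predictive optimization being enabled).

```python
def recluster_statements(source_table, target_table, cluster_columns=None):
    """Return SQL statements that rebuild a table with liquid clustering.

    Hypothetical helper: with no columns given it asks Databricks to pick
    clustering keys automatically (CLUSTER BY AUTO).
    """
    if cluster_columns:
        cluster_clause = f"CLUSTER BY ({', '.join(cluster_columns)})"
    else:
        cluster_clause = "CLUSTER BY AUTO"
    return [
        # Rewrite the data into a new, unpartitioned, clustered table.
        f"CREATE OR REPLACE TABLE {target_table} {cluster_clause} "
        f"AS SELECT * FROM {source_table}",
        # OPTIMIZE compacts small files and applies clustering to the data.
        f"OPTIMIZE {target_table}",
    ]


# Usage in a notebook (not executed here):
#   for stmt in recluster_statements("sales.txn_by_date", "sales.txn",
#                                    ["transaction_date"]):
#       spark.sql(stmt)
```

Choosing explicit columns suits tables with well-understood filters; leaving them out hands the choice to the platform, which is closer to the Automatic Liquid Clustering option described above.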

Code and logic migration

While data migration forms the foundation of your transition, moving your application logic and SQL code represents one of the most complex aspects of the Oracle to Databricks migration. This process involves translating syntax and adapting to different programming paradigms and optimization techniques that align with Databricks’ distributed processing model.

SQL translation strategies

Convert Oracle SQL to Databricks SQL using a structured approach. Automated tools like BladeBridge (now part of Databricks) can analyze code complexity and perform bulk translation. Depending on the codebase, typical conversion rates are around 75% or higher.

These tools help reduce manual effort and identify areas that require rework or architectural changes post-migration.
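To give a feel for the two halves of that work, here is a deliberately tiny sketch: it rewrites a couple of Oracle idioms mechanically and flags constructs that usually need manual redesign. It is not how BladeBridge works; the rule lists are illustrative assumptions, and regex rewriting like this ignores string literals and comments, so it is unsuitable for real code.

```python
import re

# Mechanical rewrites: (pattern, replacement). Illustrative, not exhaustive.
_REWRITES = [
    (re.compile(r"\bSYSDATE\b", re.IGNORECASE), "current_timestamp()"),
    # Databricks SQL allows SELECT without FROM, so the DUAL table is dropped.
    (re.compile(r"\s+FROM\s+DUAL\b", re.IGNORECASE), ""),
]

# Constructs that typically need a human to redesign the query.
_MANUAL_REVIEW = {
    "CONNECT BY": re.compile(r"\bCONNECT\s+BY\b", re.IGNORECASE),
    "(+) outer join": re.compile(r"\(\+\)"),
    "ROWNUM": re.compile(r"\bROWNUM\b", re.IGNORECASE),
}


def translate(sql):
    """Return (rewritten_sql, list_of_constructs_flagged_for_review)."""
    for pattern, replacement in _REWRITES:
        sql = pattern.sub(replacement, sql)
    flags = [name for name, pattern in _MANUAL_REVIEW.items()
             if pattern.search(sql)]
    return sql, flags
```

Running `translate("SELECT SYSDATE FROM DUAL")` rewrites the statement fully, whereas a query using `ROWNUM` comes back unchanged with a flag, which mirrors the split between bulk conversion and the remaining manual rework.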

Stored procedures migration

Avoid searching for exact one-to-one replacements for Oracle PL/SQL constructs. Packages like DBMS_X, UTL_X, and CTX_X don’t exist in Databricks, so logic that depends on them must be rewritten to fit the platform.

For common constructs such as:

- Cursors

- Exception handling

- Loops and control flow statements

Databricks now offers SQL Scripting, which supports procedural SQL in notebooks. Alternatively, consider converting these workflows to Python or Scala within Databricks Workflows or DLT pipelines, which offer greater flexibility and integration with distributed processing.

BladeBridge can assist in translating this logic into Databricks SQL or PySpark notebooks as part of the migration.

ETL workflow transformation

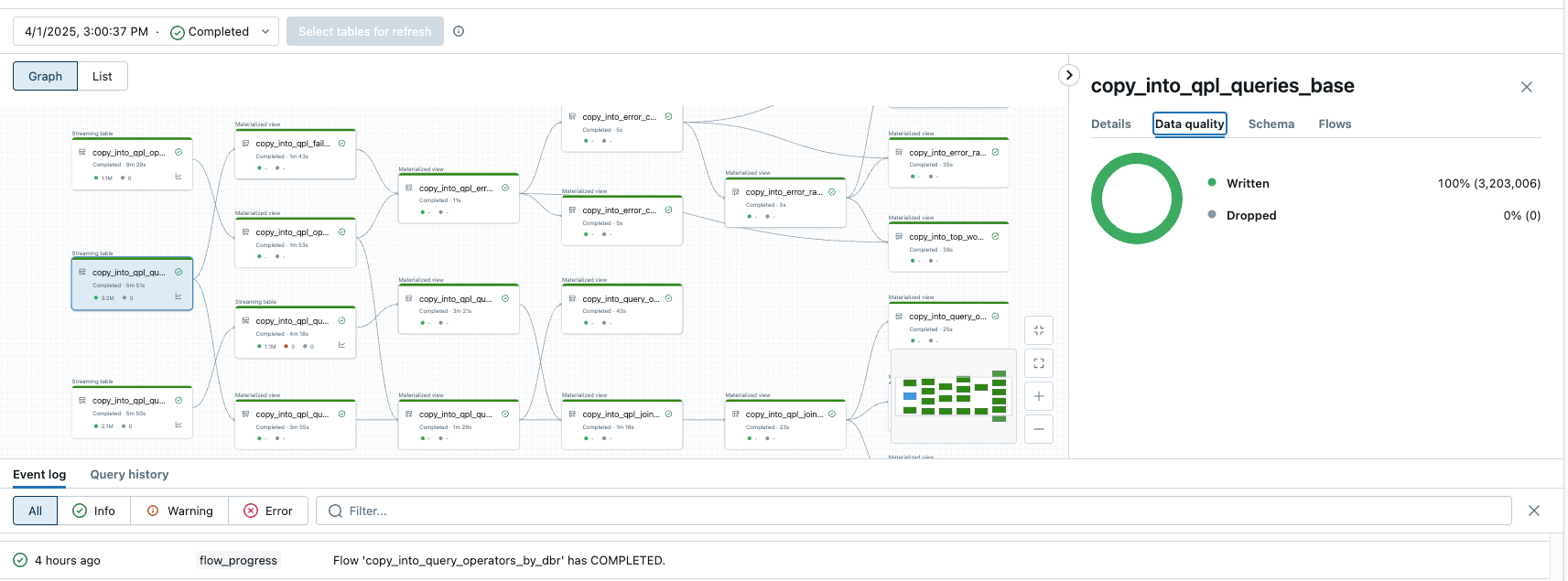

Databricks offers several approaches for building ETL processes that simplify legacy Oracle ETL:

- Databricks Notebooks with parameters – for simple, modular ETL tasks

- DLT – to define pipelines declaratively with support for batch and streaming, incremental processing, and built-in data quality checks

- Databricks Workflows – for scheduling and orchestration within the platform

These options give teams flexibility in refactoring and operating post-migration ETL while aligning with modern data engineering patterns.

Post-migration: validation, optimization, and adoption

Validate with technical and business tests

After a use case has been migrated, it’s critical to validate that everything works as expected, both technically and functionally.

- Technical validation should include:

  - Row count and aggregate reconciliation between systems

  - Data completeness and quality checks

  - Query result comparisons across source and target platforms

- Business validation involves running both systems in parallel and having stakeholders confirm that outputs match expectations before cutover.
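The row count and aggregate reconciliation step can be sketched as a comparison of metrics collected from each system. The function below is a hypothetical helper: it assumes you have already run equivalent queries on Oracle and Databricks and gathered the results into dictionaries keyed by metric name.

```python
from math import isclose


def reconcile(source, target, rel_tol=1e-9):
    """Compare {metric_name: value} dicts and return the mismatches.

    Floating-point aggregates are compared with a relative tolerance,
    since summation order can differ between engines.
    """
    mismatches = {}
    for metric in sorted(set(source) | set(target)):
        s, t = source.get(metric), target.get(metric)
        if s is None or t is None:
            # Metric missing on one side counts as a mismatch.
            mismatches[metric] = (s, t)
        elif isinstance(s, float) or isinstance(t, float):
            if not isclose(s, t, rel_tol=rel_tol):
                mismatches[metric] = (s, t)
        elif s != t:
            mismatches[metric] = (s, t)
    return mismatches


# Example metrics, e.g. from SELECT COUNT(*), SUM(amount), MAX(order_id).
oracle_metrics = {"row_count": 1000, "sum_amount": 12345.67, "max_order_id": 999}
databricks_metrics = {"row_count": 1000, "sum_amount": 12345.67, "max_order_id": 998}
print(reconcile(oracle_metrics, databricks_metrics))
```

An empty result means the collected metrics agree; anything else lists the source and target values side by side so the discrepancy can be traced before cutover.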

Optimize for cost and performance

After validation, evaluate and fine-tune the environment based on actual workloads. Focus areas include:

- Partitioning and clustering strategies (e.g., Z-Ordering, Liquid Clustering)

- File size and format optimization

- Resource configuration and scaling policies

These adjustments help align infrastructure with performance goals and cost targets.

Knowledge transfer and organizational readiness

A successful migration doesn’t end with technical implementation. Ensuring that teams can use the new platform effectively is just as important.

- Plan for hands-on training and documentation

- Enable teams to adopt new workflows, including collaborative development, notebook-based logic, and declarative pipelines

- Assign ownership for data quality, governance, and performance monitoring in the new system

Migration is more than a technical shift

Migrating from Oracle to Databricks is not just a platform change; it’s a shift in how data is managed, processed, and consumed.

Thorough planning, phased execution, and close coordination between technical teams and business stakeholders are essential to reduce risk and ensure a smooth transition.

Equally important is preparing your organization to work differently: adopting new tooling, new processes, and a new mindset around analytics or AI. With a balanced focus on both implementation and adoption, your team can unlock the full value of a modern lakehouse architecture.

What to do next

Migration is rarely straightforward. Tradeoffs, delays, and unexpected challenges are part of the process, especially when aligning people, processes, and technology.

That’s why it’s important to work with teams that have done this before. Databricks Professional Services and our certified migration partners bring deep experience in delivering high-quality migrations on time and at scale. Contact us to start your migration assessment.

Looking for more guidance? Download the full Oracle to Databricks Migration Guide for practical steps, tooling insights, and planning templates to help you move with confidence.