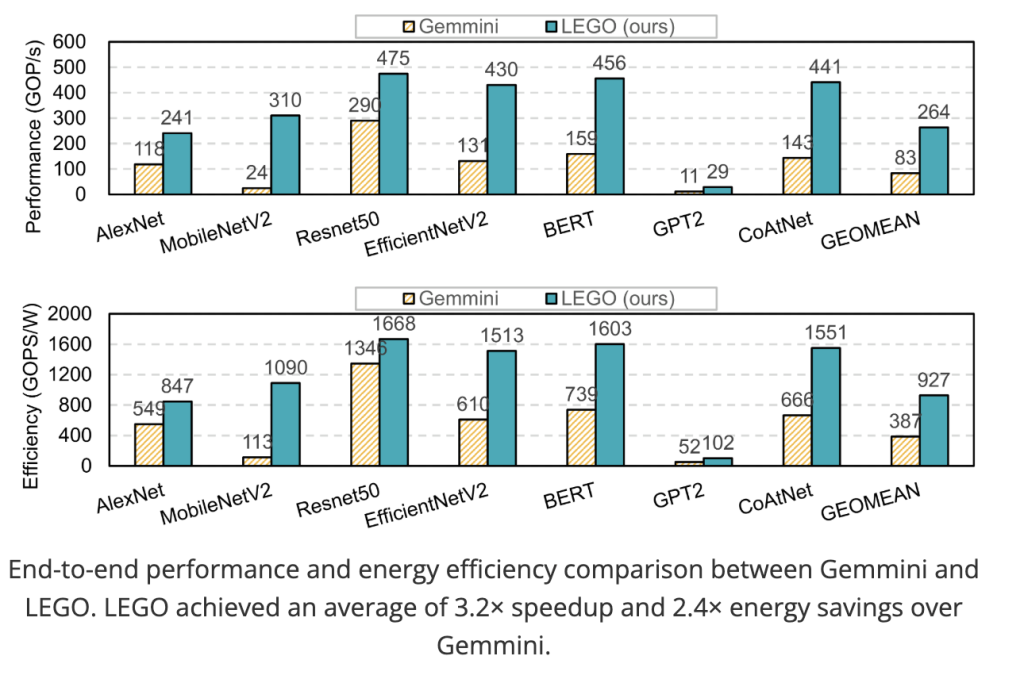

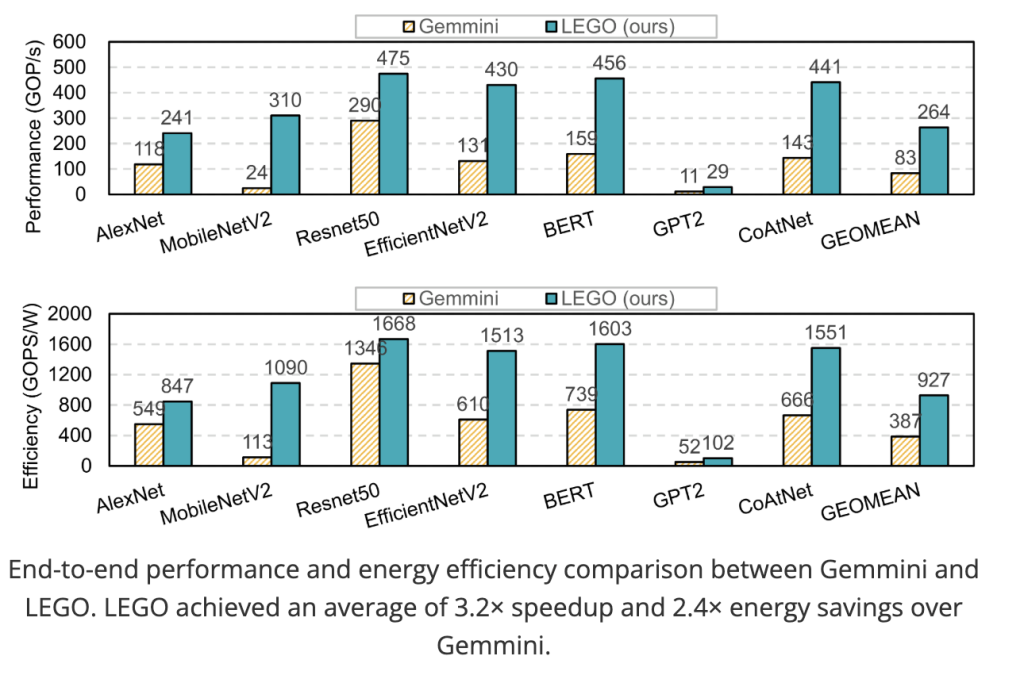

MIT researchers (Han Lab) introduced LEGO, a compiler-like framework that takes tensor workloads (e.g., GEMM, Conv2D, attention, MTTKRP) and automatically generates synthesizable RTL for spatial accelerators, with no handwritten templates. LEGO's front end expresses workloads and dataflows in a relation-centric affine representation, builds FU (functional unit) interconnects and on-chip memory layouts for reuse, and supports fusing multiple spatial dataflows in a single design. The back end lowers to a primitive-level graph and uses linear programming and graph transforms to insert pipeline registers, rewire broadcasts, extract reduction trees, and shrink area and power. Evaluated across foundation models and classic CNNs/Transformers, LEGO's generated hardware shows 3.2× speedup and 2.4× energy efficiency over Gemmini under matched resources.

Hardware Generation without Templates

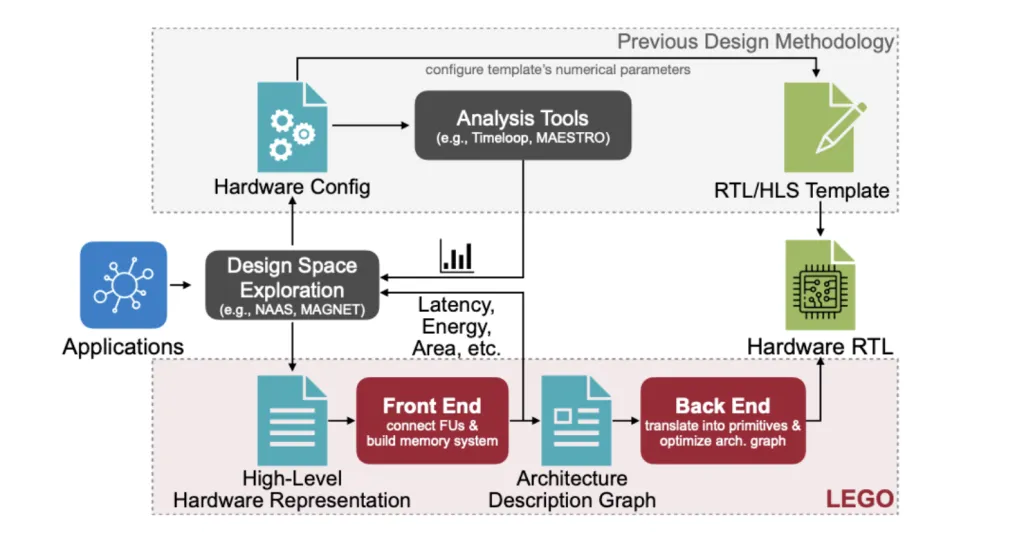

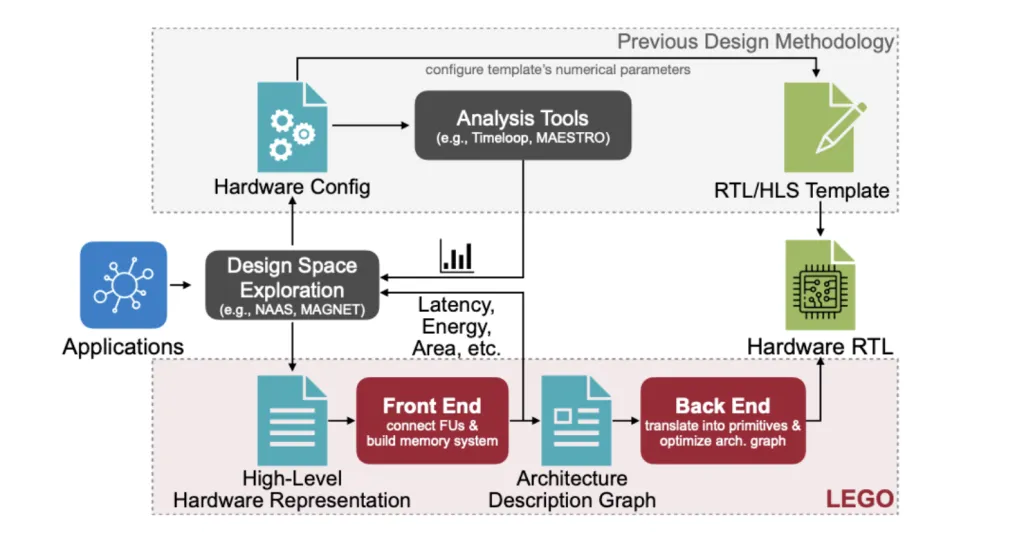

Existing flows either (1) analyze dataflows without generating hardware, or (2) generate RTL from hand-tuned templates with fixed topologies. These approaches restrict the architecture space and struggle with modern workloads that need to switch dataflows dynamically across layers/ops (e.g., conv vs. depthwise vs. attention). LEGO directly targets any dataflow and combinations of dataflows, generating both architecture and RTL from a high-level description rather than configuring a few numeric parameters in a template.

Input IR: Affine, Relation-Centric Semantics (Deconstruct)

LEGO models tensor programs as loop nests with three index classes: temporal (for-loops), spatial (par-for FUs), and computation (pre-tiling iteration domain). Two affine relations drive the compiler:

- Data mapping $f_{I \to D}$: maps computation indices to tensor indices.

- Dataflow mapping $f_{TS \to I}$: maps temporal/spatial indices to computation indices.

This affine-only representation eliminates modulo/division in the core analysis, making reuse detection and address generation a linear-algebra problem. LEGO also decouples control flow from dataflow (a vector c encodes control signal propagation/delay), enabling shared control across FUs and substantially reducing control logic overhead.
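To make the two affine relations concrete, here is a minimal Python sketch for GEMM, `C[i,j] += A[i,k] * B[k,j]`. The matrices, the two example dataflows, and the helper `reuse_offsets` are illustrative assumptions, not LEGO's actual API. The sketch composes the data mapping with the dataflow mapping and searches a few small (time, space) offsets for which two FUs touch the same tensor element. The brute-force search is a stand-in for the linear-system solve the article describes.

```python
def matmul(a, b):
    """Multiply two integer matrices given as lists of rows."""
    return [[sum(a[r][k] * b[k][c] for k in range(len(b)))
             for c in range(len(b[0]))] for r in range(len(a))]

def matvec(a, v):
    """Multiply an integer matrix by a vector."""
    return [sum(x * y for x, y in zip(row, v)) for row in a]

# Data mappings f_{I->D}: computation indices (i, j, k) -> tensor indices.
DATA_MAPS = {
    "A": [[1, 0, 0], [0, 0, 1]],  # A[i, k]
    "B": [[0, 0, 1], [0, 1, 0]],  # B[k, j]
    "C": [[1, 0, 0], [0, 1, 0]],  # C[i, j]
}

# Dataflow mappings f_{TS->I}: (t, s0, s1) -> (i, j, k). Both are assumed examples.
OUTPUT_STATIONARY = [[0, 1, 0], [0, 0, 1], [1, 0, 0]]  # i=s0, j=s1, k=t
SKEWED = [[0, 1, 0], [0, 0, 1], [1, -1, -1]]           # i=s0, j=s1, k=t-s0-s1

def reuse_offsets(data_map, dataflow_map, max_delay=2):
    """Find the smallest time offset at which the same FU or a nearest
    neighbour accesses the same tensor element (composite map sends the
    offset to zero)."""
    composite = matmul(data_map, dataflow_map)
    found = []
    for ds in ((0, 0), (1, 0), (0, 1)):
        for dt in range(max_delay + 1):
            if dt == 0 and ds == (0, 0):
                continue  # the trivial zero offset is not reuse
            if all(x == 0 for x in matvec(composite, [dt, *ds])):
                if ds == (0, 0):
                    kind = "stationary"  # reuse inside one FU over time
                elif dt == 0:
                    kind = "direct"      # same-cycle FU-to-FU wire
                else:
                    kind = "delay"       # FIFO of depth dt between FUs
                found.append((kind, dt, ds))
                break
    return found

for name, flow in (("output-stationary", OUTPUT_STATIONARY), ("skewed", SKEWED)):
    for tensor, data_map in DATA_MAPS.items():
        print(name, tensor, reuse_offsets(data_map, flow))
```

In this toy setup the unskewed dataflow yields same-cycle (direct) sharing of `A` and `B` between neighbouring FUs, while the skewed one turns the same sharing into one-cycle delay connections. That is the direct vs. FIFO distinction the front end works with.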

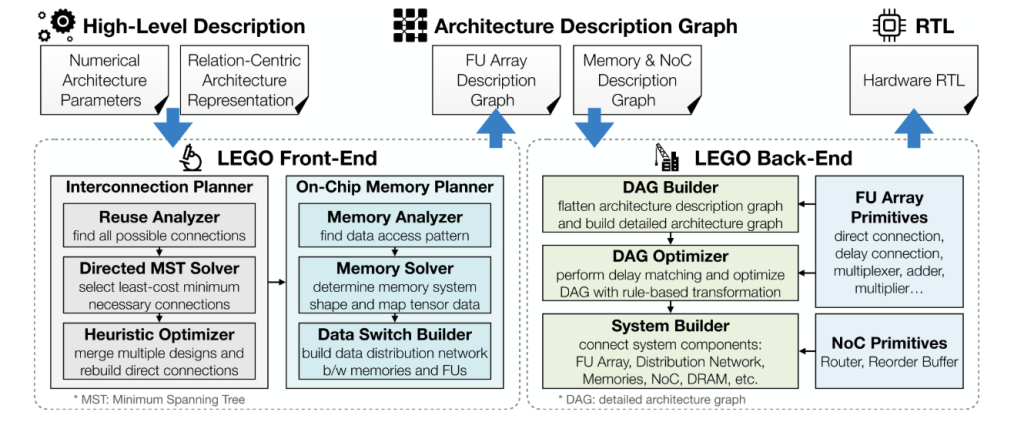

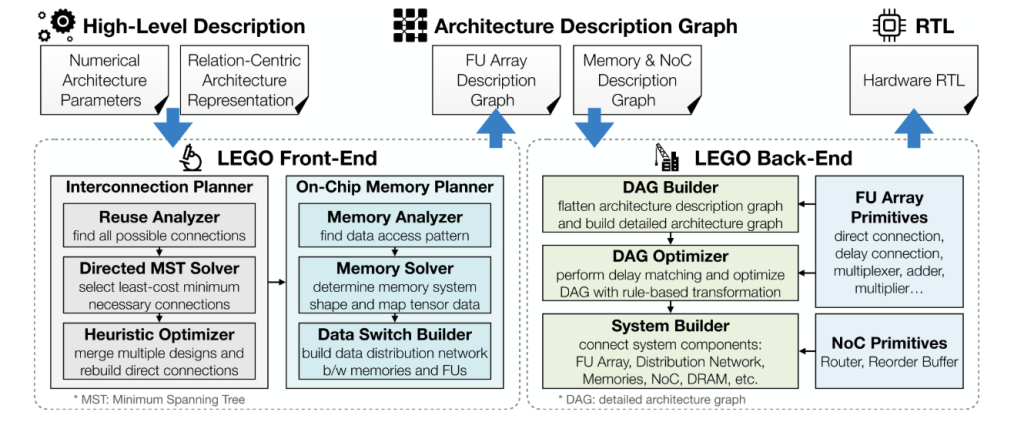

Front End: FU Graph + Memory Co-Design (Architect)

The main goals are to maximize reuse and on-chip bandwidth while minimizing interconnect/mux overhead.

- Interconnection synthesis. LEGO formulates reuse as solving linear systems over the affine relations to discover direct and delay (FIFO) connections between FUs. It then computes minimum-spanning arborescences (Chu-Liu/Edmonds) to keep only necessary edges (cost = FIFO depth). A BFS-based heuristic rewrites direct interconnects when multiple dataflows must co-exist, prioritizing chain reuse and nodes already fed by delay connections to cut muxes and data nodes.

- Banked memory synthesis. Given the set of FUs that must read/write a tensor in the same cycle, LEGO computes bank counts per tensor dimension from the maximum index deltas (optionally dividing by GCD to reduce banks). It then instantiates data-distribution switches to route between banks and FUs, leaving FU-to-FU reuse to the interconnect.

- Dataflow fusion. Interconnects for different spatial dataflows are combined into a single FU-level Architecture Description Graph (ADG); careful planning avoids naïve mux-heavy merges and yields up to ~20% energy gains compared to naïve fusion.
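The bank-count rule in the banked memory step can be sketched in a few lines of Python. The article does not give the exact formula, so this is one plausible reading and should be treated as an assumption: per tensor dimension, take the largest index delta among same-cycle accesses, optionally divide by the GCD of the deltas, and add one. The function name `bank_counts` and the sample access pattern are hypothetical.

```python
from functools import reduce
from math import gcd

def bank_counts(accesses, use_gcd=True):
    """Banks needed per tensor dimension for a set of same-cycle accesses.

    `accesses` is a list of tensor-index tuples, one per FU.
    Assumed rule (not confirmed by the source): max_delta // step + 1,
    where step is the GCD of the deltas when use_gcd is set, else 1.
    """
    counts = []
    for d in range(len(accesses[0])):
        base = min(a[d] for a in accesses)
        deltas = [a[d] - base for a in accesses]
        step = reduce(gcd, deltas, 0) if use_gcd else 1
        step = step or 1  # all deltas zero: a single bank is enough
        counts.append(max(deltas) // step + 1)
    return counts

# Four FUs read rows 0, 2, 4, 6 of the same column in one cycle.
strided = [(0, 0), (2, 0), (4, 0), (6, 0)]
print(bank_counts(strided))                 # GCD-reduced bank counts
print(bank_counts(strided, use_gcd=False))  # banks without the GCD step
```

With a stride of 2, dividing by the GCD drops the first dimension from 7 banks to 4 in this example, which shows why the optional GCD step is worth having.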

Back End: Compile & Optimize to RTL (Compile & Optimize)

The ADG is lowered to a Detailed Architecture Graph (DAG) of primitives (FIFOs, muxes, adders, address generators). LEGO applies several LP/graph passes:

- Delay matching via LP. A linear program chooses output delays $D_v$ to minimize inserted pipeline registers, $\sum (D_v - D_u - L_v) \cdot \text{bitwidth}$ summed across edges, so timing is aligned with minimal storage.

- Broadcast pin rewiring. A two-stage optimization (virtual cost shaping + MST-based rewiring among destinations) converts expensive broadcasts into forward chains, enabling register sharing and lower latency; a final LP re-balances delays.

- Reduction tree extraction + pin reuse. Sequential adder chains become balanced trees; a 0-1 ILP remaps reducer inputs across dataflows so fewer physical pins are required (mux instead of add). This reduces both logic depth and register count.

These passes focus on the datapath, which dominates resources (e.g., FU-array registers ≈ 40% area, 60% power), and produce ~35% area savings versus naïve generation.
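The delay-matching objective is easy to evaluate by hand on a tiny graph. The Python sketch below is not LEGO's implementation and does not call an LP solver: it computes a simple as-soon-as-possible (longest-path) schedule, which is feasible but not necessarily optimal, and compares its register cost with a hand-picked alternative. The graph, latencies, and bit-widths are made up for illustration, and the constraint that every edge slack $D_v - D_u - L_v$ is non-negative is an assumption implied by the objective.

```python
def asap_delays(latency, edges):
    """Longest-path schedule: D_v = max(D_u over predecessors) + L_v.
    `latency` must list nodes in topological order."""
    delays = {}
    for v in latency:
        preds = [delays[src] for (src, dst, _) in edges if dst == v]
        delays[v] = max(preds, default=0) + latency[v]
    return delays

def register_bits(delays, latency, edges):
    """Objective from the text: sum of (D_v - D_u - L_v) * bitwidth."""
    total = 0
    for src, dst, bits in edges:
        slack = delays[dst] - delays[src] - latency[dst]
        if slack < 0:
            raise ValueError(f"edge {src}->{dst} violates timing")
        total += slack * bits
    return total

# Hypothetical diamond-shaped datapath: node latencies and edge bit-widths.
latency = {"a": 0, "b": 1, "c": 2, "d": 1}
edges = [("a", "b", 8), ("a", "c", 8), ("b", "d", 16), ("c", "d", 16)]

asap = asap_delays(latency, edges)
tuned = dict(asap, b=2)  # delay b by one cycle so the narrow edge holds the slack

print(register_bits(asap, latency, edges))   # cost of the ASAP schedule
print(register_bits(tuned, latency, edges))  # cost after moving the slack
```

Moving the one-cycle slack from the 16-bit edge to the 8-bit edge halves the register bits here. Finding such moves across a whole graph is what the linear program automates.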

Outcome

Setup. LEGO is implemented in C++ with HiGHS as the LP solver and emits SpinalHDL→Verilog. Evaluation covers tensor kernels and end-to-end models (AlexNet, MobileNetV2, ResNet-50, EfficientNetV2, BERT, GPT-2, CoAtNet, DDPM, Stable Diffusion, LLaMA-7B). A single LEGO-MNICOC accelerator instance is used across models; a mapper picks per-layer tiling/dataflow. Gemmini is the main baseline under matched resources (256 MACs, 256 KB on-chip buffer, 128-bit bus @ 16 GB/s).

End-to-end speed/efficiency. LEGO achieves 3.2× speedup and 2.4× energy efficiency on average vs. Gemmini. Gains stem from: (i) a fast, accurate performance model guiding mapping; (ii) dynamic spatial dataflow switching enabled by generated interconnects (e.g., depthwise conv layers choose OH–OW–IC–OC). Both designs are bandwidth-bound on GPT-2.
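A back-of-the-envelope roofline calculation shows why a 256-MAC design behind a 16 GB/s bus can end up bandwidth-bound. The Python sketch below uses the MAC count and bandwidth from the setup. The 1 GHz clock and the operational-intensity values are assumptions chosen only for illustration, since the article does not state them.

```python
def peak_gops(macs, clock_ghz):
    """Peak throughput in GOP/s, counting one MAC as two operations."""
    return 2 * macs * clock_ghz

def ridge_point(macs, clock_ghz, bandwidth_gbs):
    """Operational intensity (ops/byte) above which compute is the limit."""
    return peak_gops(macs, clock_ghz) / bandwidth_gbs

def attainable_gops(macs, clock_ghz, bandwidth_gbs, ops_per_byte):
    """Classic roofline: min(compute roof, bandwidth * intensity)."""
    return min(peak_gops(macs, clock_ghz), bandwidth_gbs * ops_per_byte)

MACS, BANDWIDTH_GBS = 256, 16.0  # from the evaluation setup
CLOCK_GHZ = 1.0                  # assumed, not given in the article

print(ridge_point(MACS, CLOCK_GHZ, BANDWIDTH_GBS))
for intensity in (2.0, 64.0):    # hypothetical low- and high-reuse layers
    print(intensity, attainable_gops(MACS, CLOCK_GHZ, BANDWIDTH_GBS, intensity))
```

Under these assumptions, any layer below about 32 ops per byte is limited by the bus rather than the FU array, so a better interconnect or mapping cannot help it much.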

Resource breakdown. An example SoC-style configuration shows the FU array and NoC dominate area/power, with PPUs contributing ~2–5%. This supports the decision to aggressively optimize datapaths and control reuse.

Generative models. On a larger 1024-FU configuration, LEGO sustains >80% utilization for DDPM/Stable Diffusion; LLaMA-7B remains bandwidth-limited (expected for low operational intensity).

Importance for each segment

- For researchers: LEGO provides a mathematically grounded path from loop-nest specifications to spatial hardware with provable LP-based optimizations. It abstracts away low-level RTL and exposes meaningful levers (tiling, spatialization, reuse patterns) for systematic exploration.

- For practitioners: It is effectively hardware-as-code. You can target arbitrary dataflows and fuse them in a single accelerator, letting a compiler derive interconnects, buffers, and controllers while shrinking mux/FIFO overheads. This improves energy and supports multi-op pipelines without manual template redesign.

- For product leaders: By lowering the barrier to custom silicon, LEGO enables task-tuned, power-efficient edge accelerators (wearables, IoT) that keep pace with fast-moving AI stacks: the silicon adapts to the model, not the other way around. End-to-end results against a state-of-the-art generator (Gemmini) quantify the upside.

How the “Compiler for AI Chips” Works, Step by Step

- Deconstruct (Affine IR). Write the tensor op as loop nests; provide affine $f_{I \to D}$ (data mapping), $f_{TS \to I}$ (dataflow), and control flow vector c. This specifies what to compute and how it is spatialized, without templates.

- Architect (Graph Synthesis). Solve reuse equations → FU interconnects (direct/delay) → MST/heuristics for minimal edges and fused dataflows; compute banked memory and distribution switches to satisfy concurrent accesses without conflicts.

- Compile & Optimize (LP + Graph Transforms). Lower to a primitive DAG; run delay-matching LP, broadcast rewiring (MST), reduction-tree extraction, and pin-reuse ILP; perform bit-width inference and optional power gating. These passes jointly deliver ~35% area and ~28% energy savings vs. naïve codegen.
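As one small illustration of the last step, the effect of reduction-tree extraction on logic depth can be sketched in Python. This is a generic pairwise-balancing routine, not LEGO's pass, and the names `chain_depth` and `balanced_tree` are hypothetical. It rebuilds a sequential sum of `n` inputs as a balanced tree and reports how many adder levels a signal must cross.

```python
def chain_depth(num_inputs):
    """Adder levels on the longest path of a sequential chain."""
    return max(num_inputs - 1, 0)

def balanced_tree(inputs):
    """Pair up operands level by level; return (expression, depth)."""
    level = list(inputs)
    depth = 0
    while len(level) > 1:
        paired = [("add", level[i], level[i + 1])
                  for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:
            paired.append(level[-1])  # odd operand passes through this level
        level = paired
        depth += 1
    return level[0], depth

def count_adders(expr):
    """Count adder nodes in the nested-tuple expression."""
    if isinstance(expr, tuple):
        return 1 + count_adders(expr[1]) + count_adders(expr[2])
    return 0

products = [f"p{i}" for i in range(8)]
tree, depth = balanced_tree(products)
print(chain_depth(len(products)), depth, count_adders(tree))
```

The adder count stays at `n - 1` in both forms. Only the depth shrinks, from linear to logarithmic in the number of inputs, which is where the latency and register savings come from.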

Where It Lands in the Ecosystem

Compared with analysis tools (Timeloop/MAESTRO) and template-bound generators (Gemmini, DNA, MAGNET), LEGO is template-free, supports any dataflow and combinations of dataflows, and emits synthesizable RTL. Results show comparable or better area/power versus expert handwritten accelerators under similar dataflows and technologies, while offering one-architecture-for-many-models deployment.

Abstract

LEGO operationalizes hardware generation as compilation for tensor programs: an affine front end for reuse-aware interconnect/memory synthesis and an LP-powered back end for datapath minimization. The framework's measured 3.2× performance and 2.4× energy gains over a leading open generator, plus ~35% area reductions from back-end optimizations, position it as a practical path to application-specific AI accelerators at the edge and beyond.
