Figure 4: Results when using ellmer to query a ragnar store in the console.

my_chat$chat() runs the chat object’s chat method and returns results to your console. If you want a web chatbot interface instead, you can run ellmer’s live_browser() function on your chat object. That can be handy if you want to ask several questions: live_browser(my_chat).

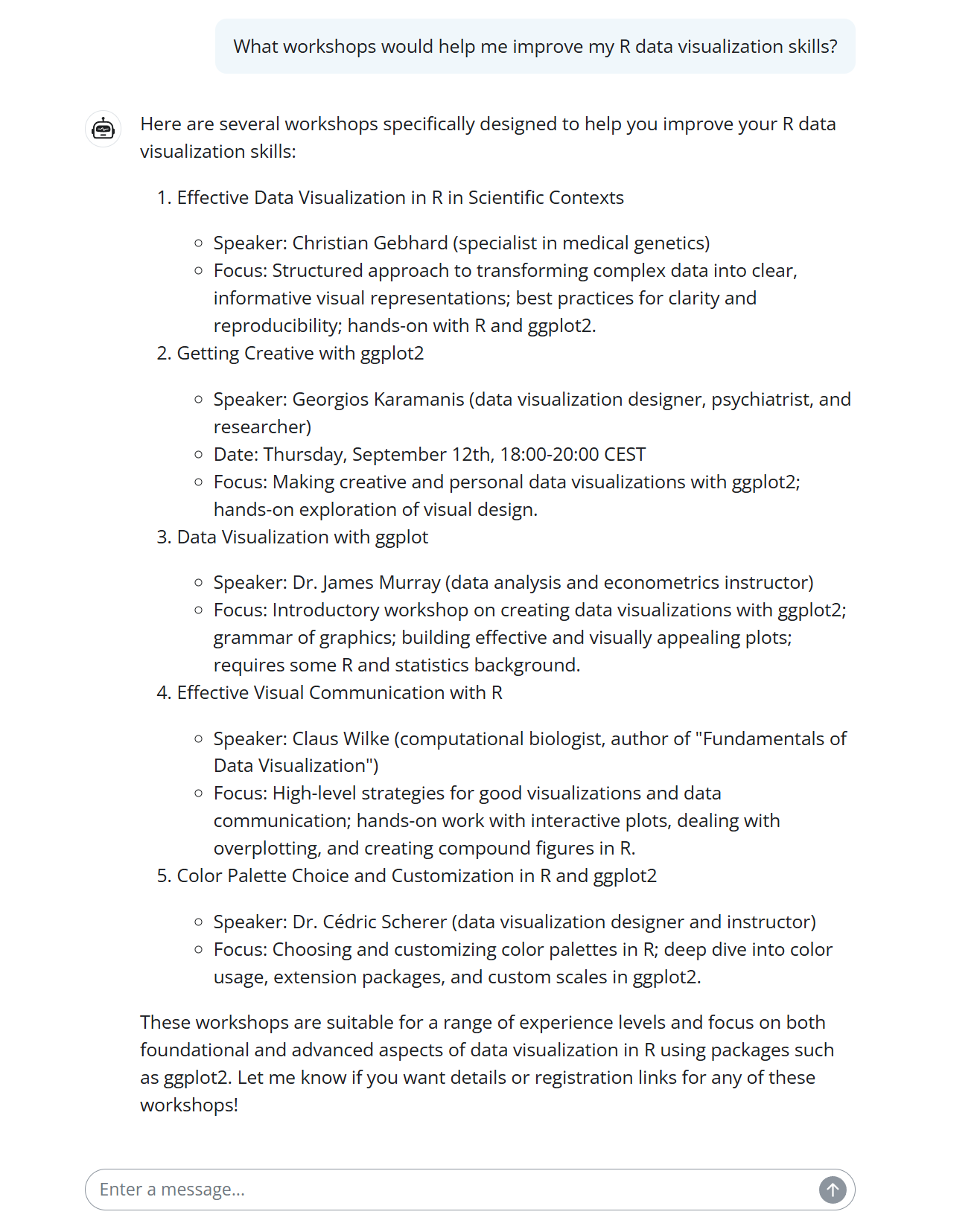

Figure 5: Results in ellmer’s built-in simple web chatbot interface.

Basic RAG worked pretty well when I asked about topics, but not for questions involving time. Asking about workshops “next month” didn’t return the correct workshops, even when I told the LLM the current date.

That’s because this basic RAG is only looking for the text that’s most similar to a question. If you ask “What R data visualization events are happening next month?”, you might end up with a workshop three months from now. Basic semantic search often misses required elements, which is why we have metadata filtering.

Metadata filtering “knows” what is essential to a query, at least if you’ve set it up that way. This type of filtering lets you specify that chunks must meet certain requirements, such as a date range. It then performs semantic search only on those chunks, so items that don’t match your must-haves won’t be included.
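The following toy illustration shows that two-stage idea in base R. It is not how ragnar implements it. The chunks, dates, and three-dimensional “embeddings” are made up, and plain cosine similarity stands in for whatever similarity measure a real vector store uses. The date filter runs first, so even a very similar chunk outside the range can never come back.

```r
# Hypothetical toy data: three chunks with dates and made-up 3-d "embeddings"
chunks <- data.frame(
  title = c("ggplot2 basics", "Text mining in R", "Advanced ggplot2"),
  date = as.Date(c("2025-08-14", "2025-08-21", "2025-10-02")),
  stringsAsFactors = FALSE
)
embeddings <- rbind(
  c(0.9, 0.1, 0.0),
  c(0.1, 0.9, 0.2),
  c(1.0, 0.0, 0.0)
)

# Cosine similarity between each row of m and the query vector q
cosine_sim <- function(m, q) {
  as.vector(m %*% q) / (sqrt(rowSums(m^2)) * sqrt(sum(q^2)))
}

filtered_search <- function(query_vec, start_date, end_date, top_k = 2) {
  # Stage 1: hard metadata filter on the date column (missing dates excluded)
  keep <- !is.na(chunks$date) &
    chunks$date >= start_date & chunks$date <= end_date
  candidates <- chunks[keep, , drop = FALSE]
  # Stage 2: rank only the surviving chunks by similarity
  candidates$similarity <- cosine_sim(embeddings[keep, , drop = FALSE], query_vec)
  candidates <- candidates[order(-candidates$similarity), , drop = FALSE]
  head(candidates, top_k)
}

# A "data visualization"-like query, restricted to August 2025
res <- filtered_search(c(1, 0, 0), as.Date("2025-08-01"), as.Date("2025-08-31"))
res$title
```

The third chunk is actually the closest match to the query vector. It falls in October, though, so the date filter drops it before any ranking happens.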

To turn basic ragnar RAG code into a RAG app with metadata filtering, you need to add metadata as separate columns in your ragnar data store. You also need to make sure the LLM knows how and when to use that information.

For this example, we’ll need to do the following:

- Get the date of each workshop and add it as a column to the original text chunks.

- Create a data store that includes a date column.

- Create a custom ragnar retrieval tool that tells the LLM how to filter for dates if the user’s query includes a time component.

Let’s get to it!

Step 1: Add the new metadata

If you’re lucky, your data already has the metadata you want in a structured format. Alas, no such luck here, since the Workshops for Ukraine listings are HTML text. How can we get the date of each future workshop?

It’s possible to do some metadata parsing with regular expressions. But if you’re interested in using generative AI with R, it’s worth knowing how to ask LLMs to extract structured data. Let’s take a quick detour for that.

We can request structured data with ellmer’s parallel_chat_structured() in three steps:

- Define the structure we want.

- Create prompts.

- Send those prompts to an LLM.

The workshop title doesn’t need an LLM. We can extract it with a regex, which is easy because all the titles start with ### and end with a line break:

ukraine_chunks <- ukraine_chunks |>
  mutate(title = str_extract(text, "^### (.+)\n", 1))
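If you want to check what that pattern captures before running it on every chunk, the following base R equivalent uses regexec() and regmatches(). It runs on made-up sample text. Element 2 of each match is capture group 1, which is what the `1` argument asks str_extract() to return. I set perl = TRUE so that `.` stops at newlines, as it does in stringr’s ICU engine. Base R’s default TRE engine lets `.` match newlines, so `.+` would overshoot.

```r
# Hypothetical chunk texts shaped like the workshop listings
sample_text <- c(
  "### Intro to Shiny\n**Date:** Thursday, August 14\nSpeaker: ...",
  "A chunk with no title line"
)

# Base R version of str_extract(text, "^### (.+)\n", 1).
# perl = TRUE makes "." stop at newlines, matching stringr's behavior.
extract_title <- function(x) {
  m <- regmatches(x, regexec("^### (.+)\n", x, perl = TRUE))
  # Each match is c(full_match, group_1); a non-match is character(0)
  vapply(m, function(g) if (length(g) >= 2) g[2] else NA_character_,
         character(1))
}

extract_title(sample_text)
# returns c("Intro to Shiny", NA)
```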

Define the desired structure

The first thing we’ll do is define the metadata structure we want an LLM to return for each workshop item. Most important is the date. It will be flagged as not required, since past workshop listings didn’t include dates. ragnar creator Tomasz Kalinowski suggested we also include the speaker and speaker affiliation, which seems useful. We can save the resulting metadata structure as an ellmer “TypeObject” template:

type_workshop_metadata

Create prompts to request that structured data

The code below uses ellmer’s interpolate() function to create a vector of prompts from a template, one prompt for each text chunk:

prompts
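The key idea is that a single template expands into one prompt per chunk. As a base R analogue (not ellmer’s interpolate()), sprintf() recycles a scalar template over a vector of inputs in the same way. The prompt wording and chunk texts below are hypothetical. Including today’s date is my suggestion, so the model has what it needs to tell upcoming workshops from past ones.

```r
# Hypothetical chunk texts standing in for ukraine_chunks$text
chunk_text <- c(
  "### Intro to Shiny\nThursday, August 14 ...",
  "### Text mining\nPast workshop recording ..."
)

# Hypothetical prompt wording; %s placeholders are filled in order
template <- paste(
  "Extract the workshop metadata from the text below.",
  "Today's date is %s. Give the date only if the workshop is upcoming.",
  "",
  "%s",
  sep = "\n"
)

# sprintf() recycles over chunk_text, giving one prompt per chunk
prompts_sketch <- sprintf(template, format(Sys.Date()), chunk_text)
length(prompts_sketch)
```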

Send all the prompts to an LLM

The next bit of code creates a chat object and then uses parallel_chat_structured() to run all the prompts. The chat and prompts vector are required arguments. In this case, I also dialed back the default numbers of active requests and requests per minute with the max_active and rpm arguments. That kept me from hitting my API limits, which often happens on my OpenAI account at the defaults:

chat
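You can sanity-check how much those caps will slow a batch down with some quick arithmetic. Suppose no more than rpm requests start per minute, at most max_active run at once, and each takes roughly latency_s seconds. Then n prompts need at least max(n / rpm, n × latency_s / max_active / 60) minutes. The helper and the numbers below are hypothetical, not the settings I used.

```r
# Rough lower bound on run time (minutes) for n prompts when at most
# `max_active` requests run at once, each taking about `latency_s` seconds,
# and no more than `rpm` requests start per minute
min_run_minutes <- function(n_prompts, max_active, rpm, latency_s) {
  rate_limited <- n_prompts / rpm                                 # rpm cap
  concurrency_limited <- n_prompts * latency_s / max_active / 60  # concurrency cap
  max(rate_limited, concurrency_limited)
}

# Hypothetical: 120 prompts, 4 at a time, 30 per minute, ~5 seconds each
min_run_minutes(120, max_active = 4, rpm = 30, latency_s = 5)
# returns 4 (the rpm cap dominates: 120 / 30 = 4 versus 2.5 minutes)
```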

Finally, we add the extracted results to the ukraine_chunks data frame and save them. That way, we won’t need to re-run all the code if we need this data again later:

ukraine_chunks <- ukraine_chunks |>
  mutate(!!!extracted,
         date = as.Date(date))

rio::export(ukraine_chunks, "ukraine_workshop_data_results.parquet")

If you’re unfamiliar with the splice operator (!!! in the code above), it unpacks the individual columns of the extracted data frame and adds them as new columns to ukraine_chunks via the mutate() function.
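If you’d rather not use !!!, base R can do the same column binding with a single assignment. This sketch uses small made-up data frames in place of ukraine_chunks and the extracted results. Like the mutate() version, it assumes the extracted data frame has one row per chunk, in the same order.

```r
# Hypothetical stand-ins for ukraine_chunks and the extracted data frame
chunks <- data.frame(text = c("### A\n...", "### B\n..."),
                     stringsAsFactors = FALSE)
extracted <- data.frame(
  date = c("2025-08-14", NA),
  speaker_name = c("Jane Doe", "John Roe"),
  speaker_affiliations = c("Univ. X", "Company Y"),
  stringsAsFactors = FALSE
)

# Base R equivalent of mutate(!!!extracted, date = as.Date(date)):
# add every extracted column at once, then convert date to Date
chunks[names(extracted)] <- extracted
chunks$date <- as.Date(chunks$date)

names(chunks)
```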

The ukraine_chunks data frame now has the columns start, end, context, text, title, date, speaker_name, and speaker_affiliations.

I still ended up with a few old dates in my data. Since this tutorial’s main focus is RAG and not optimizing data extraction, I’ll call this good enough. As long as the LLM figured out that a workshop on “Thursday, September 12” wasn’t this year, we can delete past dates the old-fashioned way:

ukraine_chunks <- ukraine_chunks |>
  mutate(date = if_else(date >= Sys.Date(), date, NA))
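dplyr’s if_else() matters here: base R’s ifelse() would drop the Date class and return plain numbers. The following sketch does the same cleanup as a small base R function. The “today” date is a parameter instead of a hard-coded Sys.Date(), which makes results reproducible. The dates are made up.

```r
# Set dates before `today` to NA; missing dates stay missing
drop_past_dates <- function(dates, today = Sys.Date()) {
  dates[which(dates < today)] <- NA   # which() skips existing NAs
  dates                               # [<- keeps the Date class
}

# Hypothetical extracted dates, checked against a fixed "today"
d <- as.Date(c("2024-09-12", "2025-08-14", NA))
drop_past_dates(d, today = as.Date("2025-07-20"))
```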

We’ve got the metadata we need, structured how we want it. The next step is to set up the data store.

Step 2: Set up the data store with metadata columns

We want the ragnar data store to have columns for title, date, speaker_name, and speaker_affiliations, in addition to the defaults.

To add extra columns to a ragnar data store, first create an empty data frame with the extra columns you want. Then use that data frame as an argument when creating the store. This process is simpler than it sounds, as you can see below:

my_extra_columns
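A zero-row data frame works as that template because only its column names and types matter. The following is one possible version with the four columns listed above. The column types are my assumption: character for the text fields and Date for date. In recent ragnar versions, a template like this is passed to ragnar_store_create() through its extra_cols argument. Treat that detail as an assumption and check it against your installed version.

```r
# Zero-row template: column names and types only, no data.
# Types are an assumption: character for text fields, Date for the date.
extra_cols_template <- data.frame(
  title = character(),
  date = as.Date(character()),
  speaker_name = character(),
  speaker_affiliations = character(),
  stringsAsFactors = FALSE
)

str(extra_cols_template)
```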

Inserting text chunks from the metadata-augmented data frame into a ragnar data store works the same as before, using ragnar_store_insert() and ragnar_store_build_index():

ragnar_store_insert(store, ukraine_chunks)

ragnar_store_build_index(store)

If you’re trying to update existing items in a store instead of inserting new ones, you can use ragnar_store_update(). It should check the hash to see whether the entry already exists and whether it has changed.
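ragnar handles this internally. The following is only a conceptual illustration of the decision an update has to make for each incoming row: insert it, skip it, or replace it. It is not ragnar’s code. The sketch keys rows on a hypothetical id column and compares the text directly where ragnar would compare hashes.

```r
# Conceptual sketch only (not ragnar's implementation): classify each
# incoming row by a hypothetical `id` key. Comparing text directly
# stands in for comparing content hashes.
classify_updates <- function(existing, incoming) {
  idx <- match(incoming$id, existing$id)          # NA means not stored yet
  ifelse(is.na(idx), "insert",
         ifelse(existing$text[idx] == incoming$text, "skip", "replace"))
}

existing <- data.frame(id = c("a", "b"), text = c("old", "same"),
                       stringsAsFactors = FALSE)
incoming <- data.frame(id = c("a", "b", "c"),
                       text = c("new", "same", "brand new"),
                       stringsAsFactors = FALSE)
classify_updates(existing, incoming)
# returns c("replace", "skip", "insert")
```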

Step 3: Create a custom ragnar retrieval tool

As far as I know, you need to register a custom tool with ellmer when doing metadata filtering, instead of using ragnar’s simple ragnar_register_tool_retrieve(). You can do this by:

- Creating an R function

- Turning that function into a tool definition

- Registering the tool with a chat object’s register_tool() method

First, you’ll write a conventional R function. The function below adds filtering if a start date, an end date, or both are not NULL, and then performs chunk retrieval. It requires a store to be in your global environment. Don’t pass store as an argument to this function; that won’t work.

The function first sets up a filter expression, depending on which dates are specified. It then passes that filter expression as an argument to a ragnar retrieval function. Filtering in the ragnar_retrieve() functions is a new feature as of this writing in July 2025.

Below is the function, largely suggested by Tomasz Kalinowski. Here we’re using ragnar_retrieve(), which combines conventional text search and semantic search, instead of vector similarity search (VSS) alone. I added “data-related” as the default query so the function can also handle time-related questions that don’t mention a topic:

retrieve_workshops_filtered = !!as.Date(start_date))

} else if (!is.null(end_date)) {

# Only end date

filter_expr

select(title, date, speaker_name, speaker_affiliations, text)

}
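The following base R analogue shows the conditional filter-building idea in isolation and runs without ragnar or rlang. bquote() with .() plays the role of rlang::expr() with !!, inlining the converted Date values into the expression. eval() then applies that expression to a made-up data frame rather than handing it to ragnar_retrieve(). The function names and data are hypothetical.

```r
# Build a date filter expression, or NULL when no dates are given.
# .() inlines the converted Date value, much like !! in rlang::expr().
build_date_filter <- function(start_date = NULL, end_date = NULL) {
  if (!is.null(start_date) && !is.null(end_date)) {
    bquote(date >= .(as.Date(start_date)) & date <= .(as.Date(end_date)))
  } else if (!is.null(start_date)) {
    bquote(date >= .(as.Date(start_date)))
  } else if (!is.null(end_date)) {
    bquote(date <= .(as.Date(end_date)))
  } else {
    NULL
  }
}

# Evaluate the expression against a data frame's columns (stand-in for retrieval)
apply_filter <- function(df, filter_expr) {
  if (is.null(filter_expr)) return(df)
  keep <- eval(filter_expr, df)
  df[!is.na(keep) & keep, , drop = FALSE]   # rows with missing dates drop out
}

workshops <- data.frame(
  title = c("A", "B", "C"),
  date = as.Date(c("2025-07-30", "2025-08-14", NA)),
  stringsAsFactors = FALSE
)
f <- build_date_filter("2025-08-01", "2025-08-31")
apply_filter(workshops, f)$title
# returns "B"
```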

Next, create a tool for ellmer from that function using tool(), which needs the function name and a tool definition as arguments. The definition is important because the LLM uses it to decide whether to use the tool to answer a question:

workshop_retrieval_tool

Now create an ellmer chat with a system prompt that helps the LLM know when to use the tool. Then register the tool and try it out! My example is below.

my_system_prompt
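For a question about “next month,” the LLM has to turn that phrase into concrete start_date and end_date values for the tool. To check its answer, it helps to know what the right range is. The following helper computes that range from a reference date. It is hypothetical and not part of ellmer or ragnar.

```r
# Hypothetical helper: first and last day of the month after `today`,
# returned as YYYY-MM-DD strings like the tool's date arguments
next_month_range <- function(today = Sys.Date()) {
  this_month_start <- as.Date(format(today, "%Y-%m-01"))
  # seq() by "month" starting on the 1st always lands on the 1st
  start <- seq(this_month_start, by = "month", length.out = 2)[2]
  end <- seq(start, by = "month", length.out = 2)[2] - 1
  c(start_date = format(start), end_date = format(end))
}

next_month_range(as.Date("2025-07-15"))
# returns c(start_date = "2025-08-01", end_date = "2025-08-31")
```

Year boundaries and leap years come out right because the arithmetic always steps from the first of a month.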

If there are indeed any R-related workshops next month, you should get the correct answer, thanks to your new advanced RAG app built entirely in R. You can also create a local chatbot interface with live_browser(my_chat).

And, once again, it’s good practice to close your connection with DBI::dbDisconnect(store@con) when you’re finished.

That’s it for this demo, but there’s a lot more you can do with R and RAG. Do you want a better interface, or one you can share? This sample R Shiny web app, written primarily by Claude Opus, might give you some ideas.