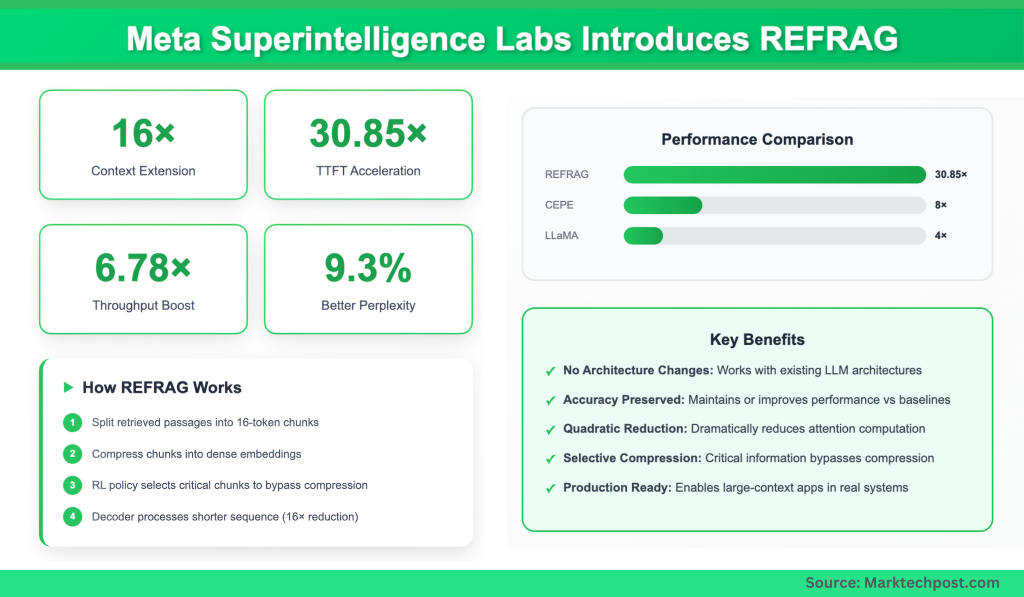

A team of researchers from Meta Superintelligence Labs, the National University of Singapore and Rice University has unveiled REFRAG (REpresentation For RAG), a decoding framework that rethinks the efficiency of retrieval-augmented generation (RAG). REFRAG extends LLM context windows by 16× and achieves up to a 30.85× acceleration in time-to-first-token (TTFT) without compromising accuracy.

Why is long context such a bottleneck for LLMs?

The attention mechanism in large language models scales quadratically with input length: if a document is twice as long, the attention compute and memory cost can grow fourfold. This not only slows inference but also enlarges the key-value (KV) cache, making large-context applications impractical in production systems. In RAG settings, most retrieved passages contribute little to the final answer, yet the model still pays the full quadratic price to process them.
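The Python sketch below gives a rough illustration of that scaling. It estimates attention FLOPs and KV-cache size for two input lengths.

Several details are assumptions and are not stated in this article:

- The model shape (32 layers, hidden size 4096, fp16 values, no grouped-query attention) is a LLaMA-2-7B-like configuration.
- The FLOP count covers only the two attention matrix multiplies and ignores the projections and MLPs.
- The function names are hypothetical.

```python
def attention_matmul_flops(seq_len: int, d_model: int, n_layers: int) -> int:
    """Approximate FLOPs of the two attention matmuls (QK^T and weights @ V).

    Each matmul is seq_len x seq_len x d_model multiply-adds per layer,
    counted as 2 FLOPs per multiply-add. Projections and MLPs are ignored.
    """
    per_matmul = 2 * seq_len * seq_len * d_model
    return 2 * per_matmul * n_layers


def kv_cache_bytes(seq_len: int, d_model: int, n_layers: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache one key and one value vector per token per layer."""
    return 2 * n_layers * seq_len * d_model * bytes_per_value


# Assumed LLaMA-2-7B-like shape (illustrative, not taken from the REFRAG paper).
D_MODEL, N_LAYERS = 4096, 32

for n in (4096, 8192):
    flops = attention_matmul_flops(n, D_MODEL, N_LAYERS)
    kv_gib = kv_cache_bytes(n, D_MODEL, N_LAYERS) / 2**30
    print(f"{n:>5} tokens: attention matmuls ~{flops / 1e12:.1f} TFLOPs, KV cache {kv_gib:.1f} GiB")
```

Doubling the length quadruples the attention term but only doubles the KV cache, which grows linearly with the number of cached tokens.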

How does REFRAG compress and shorten the context?

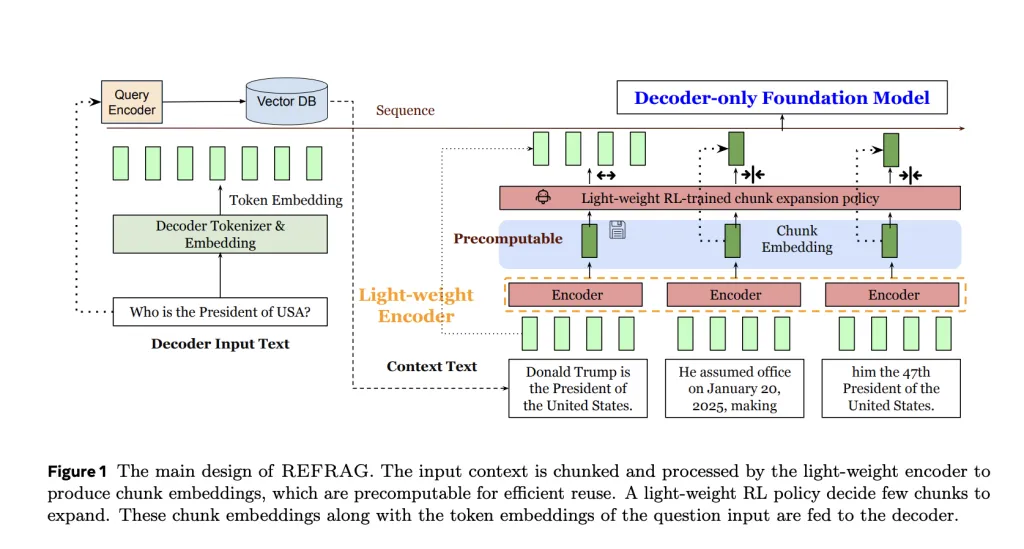

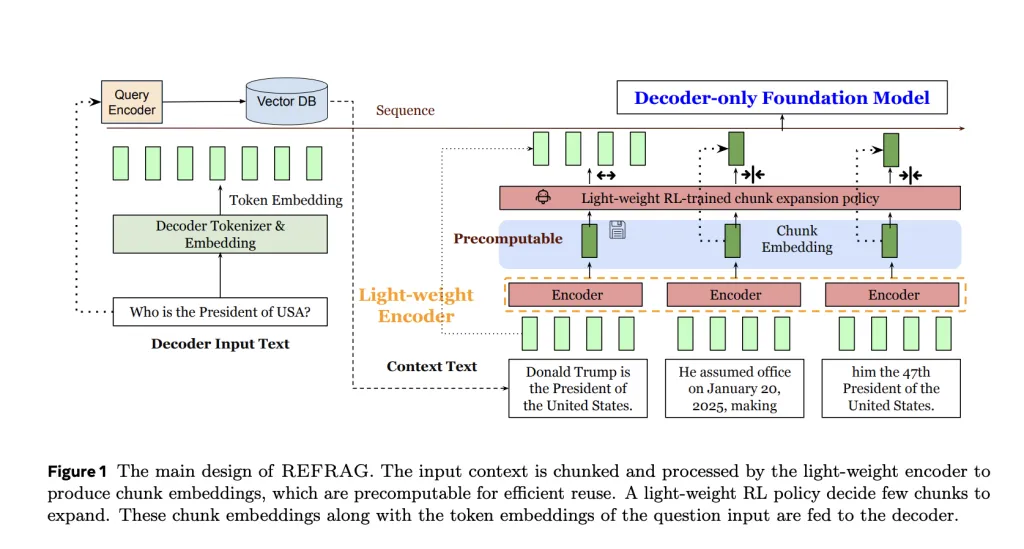

REFRAG introduces a lightweight encoder that splits retrieved passages into fixed-size chunks (e.g., 16 tokens) and compresses each one into a dense chunk embedding. Instead of feeding thousands of raw tokens, the decoder processes this much shorter sequence of embeddings. With 16-token chunks, the result is a 16× reduction in sequence length, with no change to the LLM architecture.
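The chunk-and-compress step can be sketched in plain Python as follows. This is a minimal illustration, not REFRAG's implementation:

- Mean pooling stands in for the trained lightweight encoder.
- `toy_embed` is a hypothetical 2-dimensional token embedding.
- All function names are illustrative only.

```python
from typing import Callable, List

Vector = List[float]


def chunk_tokens(tokens: List[int], k: int) -> List[List[int]]:
    """Split a token sequence into consecutive chunks of at most k tokens."""
    return [tokens[i:i + k] for i in range(0, len(tokens), k)]


def encode_chunk(token_vectors: List[Vector]) -> Vector:
    """Hypothetical stand-in for REFRAG's learned encoder: mean-pool the chunk.

    The real system uses a trained lightweight encoder; averaging is only a
    placeholder that keeps this sketch self-contained.
    """
    dim = len(token_vectors[0])
    n = len(token_vectors)
    return [sum(v[j] for v in token_vectors) / n for j in range(dim)]


def compress_context(tokens: List[int], embed: Callable[[int], Vector], k: int = 16) -> List[Vector]:
    """Replace each k-token chunk with a single chunk embedding."""
    return [encode_chunk([embed(t) for t in chunk]) for chunk in chunk_tokens(tokens, k)]


def toy_embed(token_id: int) -> Vector:
    """Hypothetical 2-dimensional token embedding used only for this demo."""
    return [float(token_id), 1.0]


passage = list(range(1024))  # 1,024 retrieved-passage tokens
compressed = compress_context(passage, toy_embed, k=16)
print(len(passage), "->", len(compressed))  # 1024 -> 64
```

The decoder would then attend over 64 positions instead of 1,024. The chunk size `k` directly sets the compression ratio.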

How is the acceleration achieved?

By shortening the decoder's input sequence, REFRAG reduces the quadratic attention computation and shrinks the KV cache. Empirical results show a 16.53× TTFT acceleration at k=16 and a 30.85× acceleration at k=32, far surpassing the prior state-of-the-art CEPE (which achieved only 2–8×). Throughput also improves by up to 6.78× compared with LLaMA baselines.
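The back-of-envelope sketch below shows how the compression rate k translates into fewer decoder positions. This is an idealized estimate under stated assumptions:

- The query stays as raw tokens.
- Every k context tokens become one embedding.
- Encoder cost and the decoder's linear (projection/MLP) work are ignored.

The 4,096-token context and the function name are hypothetical.

```python
import math


def idealized_reduction(context_tokens: int, k: int, query_tokens: int = 0) -> dict:
    """Idealized savings from replacing every k context tokens with one embedding.

    Assumes the query stays as raw tokens and ignores encoder cost and the
    linear (projection/MLP) parts of the decoder, so this is a rough estimate,
    not a prediction of measured TTFT.
    """
    original = context_tokens + query_tokens
    compressed = math.ceil(context_tokens / k) + query_tokens
    return {
        "decoder_positions": compressed,
        "attention_ratio": original ** 2 / compressed ** 2,
        "kv_cache_ratio": original / compressed,
    }


for k in (16, 32):
    print(k, idealized_reduction(context_tokens=4096, k=k))
```

The measured TTFT speedups (16.53× and 30.85×) are far below these idealized attention ratios but track k closely. This is plausible because prefill also includes work that scales only linearly with sequence length.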

How does REFRAG preserve accuracy?

A reinforcement learning (RL) policy supervises compression. It identifies the most information-dense chunks and lets them bypass compression, feeding their raw tokens directly into the decoder. This selective strategy keeps critical details, such as exact numbers or rare entities, from being lost. Across multiple benchmarks, REFRAG maintained or improved perplexity compared with CEPE while running at far lower latency.
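The selective-expansion step can be sketched as follows. This is a simplification for illustration, not the trained policy described in the paper:

- The `scores` are hypothetical numbers standing in for the output of REFRAG's RL policy.
- Greedy top-`budget` selection replaces the learned policy.
- The function names and the `budget` parameter are ours.

```python
from typing import List, Sequence, Set, Tuple


def select_chunks_to_expand(scores: Sequence[float], budget: int) -> Set[int]:
    """Pick the `budget` highest-scoring chunks; ties go to the earlier chunk."""
    ranked = sorted(range(len(scores)), key=lambda i: (-scores[i], i))
    return set(ranked[:budget])


def build_decoder_input(
    chunks: List[List[int]],
    chunk_embeddings: Sequence[object],
    scores: Sequence[float],
    budget: int,
) -> List[Tuple[str, object]]:
    """Mix raw tokens (expanded chunks) and chunk embeddings (the rest), in order."""
    expand = select_chunks_to_expand(scores, budget)
    sequence: List[Tuple[str, object]] = []
    for i, (chunk, emb) in enumerate(zip(chunks, chunk_embeddings)):
        if i in expand:
            sequence.extend(("token", t) for t in chunk)  # bypass compression
        else:
            sequence.append(("chunk_emb", emb))  # one decoder position per chunk
    return sequence


chunks = [[101, 102], [2024, 7], [55, 56]]
embeddings = ["e0", "e1", "e2"]  # placeholders for real chunk vectors
scores = [0.1, 0.9, 0.3]  # hypothetical policy scores
print(build_decoder_input(chunks, embeddings, scores, budget=1))
```

Only the highest-scoring chunk (the one containing the number 2024) reaches the decoder as raw tokens. The other chunks each cost a single position.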

What do the experiments show?

REFRAG was pretrained on 20B tokens from the SlimPajama corpus (Books + arXiv) and evaluated on long-context datasets including Book, Arxiv, PG19, and ProofPile. On RAG benchmarks, multi-turn conversation tasks, and long-document summarization, REFRAG consistently outperformed strong baselines:

- 16× context extension beyond standard LLaMA-2 (4k tokens).

- ~9.3% perplexity improvement over CEPE across four datasets.

- Better accuracy in weak-retriever settings, where irrelevant passages dominate, thanks to the ability to process more passages within the same latency budget.
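The arithmetic behind the last two points can be sketched as follows. The sketch rests on three assumptions:

- The number of decoder positions is a rough proxy for latency.
- The position budget is the 4k-token LLaMA-2 window.
- Every passage is 128 tokens long (a hypothetical length).

The function name is illustrative only.

```python
import math


def passages_that_fit(position_budget: int, passage_tokens: int, k: int = 1) -> int:
    """How many equal-length passages fit in a fixed number of decoder positions.

    k = 1 means raw tokens; k > 1 means each k-token chunk costs one position.
    """
    positions_per_passage = math.ceil(passage_tokens / k)
    return position_budget // positions_per_passage


BUDGET = 4096  # assumed: the LLaMA-2 4k-token window used as the position budget
PASSAGE_LEN = 128  # hypothetical retrieved-passage length in tokens

print("raw:", passages_that_fit(BUDGET, PASSAGE_LEN))
print("k=16:", passages_that_fit(BUDGET, PASSAGE_LEN, k=16))
print("16x context:", 16 * BUDGET, "tokens")
```

Under these assumptions, the same budget holds 16 times as many passages. This gives a weak retriever more chances to surface a relevant one.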

Summary

REFRAG shows that long-context LLMs don't have to be slow or memory-hungry. The researchers achieved this in three ways:

- compressing retrieved passages into compact embeddings;
- selectively expanding only the important ones;
- rethinking how RAG decoding works.

Together, these make it possible to process much larger inputs while running dramatically faster. Large-context applications, such as analyzing entire reports, handling multi-turn conversations, or scaling enterprise RAG systems, become not only feasible but efficient, without compromising accuracy.

FAQs

Q1. What is REFRAG?

REFRAG (REpresentation For RAG) is a decoding framework from Meta Superintelligence Labs that compresses retrieved passages into embeddings, enabling faster and longer-context inference in LLMs.

Q2. How much faster is REFRAG than existing methods?

REFRAG delivers up to 30.85× faster time-to-first-token (TTFT) and a 6.78× throughput improvement over LLaMA baselines, while also outperforming CEPE.

Q3. Does compression reduce accuracy?

No. A reinforcement learning policy keeps critical chunks uncompressed, preserving key details. Across benchmarks, REFRAG maintained or improved accuracy relative to prior methods.

Q4. Where will the code be available?

Meta Superintelligence Labs will release REFRAG on GitHub at facebookresearch/refrag.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost, which stands out for in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform draws over 2 million monthly views, illustrating its popularity among readers.