Embedding-based search outperforms traditional keyword-based methods across various domains by capturing semantic similarity using dense vector representations and approximate nearest neighbor (ANN) search. However, the ANN data structure brings excessive storage overhead, often 1.5 to 7 times the size of the original raw data. This overhead is manageable in large-scale web applications but becomes impractical for personal devices or large datasets. Reducing storage to under 5% of the original data size is critical for edge deployment, but existing solutions fall short. Techniques like product quantization (PQ) can reduce storage, but they either degrade accuracy or increase search latency.
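
To make these storage figures concrete, the following sketch estimates per-item index size relative to the raw data. All inputs are hypothetical assumptions, not numbers from the paper: roughly 1 KB of raw text per passage, 768-dimensional float32 embeddings, 4-byte neighbor IDs, 32 graph neighbors per node, and 96-byte PQ codes.

```python
def index_to_raw_ratio(raw_bytes, vector_bytes, avg_degree, id_bytes=4):
    """Per-item index footprint (stored vector + neighbor-id list) relative to raw data."""
    return (vector_bytes + avg_degree * id_bytes) / raw_bytes

RAW = 1_000   # hypothetical: ~1 KB of text per passage
DIM = 768     # hypothetical embedding dimension

full = index_to_raw_ratio(RAW, DIM * 4, avg_degree=32)    # float32 vectors + graph
pq = index_to_raw_ratio(RAW, 96, avg_degree=32)           # 96-byte PQ codes + graph
graph_only = index_to_raw_ratio(RAW, 0, avg_degree=8)     # no stored vectors, sparse graph

for name, r in [("float32 + graph", full), ("PQ + graph", pq), ("graph only", graph_only)]:
    print(f"{name:16s} {r:7.1%} of raw data")
```

Under these assumptions, full-precision vectors plus the graph take about 320% of the raw data and PQ codes still take about 22%. Only an index that stores no vectors at all (here, a sparse 8-neighbor graph at 3.2%) fits under a 5% budget, which is why recomputing embeddings at query time becomes attractive.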

Vector search methods rely on inverted file (IVF) indexes and proximity graphs. Graph-based approaches like HNSW, NSG, and Vamana are considered state-of-the-art due to their balance of accuracy and efficiency. Efforts to reduce graph size, such as learned neighbor selection, face limitations due to high training costs and dependency on labeled data. For resource-constrained environments, DiskANN and Starling store data on disk, while FusionANNS optimizes hardware utilization. Methods like AiSAQ and EdgeRAG attempt to minimize memory usage but still suffer from high storage overhead or performance degradation at scale. Embedding compression techniques like PQ and RaBitQ offer quantization (RaBitQ with theoretical error bounds) but struggle to maintain accuracy under tight storage budgets.
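
Product quantization splits each vector into m subvectors, learns a small k-means codebook per subspace, and stores only the index of the nearest centroid for each subvector. The pure-Python sketch below shows that idea on random data; the sizes (16 dimensions, 4 subspaces, 16 centroids) are arbitrary illustrative choices, and production systems use optimized libraries rather than code like this.

```python
import random

def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def split(vec, m):
    """Cut a vector into m equal-length subvectors (len(vec) must divide by m)."""
    sub = len(vec) // m
    return [vec[i * sub:(i + 1) * sub] for i in range(m)]

def kmeans(points, k, iters=15, seed=0):
    """Plain Lloyd's k-means; returns k centroids."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: sq_dist(p, centroids[c]))
            clusters[nearest].append(p)
        for j, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous centroid
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return centroids

def pq_train(vectors, m, k):
    """Train one k-centroid codebook per subspace (k <= 256 so codes fit in a byte)."""
    assert k <= 256
    parts = [split(v, m) for v in vectors]
    return [kmeans([p[i] for p in parts], k, seed=i) for i in range(m)]

def pq_encode(vec, codebooks):
    """Replace each subvector by the index of its nearest centroid: m bytes total."""
    return bytes(
        min(range(len(cb)), key=lambda c: sq_dist(part, cb[c]))
        for part, cb in zip(split(vec, len(codebooks)), codebooks)
    )

def pq_decode(code, codebooks):
    """Approximate reconstruction: concatenate the selected centroids."""
    return [x for c, cb in zip(code, codebooks) for x in cb[c]]

rng = random.Random(42)
DIM, M, K = 16, 4, 16
data = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(400)]
books = pq_train(data, M, K)
codes = [pq_encode(v, books) for v in data]

raw_bytes = DIM * 4          # float32 storage per vector
code_bytes = len(codes[0])   # one byte per subvector
mse = sum(sq_dist(v, pq_decode(c, books)) for v, c in zip(data, codes)) / (len(data) * DIM)
print(f"{raw_bytes} B -> {code_bytes} B per vector, per-dimension MSE {mse:.3f}")
```

Each 64-byte float32 vector shrinks to 4 bytes of codes, a 16× reduction, at the cost of a nonzero reconstruction error; that lost precision is the accuracy trade-off the paragraph above describes.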

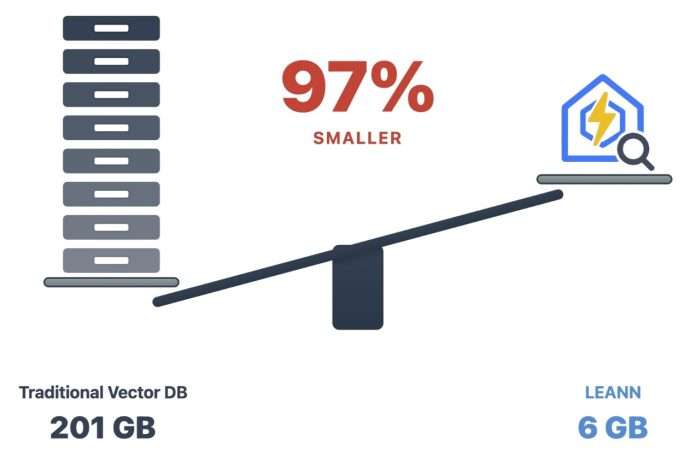

Researchers from UC Berkeley, CUHK, Amazon Web Services, and UC Davis have developed LEANN, a storage-efficient ANN search index optimized for resource-limited personal devices. It integrates a compact graph-based structure with an on-the-fly recomputation strategy, enabling fast and accurate retrieval while minimizing storage overhead. LEANN achieves up to 50 times smaller storage than standard indexes by reducing the index size to under 5% of the original raw data. It maintains 90% top-3 recall in under 2 seconds on real-world question-answering benchmarks. To reduce latency, LEANN uses a two-level traversal algorithm and dynamic batching that combines embedding computations across search hops, improving GPU utilization.
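
One way to picture on-the-fly recomputation with dynamic batching is a best-first graph search that stores no vectors and asks the embedding model for them only when a node is first reached. Instead of calling the model once per expansion, the sketch keeps expanding candidates until enough unseen neighbors are queued, then embeds them in a single call. This is a minimal illustration under stated assumptions, not LEANN's implementation: `embed_batch` is a hypothetical stand-in for a neural encoder (here a table lookup), the two-level traversal is omitted, and `ef` and `batch_size` are illustrative parameters.

```python
import heapq

def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def make_toy_encoder(table):
    """Hypothetical stand-in for the embedding model: looks vectors up in a table
    and counts how many (batched) model calls the search makes."""
    stats = {"calls": 0, "embedded": 0}
    def embed_batch(ids):
        stats["calls"] += 1
        stats["embedded"] += len(ids)
        return [table[i] for i in ids]
    return embed_batch, stats

def batched_recompute_search(graph, entry, query, embed_batch, k=3, ef=16, batch_size=8):
    """Best-first search that stores no vectors: embeddings are recomputed on demand,
    and unseen neighbors from several expansions are sent to the model as one batch."""
    dist = {entry: sq_dist(query, embed_batch([entry])[0])}
    frontier = [(dist[entry], entry)]   # min-heap: candidates still to expand
    best = [(-dist[entry], entry)]      # max-heap via negation: current top-ef
    while frontier:
        pending, queued = [], set()
        # Relax strict best-first order: keep expanding until a batch is full.
        while frontier and len(pending) < batch_size:
            d, node = heapq.heappop(frontier)
            if len(best) >= ef and d > -best[0][0]:
                frontier.clear()        # remaining candidates cannot improve top-ef
                break
            for nb in graph[node]:
                if nb not in dist and nb not in queued:
                    pending.append(nb)
                    queued.add(nb)
        if not pending:
            continue
        for nb, vec in zip(pending, embed_batch(pending)):  # one model call per batch
            dist[nb] = sq_dist(query, vec)
            if len(best) < ef or dist[nb] < -best[0][0]:
                heapq.heappush(frontier, (dist[nb], nb))
                heapq.heappush(best, (-dist[nb], nb))
                if len(best) > ef:
                    heapq.heappop(best)
    return [node for _, node in sorted((-neg_d, node) for neg_d, node in best)][:k]

# Toy data: 100 points on a line, each linked to its +/-1 and +/-10 neighbors.
vectors = {i: [float(i)] for i in range(100)}
graph = {i: [j for j in (i - 10, i - 1, i + 1, i + 10) if 0 <= j < 100] for i in range(100)}

for size in (1, 8):
    embed_batch, stats = make_toy_encoder(vectors)
    top = batched_recompute_search(graph, 0, [73.2], embed_batch, batch_size=size)
    print(f"batch_size={size}: top-3={top}, model calls={stats['calls']}, "
          f"embeddings computed={stats['embedded']}")
```

Both settings return the same top-3 on this toy graph, but the batched run makes fewer model calls, each covering more nodes; on a GPU, larger batches are what improve utilization.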

LEANN's design rests on graph-based recomputation, two main techniques, and a system workflow. Built on the HNSW framework, it exploits the observation that each query needs embeddings for only a limited subset of nodes, so it computes those embeddings on demand instead of pre-storing all of them. To address the resulting challenges, LEANN introduces two techniques: (a) a two-level graph traversal with dynamic batching to lower recomputation latency, and (b) a high-degree preserving graph pruning method to reduce the storage taken by graph metadata. In the system workflow, LEANN begins by computing embeddings for all dataset items and then constructs a vector index using an off-the-shelf graph-based indexing approach.
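
The pruning idea can be illustrated with a simplified sketch: rank nodes by out-degree, let a small fraction of hubs keep a larger neighbor budget, and cap every other node at a much smaller one, which shrinks the neighbor-ID metadata. Everything here is an assumption for illustration: the function name, the parameters `hub_frac`, `hub_degree`, and `other_degree`, and the rule of keeping each node's nearest neighbors. LEANN's actual pruning algorithm may select edges differently.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def degree_preserving_prune(graph, vectors, hub_frac=0.1, hub_degree=8, other_degree=2):
    """Simplified sketch: the hub_frac of nodes with the highest out-degree keep up to
    hub_degree nearest neighbors; every other node is capped at other_degree."""
    n_hubs = max(1, int(len(graph) * hub_frac))
    by_degree = sorted(graph, key=lambda v: len(graph[v]), reverse=True)
    hubs = set(by_degree[:n_hubs])
    pruned = {}
    for v, nbrs in graph.items():
        cap = hub_degree if v in hubs else other_degree
        nearest_first = sorted(nbrs, key=lambda u: sq_dist(vectors[v], vectors[u]))
        pruned[v] = nearest_first[:cap]
    return pruned, hubs

def edge_bytes(graph, id_bytes=4):
    """Metadata cost of storing every neighbor id."""
    return id_bytes * sum(len(nbrs) for nbrs in graph.values())

# Toy graph: 50 points on a line; multiples of 10 link to every node (hubs),
# other nodes link to points within distance 3 and to the hubs.
vectors = {i: [float(i)] for i in range(50)}
graph = {
    i: sorted({j for j in range(50) if j != i and (abs(i - j) <= 3 or i % 10 == 0 or j % 10 == 0)})
    for i in range(50)
}
pruned, hubs = degree_preserving_prune(graph, vectors)
print(sorted(hubs), edge_bytes(graph), "->", edge_bytes(pruned), "bytes of neighbor ids")
```

On this toy graph, the five hubs keep 8 edges each and the other 45 nodes keep 2, so the neighbor lists shrink to 130 IDs (520 bytes at 4 bytes per ID). The embeddings in `vectors` are only needed while the graph is built, in line with the workflow of computing all embeddings before constructing the index.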

In terms of storage and latency, LEANN outperforms EdgeRAG, an IVF-based recomputation method, achieving latency reductions ranging from 21.17 to 200.60 times across various datasets and hardware platforms. This advantage comes from LEANN's polylogarithmic recomputation complexity, which scales more efficiently than EdgeRAG's √N growth. In terms of accuracy on downstream RAG tasks, LEANN achieves higher performance on most datasets, except GPQA, where a distributional mismatch limits its effectiveness. Similarly, on HotpotQA, the single-hop retrieval setup limits accuracy gains, since the dataset demands multi-hop reasoning. Despite these limitations, LEANN shows strong performance across diverse benchmarks.
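
The scaling argument can be checked with a back-of-the-envelope model. Assuming an IVF index with about √N clusters of about √N items each, every probed cluster forces roughly √N embeddings to be recomputed, whereas a graph search touches a polylogarithmic number of nodes. The sketch uses (log2 N)^2 as a hypothetical stand-in for that polylog term and drops all constants, so only the trend is meaningful, not the absolute numbers.

```python
import math

def ivf_recompute_cost(n, probes=1):
    """IVF-style recomputation, assuming ~sqrt(n) clusters of ~sqrt(n) items:
    every probed cluster means re-embedding about sqrt(n) items."""
    return probes * math.sqrt(n)

def graph_recompute_cost(n, c=1.0):
    """Hypothetical polylogarithmic model for graph search: c * (log2 n)^2."""
    return c * math.log2(n) ** 2

sizes = [10**4, 10**6, 10**8, 10**10]
ratios = [ivf_recompute_cost(n) / graph_recompute_cost(n) for n in sizes]
for n, r in zip(sizes, ratios):
    print(f"N={n:>14,}  sqrt(N)={ivf_recompute_cost(n):>9,.0f}  "
          f"(log2 N)^2={graph_recompute_cost(n):>6,.0f}  ratio={r:7.2f}")
```

Without constants, the graph model is actually more expensive at N = 10^4 (ratio about 0.57), but the ratio rises to roughly 90 at N = 10^10, illustrating why the gap widens as datasets grow.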

In this paper, the researchers introduced LEANN, a storage-efficient neural retrieval system that combines graph-based recomputation with novel optimizations. By recomputing embeddings on demand, and using a two-level search algorithm and dynamic batching to keep that recomputation fast, it eliminates the need to store full embeddings, achieving significant reductions in storage overhead while maintaining high accuracy. Despite its strengths, LEANN faces limitations, such as high peak storage usage during index construction, which could be addressed by pre-clustering or other techniques. Future work could focus on reducing latency and improving responsiveness, opening the path for broader adoption in resource-constrained environments.

Check out the Paper and GitHub Page.

Sajjad Ansari is a final year undergraduate from IIT Kharagpur. As a tech enthusiast, he delves into the practical applications of AI with a focus on understanding the impact of AI technologies and their real-world implications. He aims to articulate complex AI concepts in a clear and accessible manner.