Anthropic’s Claude Code large language model has been abused by threat actors who used it in data extortion campaigns and to develop ransomware packages.

The company says that its tool has also been used in fraudulent North Korean IT worker schemes and to distribute lures for Contagious Interview campaigns, in Chinese APT campaigns, and by a Russian-speaking developer to create malware with advanced evasion capabilities.

AI-created ransomware

In another instance, tracked as ‘GTG-5004,’ a UK-based threat actor used Claude Code to develop and commercialize a ransomware-as-a-service (RaaS) operation.

The AI utility helped create all the required tools for the RaaS platform, implementing the ChaCha20 stream cipher with RSA key management in the modular ransomware, shadow copy deletion, options for specific file targeting, and the ability to encrypt network shares.

On the evasion front, the ransomware loads via reflective DLL injection and features syscall invocation techniques, API hooking bypass, string obfuscation, and anti-debugging.

Anthropic says that the threat actor relied almost entirely on Claude to implement the most knowledge-demanding parts of the RaaS platform, noting that, without AI assistance, they would most likely have failed to produce working ransomware.

“The most striking finding is the actor’s seemingly complete dependency on AI to develop functional malware,” reads the report.

“This operator does not appear capable of implementing encryption algorithms, anti-analysis techniques, or Windows internals manipulation without Claude’s assistance.”

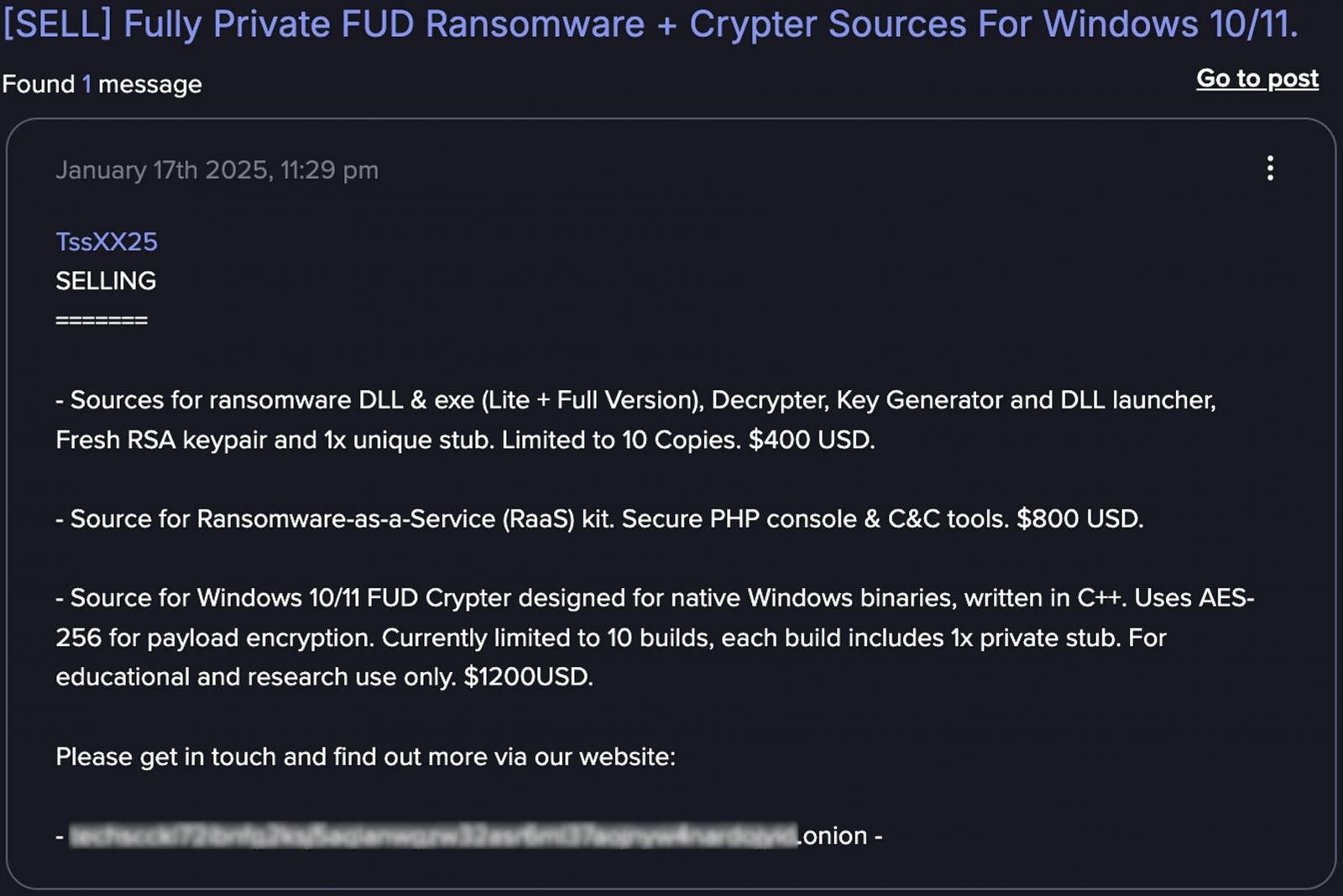

After creating the RaaS operation, the threat actor offered ransomware executables, kits with PHP consoles and command-and-control (C2) infrastructure, and Windows crypters for $400 to $1,200 on dark web forums such as Dread, CryptBB, and Nulled.

Source: Anthropic

AI-operated extortion campaign

In one of the analyzed cases, which Anthropic tracks as ‘GTG-2002,’ a cybercriminal used Claude as an active operator to conduct a data extortion campaign against at least 17 organizations in the government, healthcare, financial, and emergency services sectors.

The AI agent performed network reconnaissance and helped the threat actor achieve initial access, and then generated custom malware based on the Chisel tunneling tool to use for sensitive data exfiltration.

After the attack failed, Claude Code was used to make the malware hide itself better by providing techniques for string encryption, anti-debugging code, and filename masquerading.

Claude was subsequently used to analyze the stolen files to set the ransom demands, which ranged between $75,000 and $500,000, and even to generate custom HTML ransom notes for each victim.

“Claude not only performed ‘on-keyboard’ operations but also analyzed exfiltrated financial data to determine appropriate ransom amounts and generated visually alarming HTML ransom notes that were displayed on victim machines by embedding them into the boot process” – Anthropic.

Anthropic called this attack an example of “vibe hacking,” reflecting the use of AI coding agents as partners in cybercrime, rather than employing them outside the operation’s context.

Anthropic’s report includes more examples where Claude Code was put to illegal use, albeit in less complex operations. The company says that its LLM assisted a threat actor in developing advanced API integration and resilience mechanisms for a carding service.

Another cybercriminal leveraged AI power for romance scams, generating “high emotional intelligence” replies, creating images that improved profiles, and developing emotional manipulation content to target victims, as well as providing multi-language support for wider targeting.

For each of the presented cases, the AI developer provides tactics and techniques that could help other researchers uncover new cybercriminal activity or make a connection to a known illegal operation.

Anthropic has banned all accounts linked to the malicious operations it detected, built tailored classifiers to detect suspicious use patterns, and shared technical indicators with external partners to help defend against these cases of AI misuse.