Ask a question in ChatGPT, Perplexity, Gemini, or Copilot, and the answer appears in seconds. It feels effortless. But under the hood, there’s no magic. There’s a fight happening.

This is the part of the pipeline where your content is in a knife fight with every other candidate. Every passage in the index wants to be the one the model selects.

For SEOs, this is a new battleground. Traditional SEO was about ranking on a page of results. Now, the contest happens inside an answer selection system. And if you want visibility, you need to understand how that system works.

Image Credit: Duane Forrester

The Answer Selection Stage

This isn’t crawling, indexing, or embedding in a vector database. That part is done before the query ever happens. Answer selection kicks in after a user asks a question. The system already has content chunked, embedded, and stored. What it needs to do is find candidate passages, score them, and decide which ones to pass into the model for generation.

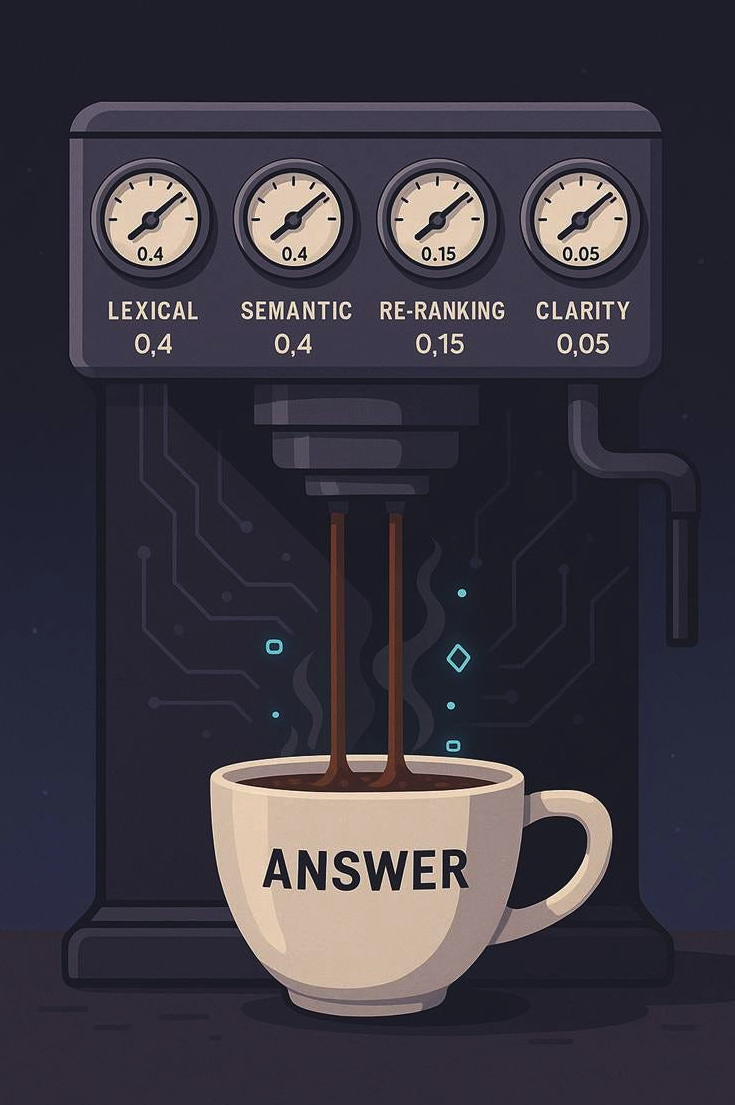

Every modern AI search pipeline uses the same three stages (across four steps): retrieval, re-ranking, and readability checks. Each stage matters. Each carries weight. And while every platform has its own recipe (the weighting assigned at each step/stage), the research gives us enough visibility to sketch a realistic starting point: essentially, to build our own model that at least partially replicates what’s going on.

The Builder’s Baseline

If you were building your own LLM-based search system, you’d have to tell it how much each stage counts. That means assigning normalized weights that sum to 1.

A defensible, research-informed starting stack might look like this:

- Lexical retrieval (keywords, BM25): 0.4.

- Semantic retrieval (embeddings, meaning): 0.4.

- Re-ranking (cross-encoder scoring): 0.15.

- Readability and structural boosts: 0.05.
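To make the arithmetic concrete, here is a minimal sketch of how four normalized weights could be combined into a single passage score. The function name, the dictionary keys, and the assumption that every signal is already scaled to the range 0 to 1 are illustrative choices, not a description of any real platform.

```python
# Illustrative weights taken from the list above. Real platforms do not
# publish their values, so treat these as a starting assumption only.
WEIGHTS = {
    "lexical": 0.40,
    "semantic": 0.40,
    "rerank": 0.15,
    "readability": 0.05,
}


def combined_score(signals, weights=WEIGHTS):
    """Return the weighted sum of per-stage signals.

    Each signal is assumed to be normalized to [0, 1]; a missing signal
    counts as 0.
    """
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[name] * signals.get(name, 0.0) for name in weights)
```

Because the weights sum to 1, a passage that maxes out every signal scores 1, and a passage with strong lexical overlap but nothing else tops out at 0.4.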

Every major AI system has its own proprietary blend, but they’re all essentially brewing from the same core ingredients. What I’m showing you here is the typical starting point for an enterprise search system, not exactly what ChatGPT, Perplexity, Claude, Copilot, or Gemini operate with. We’ll never know those weights.

Hybrid defaults across the industry back this up. Weaviate’s hybrid search alpha parameter defaults to 0.5, an equal balance between keyword matching and embeddings. Pinecone teaches the same default in its hybrid overview.
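The idea behind an alpha parameter can be sketched as a simple convex blend of two scores. This is a generic illustration, not Weaviate’s or Pinecone’s actual implementation; the function name and the convention that alpha weights the embedding score are assumptions of the sketch, and both inputs are assumed to be normalized to the same 0 to 1 range.

```python
def hybrid_score(keyword_score, vector_score, alpha=0.5):
    """Blend a keyword score and an embedding score.

    In this sketch, alpha = 1.0 means embeddings only, alpha = 0.0 means
    keywords only, and alpha = 0.5 weights both equally.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return alpha * vector_score + (1.0 - alpha) * keyword_score
```

With the equal-balance setting, a passage that is weak on keywords but strong on meaning lands in the middle rather than being dropped outright.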

Re-ranking gets 0.15 because it only applies to the short list. Yet its impact is proven: “Passage Re-Ranking with BERT” showed major accuracy gains when BERT was layered on BM25 retrieval.
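The “short list” pattern can be sketched as two passes: a cheap scorer over every passage, then a more careful scorer over only the top few. The two scorers below are toy stand-ins (word overlap in place of BM25, an “answer appears early” heuristic in place of a cross-encoder), and every name here is hypothetical.

```python
def token_overlap(query, passage):
    """Toy first-stage scorer: share of query words found in the passage."""
    q = set(query.lower().split())
    p = set(passage.lower().split())
    return len(q & p) / len(q) if q else 0.0


def answer_first(query, passage):
    """Toy re-ranker: reward passages where a query word appears early."""
    q = set(query.lower().split())
    words = passage.lower().split()
    positions = [i for i, word in enumerate(words) if word in q]
    return 1.0 / (1 + positions[0]) if positions else 0.0


def retrieve_then_rerank(query, passages, first_stage, reranker, k=3):
    """Score everything cheaply, keep the top k, then re-order only those."""
    shortlist = sorted(passages, key=lambda p: first_stage(query, p), reverse=True)[:k]
    return sorted(shortlist, key=lambda p: reranker(query, p), reverse=True)
```

The design point is cost: the second scorer can afford to be slower because it only ever sees k passages, which is why it shapes the final order without deciding who enters the pool.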

Readability gets 0.05. It’s small, but real. A passage that leads with the answer, is dense with facts, and can be lifted whole is more likely to win. That matches the findings from my own piece on semantic overlap vs. density.

At first glance, this might sound like “just SEO with different math.” It isn’t. Traditional SEO has always been guesswork inside a black box. We never really had access to the algorithms in a format that was close to their production versions. With LLM systems, we finally have something search never really gave us: access to all the research they’re built on. The dense retrieval papers, the hybrid fusion methods, the re-ranking models: they’re all public. That doesn’t mean we know exactly how ChatGPT or Gemini dials their knobs or tunes their weights, but it does mean we can sketch a model of how they likely work much more easily.

From Weights To Visibility

So, what does this mean if you’re not building the machine but competing inside it?

Overlap gets you into the room, density makes you credible, lexical keeps you from being filtered out, and readability makes you the winner.

That’s the logic of the answer selection stack.

Lexical retrieval is still 40% of the fight. If your content doesn’t contain the words people actually use, you don’t even enter the pool.

Semantic retrieval is another 40%. This is where embeddings capture meaning. A paragraph that ties related concepts together maps better than one that is thin and isolated. This is how your content gets picked up when users phrase queries in ways you didn’t anticipate.

Re-ranking is 15%. It’s where readability and structure matter most. Passages that look like direct answers rise. Passages that bury the conclusion drop.

Readability and structure are the tie-breaker. 5% might not sound like much, but in close fights, it decides who wins.

Two Examples

Zapier’s Help Content

Zapier’s documentation is famously clear and answer-first. A query like “How to connect Google Sheets to Slack” returns a ChatGPT answer that starts with the exact steps outlined because the content from Zapier provides the exact data needed. When you click through a ChatGPT resource link, the page you land on is not a blog post; it’s probably not even a help article. It’s the actual page that lets you accomplish the task you asked for.

- Lexical? Strong. The words “Google Sheets” and “Slack” are right there.

- Semantic? Strong. The passage clusters related terms like “integration,” “workflow,” and “trigger.”

- Re-ranking? Strong. The steps lead with the answer.

- Readability? Very strong. Scannable, answer-first formatting.

In a 0.4 / 0.4 / 0.15 / 0.05 system, Zapier’s chunk scores across all dials. This is why their content often shows up in AI answers.

A Marketing Blog Post

Contrast that with a typical long marketing blog post about “team productivity hacks.” The post mentions Slack, Google Sheets, and integrations, but only after 700 words of story.

- Lexical? Present, but buried.

- Semantic? Decent, but scattered.

- Re-ranking? Weak. The answer to “How do I connect Sheets to Slack?” is hidden in a paragraph halfway down.

- Readability? Weak. No liftable answer-first chunk.

Even though the content technically covers the topic, it struggles in this weighting model. The Zapier passage wins because it aligns with how the answer selection layer actually works.
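The comparison can be put into numbers with a small sketch. The signal values below are hypothetical, chosen only to mirror the qualitative labels in the two examples (“strong,” “decent,” “weak”); they are not measurements of either page, and the weights are the illustrative 0.4 / 0.4 / 0.15 / 0.05 baseline.

```python
WEIGHTS = {"lexical": 0.40, "semantic": 0.40, "rerank": 0.15, "readability": 0.05}

# Hypothetical signal values in [0, 1]. They translate the qualitative
# labels from the two examples into numbers and are not real measurements.
CANDIDATES = {
    "zapier_help_page": {
        "lexical": 0.9, "semantic": 0.9, "rerank": 0.9, "readability": 0.95,
    },
    "marketing_blog_post": {
        "lexical": 0.5, "semantic": 0.6, "rerank": 0.2, "readability": 0.2,
    },
}


def score(signals):
    """Weighted sum of the four signals for one candidate passage."""
    return sum(WEIGHTS[name] * signals[name] for name in WEIGHTS)


def rank(candidates):
    """Return candidate names ordered from highest to lowest score."""
    return sorted(candidates, key=lambda name: score(candidates[name]), reverse=True)


ranking = rank(CANDIDATES)
```

Under these assumed values the help page ranks first by a wide margin, and most of the gap comes from the two 0.4 retrieval weights rather than from the smaller re-ranking and readability dials.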

Traditional search still guides the user to read, evaluate, and decide if the page they land on answers their need. AI answers are different. They don’t ask you to parse results. They map your intent directly to the task or answer and move you straight into “get it done” mode. You ask, “How to connect Google Sheets to Slack,” and you end up with a list of steps or a link to the page where the work is completed. You don’t really get a blog post explaining how someone did this during their lunch break, and it only took five minutes.

Volatility Across Platforms

There’s another major difference from traditional SEO. Search engines, despite algorithm changes, converged over time. Ask Google and Bing the same question, and you’ll often see similar results.

LLM platforms don’t converge, or at least, haven’t so far. Ask the same question in Perplexity, Gemini, and ChatGPT, and you’ll often get three different answers. That volatility reflects how each system weights its dials. Gemini may emphasize citations. Perplexity may reward breadth of retrieval. ChatGPT may compress aggressively for conversational style. And we have data showing a huge gulf between the answers from a traditional engine and those from an LLM-powered answer platform. BrightEdge’s data (62% disagreement on brand recommendations) and ProFound’s data (…AI modules and answer engines differ dramatically from search engines, with just 8 – 12% overlap in results) showcase this clearly.

For SEOs, this means optimization isn’t one-size-fits-all anymore. Your content might perform well in one system and poorly in another. That fragmentation is new, and you’ll need to find ways to address it as consumer behavior around using these platforms for answers shifts.

Why This Matters

In the old model, hundreds of ranking factors blurred together into a consensus “best effort.” In the new model, it’s like you’re dealing with four big dials, and every platform tunes them differently. In fairness, the complexity behind these dials is still pretty vast.

Ignore lexical overlap, and you lose part of that 40% of the vote. Write semantically thin content, and you can lose another 40%. Ramble or bury your answer, and you won’t win re-ranking. Pad with fluff, and you miss the readability boost.

The knife fight doesn’t happen on a SERP anymore. It happens inside the answer selection pipeline. And it’s highly unlikely these dials are static. You can bet they move in relation to many other factors, including each other’s relative positioning.

The Next Layer: Verification

Today, answer selection is the last gate before generation. But the next stage is already in view: verification.

Research shows how models can critique themselves and raise factuality. Self-RAG demonstrates retrieval, generation, and critique loops. SelfCheckGPT runs consistency checks across multiple generations. OpenAI is reported to be building a Universal Verifier for GPT-5. I also wrote about this whole topic in a recent Substack article.

When verification layers mature, retrievability will only get you into the room. Verification will decide if you stay there.

Closing

This really isn’t regular SEO in disguise. It’s a shift. We can now more clearly see the gears turning because more of the research is public. We also see volatility because each platform spins those gears differently.

For SEOs, I think the takeaway is clear. Keep lexical overlap strong. Build semantic density into clusters. Lead with the answer. Make passages concise and liftable. And I do understand how much that sounds like traditional SEO guidance. I also understand how the platforms using the information differ so much from regular search engines. Those differences matter.

This is how you survive the knife fight inside AI. And soon, how you pass the verifier’s test once you’re there.


This post was originally published on Duane Forrester Decodes.

Featured Image: tete_escape/Shutterstock