The LangChain ecosystem provides an essential set of tools for building applications with Large Language Models (LLMs). However, when names such as LangChain, LangGraph, LangSmith, and LangFlow come up, it is often hard to know where to begin. This guide offers a straightforward way through that confusion. We will examine the purpose of each tool and show how they work together, then narrow down to a practical, hands-on case: developing multi-agent systems with these tools. Throughout the article, you will learn how to use LangGraph for orchestration and LangSmith for debugging. We will also use LangFlow as a prototyping tool. By the time you finish this article, you will know how to choose the right tools for your projects.

The LangChain Ecosystem at a Glance

Let's start with a quick look at the main tools.

- LangChain: This is the core framework. It provides the building blocks of LLM applications. Think of it as a catalog of components: models, prompt templates, and simple interfaces for data connectors. The entire LangChain ecosystem is built on LangChain.

- LangGraph: This is a library for building complex, stateful agents. While LangChain works well for simple chains, LangGraph lets you build loops, branches, and multi-step workflows. LangGraph is the best choice for orchestrating multi-agent systems.

- LangSmith: A monitoring and testing platform for your LLM applications. It lets you trace your chains and agents, which is essential for troubleshooting. Using LangSmith to debug complex workflows is one of the key steps in taking a prototype to a production application.

- LangFlow: A visual builder and experimentation environment for LangChain. Its drag-and-drop interface lets you build and test ideas very quickly with little code. It is an excellent tool for learning and for team collaboration.

These tools don't compete with each other; they are designed to be used together. LangChain gives you the components, LangGraph assembles them into more complex machines, LangSmith checks whether those machines are working properly, and LangFlow gives you a sandbox where you can design them.

Let's now explore each of these in detail.

1. LangChain: The Foundational Framework

LangChain is the foundational open-source framework (read all about it here). It connects LLMs to external data stores and tools, and it packages components as modular building blocks. This lets you create linear sequences of steps, known as Chains. Most LLM development projects use LangChain as their foundation.

Best For:

- Interactive chatbots that follow a fixed, scripted flow.

- Retrieval-augmented generation (RAG) pipelines.

- Linear workflows, i.e., workflows whose steps run in sequence.
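Retrieval-augmented pipelines follow a simple pattern: find the documents most relevant to a question, then place them in the prompt. The sketch below shows that pattern in plain Python, without LangChain. The keyword-overlap scoring, the sample documents, and the function names are illustrative assumptions; real pipelines usually rank documents with embeddings stored in a vector database.

```python
# Toy retrieval-augmented pipeline in plain Python (no LangChain).
# Keyword overlap stands in for embedding similarity (an assumption).

def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into words, stripping punctuation."""
    return {w.strip(".,!?").lower() for w in text.split() if w.strip(".,!?")}

def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents that share the most words with the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, context: list[str]) -> str:
    """Place the retrieved context into the prompt that would go to the LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"

docs = [
    "LangGraph adds loops and state management to LangChain.",
    "LangSmith records traces of every run for debugging.",
    "LangFlow is a drag-and-drop visual builder.",
]
question = "Which tool records traces?"
prompt = build_prompt(question, retrieve(question, docs))
print(prompt)
```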

Core Concept: Chains and the LangChain Expression Language (LCEL). LCEL uses the pipe symbol (|) to connect components to each other, which produces a readable, clear flow of data.
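To see why the pipe reads so naturally, here is a plain-Python sketch of the idea behind it. `Step` is a hypothetical class, not LangChain's `Runnable`; it only overloads `__or__` so that `a | b` returns a new step that runs `a` and feeds its output to `b`. The fake model and parser are stand-ins so the example runs without an API key.

```python
from typing import Any, Callable

class Step:
    """Hypothetical composable step; mimics the pipe idea, not LangChain's API."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def invoke(self, value: Any) -> Any:
        return self.fn(value)

    def __or__(self, other: "Step") -> "Step":
        # Compose: run this step, then pass its output to the next step.
        return Step(lambda value: other.invoke(self.invoke(value)))

# Stand-ins for a prompt template, a chat model, and an output parser.
prompt = Step(lambda d: f"Tell me a professional joke about {d['topic']}")
fake_model = Step(lambda text: {"content": f"[model reply to: {text}]"})
parser = Step(lambda msg: msg["content"])

chain = prompt | fake_model | parser
print(chain.invoke({"topic": "Data Science"}))
```

Because every composed object exposes the same `invoke` method, longer chains are built the same way; a shared interface like this is what lets LCEL components snap together.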

Maturity and Performance: LangChain is the oldest tool in the ecosystem. It has an enormous following and more than 120,000 stars on GitHub. Its design is minimalist, with low performance overhead. It is production-ready and deployed in thousands of applications.

Hands-on: Building a Basic Chain

This example shows how to create a simple chain that produces a professional joke about a given topic.

```python
from langchain_openai import ChatOpenAI
from langchain_core.prompts import ChatPromptTemplate

# 1. Initialize the LLM. We use GPT-4o here.
#    ChatOpenAI reads your key from the OPENAI_API_KEY environment variable.
model = ChatOpenAI(model="gpt-4o")

# 2. Define a prompt template. {topic} is a variable.
prompt = ChatPromptTemplate.from_template("Tell me a professional joke about {topic}")

# 3. Create the chain using the pipe operator (|).
#    This sends the formatted prompt to the model.
chain = prompt | model

# 4. Run the chain with a specific topic.
response = chain.invoke({"topic": "Data Science"})
print(response.content)
```

2. LangGraph: For Complex, Stateful Agents

LangGraph is an extension of LangChain that adds loops and state management (read all about it here). LangChain flows are linear (A-B-C). In contrast, LangGraph allows loops and branches (A-B-A). This is essential for agentic processes in which an AI needs to correct itself or reflect on its own output. These complexity requirements are usually what decides the LangChain vs LangGraph question.

Best For:

- Multi-agent systems in which agents cooperate.

- Autonomous research agents that loop between tasks.

- Processes that need to remember past actions.

Core Concept: In LangGraph, nodes are functions and edges are the paths between them. A shared state object flows through the graph, so information is shared across nodes.
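The state-passing idea can be sketched in a few lines of plain Python. In this hypothetical runner, each node receives the shared state and returns only the keys it wants to change, and the runner merges that update before calling the next node. This mirrors how LangGraph treats state keys that have no reducer (the new value overwrites the old one), but `run_linear`, `researcher`, and `writer` are illustrative names, not LangGraph APIs.

```python
from typing import Callable

Node = Callable[[dict], dict]

def run_linear(nodes: list[tuple[str, Node]], state: dict) -> dict:
    """Run nodes in order, merging each node's partial update into the shared state."""
    state = dict(state)  # copy so the caller's dict is not mutated
    for _name, node in nodes:
        update = node(state)
        state.update(update)  # last writer wins, key by key
    return state

def researcher(state: dict) -> dict:
    # Reads the topic and writes notes for the next node.
    return {"notes": f"facts about {state['topic']}"}

def writer(state: dict) -> dict:
    # Reads the notes the researcher left in the shared state.
    return {"draft": f"Article based on {state['notes']}"}

initial = {"topic": "LLMs"}
final = run_linear([("researcher", researcher), ("writer", writer)], initial)
print(final)
```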

Maturity and Performance: LangGraph is the new standard for enterprise agents. It reached a stable 1.0 release in late 2025. It is built for durable, long-running tasks that can survive server crashes. Although it carries more overhead than LangChain, that is a necessary trade-off for building powerful, stateful systems.

Hands-on: A Simple "Self-Correction" Loop

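This example builds a simple graph in which a drafter node produces a draft and a refiner node improves it. It represents a simple reflective agent. Note that, as written, each node runs once, so the flow is linear; a true loop needs an edge that can route back to the drafter.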

```python
from typing import TypedDict

from langgraph.graph import StateGraph, START, END

# 1. Define the state object for the graph.
class AgentState(TypedDict):
    input: str
    feedback: str

# 2. Define the graph nodes as Python functions.
def draft_node(state: AgentState):
    print("Drafter node executing...")
    # In a real app, this would call an LLM to generate a draft.
    return {"feedback": "The draft is good, but needs more detail."}

def refine_node(state: AgentState):
    print("Refiner node executing...")
    # This node would use the feedback to improve the draft.
    return {"feedback": "Final version complete."}

# 3. Build the graph.
workflow = StateGraph(AgentState)
workflow.add_node("drafter", draft_node)
workflow.add_node("refiner", refine_node)

# 4. Define the workflow edges.
workflow.add_edge(START, "drafter")
workflow.add_edge("drafter", "refiner")
workflow.add_edge("refiner", END)

# 5. Compile the graph and run it.
app = workflow.compile()
final_state = app.invoke({"input": "Write a blog post"})
print(final_state)
```

Output:

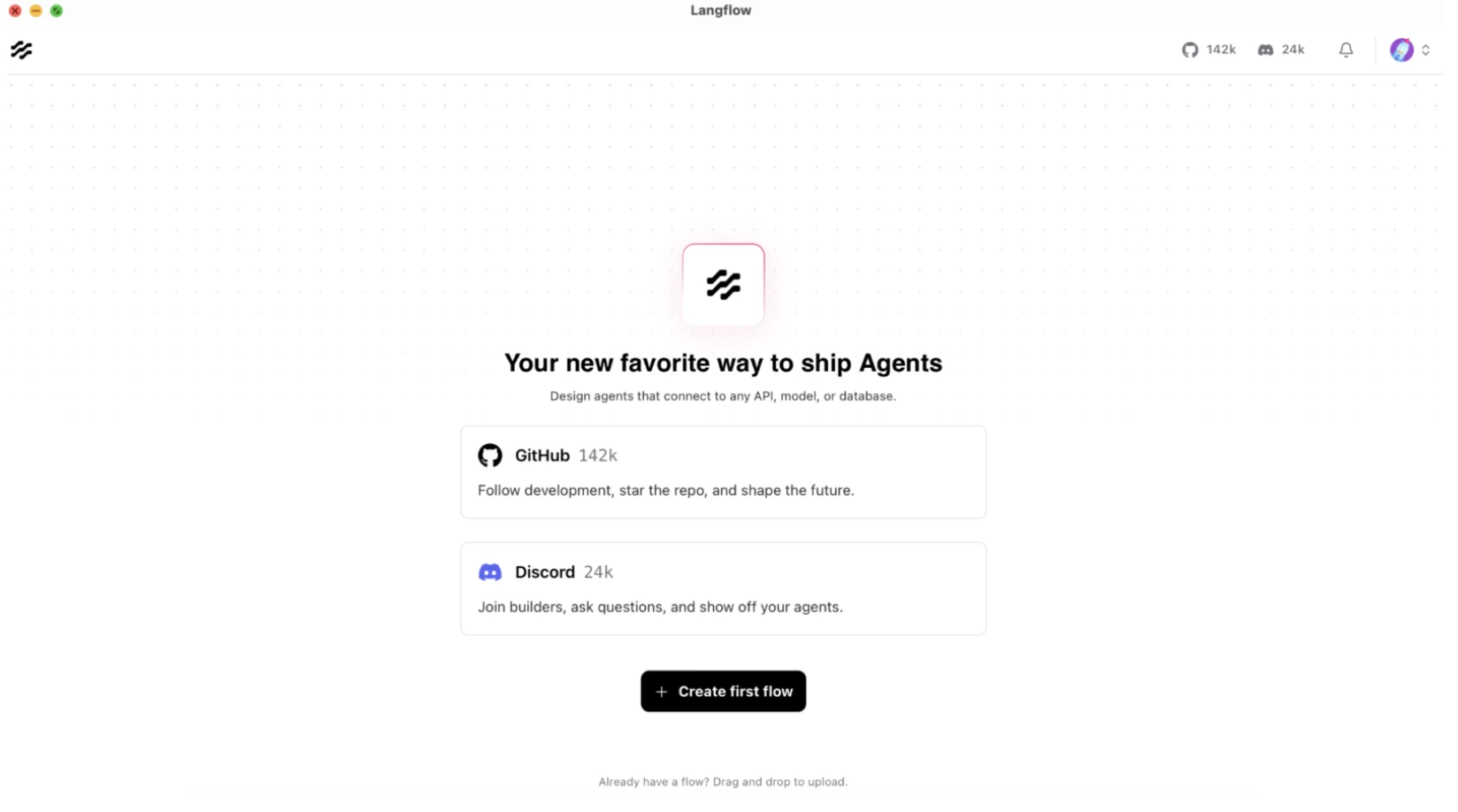
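As written, the graph above runs each node once, so it is a straight line rather than a loop. LangGraph supports real cycles through `add_conditional_edges`, where a routing function picks the next node based on the state. The plain-Python sketch below imitates that control flow. The reviewer's rule (approve from the third revision on), the node names, and `max_loops` are assumptions for illustration; the loop cap plays a role similar to LangGraph's recursion limit.

```python
def drafter(state: dict) -> dict:
    # Produce a new revision of the draft (an LLM call in a real app).
    revision = state.get("revision", 0) + 1
    return {"revision": revision, "draft": f"draft v{revision}"}

def reviewer(state: dict) -> dict:
    # Hypothetical quality check: approve from the third revision onward.
    ok = state["revision"] >= 3
    return {"feedback": "approved" if ok else "needs more detail"}

def route(state: dict) -> str:
    # Conditional edge: go back to the drafter until the reviewer approves.
    return "end" if state["feedback"] == "approved" else "drafter"

def run_loop(state: dict, max_loops: int = 10) -> dict:
    state = dict(state)
    for _ in range(max_loops):         # guard against endless cycles
        state.update(drafter(state))   # node: drafter
        state.update(reviewer(state))  # fixed edge: drafter -> reviewer
        if route(state) == "end":      # conditional edge out of reviewer
            return state
    raise RuntimeError("no approved draft within max_loops")

final = run_loop({"input": "Write a blog post"})
print(final["draft"], final["feedback"])
```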

3. LangFlow: The Visual IDE for Prototyping

LangFlow is a drag-and-drop interface for the LangChain ecosystem (read in detail here). It lets you see the data flow of your LLM app. It is ideal for non-coders, or for developers who need to build and test ideas quickly.

Best For:

- Rapid prototyping of new application concepts.

- Visualising AI ideas.

- Sharing work with non-technical team members.

Core Concept: A low-code/no-code canvas where you connect components visually.

Maturity and Performance: LangFlow is ideal for the design stage. Although flows can be deployed with Docker, high-traffic applications are usually better served by exporting the logic to pure Python code. Community interest in LangFlow is enormous, which shows how valuable it is for fast iteration.

Hands-on: Building Visually

You can test your logic without writing a single line of Python.

1. Install and Run: Open your browser and go to https://www.langflow.org/desktop. Enter your details and download the LangFlow application for your operating system. We are using a Mac here. Open the LangFlow application, and it will look like this:

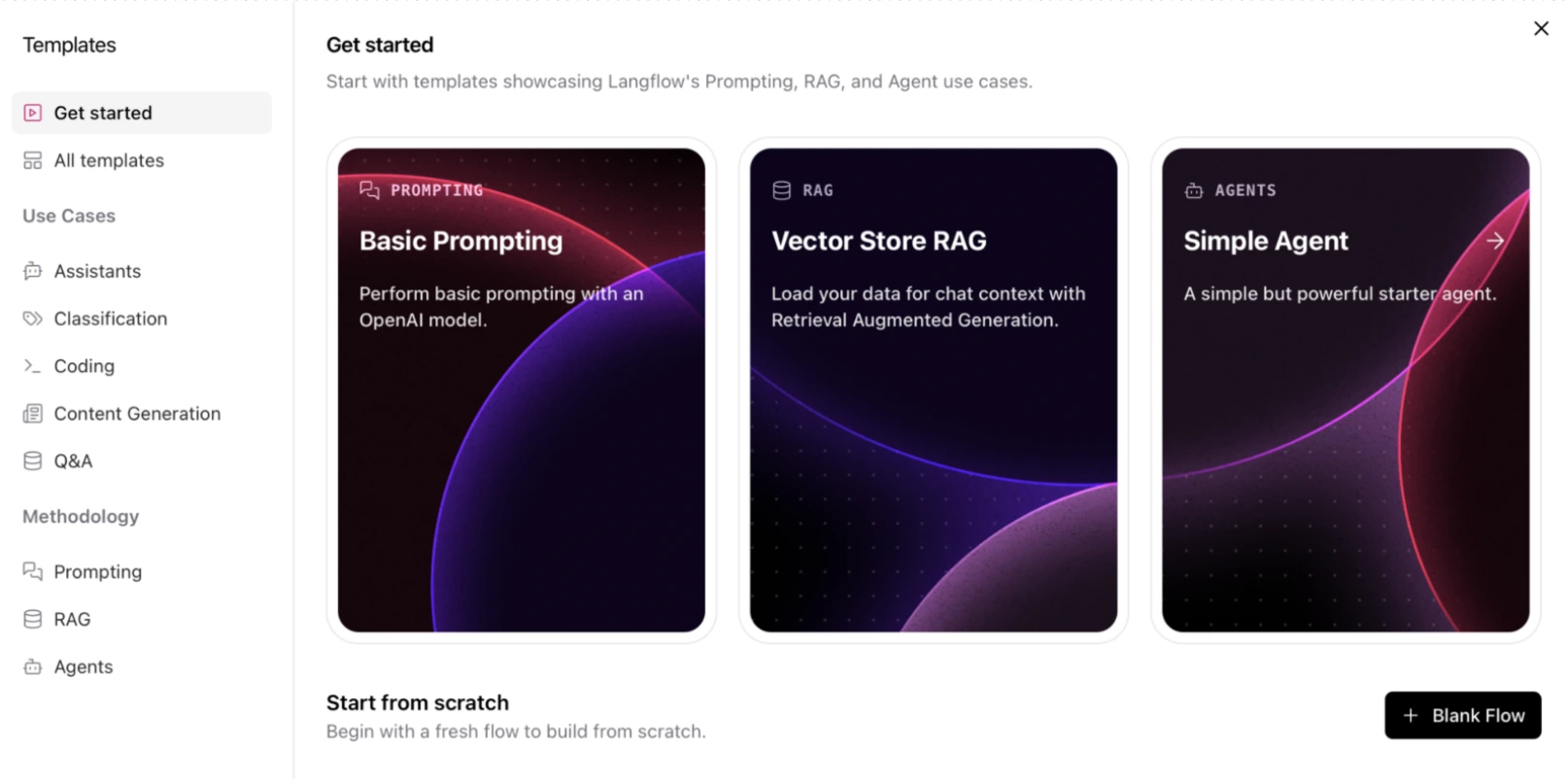

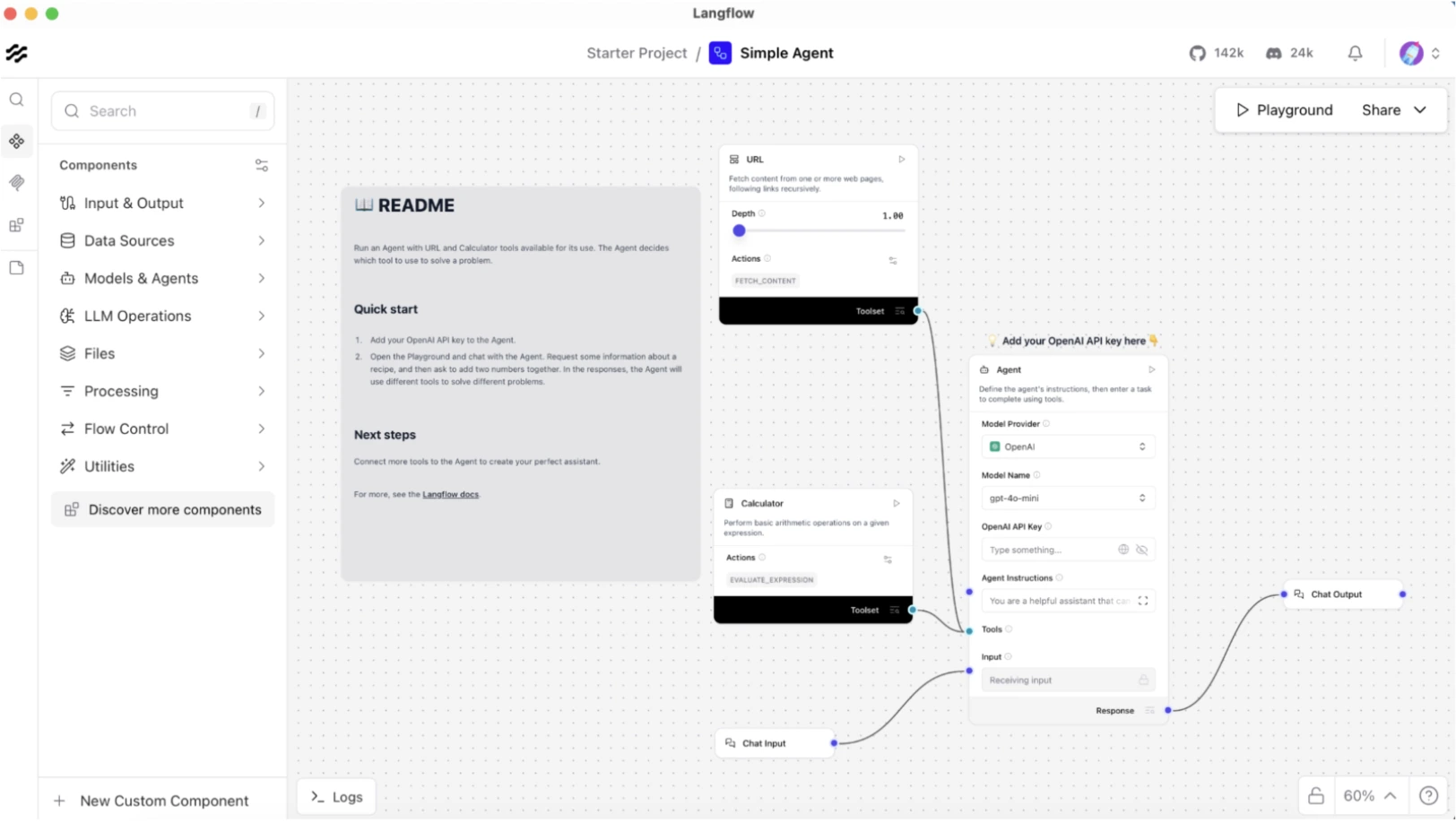

2. Select a template: For a simple run, select the "Simple Agent" option from the templates.

3. The Canvas: On the new canvas, drag an "OpenAI" component and a "Prompt" component from the side menu. Because we selected the Simple Agent template, the canvas already looks like this, with a minimal set of components.

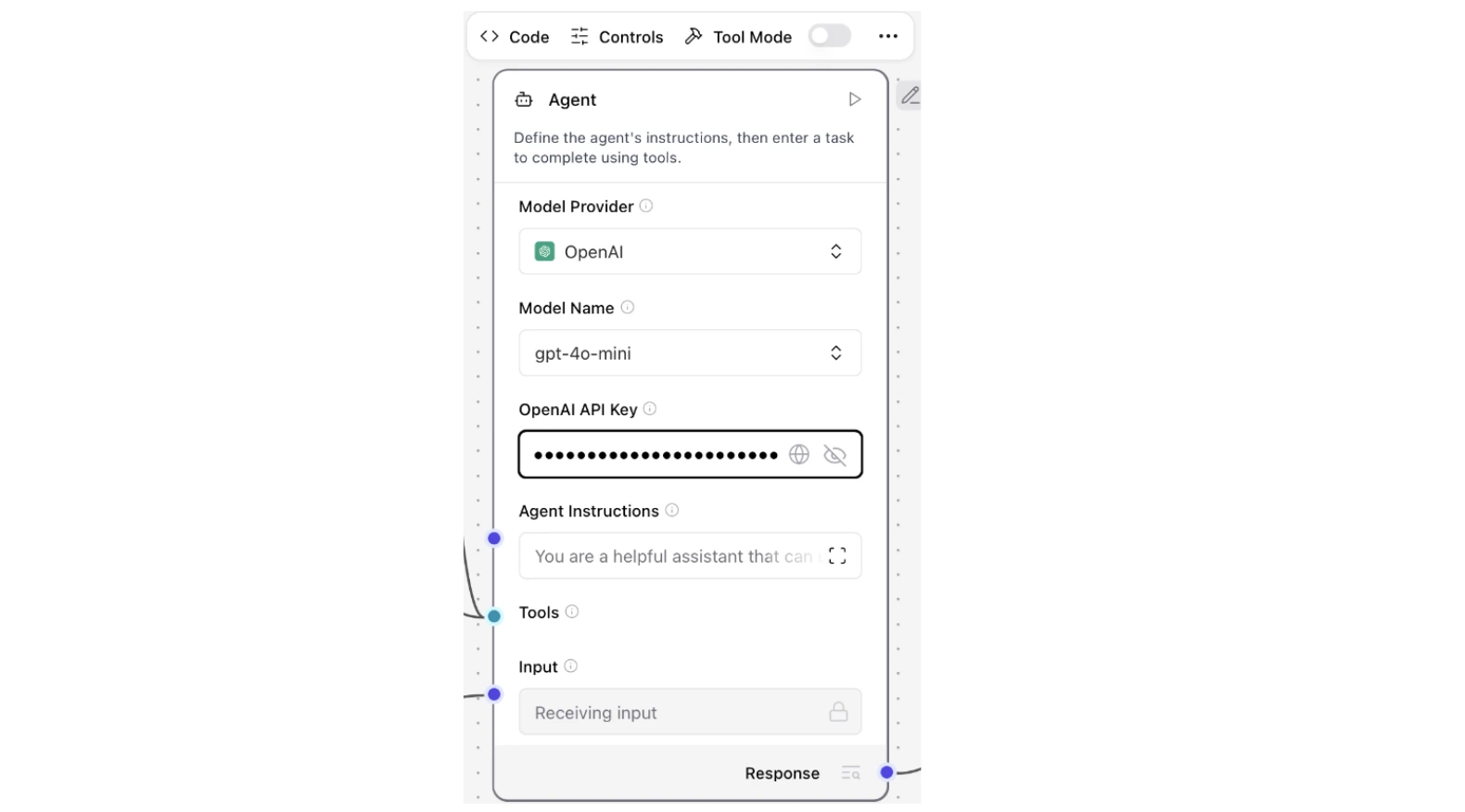

4. The API Connection: Click the OpenAI component and enter your OpenAI API key in the text field.

5. The Result: The simple agent is now ready to test. Click the "Playground" option at the top right to try out your agent.

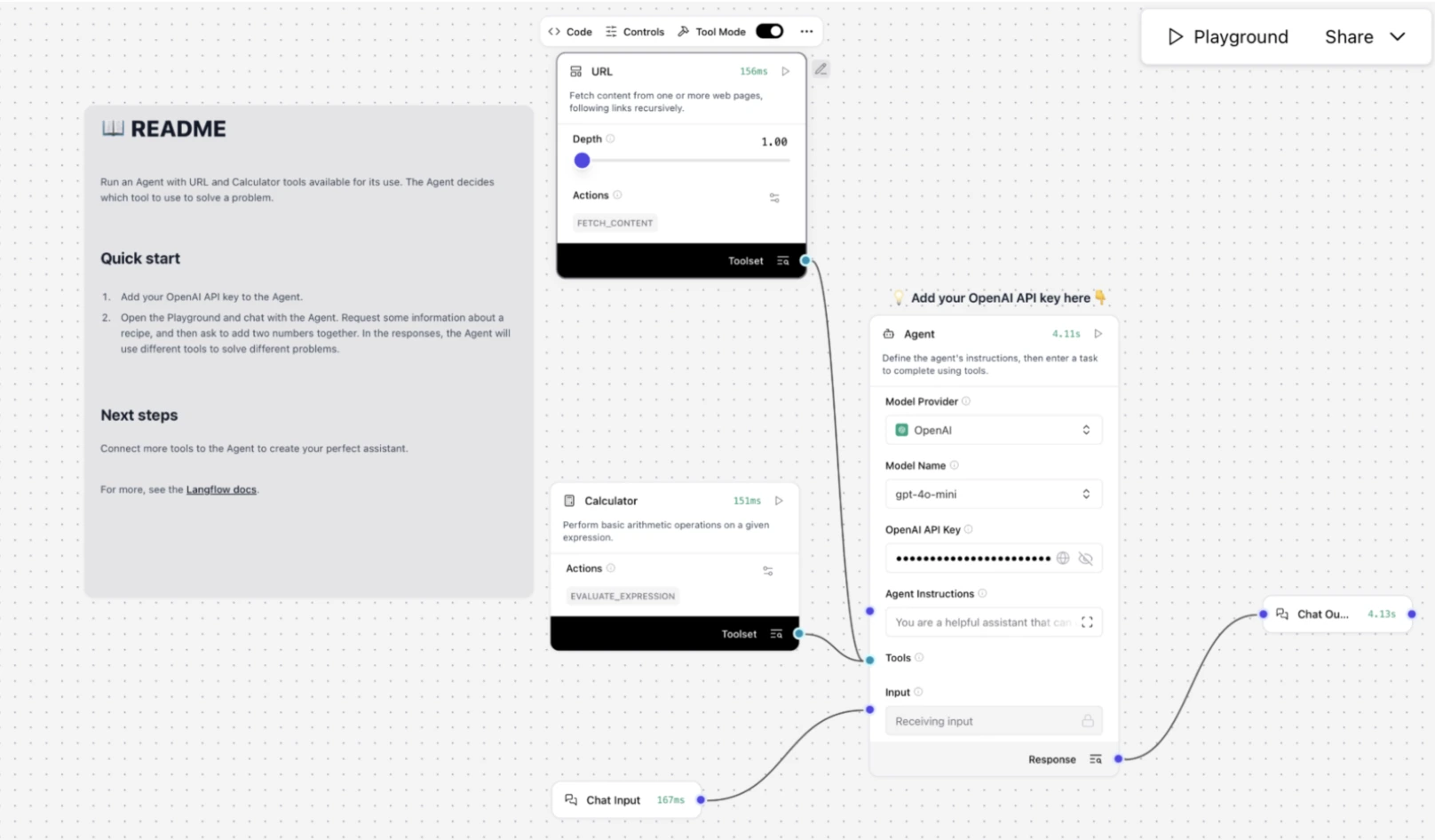

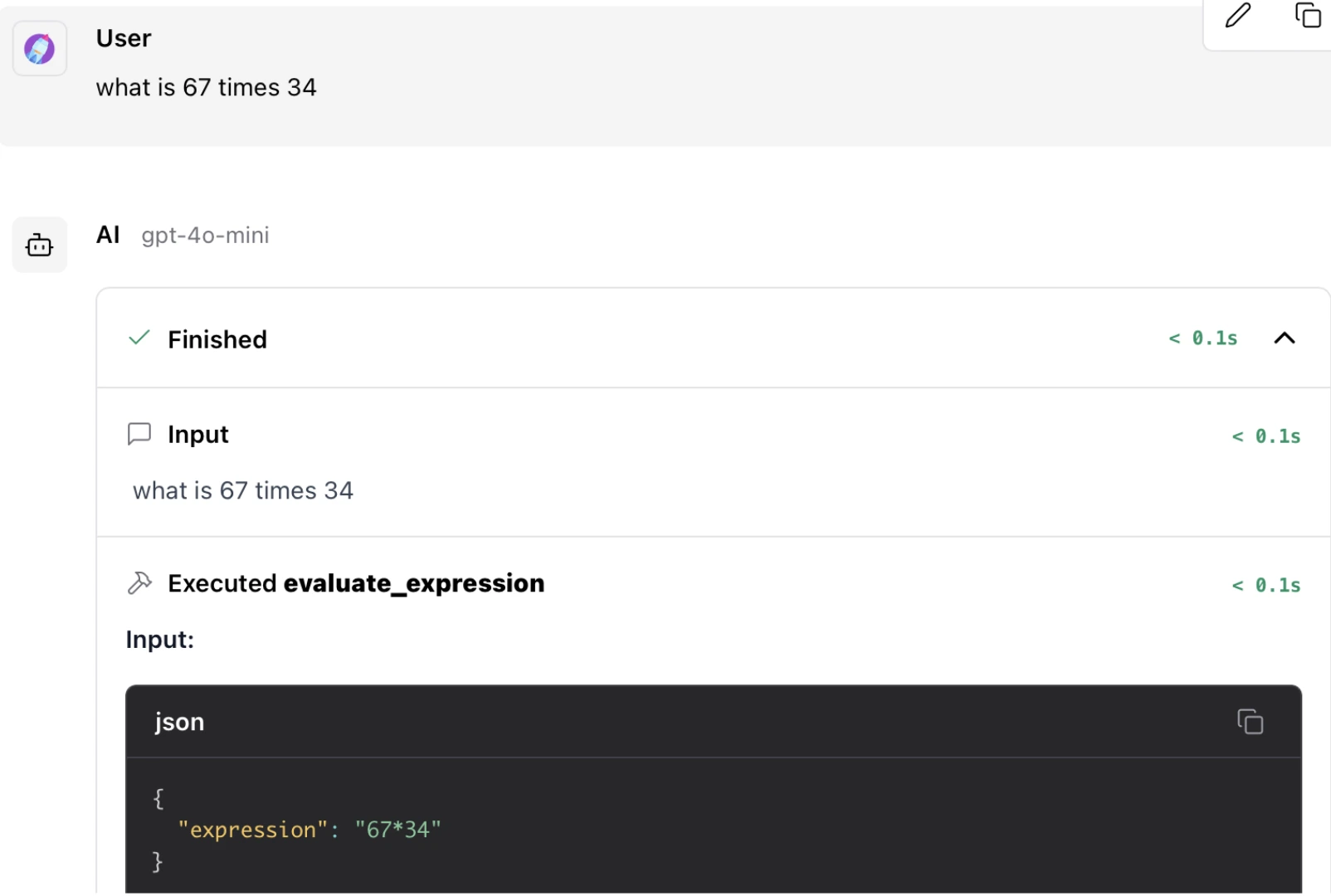

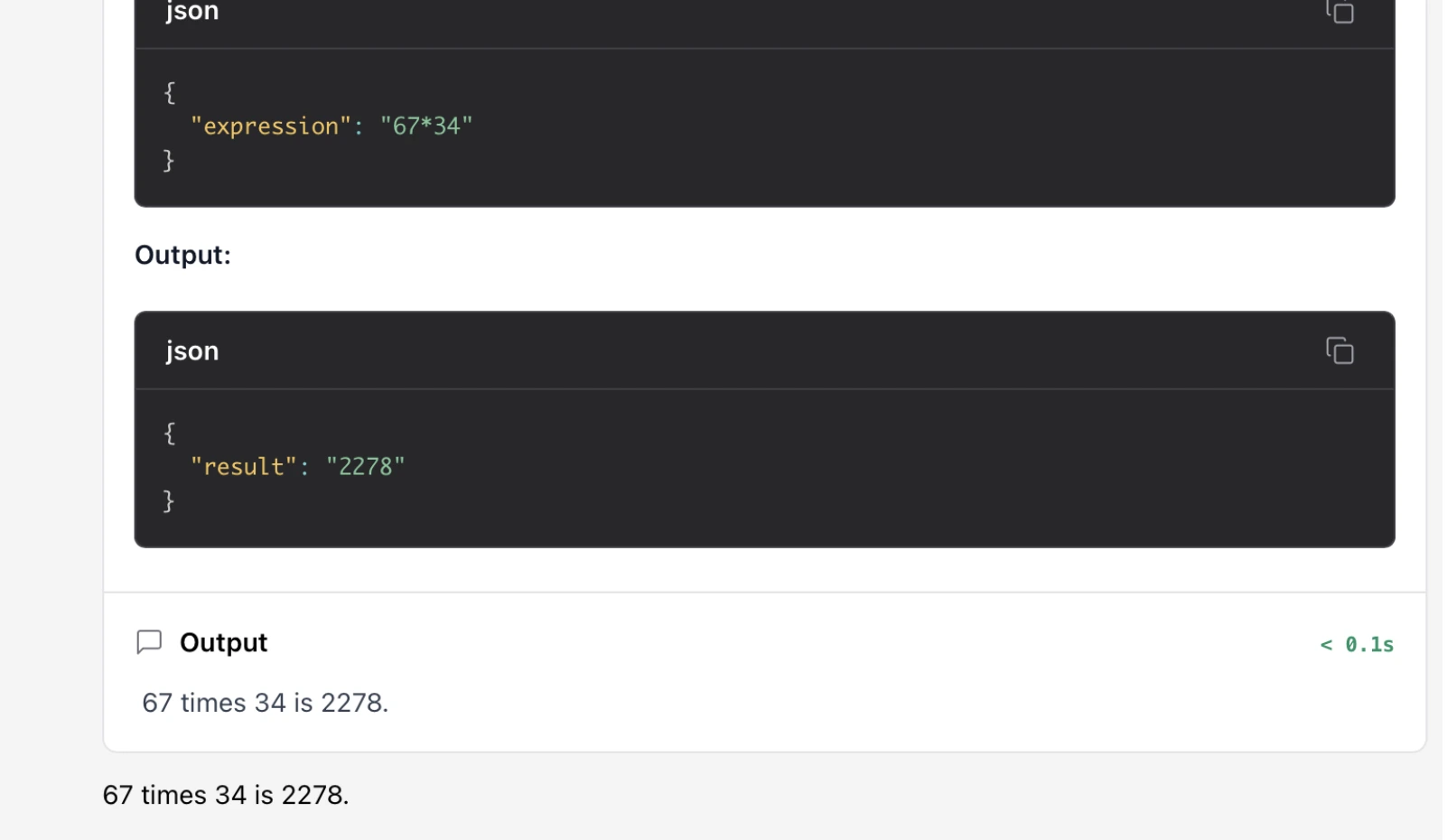

You can see that our simple agent has two built-in tools. The first is a Calculator tool, used to evaluate expressions. The second is a URL tool, used to fetch the content at a given URL.

We tested the agent with different queries and got this output:
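A calculator tool boils down to evaluating an arithmetic expression safely. The sketch below is not LangFlow's Calculator component; it is an illustrative implementation that walks Python's syntax tree with the standard `ast` module and only allows numbers and basic operators, so a string such as `__import__('os')` is rejected instead of executed.

```python
import ast
import operator

# Whitelisted operators; anything else in the expression is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}

def calculate(expression: str) -> float:
    """Evaluate +, -, *, /, ** and unary minus over numeric literals only."""
    def _eval(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"Unsupported expression: {ast.dump(node)}")

    return _eval(ast.parse(expression, mode="eval").body)

print(calculate("(12 + 8) * 3 / 4"))
```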

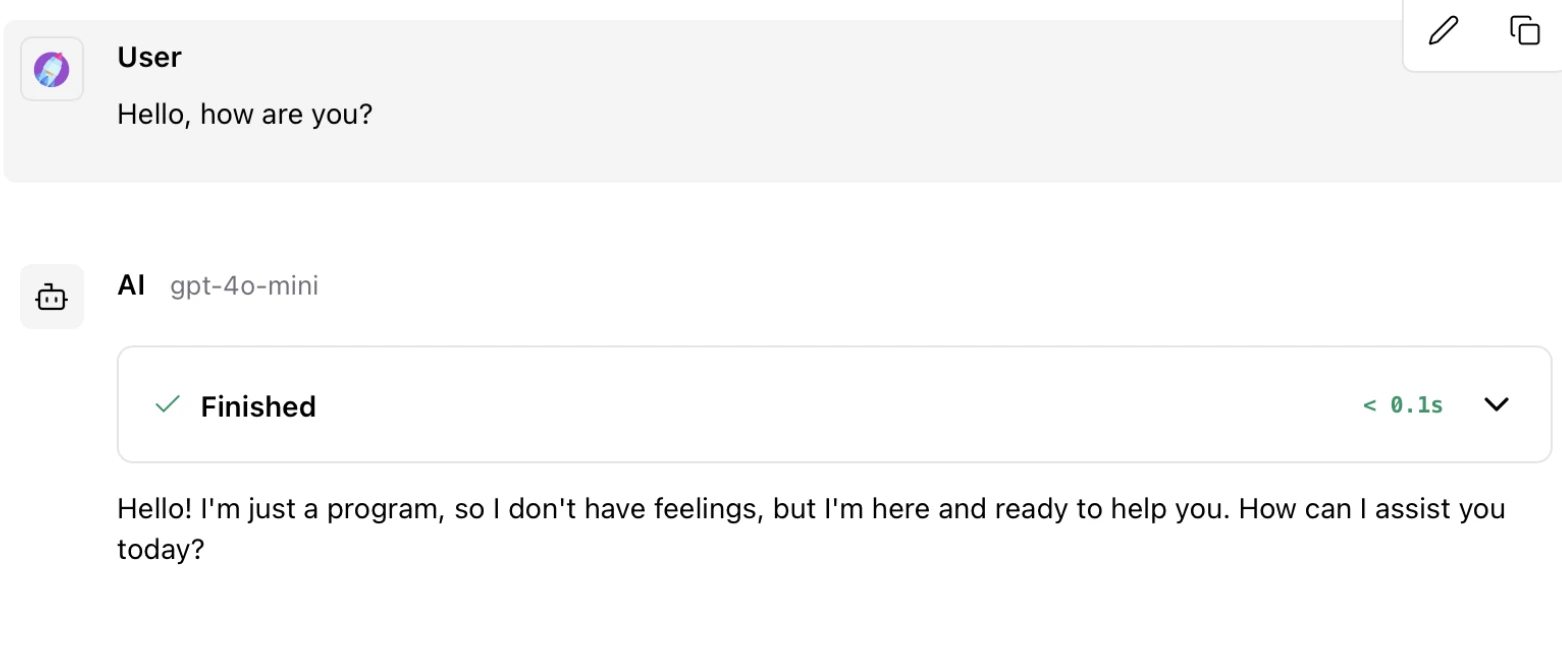


4. LangSmith: Observability and Testing Platform

LangSmith is not a coding framework; it is a platform. Once you have built an app with LangChain or LangGraph, you need LangSmith to monitor it. It shows you what happens behind the scenes, recording every token, latency spike, and error. Check out the complete LangSmith guide here.

Best For:

- Tracing complex, multi-step agents.

- Monitoring API costs and performance.

- A/B testing of different prompts or models.

Core Concept: Monitoring and benchmarking. LangSmith lists a trace for every run, showing the inputs and outputs of each step.
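To make the idea of a trace concrete, here is a plain-Python sketch of what a tracing decorator records: each call becomes a run with its inputs, output, error (if any), latency, and nested child runs. `traced`, `TRACES`, `fake_llm`, and `pipeline` are hypothetical names. LangSmith's `@traceable` sends runs to the LangSmith service and records much more; this sketch only follows a similar run-tree idea.

```python
import functools
import time

TRACES: list[dict] = []  # finished top-level runs
_stack: list[dict] = []  # runs currently in progress

def traced(fn):
    """Hypothetical decorator that records each call as a (possibly nested) run."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        run = {"name": fn.__name__, "inputs": {"args": args, "kwargs": kwargs}, "children": []}
        if _stack:
            _stack[-1]["children"].append(run)  # nest under the calling run
        _stack.append(run)
        start = time.perf_counter()
        try:
            run["output"] = fn(*args, **kwargs)
            return run["output"]
        except Exception as exc:
            run["error"] = repr(exc)
            raise
        finally:
            run["latency_s"] = time.perf_counter() - start
            _stack.pop()
            if not _stack:
                TRACES.append(run)  # a top-level run has finished
    return wrapper

@traced
def fake_llm(prompt: str) -> str:
    return prompt.upper()  # stand-in for a model call

@traced
def pipeline(user_input: str) -> str:
    return fake_llm(f"answer: {user_input}")

pipeline("hello")
print(TRACES[0]["name"], "->", TRACES[0]["children"][0]["name"])
```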

Maturity and Performance: LangSmith is meant for monitoring in production. It is a proprietary service built by the LangChain team. LangSmith supports OpenTelemetry, and tracing is designed not to slow your app down. It is key to building reliable, cost-effective AI applications.

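Hands-on: Enabling Observability

For LangChain and LangGraph code, you don't need to change anything to work with LangSmith. You just set a few environment variables; LangChain and LangGraph detect them automatically and start logging. For code that calls the OpenAI SDK directly, you add a small wrapper and a decorator, as in the example that follows.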

os.environ['OPENAI_API_KEY'] = “YOUR_OPENAI_API_KEY”

os.environ['LANGCHAIN_TRACING_V2'] = “true”

os.environ['LANGCHAIN_API_KEY'] = “YOUR_LANGSMITH_API_KEY”

os.environ['LANGCHAIN_PROJECT'] = 'demo-langsmith'Now check the tracing:

import openai

from langsmith.wrappers import wrap_openai

from langsmith import traceable

shopper = wrap_openai(openai.OpenAI())

@traceable

def example_pipeline(user_input: str) -> str:

response = shopper.chat.completions.create(

mannequin="gpt-4o-mini",

messages=[{"role": "user", "content": user_input}]

)

return response.decisions[0].message.content material

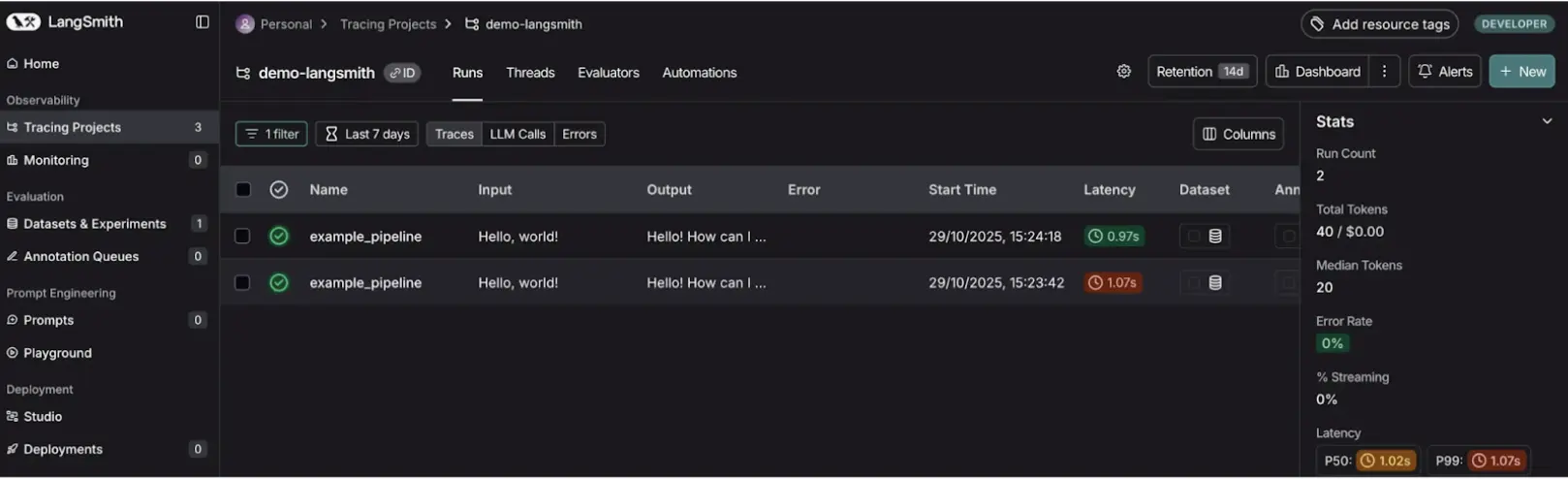

reply = example_pipeline("Howdy, world!")We encased the OpenAI shopper in wrapopenai and the decorator Tracer within the type of the operate @traceable. This may incur a hint on LangSmith every time examplepipeline is known as (and every inner LLM API name). Traces assist have a look at the historical past of prompts, mannequin outcomes, instrument invocation, and so on. That’s value its weight in gold in debugging advanced chains.

Output:

You can now see every trace in your LangSmith dashboard whenever you run your code. Each one shows a graphical "breadcrumb trail" of how the LLM arrived at its answer, which is invaluable for examining and troubleshooting agent behaviour.
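If no traces appear in the dashboard, a missing or misspelled environment variable is a common culprit. The helper below is a hypothetical pre-flight check, not part of the LangSmith SDK. It accepts either the `LANGCHAIN_*` names used above or the `LANGSMITH_*` names that newer LangSmith documentation uses; treat that second set as an assumption to confirm against the current docs.

```python
import os
from collections.abc import Mapping

def tracing_problems(env: Mapping[str, str] = os.environ) -> list[str]:
    """Hypothetical check: list reasons why tracing might not be enabled."""
    problems = []
    # Either name may carry the tracing flag; it must be the string "true".
    flag = env.get("LANGSMITH_TRACING") or env.get("LANGCHAIN_TRACING_V2") or ""
    if flag.lower() != "true":
        problems.append("tracing flag is not set to 'true'")
    if not (env.get("LANGSMITH_API_KEY") or env.get("LANGCHAIN_API_KEY")):
        problems.append("no LangSmith API key found")
    return problems

print(tracing_problems() or "tracing looks configured")
```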

LangChain vs LangGraph vs LangSmith vs LangFlow

| Feature | LangChain | LangGraph | LangFlow | LangSmith |
|---|---|---|---|---|
| Primary Purpose | Building LLM logic and chains | Advanced agent orchestration | Visual prototyping of workflows | Monitoring, testing, and debugging |
| Logic Flow | Linear execution (DAG-based) | Cyclic execution with loops | Visual canvas-based flow | Observability-focused |
| Skill Level | Developer (Python / JavaScript) | Advanced developer | Non-coder / designer-friendly | DevOps / QA / AI engineers |
| State Management | Via memory objects | Native and persistent state | Visual flow-based state | Observes and traces state |
| Cost | Free (open source) | Free (open source) | Free (open source) | Free tier / SaaS |

Now that we have seen the LangChain ecosystem at work, we can return to the question of when to use each tool.

- When you are building a simple app with a straightforward flow, start with LangChain. One of our writer agents, for example, was a plain LangChain chain.

- When you are managing multi-agent systems, which are complex workflows, use LangGraph. In our research assistant, LangGraph is what let the researcher pass its state to the writer.

- When your application grows beyond a prototype, bring in LangSmith to debug it. In our research assistant, LangSmith would be essential for observing the communication between the two agents.

- Consider LangFlow when prototyping your ideas. Before writing a single line of code, you could visualise the researcher-writer workflow in LangFlow.
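The rules of thumb above can be written down as a small function. This is a hypothetical helper for illustration only: the `ProjectNeeds` fields are assumptions, and it returns a list because real projects often combine several of these tools.

```python
from dataclasses import dataclass

@dataclass
class ProjectNeeds:
    # Hypothetical requirement flags mirroring the guidelines above.
    prototyping_visually: bool = False
    needs_loops_or_multi_agent: bool = False
    going_to_production: bool = False

def suggest_tools(needs: ProjectNeeds) -> list[str]:
    """Map project requirements to the ecosystem tools suggested in the text."""
    tools = []
    if needs.prototyping_visually:
        tools.append("LangFlow")  # sketch the workflow visually first
    # Linear flows fit LangChain; loops and multiple agents call for LangGraph.
    tools.append("LangGraph" if needs.needs_loops_or_multi_agent else "LangChain")
    if needs.going_to_production:
        tools.append("LangSmith")  # tracing and monitoring beyond the prototype
    return tools

print(suggest_tools(ProjectNeeds(needs_loops_or_multi_agent=True, going_to_production=True)))
```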

Conclusion

The LangChain ecosystem is a collection of tools that help you build complex LLM applications. LangChain provides the basic building blocks. LangGraph handles orchestration, letting you assemble elaborate systems. LangSmith is great for debugging and keeps your applications stable. And LangFlow helps you prototype quickly.

With an understanding of each tool's strengths, you can build powerful multi-agent systems that address real-world problems. The path from a rough idea to a production-ready application becomes clearer and easier to follow.

Frequently Asked Questions

A. Use LangGraph when your workflow needs loops, conditional branching, or state that must be carried across more than one step, as in multi-agent systems.

A. While that is possible, LangFlow is mostly used for prototyping. For high-performance requirements, it is better to export the flow to Python code and deploy it the conventional way.

A. No. LangSmith is optional, but it is a highly recommended tool for debugging and monitoring that you should consider once your application becomes complex.

A. LangChain, LangGraph, and LangFlow are all released under the open-source MIT License. LangSmith is a proprietary SaaS product with a free tier.

A. The biggest advantage is that it is a modular, integrated set of tools. It offers a complete toolkit covering the entire application lifecycle, from the initial idea to production monitoring.
