Large language models are powerful, but on their own they have limitations. They cannot access live data, retain long-term context from earlier conversations, or perform actions such as calling APIs or querying databases. LangChain is a framework designed to address these gaps and help developers build real-world applications with language models.

LangChain is an open-source framework that provides structured building blocks for working with LLMs. It offers standardized components such as prompts, models, chains, and tools, which reduces the need to write custom glue code around model APIs. This makes applications easier to build, maintain, and extend over time.

What Is LangChain and Why Does It Exist?

In practice, applications rarely rely on just a single prompt and a single response. They often involve multiple steps, conditional logic, and access to external data sources. You can handle all of this directly with raw LLM APIs, but doing so quickly becomes complex and error-prone.

LangChain helps address these challenges by adding structure. It lets developers define reusable prompts, abstract over model providers, organize workflows, and safely integrate external systems. LangChain does not replace language models. Instead, it sits on top of them and provides coordination and consistency.

Installation and Setup of LangChain

To use LangChain, you only need to install the core library plus any provider-specific integrations you intend to use.

Step 1: Install the LangChain Core Package

```bash
pip install -U langchain
```

If you plan to use OpenAI models, install the OpenAI integration as well:

```bash
pip install -U langchain-openai openai
```

LangChain requires Python 3.10 or above.

Step 2: Set API Keys

If you are using OpenAI models, set your API key as an environment variable:

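```bash
export OPENAI_API_KEY="your-openai-key"
```

Or set it inside Python:

```python
import os

# Set the key for the current process only; avoid committing real keys to source control
os.environ["OPENAI_API_KEY"] = "your-openai-key"
```

`ChatOpenAI` reads this key from the environment automatically when you create a model instance.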
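If you would rather not hard-code the key in a source file, a common pattern is to prompt for it only when the environment variable is missing. The helper below is a minimal sketch of that pattern and uses only the standard library. The name `ensure_api_key` is our own and is not part of LangChain.

```python
import getpass
import os


def ensure_api_key(env_var: str = "OPENAI_API_KEY", prompt=getpass.getpass) -> str:
    """Return the value of env_var, asking for it (without echo) if it is unset.

    `ensure_api_key` is a hypothetical helper, not a LangChain function.
    """
    key = os.environ.get(env_var)
    if not key:
        # getpass does not echo input, so the key never appears on screen
        key = prompt(f"Enter {env_var}: ")
        os.environ[env_var] = key
    return key
```

Call it once at startup, before creating any model objects, so that later code can rely on the environment variable being set.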

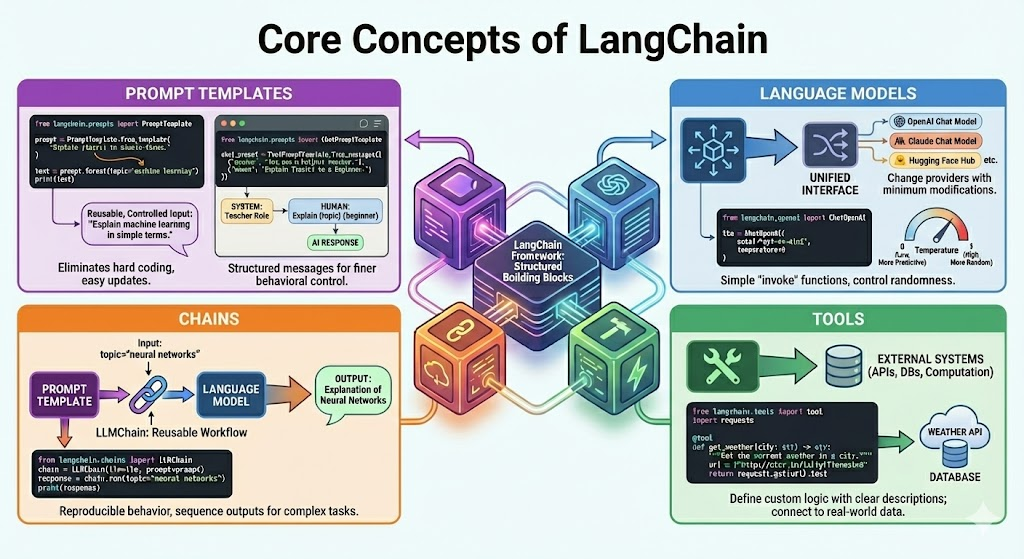

Core Concepts of LangChain

LangChain applications rely on a small set of core components. Each component serves a specific purpose, and developers can combine them to build more complex systems.

The core building blocks are:

- Prompts

- Models

- Chains

- Tools

- Agents

- Memory

- Retrieval

Understanding these concepts matters more than memorizing specific APIs.

Working with Prompt Templates in LangChain

A prompt is the input fed to a language model. In practice, a prompt can contain variables, examples, formatting rules, and constraints. Prompt templates make these prompts reusable and easier to adjust.

Example:

```python
from langchain_core.prompts import PromptTemplate

# {topic} is a placeholder that is filled in when the template is formatted
prompt = PromptTemplate.from_template(
    "Explain {topic} in simple terms."
)

text = prompt.format(topic="machine learning")
print(text)  # Explain machine learning in simple terms.
```

Prompt templates remove hard-coded strings and reduce the bugs that come from formatting strings by hand. They also make prompts easy to update as your application grows.
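The following toy re-implementation makes the mechanism concrete. It does what a string prompt template does: it finds the `{placeholders}`, fills them in, and fails loudly if one is missing. It illustrates the idea and is not LangChain's actual `PromptTemplate` code. The class name `SimplePromptTemplate` is hypothetical.

```python
from string import Formatter


class SimplePromptTemplate:
    """Hypothetical toy template: discovers {placeholders} and fills them on demand."""

    def __init__(self, template: str):
        self.template = template
        # Formatter().parse yields (literal_text, field_name, format_spec, conversion);
        # field_name is None for the trailing literal text, so filter it out
        self.input_variables = sorted(
            {field for _, field, _, _ in Formatter().parse(template) if field}
        )

    def format(self, **kwargs: str) -> str:
        missing = [name for name in self.input_variables if name not in kwargs]
        if missing:
            raise KeyError(f"Missing template variables: {missing}")
        return self.template.format(**kwargs)


template = SimplePromptTemplate("Explain {topic} in simple terms.")
print(template.input_variables)
print(template.format(topic="machine learning"))
```

Because the template exposes `input_variables`, calling code can check which values a prompt needs before the prompt ever reaches a model.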

Chat Prompt Templates

Chat-based models work with structured messages rather than a single block of text. These messages usually carry system, human, and AI roles. LangChain uses chat prompt templates to define this structure clearly.

Example:

```python
from langchain_core.prompts import ChatPromptTemplate

chat_prompt = ChatPromptTemplate.from_messages([
    ("system", "You are a helpful teacher."),
    ("human", "Explain {topic} to a beginner."),
])

# Fill the variables to get a list of message objects (system, then human)
messages = chat_prompt.format_messages(topic="recursion")
```

This structure gives you finer control over model behavior and instruction priority.
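Conceptually, a chat prompt template is a list of role/template pairs. Once the variables are filled in, it becomes a list of messages. The sketch below shows that transformation in plain Python and produces the role/content dictionaries that OpenAI-style chat APIs accept. The function name and the `human`→`user` / `ai`→`assistant` role mapping are our own illustration, not LangChain internals.

```python
def format_chat_messages(templates, **variables):
    """Fill (role, template) pairs and return role/content dictionaries.

    Hypothetical helper: maps the tuple roles used with ChatPromptTemplate
    onto the role names used by OpenAI-style chat APIs.
    """
    role_map = {"system": "system", "human": "user", "ai": "assistant"}
    return [
        {"role": role_map[role], "content": template.format(**variables)}
        for role, template in templates
    ]


messages = format_chat_messages(
    [
        ("system", "You are a helpful teacher."),
        ("human", "Explain {topic} to a beginner."),
    ],
    topic="recursion",
)
print(messages)
```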

Using Language Models with LangChain

LangChain provides a unified interface over different language model APIs, so you can switch models or providers with minimal changes.

Using an OpenAI chat model:

```python
from langchain_openai import ChatOpenAI

llm = ChatOpenAI(
    model="gpt-4o-mini",
    temperature=0,  # low temperature -> more predictable output
)

# invoke sends the request and returns an AI message object
reply = llm.invoke("Explain machine learning in one sentence.")
print(reply.content)
```

The temperature parameter controls randomness in model outputs. Lower values produce more predictable results, which works well for tutorials and production systems. LangChain model objects also provide simple methods such as `invoke`, so you do not need to make low-level API calls.
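Why does a lower temperature make outputs more predictable? In the standard formulation, the model's scores (logits) for candidate next tokens are divided by the temperature T before a softmax turns them into probabilities: p_i = exp(z_i / T) / sum_j exp(z_j / T). The sketch below uses made-up logits for three tokens to show the effect. It illustrates the general sampling idea only. Individual providers may implement temperature, and especially `temperature=0`, somewhat differently.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature == 0:
        # Limit as T -> 0: all probability mass goes to the highest-scoring token
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (0, 0.5, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

As T shrinks, the top token's probability rises toward 1. As T grows, the distribution flattens, so sampling picks less likely tokens more often.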

Chains in LangChain Explained

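Chains are the simplest execution unit in LangChain. A chain connects inputs to outputs through one or more steps. The classic example is `LLMChain`, which combines a prompt template and a language model into a reusable workflow. `LLMChain` is now deprecated. Current LangChain versions express the same chain by piping the prompt into the model with the `|` operator of the LangChain Expression Language (LCEL).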

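Example:

```python
from langchain_core.output_parsers import StrOutputParser

# Reuses `prompt` and `llm` from the previous sections.
# Equivalent of the legacy LLMChain(llm=llm, prompt=prompt): the formatted
# prompt feeds the model, and the parser turns the reply message into a string.
chain = prompt | llm | StrOutputParser()

response = chain.invoke({"topic": "neural networks"})
print(response)
```

Use chains when you want reproducible behavior with a known sequence of steps. As the application grows, you can combine several chains so that one chain's output feeds directly into the next.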
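The idea behind composing chains is plain function composition: each step's output becomes the next step's input. The toy `Step` class below mimics the `|` style of the LangChain Expression Language. It uses hypothetical stand-ins for the prompt, model, and parser, so it runs without an API key. It sketches the concept and is not LangChain's `Runnable` implementation.

```python
class Step:
    """Hypothetical wrapper that lets plain functions be chained with |."""

    def __init__(self, fn):
        self.fn = fn

    def __or__(self, other):
        # The composed step feeds this step's output into the next step
        return Step(lambda value: other.fn(self.fn(value)))

    def invoke(self, value):
        return self.fn(value)


# Hypothetical stand-ins for a prompt template, a model, and an output parser
format_prompt = Step(lambda inputs: f"Explain {inputs['topic']} in simple terms.")
fake_model = Step(lambda text: f"  (model answer to: {text})\n")
strip_output = Step(str.strip)

chain = format_prompt | fake_model | strip_output
print(chain.invoke({"topic": "neural networks"}))
```

Because every step has the same `invoke` interface, you can swap one step (for example, a different model) without touching the others. That is the main payoff of the pipe style.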

Tools in LangChain and API Integration

Language models cannot act on their own. Tools let them interact with external systems such as APIs, databases, or computation services. Any Python function can become a tool, provided it has a well-defined input and output.

Example of a simple weather tool:

```python
import requests
from langchain.tools import tool


@tool
def get_weather(city: str) -> str:
    """Get the current weather in a city."""
    # wttr.in's format=3 option returns a short one-line weather summary
    response = requests.get(
        f"https://wttr.in/{city}", params={"format": "3"}, timeout=10
    )
    response.raise_for_status()  # surface HTTP errors instead of returning an error page
    return response.text
```

The tool's name and description are essential, because the model reads them to decide what the tool does and when to use it. With the `@tool` decorator, the function name becomes the tool name and the docstring becomes its description. LangChain also ships many built-in tools, but custom tools are common because they usually contain application-specific logic.
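To see why the name and description matter, consider what a decorator can extract from an ordinary function. The sketch below uses Python's `inspect` module to build the kind of metadata a model is shown when it chooses tools. LangChain's `@tool` produces a richer JSON schema, so treat this as an approximation of the idea. `describe_tool` is a hypothetical helper, and the `get_weather` stub makes no network call.

```python
import inspect


def describe_tool(fn):
    """Hypothetical helper: build name/description/argument metadata for a function."""
    signature = inspect.signature(fn)
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        # Map each parameter to the name of its annotated type
        "args": {
            name: param.annotation.__name__
            for name, param in signature.parameters.items()
        },
    }


def get_weather(city: str) -> str:
    """Get the current weather in a city."""
    return f"Sunny in {city}"  # placeholder result; no network call in this sketch


print(describe_tool(get_weather))
```

A vague docstring such as "Does stuff" would reach the model just as literally, which is why descriptive names and docstrings improve tool selection.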

Agents in LangChain and Dynamic Decision-Making

Chains work well when you know the order of tasks in advance. Many real-world problems, however, are open-ended. In these cases, the system must decide the next action based on the user's question, intermediate results, or the available tools. This is where agents become useful.

An agent uses a language model as its reasoning engine. Instead of following a fixed path, the agent decides which action to take at each step. Actions can include calling a tool, gathering more information, or producing a final answer.

Agents follow a reasoning cycle often called Reason and Act (ReAct). The model reasons about the problem, takes an action, observes the outcome, and then reasons again until it reaches a final response.
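Without the prompts and parsing, the Reason-and-Act cycle is a loop: ask the model what to do, run the chosen tool, record the observation, and repeat until the model answers. The sketch below replaces the model with a hypothetical scripted function so the control flow is visible. Real agents get these decisions from LLM output. The `max_steps` cap is our own assumption, added to guard against endless loops.

```python
def run_react_loop(decide, tools, question, max_steps=5):
    """Minimal Reason-and-Act loop.

    `decide` plays the role of the language model: given the question and the
    observations so far, it returns ("tool", name, argument) or ("final", answer).
    """
    observations = []
    for _ in range(max_steps):
        decision = decide(question, observations)   # reason
        if decision[0] == "final":
            return decision[1]
        _, tool_name, argument = decision
        result = tools[tool_name](argument)          # act
        observations.append((tool_name, result))     # observe, then reason again
    return "Stopped: step limit reached without a final answer."


def scripted_model(question, observations):
    # Hypothetical stand-in for an LLM: call the weather tool once, then answer
    if not observations:
        return ("tool", "get_weather", "London")
    return ("final", f"According to the weather tool: {observations[-1][1]}")


tools = {"get_weather": lambda city: f"{city}: light rain, 12 degrees C"}
print(run_react_loop(scripted_model, tools, "What's the weather in London?"))
```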


Creating Your First LangChain Agent

LangChain offers a high-level agent implementation, so you do not have to write the reasoning loop yourself.

Example:

```python
from langchain_openai import ChatOpenAI
from langchain.agents import create_agent

model = ChatOpenAI(
    model="gpt-4o-mini",
    temperature=0,
)

agent = create_agent(
    model=model,
    tools=[get_weather],  # the tool defined in the previous section
    system_prompt="You are a helpful assistant that can use tools when needed.",
)

# Agents built with create_agent take a list of chat messages as input
response = agent.invoke(
    {"messages": [{"role": "user", "content": "What's the weather in London right now?"}]}
)

# The returned state holds the whole conversation; the last message is the answer
print(response["messages"][-1].content)
```

The agent reads the question, recognizes that it needs real-time data, calls the weather tool, reads the result, and then produces a natural-language answer. LangChain's agent framework handles all of these steps automatically.

Memory and Conversational Context

Language models are stateless by default, so they forget past interactions. Memory lets LangChain applications carry context across multiple turns. Chatbots, assistants, and any other system where users ask follow-up questions need memory.

A basic memory implementation is a conversation buffer, which stores past messages.

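Example:

```python
# Legacy memory API. In LangChain 1.x these classes live in the separate
# langchain-classic package (pip install langchain-classic); in 0.x, import
# them from langchain.memory and langchain.chains instead.
from langchain_classic.chains import LLMChain
from langchain_classic.memory import ConversationBufferMemory
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

# The prompt needs a placeholder named after memory_key, or the history is never used
memory_prompt = ChatPromptTemplate.from_messages([
    ("system", "You are a helpful teacher."),
    MessagesPlaceholder(variable_name="chat_history"),
    ("human", "{question}"),
])

memory = ConversationBufferMemory(
    memory_key="chat_history",
    return_messages=True,  # store history as message objects, not one string
)

chat_chain = LLMChain(
    llm=llm,
    prompt=memory_prompt,
    memory=memory,
)

chat_chain.invoke({"question": "Explain recursion to a beginner."})
chat_chain.invoke({"question": "Can you give a code example?"})  # sees the first turn
```

Whenever you run the chain, the memory object injects the stored conversation history into the `chat_history` placeholder and then saves the latest exchange. `ConversationBufferMemory` is a legacy API. In LangChain 1.x, agents built with `create_agent` keep conversation state through LangGraph checkpointers instead.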
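Under the hood, a conversation buffer does two things on every turn. It builds the model input from the stored history plus the new message, and afterward it appends the new exchange. The class below is a plain-Python sketch of that cycle using OpenAI-style role/content messages. The class and method names are hypothetical. The sketch also shows why a buffer's cost grows with every turn.

```python
class ConversationBuffer:
    """Hypothetical buffer: stores every message and prepends history to each request."""

    def __init__(self, system_prompt):
        self.system_prompt = system_prompt
        self.history = []  # alternating user/assistant messages

    def build_messages(self, user_input):
        # Full context sent to the model: system prompt, past turns, new question
        return (
            [{"role": "system", "content": self.system_prompt}]
            + self.history
            + [{"role": "user", "content": user_input}]
        )

    def save_turn(self, user_input, reply):
        # Called after the model answers, so the next request includes this turn
        self.history.append({"role": "user", "content": user_input})
        self.history.append({"role": "assistant", "content": reply})


memory = ConversationBuffer("You are a helpful teacher.")
memory.save_turn("Explain recursion to a beginner.", "Recursion is when a function calls itself.")
messages = memory.build_messages("Can you give an example?")
print(len(messages))  # system prompt + 2 stored messages + new question
```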

LangChain offers several memory strategies, including sliding windows that limit context size, summarized memory for long conversations, and long-term memory with vector-based recall. Choose a strategy based on your context-length limits and cost constraints.
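A sliding window is the simplest way to cap context size: keep only the most recent exchanges and drop the rest. The function below sketches that strategy on OpenAI-style role/content messages. It assumes the messages strictly alternate between user and assistant. It is an illustration and not LangChain's own windowing code.

```python
def sliding_window(history, max_turns):
    """Keep only the last `max_turns` user/assistant exchanges.

    Assumes `history` alternates user and assistant messages (one turn = 2 messages).
    """
    if max_turns <= 0:
        return []  # guard: history[-0:] would otherwise return everything
    return history[-2 * max_turns:]


history = []
for i in range(1, 5):
    history.append({"role": "user", "content": f"question {i}"})
    history.append({"role": "assistant", "content": f"answer {i}"})

window = sliding_window(history, max_turns=2)
print([m["content"] for m in window])
```

The trade-off is that anything outside the window is forgotten completely. Summarized memory exists to soften that loss.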

Retrieval and External Data

Language models are trained on general data rather than domain-specific information. Retrieval-Augmented Generation (RAG) addresses this by injecting relevant external data into the prompt at runtime.

LangChain supports the full retrieval pipeline:

- Loading documents from PDFs, web pages, and databases

- Splitting documents into manageable chunks

- Creating embeddings for each chunk

- Storing embeddings in a vector database

- Retrieving the most relevant chunks for a query
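Splitting is the step most often tuned by hand. A common approach is fixed-size chunks with some overlap, so that text straddling a boundary is not lost. The function below is a character-based sketch of that idea, and its default sizes are arbitrary assumptions. LangChain's text splitters, such as the recursive character splitter, also try to break on paragraph and sentence boundaries.

```python
def split_into_chunks(text, chunk_size=200, overlap=50):
    """Split text into fixed-size character chunks where neighbours overlap.

    The overlap keeps text that straddles a boundary visible in both chunks.
    """
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
        start += chunk_size - overlap
    return chunks


print(split_into_chunks("abcdefghij", chunk_size=4, overlap=2))
```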

A typical retrieval workflow looks like this:

- Load and preprocess documents

- Split them into chunks

- Embed and store them

- Retrieve relevant chunks based on the user query

- Pass the retrieved content to the model as context
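The workflow can be sketched end to end in a few lines. To keep it self-contained, the example below makes two substitutions: a bag-of-words counter stands in for a real embedding model, and a Python list stands in for a vector database. It ranks chunks by cosine similarity and places the best match into the prompt. Both substitutions are simplifications for illustration. Production pipelines use learned embeddings and a proper vector store.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy 'embedding': a bag-of-words count vector (real systems use a neural model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a)  # Counter returns 0 for missing words
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, chunks, k=2):
    """Return the k chunks most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    return ranked[:k]


chunks = [  # hypothetical pre-split document chunks
    "Refunds are issued within 14 days of a return request.",
    "Our office is closed on public holidays.",
    "Shipping is free for orders over 50 euros.",
]
question = "How many days until refunds are issued?"
context = "\n".join(retrieve(question, chunks, k=1))
prompt = f"Answer using only this context:\n{context}\n\nQuestion: {question}"
print(prompt)
```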


Output Parsing and Structured Responses

Language models produce text, yet applications often need structured data such as lists, dictionaries, or validated JSON. Output parsers help turn free-form text into reliable data structures.

Here is a basic example based on the comma-separated list parser:

```python
from langchain_core.output_parsers import CommaSeparatedListOutputParser

parser = CommaSeparatedListOutputParser()

# Instructions to append to a prompt so the model answers as a comma-separated list
print(parser.get_format_instructions())

# Turn raw model text into a Python list
print(parser.parse("red, green, blue"))
```

More complex use cases can be handled with typed models and structured output parsers. These parsers instruct the model to respond in a predefined JSON format and validate the response before it is passed downstream.

Structured output parsing is especially useful when model outputs are consumed by other systems or stored in databases.
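A structured output parser adds a validation step between the model and the rest of your system. The sketch below shows that step with the standard library only. It parses the text as JSON, checks it against a dataclass schema, and raises an error instead of passing bad data downstream. The `MovieReview` schema and `parse_review` function are hypothetical. LangChain's own structured-output tooling typically uses Pydantic models for this job.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class MovieReview:
    # Hypothetical target schema for the model's answer
    title: str
    rating: int
    summary: str


def parse_review(raw: str) -> MovieReview:
    """Parse model output as JSON and check it against the MovieReview fields."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on malformed output
    expected = {f.name: f.type for f in fields(MovieReview)}
    if set(data) != set(expected):
        raise ValueError(f"Expected keys {sorted(expected)}, got {sorted(data)}")
    for name, typ in expected.items():
        if not isinstance(data[name], typ):
            raise ValueError(f"Field {name!r} should be {typ.__name__}")
    return MovieReview(**data)


review = parse_review('{"title": "Up", "rating": 5, "summary": "Moving and funny."}')
print(review)
```

Failing fast here means a malformed answer can be retried or logged rather than written into a database.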

Production Considerations

When you move from experimentation to production, you need to think beyond the core chain or agent logic.

LangChain provides production-ready tooling to support this transition. With LangServe, you can expose chains and agents as stable APIs and integrate them easily with web, mobile, or backend services. This approach lets your application scale without tightly coupling business logic to model code.

LangSmith supports logging, tracing, evaluation, and monitoring in production environments. It gives visibility into execution flow, tool usage, latency, and failures. That visibility makes it easier to debug issues, track performance over time, and keep model behavior consistent as inputs and traffic change.

Together, these tools help reduce deployment risk by improving observability, reliability, and maintainability, bridging the gap between prototyping and production use.
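At its core, tracing means recording what ran, how long it took, and whether it failed. The decorator below is a standard-library sketch of that idea that writes to an in-memory list. It is not LangSmith's client API, which sends richer traces (inputs, outputs, nested runs) to a hosted service. `traced`, `TRACE_LOG`, and `summarize` are hypothetical names.

```python
import functools
import time

TRACE_LOG = []  # in-memory stand-in for a tracing backend


def traced(fn):
    """Record name, duration, and outcome of each call, like a minimal trace span."""

    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        status = "ok"
        try:
            return fn(*args, **kwargs)
        except Exception:
            status = "error"
            raise  # tracing should observe failures, not swallow them
        finally:
            TRACE_LOG.append({
                "name": fn.__name__,
                "status": status,
                "seconds": round(time.perf_counter() - start, 4),
            })

    return wrapper


@traced
def summarize(text):
    # Hypothetical chain step; a real one would call a model
    return text[:20]


summarize("LangSmith-style traces record every step.")
print(TRACE_LOG)
```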

Common Use Cases

- Chatbots and conversational assistants that need memory, tools, or multi-step logic.

- Question answering over documents using retrieval and external data.

- Customer support automation backed by knowledge bases and internal systems.

- Research and analysis agents that gather and summarize information.

- Orchestrating workflows across multiple tools, APIs, and services.

- Automating or assisting business processes through internal enterprise tools.

This flexibility makes LangChain suitable for both simple prototypes and complex production systems.

Conclusion

LangChain provides a convenient, simplified framework for building real-world apps with large language models. It makes development more dependable than working with raw LLM APIs by offering abstractions for prompts, models, chains, tools, agents, memory, and retrieval. Beginners can start with simple chains, while advanced users can build dynamic agents and production systems. LangChain bridges the gap between experimentation and deployment with built-in support for observability, deployment, and scaling. As LLM usage grows, LangChain offers solid infrastructure for building long-lived, flexible, and reliable AI-driven systems.

Frequently Asked Questions

A. Developers use LangChain to build AI applications that go beyond single prompts. It helps combine prompts, models, tools, memory, agents, and external data so that language models can reason, take actions, and power real-world workflows.

A. An LLM generates text based on its input, while LangChain provides the structure around it. LangChain connects models with prompts, tools, memory, retrieval systems, and workflows, enabling complex, multi-step applications instead of isolated responses.

A. Some developers leave LangChain because of rapid API changes, growing layers of abstraction, or a preference for lighter, custom-built solutions. Others move to alternatives when they need simpler setups, tighter control, or lower overhead in production systems.

LangChain is free and open source under the MIT license. You can use it at no cost, but you still pay for external services that your LangChain application integrates with, such as model providers, vector databases, or other APIs.
