User experience matters, and JavaScript (JS) is an invaluable tool for creating dynamic and interactive user experiences on the web.

But that’s where the problems arise: search engines and their crawlers are a little less keen on JavaScript.

While Google and some other search engines can execute and index content that uses JavaScript, it still has its challenges, and many crawlers struggle with it. This is a particular problem for AI crawlers, as most are unable to process JavaScript properly.

This means that if your website loads content dynamically via JavaScript, this can severely impact your content’s visibility, both in traditional organic search and in increasingly popular AI-powered search results.

This guide explores the challenges of using JavaScript on your website and shares SEO best practices to help you overcome them. We’ll also show you how Seobility can help you analyze and optimize your website if it uses JS.

If you’re not 100% sure what JavaScript is and how it works, we recommend reading our JavaScript wiki article before continuing with this guide.

When JavaScript is used to load and display content dynamically, this is called client-side rendering (CSR). That’s because the client device (the browser) must process JavaScript to render (as in, build and display) the page on the user’s device. This contrasts with server-side rendering (SSR), where the website’s server generates pre-rendered HTML for the browser. Everything is already prepared for the user, and it’s easier for any tool that doesn’t run JavaScript to work out what to display.
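To make the difference concrete, here is a minimal TypeScript sketch of what a server might send for a hypothetical product page in each case. The names (`Product`, `renderClientSide`, `renderServerSide`, `/app.js`) are illustrative assumptions, not part of any framework’s API.

```typescript
interface Product {
  name: string;
  price: string;
}

// Escape characters that have special meaning in HTML text.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Client-side rendering: the response is an empty container plus a script.
// The (hypothetical) /app.js would fetch the product and fill in #app in the browser.
function renderClientSide(): string {
  return (
    '<!doctype html><html><body><div id="app"></div>' +
    '<script src="/app.js" defer></script></body></html>'
  );
}

// Server-side rendering: the server inserts the content before sending the page.
function renderServerSide(product: Product): string {
  return (
    '<!doctype html><html><body><div id="app">' +
    `<h1>${escapeHtml(product.name)}</h1><p>${escapeHtml(product.price)}</p>` +
    '</div><script src="/app.js" defer></script></body></html>'
  );
}

const demo: Product = { name: "Trail Running Shoe", price: "89.00 EUR" };
console.log(renderClientSide().includes(demo.name)); // false
console.log(renderServerSide(demo).includes(demo.name)); // true
```

A crawler that doesn’t execute JavaScript only ever sees the first response as an empty `<div>`.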

JavaScript is great for creating dynamic content, for example quizzes like the one shown below:

Dynamic content added via JavaScript can add interactivity and flavor to a website, but the content isn’t always visible to all search engines and crawlers.


While users might enjoy and share it, only the static content displayed without JavaScript is likely to be crawled. This means crawlers probably won’t see the content that’s displayed after clicking the “Take the quiz” button:

If we want to rank well, our content needs to be ready and readable for search engine crawlers, even if we’re using JavaScript and client-side rendering. While some senior Googlers have urged caution around JavaScript, Googlebot is capable of rendering JS content and Bingbot isn’t far behind. Other search engines and AI crawlers, however, often struggle to process any content delivered via JavaScript.

In a nutshell, resolving the client-side rendering problem usually means optimizing your JavaScript and ensuring the most important content is always prerendered by the server without relying on JavaScript. That way, Google and other crawlers don’t miss anything important. We’ll explore this in more detail later on.

| | Client-side rendering (CSR) | Server-side rendering (SSR) |
|---|---|---|
| How the content is displayed (rendering) | Uses the visitor’s device resources to render content with JavaScript. | Generated on the server and delivered as pre-rendered HTML. |
| Why people like it | Dynamic and interactive websites, tailored user experiences. | Faster page loads, easier indexing, better technical SEO. |
| Crawling | Search engines and important crawlers may be unable to see dynamically loaded content. | Immediately accessible for indexing by all search engines and important crawlers. |

Challenges of JavaScript in SEO

As you probably expected, the fact that JavaScript SEO is even a thing (there’s no such thing as PHP SEO, is there?) suggests there will be challenges. These are some of the big ones.

JavaScript frameworks

Some popular JavaScript frameworks used in web development, such as React, Angular, or Vue, can load new content without fully reloading the page. For example, they might be used to display a company’s home, about, and privacy policy pages all on the same URL, without the URL in the address bar ever changing.

This is called routing, and it changes the page state (as in, what’s shown on screen) without changing the whole webpage and its address.

This can cause problems for search engines, though, as they usually expect a unique URL for each page. If the content changes but the URL stays the same, parts of the site might not get indexed.
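A rough sketch of the alternative, assuming a hypothetical three-page site: give every view its own path so each one can be requested, crawled, and indexed on its own. It also shows why fragment-style routes such as `/#/about` are risky: the part after `#` is never sent to the server, and search engines generally treat it as the same page.

```typescript
interface View {
  title: string;
  body: string;
}

// Hypothetical route table: one path per view instead of one shared URL.
const routes: Record<string, View> = {
  "/": { title: "Home", body: "Welcome to Example Co." },
  "/about": { title: "About us", body: "Who we are." },
  "/privacy": { title: "Privacy policy", body: "How we handle your data." },
};

// Resolve a URL to a view using only its path. The fragment (#...) plays no
// part, which mirrors how search engines decide what counts as a page.
function resolveRoute(url: string): View | undefined {
  const { pathname } = new URL(url, "https://example.com");
  return routes[pathname];
}

// In the browser, navigation would update the address bar with the History API,
// e.g. history.pushState({}, "", "/about"), and then render resolveRoute("/about").
// The server must also answer a direct request for /about with the same view.

console.log(resolveRoute("/about")?.title); // About us
console.log(resolveRoute("/#/about")?.title); // Home: the fragment route collapses onto "/"
```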

Crawling and indexing

If a search engine can’t properly read JavaScript-based content, that content won’t show up in search results. This can happen when information such as product details, reviews, or prices is loaded from external services (for example, a reviews or stock management plugin that pulls data from another website or from company systems). Because this content appears after the page has loaded, search engines might not “see” it or be able to process it. They may even be blocked from accessing it altogether.

JavaScript can also create many versions of the same page with slightly different URLs based on user sessions. This might happen when tracking codes or filters are added to the URL. The result can be duplicate content issues, although these can be resolved with canonical tags.
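One way to keep those variants together is to compute a single canonical URL on the server and emit it as a `<link rel="canonical">` tag in the HTML itself. The sketch below assumes a hypothetical list of tracking parameters (`utm_*`, `gclid`, `fbclid`, `sessionid`); filter parameters that genuinely change the content may need different handling.

```typescript
// Hypothetical parameters that only track campaigns or sessions and never change
// what the page shows. Adjust these lists for your own site.
const TRACKING_PARAMS = new Set(["gclid", "fbclid", "sessionid"]);
const TRACKING_PREFIXES = ["utm_"];

function isTrackingParam(name: string): boolean {
  const lower = name.toLowerCase();
  return TRACKING_PARAMS.has(lower) || TRACKING_PREFIXES.some((prefix) => lower.startsWith(prefix));
}

// Collapse URL variants of the same page into one canonical URL.
function canonicalUrl(rawUrl: string): string {
  const url = new URL(rawUrl);
  // Collect names first so the parameters aren't modified while being iterated.
  const trackingNames = Array.from(url.searchParams.keys()).filter(isTrackingParam);
  for (const name of trackingNames) url.searchParams.delete(name);
  url.hash = ""; // search engines generally ignore fragments anyway
  return url.toString();
}

// Emit this in the server-rendered <head>, not via JavaScript.
function canonicalLinkTag(rawUrl: string): string {
  return `<link rel="canonical" href="${canonicalUrl(rawUrl)}">`;
}

console.log(canonicalLinkTag("https://example.com/shoes?utm_source=newsletter&color=red"));
// <link rel="canonical" href="https://example.com/shoes?color=red">
```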

AI crawlers

A study by Vercel on AI crawlers revealed that many of today’s major AI crawlers (ChatGPT, Claude, Meta, Bytespider, and Perplexity) can’t execute JavaScript at all. We don’t know yet whether Gartner’s prediction about LLMs replacing traditional search will come true. But we all know someone who uses ChatGPT or Claude like a search engine. If you want to appear in these LLM-based search results, it’s important to make sure they can access your content.
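A quick way to approximate what a crawler that can’t run JavaScript receives is to look only at the raw HTML and ignore scripts. The following sketch is a rough heuristic (regular expressions, not a real HTML parser, and no entity decoding), and the function names are hypothetical: it reports which key phrases are missing from the page before any JavaScript runs.

```typescript
// Approximate the text a non-JavaScript crawler can read: drop scripts and
// styles, strip tags, collapse whitespace. A heuristic, not a real HTML parser.
function textWithoutJavaScript(html: string): string {
  return html
    .replace(/<script\b[\s\S]*?<\/script>/gi, " ")
    .replace(/<style\b[\s\S]*?<\/style>/gi, " ")
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();
}

// Return the key phrases that are NOT present before JavaScript runs.
function missingWithoutJavaScript(html: string, phrases: string[]): string[] {
  const text = textWithoutJavaScript(html).toLowerCase();
  return phrases.filter((phrase) => !text.includes(phrase.toLowerCase()));
}
```

In Node.js 18 or later, you could feed it a live response, for example `missingWithoutJavaScript(await (await fetch(url)).text(), ["price", "reviews"])`, with `url` pointing to one of your own pages.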

Performance and sustainability

Multiple, large, or poorly optimized JavaScript files can be render-blocking. That means the page can’t be displayed properly until the JS and any related components have been fully downloaded and processed by the user’s device. This increases page load (and display) times.

It can also trigger an ugly repaint effect known as cumulative layout shift (CLS), where the page has to be “redesigned” live because of late-loading content. A classic example we’ve all seen: an eCommerce website first loads, and then a few seconds later a banner with the latest offers is inserted at the top, pushing the rest of the content down. This can have a significant negative effect on performance and user experience, both known ranking factors.

An example of cumulative layout shift: the layout of this page changes several times as it loads
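CLS is measured rather than guessed. As described in Google’s web.dev documentation, each shift’s score is its impact fraction multiplied by its distance fraction, and CLS is the largest total within a “session window” of shifts that are less than 1 second apart and span at most 5 seconds. Shifts shortly after user input don’t count. The sketch below reimplements that calculation for illustration only; in practice, Google’s `web-vitals` library handles the details.

```typescript
// The fields of a browser LayoutShift performance entry that CLS needs.
interface LayoutShiftLike {
  startTime: number; // milliseconds since navigation start
  value: number; // score of this individual shift
  hadRecentInput: boolean; // shifts right after user input are excluded
}

// Score of one shift: impact fraction (share of the viewport affected)
// multiplied by distance fraction (how far elements moved, relative to the viewport).
function layoutShiftScore(impactFraction: number, distanceFraction: number): number {
  return impactFraction * distanceFraction;
}

// CLS: group shifts into session windows (gaps under 1 s, at most 5 s long)
// and return the largest window total.
function cumulativeLayoutShift(entries: LayoutShiftLike[]): number {
  const sorted = [...entries].sort((a, b) => a.startTime - b.startTime);
  let maxWindow = 0;
  let currentWindow = 0;
  let windowStart = -Infinity;
  let previousShift = -Infinity;
  for (const entry of sorted) {
    if (entry.hadRecentInput) continue;
    const sameWindow =
      entry.startTime - previousShift < 1000 && entry.startTime - windowStart < 5000;
    if (sameWindow) {
      currentWindow += entry.value;
    } else {
      currentWindow = entry.value;
      windowStart = entry.startTime;
    }
    previousShift = entry.startTime;
    maxWindow = Math.max(maxWindow, currentWindow);
  }
  return maxWindow;
}

// A late banner shift with impact fraction 0.75 and distance fraction 0.25:
console.log(layoutShiftScore(0.75, 0.25)); // 0.1875
```

In a browser, the entries could come from `new PerformanceObserver(...).observe({ type: "layout-shift", buffered: true })`.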

Meanwhile, large file transfers and client-side rendering significantly increase a website’s carbon footprint. With growing awareness of digital carbon and the pending release of the W3C Web Sustainability Guidelines, it may be time to take a closer look at the size and impact of your JavaScript rendering.

Monitoring and analytics

Tools such as Google Analytics are often JavaScript-based themselves. This means they, in turn, can have issues on JavaScript-heavy websites. If their scripts fail to load or are significantly delayed behind the rest, interactions may not be tracked properly, reducing the quality of your analytics data.
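Delayed (as opposed to failed) tracking scripts can be softened by queueing events until the tracker is ready; Google’s own tag snippet does something similar with its `dataLayer` array. The class below is a hypothetical, minimal version of that idea. It can’t recover events if the analytics script never loads at all.

```typescript
type TrackedEvent = { name: string; params?: Record<string, string | number> };
type Sender = (event: TrackedEvent) => void;

// Buffers events until the analytics script reports that it has loaded.
class AnalyticsQueue {
  private buffer: TrackedEvent[] = [];
  private sender: Sender | null = null;

  track(event: TrackedEvent): void {
    if (this.sender) {
      this.sender(event);
    } else {
      this.buffer.push(event);
    }
  }

  // Call from the analytics script's load callback; flushes anything queued so far.
  connect(sender: Sender): void {
    this.sender = sender;
    for (const event of this.buffer.splice(0)) sender(event);
  }

  get pending(): number {
    return this.buffer.length;
  }
}
```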

Structured data and other enhancements

Some SEO and internationalization tools rely on JavaScript to insert schema markup (structured data) and localized content, particularly alt text, but sometimes also meta tags and page content. Relying on JavaScript to insert important content dynamically is risky: you may miss your chance to appear in rich snippet results, or search engines may pick up the wrong content.
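The safer pattern is to generate structured data on the server so it is already in the HTML. A minimal sketch, assuming a hypothetical `ProductInfo` shape and schema.org’s `Product` and `Offer` types:

```typescript
interface ProductInfo {
  name: string;
  description: string;
  price: string;
  currency: string; // ISO 4217 code, e.g. "EUR"
}

// Build schema.org Product markup as a JSON-LD <script> tag on the server,
// so it is part of the initial HTML instead of being injected later.
function productJsonLd(product: ProductInfo): string {
  const data = {
    "@context": "https://schema.org",
    "@type": "Product",
    name: product.name,
    description: product.description,
    offers: {
      "@type": "Offer",
      price: product.price,
      priceCurrency: product.currency,
    },
  };
  // Escape "<" so a value containing "</script>" cannot close the tag early.
  const json = JSON.stringify(data).replace(/</g, "\\u003c");
  return `<script type="application/ld+json">${json}</script>`;
}
```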

Best practices for JavaScript SEO

You’ve read the (many) challenges. But before you panic, it’s worth remembering that not all JavaScript comes with the same challenges, and even when it does, there are usually good ways to handle them for users and SEO alike.

Avoid using JavaScript when it doesn’t add value

JavaScript isn’t bad: it’s often the easiest way to add or manage certain accessibility features, such as dark mode or responsive menus! But it’s worth considering whether the content you’re planning really needs to be delivered via JavaScript. Perhaps there’s another way to handle it, one that wouldn’t hide essential content from crawlers? Before you go any further with your optimizations, make sure that any content that will always be displayed is provided without relying on JavaScript.
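For example (a hypothetical FAQ, not from the original article), an accordion doesn’t need a script at all: the native HTML `<details>` and `<summary>` elements open and close without JavaScript, and the answers stay in the HTML that crawlers receive. A small server-side sketch:

```typescript
interface FaqItem {
  question: string;
  answer: string;
}

function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// <details>/<summary> toggle natively in the browser, so the FAQ works without
// JavaScript and every answer is already in the HTML crawlers receive.
function renderFaq(items: FaqItem[]): string {
  return items
    .map(
      (item) =>
        `<details><summary>${escapeHtml(item.question)}</summary>` +
        `<p>${escapeHtml(item.answer)}</p></details>`,
    )
    .join("\n");
}
```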

Render important content without JavaScript

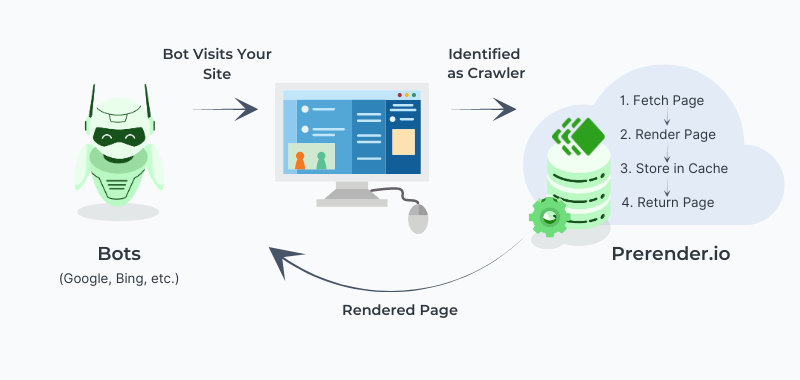

Make sure the most important content, such as above-the-fold content, metadata, images, links, and schema markup, is accessible to search engines without JavaScript. Server-side rendering (SSR) and related approaches such as pre-rendering and static site generation (SSG) mean that the page is fully built before it is sent to the browser. For JavaScript-heavy sites where full SSR or SSG isn’t practical, dynamic rendering can be an effective compromise: it serves a pre-rendered version to search engines and other crawlers, while human visitors get the full interactive experience with JavaScript. Some frameworks, such as Next.js or Nuxt.js, support this kind of dual setup natively, and tools exist for other JavaScript frameworks too.

One tool for this purpose is Prerender, which automatically creates ready-to-index versions of your pages. You can read more about pre-rendering on its website.

How pre-rendering works (Source)

The idea is to make sure your most important content is always accessible and ready to be indexed, even if JavaScript arrives late to the rendering rendezvous.
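Dynamic rendering usually boils down to a per-request decision based on the user agent. The sketch below is illustrative only: the crawler token list is an assumption and far from complete, user agents can be spoofed, and Google itself describes dynamic rendering as a workaround rather than a long-term solution. Both responses should carry the same content.

```typescript
// Illustrative, incomplete list of crawler user-agent tokens (an assumption).
const CRAWLER_TOKENS = [
  "googlebot",
  "bingbot",
  "gptbot",
  "claudebot",
  "perplexitybot",
  "bytespider",
  "meta-externalagent",
];

function isKnownCrawler(userAgent: string): boolean {
  const ua = userAgent.toLowerCase();
  return CRAWLER_TOKENS.some((token) => ua.includes(token));
}

interface RenderChoice {
  kind: "prerendered" | "app";
  html: string;
}

// Crawlers get a prerendered snapshot; everyone else gets the JavaScript app.
// Both versions should contain the same content.
function chooseResponse(userAgent: string, prerenderedHtml: string, appShellHtml: string): RenderChoice {
  return isKnownCrawler(userAgent)
    ? { kind: "prerendered", html: prerenderedHtml }
    : { kind: "app", html: appShellHtml };
}
```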

Use SEO-friendly lazy loading and progressive enhancement

Design your website so that basic content can always load, even if JavaScript fails or it and other assets take a while to load. Progressive enhancement means providing something functional in static HTML that loads and meets key goals without JavaScript, then using JavaScript to add interactive features on top.

For example, a blog post might be designed to show text and images first, while additional features such as an interactive comment section are displayed afterwards, once JavaScript is available. This keeps your core content accessible and indexable, even if JavaScript doesn’t load properly.
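Applied to the blog example, progressive enhancement might look like this hypothetical renderer: the article and a plain HTML comment form work on their own, and the deferred script is only an enhancement on top. The form’s action path and `/comments.js` are assumptions for illustration.

```typescript
interface BlogPost {
  slug: string;
  title: string;
  body: string;
}

function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Core content plus a comment form that submits as a normal HTML POST.
// Nothing essential depends on JavaScript.
function renderPost(post: BlogPost): string {
  return [
    `<article><h1>${escapeHtml(post.title)}</h1><p>${escapeHtml(post.body)}</p></article>`,
    `<form method="post" action="/posts/${encodeURIComponent(post.slug)}/comments">`,
    '<textarea name="comment" required></textarea>',
    '<button type="submit">Post comment</button>',
    "</form>",
    // Enhancement only: the (hypothetical) script could intercept the form's
    // submit event and send it with fetch() instead of reloading the page.
    '<script src="/comments.js" defer></script>',
  ].join("\n");
}
```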

In a similar vein, lazy loading is a great way to improve page speed by loading images or other assets only when they’re needed. However, search engines may not always index that content properly if you use JavaScript-based lazy loading. Be sure to:

- Use the HTML attribute `loading="lazy"` for images
- Don’t hide content with `display: none` or `visibility: hidden`
- Always include a placeholder image (like `src="https://www.seobility.net/en/blog/javascript-and-seo/fallback.webp"`)

This ensures search engines can access and understand your content, even before JavaScript loads.
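The markup side of this can be generated on the server. In this sketch, the number of images treated as above the fold (`eagerCount`) is an assumption you would tune per layout; explicit `width` and `height` let the browser reserve space, which also helps avoid layout shifts.

```typescript
interface ImageSpec {
  src: string;
  alt: string;
  width: number;
  height: number;
}

function escapeAttr(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/"/g, "&quot;").replace(/</g, "&lt;");
}

// The first `eagerCount` images (assumed to be above the fold) load normally;
// the rest use the browser's native lazy loading, no JavaScript required.
function renderImages(images: ImageSpec[], eagerCount = 1): string[] {
  return images.map((image, index) => {
    const lazy = index >= eagerCount ? ' loading="lazy"' : "";
    return (
      `<img src="${escapeAttr(image.src)}" alt="${escapeAttr(image.alt)}"` +
      ` width="${image.width}" height="${image.height}"${lazy}>`
    );
  });
}
```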

Optimize JavaScript for performance and sustainability

Large scripts can slow down pages, hurting SEO and user experience while increasing your digital carbon footprint. Fortunately, there are ways to minimize this impact:

- A good place to start is minifying and compressing your JavaScript files to reduce their size and load time. Many caching and optimization tools include automatic code minification and can enable GZIP compression for faster, smaller code. There are also online minification tools for any other code.

- You can also use async and defer to prioritize loading other content first. Many optimization plugins can take care of this, too.

- But you should also check that your JavaScript isn’t render-blocking, as this can affect your Core Web Vitals.
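To get a feel for what compression saves, this Node.js sketch gzips a deliberately repetitive, made-up bundle with the built-in `zlib` module. Real savings depend on your code; on a live site, the web server or CDN normally applies compression and signals it with a `Content-Encoding` header.

```typescript
import { gzipSync } from "node:zlib";

// A made-up, deliberately repetitive "bundle" standing in for real JavaScript.
const bundle = Array.from(
  { length: 200 },
  (_, i) => `function handler${i}(event) { console.log("clicked", event.target); }`,
).join("\n");

const originalBytes = Buffer.byteLength(bundle, "utf8");
const compressed = gzipSync(bundle); // zlib accepts strings as well as Buffers
const savedPercent = 100 * (1 - compressed.length / originalBytes);

console.log(`original: ${originalBytes} bytes, gzip: ${compressed.length} bytes`);
console.log(`saved about ${savedPercent.toFixed(1)}%`);
```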

Sounds complicated? Don’t worry: our article on page speed optimization will guide you through implementing these steps!

You can also use Chrome DevTools to check coverage, as in, how much of the loaded code is actually used on the page. That can help you find unused JS components that slow down your website unnecessarily.
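If you collect coverage data programmatically (for example from Puppeteer’s `page.coverage.stopJSCoverage()`, whose entries include the script `url`, its `text`, and the `ranges` that actually ran), you can rank scripts by how much of them went unused. This sketch assumes that entry shape; overlapping ranges are merged so code isn’t counted twice.

```typescript
// Entry shape assumed to match Puppeteer's JS coverage output:
// the script text plus the character ranges that actually executed.
interface CoverageEntry {
  url: string;
  text: string;
  ranges: { start: number; end: number }[];
}

// Total length covered by the ranges, merging overlaps so nothing is counted twice.
function usedLength(ranges: { start: number; end: number }[]): number {
  const sorted = [...ranges].sort((a, b) => a.start - b.start);
  let total = 0;
  let start = 0;
  let end = 0;
  for (const range of sorted) {
    if (range.start > end) {
      total += end - start;
      start = range.start;
      end = range.end;
    } else {
      end = Math.max(end, range.end);
    }
  }
  return total + (end - start);
}

// Rank scripts by the share of their code that never ran.
function unusedReport(entries: CoverageEntry[]): { url: string; unusedPercent: number }[] {
  return entries
    .map((entry) => {
      const total = entry.text.length;
      const used = Math.min(usedLength(entry.ranges), total);
      return { url: entry.url, unusedPercent: total === 0 ? 0 : (100 * (total - used)) / total };
    })
    .sort((a, b) => b.unusedPercent - a.unusedPercent);
}
```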

More technically inclined readers can also consider code splitting: breaking larger files or libraries into smaller, manageable chunks that load on demand.
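With modern bundlers, code splitting is usually triggered by a dynamic `import()`. Here is a minimal sketch of loading a chunk only on first use; `./quiz` and `startQuiz` are hypothetical names for the quiz example earlier in this guide, and the loader is passed in so the helper itself runs anywhere.

```typescript
// Returns a function that starts loading the chunk on first call and reuses the
// same promise afterwards. If loading fails, the next call tries again.
function lazyModule<T>(loader: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | undefined;
  return () => {
    if (pending) return pending;
    const attempt = loader().catch((error: unknown) => {
      pending = undefined; // allow a retry after a failed download
      throw error;
    });
    pending = attempt;
    return attempt;
  };
}

// In a bundled app (hypothetical module and function names):
// const loadQuiz = lazyModule(() => import("./quiz"));
// quizButton.addEventListener("click", async () => (await loadQuiz()).startQuiz());
```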

Developers can also consider whether vanilla JavaScript (JavaScript that doesn’t rely on collections of premade functions, known as libraries) or a more streamlined library would be more efficient than features that depend on larger libraries.
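A small illustration, assuming a page that only needs to format publication dates: the built-in `Intl.DateTimeFormat` API can cover that without shipping a separate date library. Whether this saves anything depends on what the library was doing for you, so measure your bundle before and after.

```typescript
// Built-in Intl API instead of a separate date-formatting library.
// timeZone is fixed to UTC so a date-only ISO string doesn't shift by a day.
function formatPublishDate(isoDate: string, locale = "en-GB"): string {
  return new Intl.DateTimeFormat(locale, {
    year: "numeric",
    month: "long",
    day: "numeric",
    timeZone: "UTC",
  }).format(new Date(isoDate));
}

console.log(formatPublishDate("2024-03-05"));
console.log(formatPublishDate("2024-03-05", "de-DE"));
```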

Monitor for JavaScript errors and proper rendering

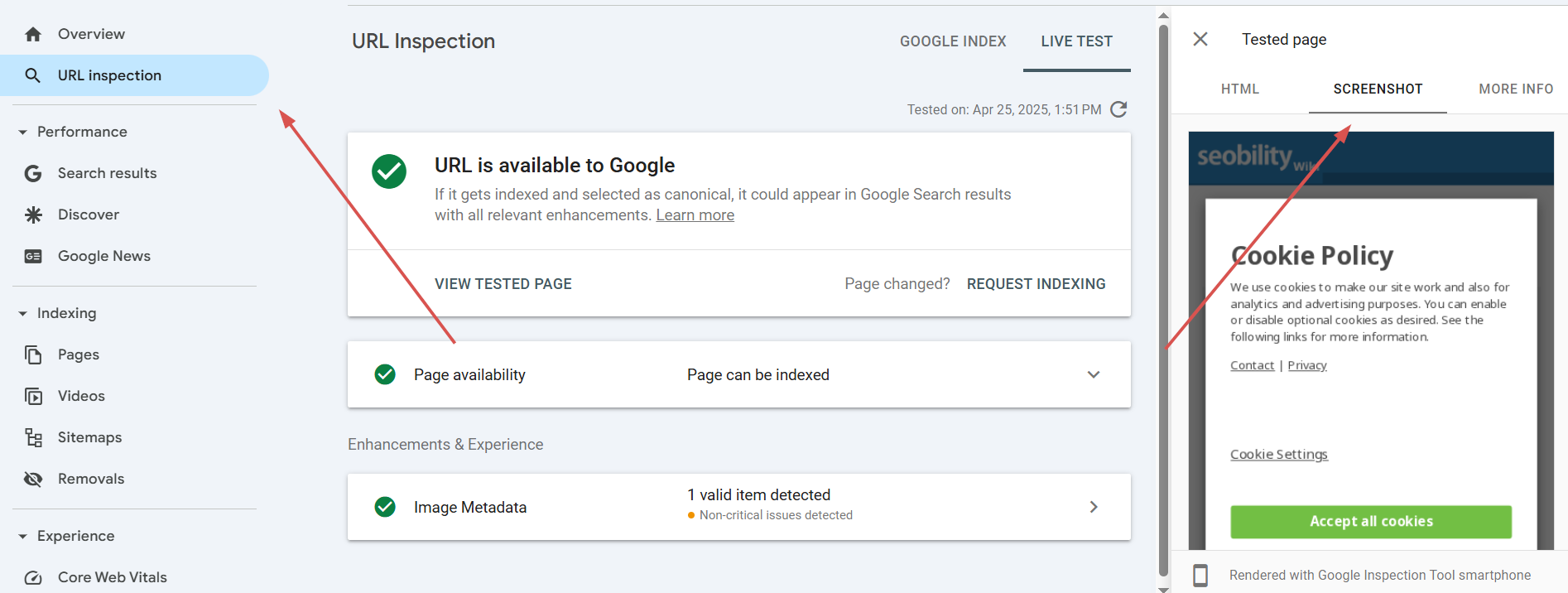

JavaScript errors can break your pages and prevent indexing. On the technical side, Google Search Console and Chrome DevTools can help you identify errors and debug JavaScript.
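For errors that happen in visitors’ browsers, a lightweight reporter can capture uncaught errors and unhandled promise rejections. In this sketch, the target would be `window` and the sender might call `navigator.sendBeacon()` with a hypothetical `/js-errors` endpoint on your own server; both are passed in so the code also runs outside a browser.

```typescript
interface ErrorReport {
  message: string;
  source: string;
  line: number;
  column: number;
  page: string;
}

// Fields that a browser ErrorEvent carries (declared here so no DOM types are needed).
interface ErrorEventFields {
  message?: string;
  filename?: string;
  lineno?: number;
  colno?: number;
}

function installErrorReporter(target: EventTarget, pageUrl: string, send: (body: string) => void): void {
  // Uncaught exceptions, e.g. from a failing third-party script.
  target.addEventListener("error", (event) => {
    const e = event as Event & ErrorEventFields;
    const report: ErrorReport = {
      message: e.message ?? "unknown error",
      source: e.filename ?? "",
      line: e.lineno ?? 0,
      column: e.colno ?? 0,
      page: pageUrl,
    };
    send(JSON.stringify(report));
  });
  // Promise rejections nobody handled, e.g. a failed fetch() for page content.
  target.addEventListener("unhandledrejection", (event) => {
    const reason = (event as Event & { reason?: unknown }).reason;
    const report: ErrorReport = {
      message: `Unhandled rejection: ${String(reason)}`,
      source: "",
      line: 0,
      column: 0,
      page: pageUrl,
    };
    send(JSON.stringify(report));
  });
}

// Browser usage (sketch):
// installErrorReporter(window, location.href, (body) => navigator.sendBeacon("/js-errors", body));
```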

It’s also important to make sure that crawlers render your JS-based content correctly. However, it can be hard to tell on your own what a crawler will see. Google Search Console offers some hints in its URL Inspection tool, which lets you check any individual page to see whether Google is rendering it correctly. See below for an example.

But sometimes it helps to get more detailed information, with a bit more support and context: that’s where Seobility’s ability to crawl websites whose content is provided dynamically via JavaScript comes in.

How Seobility can guide you on your JavaScript SEO journey

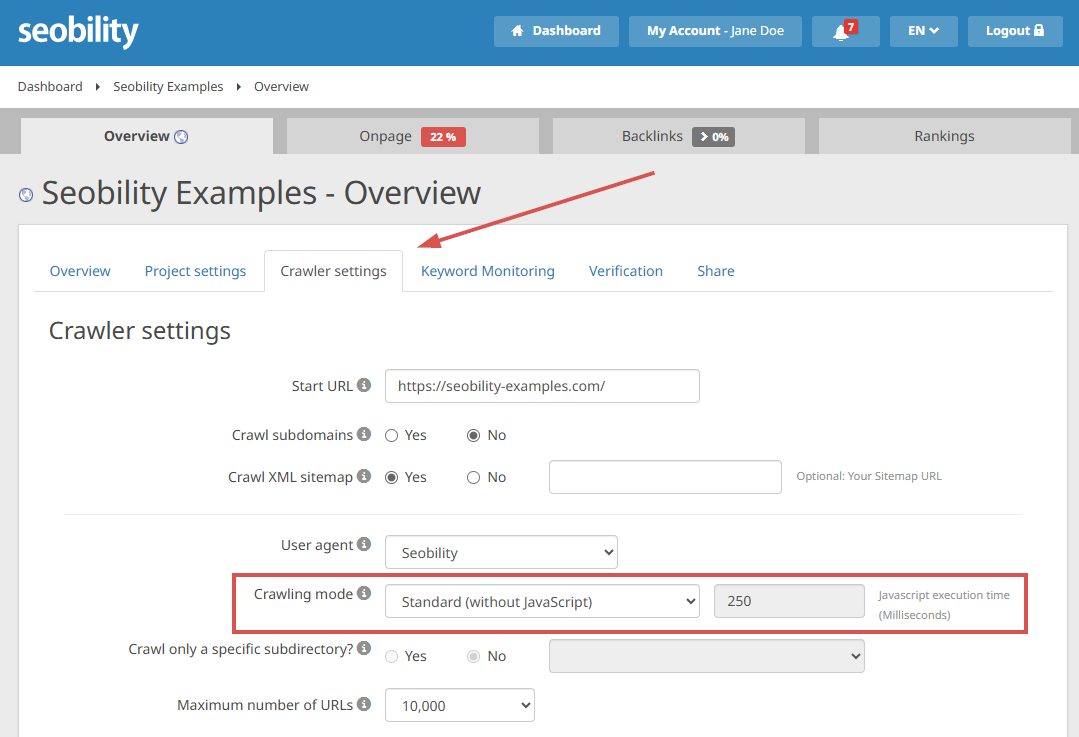

Seobility’s Website Audit has two crawling modes: the standard mode without JavaScript, and Chrome (JavaScript-enabled) mode.

To activate JavaScript crawling, go to Overview > Crawler Settings for your project.

JavaScript crawling mode is essential if you’re working with JavaScript-heavy sites: it analyzes the generated code rather than just the original HTML and the “instructions” in the JavaScript. This gives you better insight into what JavaScript-capable search engines actually see, although it’s still not a guarantee.

You can also set the JavaScript execution time to simulate the “patience” of crawlers. This helps you see what search engines might or might not detect.

Please note that JavaScript-enabled crawls take significantly longer than standard HTML-based crawls.

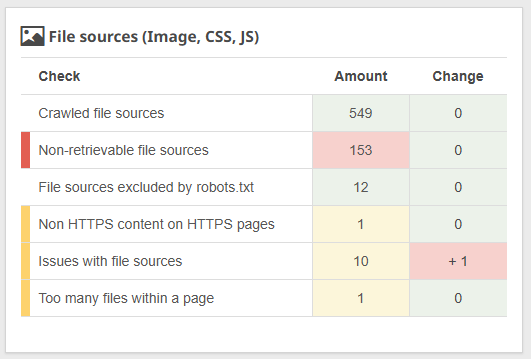

The File Resources Report, found under On-page > Tech & Meta, can also provide useful insights and highlight problems with your JavaScript files.

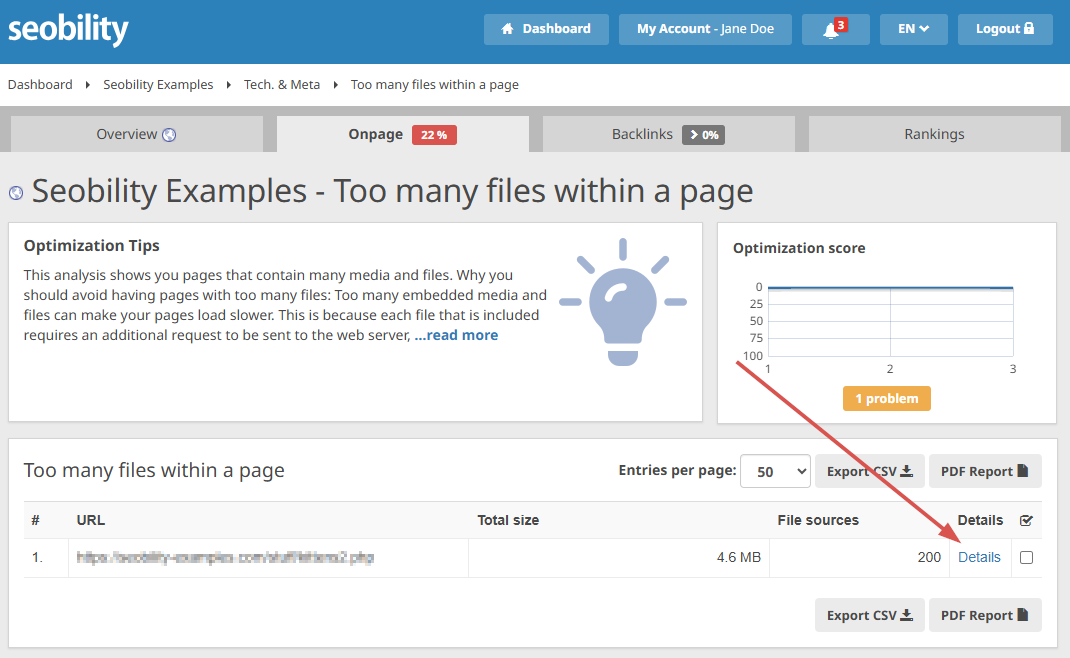

The File Resources Report highlights any problems with files used on your website, including JavaScript files: for example, when there are simply too many JavaScript files, or when these files are so large that they affect performance.

JavaScript issues can also show up in Seobility’s analysis in other ways, though:

- Missing metadata, alt attributes, or something else you swear you included? This can happen when images or important content are injected via JavaScript.

- Unrecognized lazy-loaded images? Important images shouldn’t be lazy loaded; otherwise crawlers (including Seobility’s own) might miss them!

- Too many JavaScript files, but you never knew they were there? Click on Details next to the report to find the URLs so you can start tracking them down.

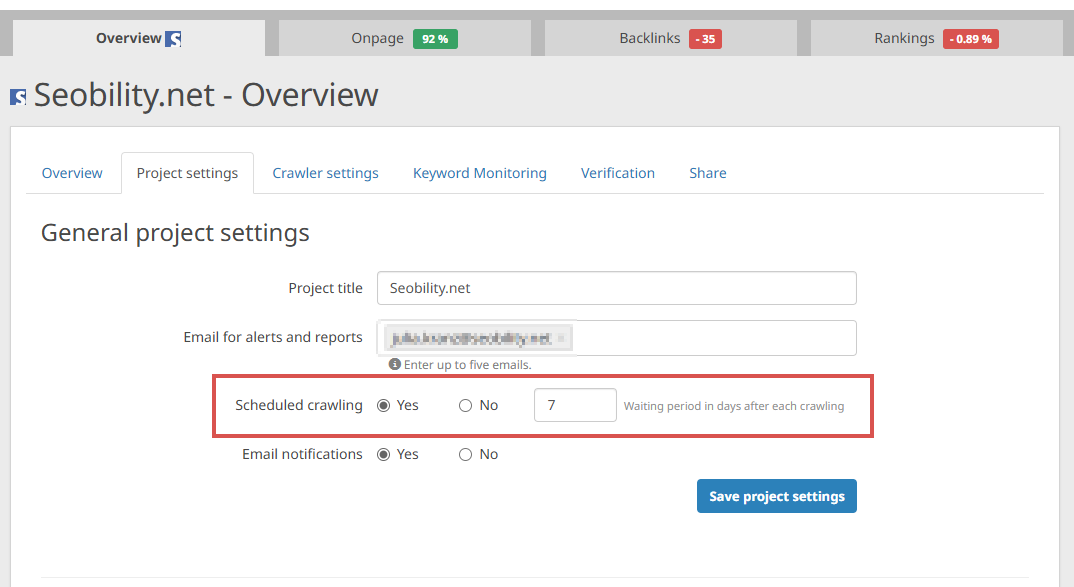

You can also set up Seobility to recrawl your site periodically:

Overview > Project settings

This lets you monitor your progress in optimizing SEO on your JavaScript-rich website, or act fast when something goes wrong!

JavaScript SEO: Not necessarily a contradiction

Yes, dynamic content injected by JavaScript poses several challenges for SEO, affecting the way search engines crawl, render, and index content. But best practices such as server-side rendering (SSR), progressive enhancement, lazy loading, optimizing your JavaScript, and carefully monitoring for errors can improve how search engines process your content.

While minimizing JavaScript and dynamic content injection across the board will probably save you a lot of headaches (and carbon), many of these best practices apply to more than just JavaScript. At the same time, don’t let the fiddly bits put you off: JavaScript is almost essential if you want to provide a responsive, accessible user experience, and it can be optimized for SEO.

Experience JavaScript-enabled SEO crawling for yourself with Seobility: sign up for a free 14-day trial right now. What are you waiting for? Run, don’t crawl! (Sorry.)
