Hugging Face has just released FineVision, an open multimodal dataset designed to set a new standard for Vision-Language Models (VLMs). With 17.3 million images, 24.3 million samples, 88.9 million question-answer turns, and nearly 10 billion answer tokens, FineVision positions itself as one of the largest and most structured publicly available VLM training datasets.

FineVision aggregates 200+ sources into a unified format, rigorously filtered for duplicates and benchmark contamination. Because every sample is rated systematically across multiple quality dimensions, researchers and developers can assemble robust training mixtures while minimizing data leakage.
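To make "a unified format" concrete, here is a minimal sketch of what a normalized multi-turn sample could look like. The field names (`images`, `turns`, `source`) and the `from_vqa_record` converter are hypothetical illustrations, not FineVision's published schema. Check the dataset card for the actual columns.

```python
from dataclasses import dataclass, field


@dataclass
class Turn:
    user: str       # question or instruction
    assistant: str  # reference answer


@dataclass
class UnifiedSample:
    # Hypothetical normalized record: images plus one or more QA turns
    images: list[str] = field(default_factory=list)   # e.g. file paths
    turns: list[Turn] = field(default_factory=list)
    source: str = ""                                   # name of the original dataset


def from_vqa_record(record: dict, source: str) -> UnifiedSample:
    """Convert a hypothetical single-image VQA record with parallel
    'questions'/'answers' lists into the unified multi-turn format."""
    turns = [Turn(q, a) for q, a in zip(record["questions"], record["answers"])]
    return UnifiedSample(images=[record["image"]], turns=turns, source=source)


sample = from_vqa_record(
    {
        "image": "img_0001.png",
        "questions": ["What is the title of the chart?", "Which bar is tallest?"],
        "answers": ["Quarterly revenue", "Q4"],
    },
    source="example_chart_qa",
)
print(len(sample.turns))  # 2
```

In this representation, the headline figures imply an average of roughly 88.9M / 24.3M ≈ 3.7 QA turns per sample.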

Why Is FineVision Important for VLM Training?

Most state-of-the-art VLMs rely on proprietary datasets, which limits reproducibility and accessibility for the broader research community. FineVision addresses this gap through:

- Scale and Coverage: 5 TB of curated data across 9 categories, including General VQA, OCR QA, Chart & Table reasoning, Science, Captioning, Grounding & Counting, and GUI navigation.

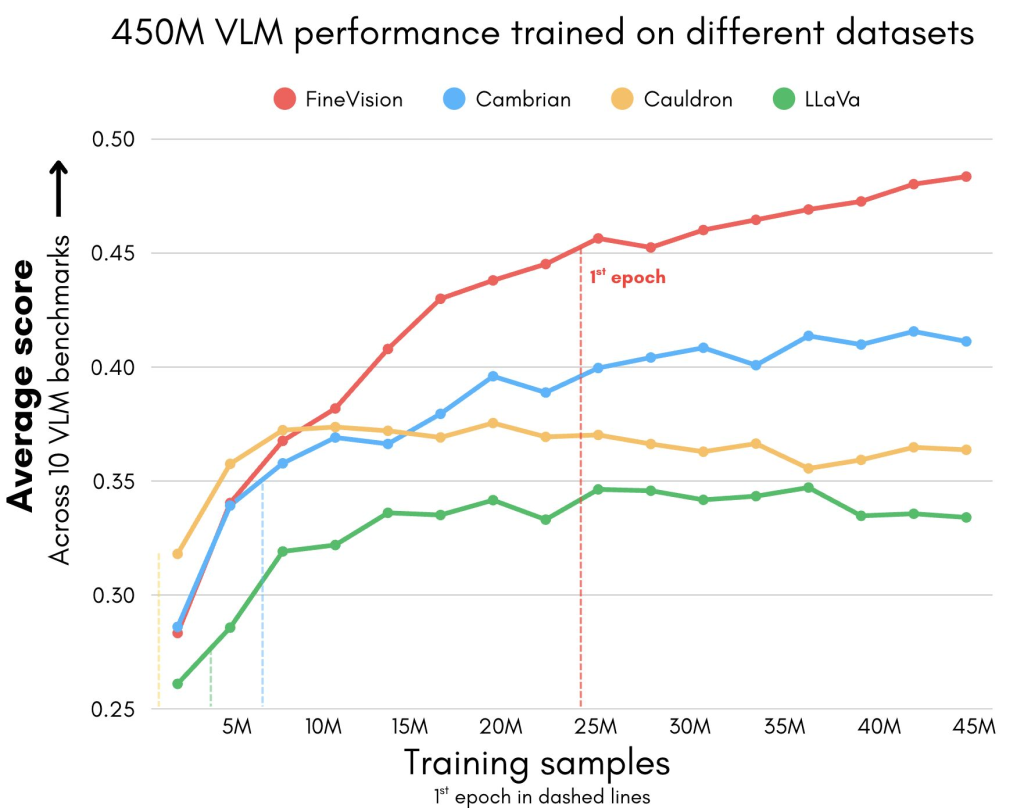

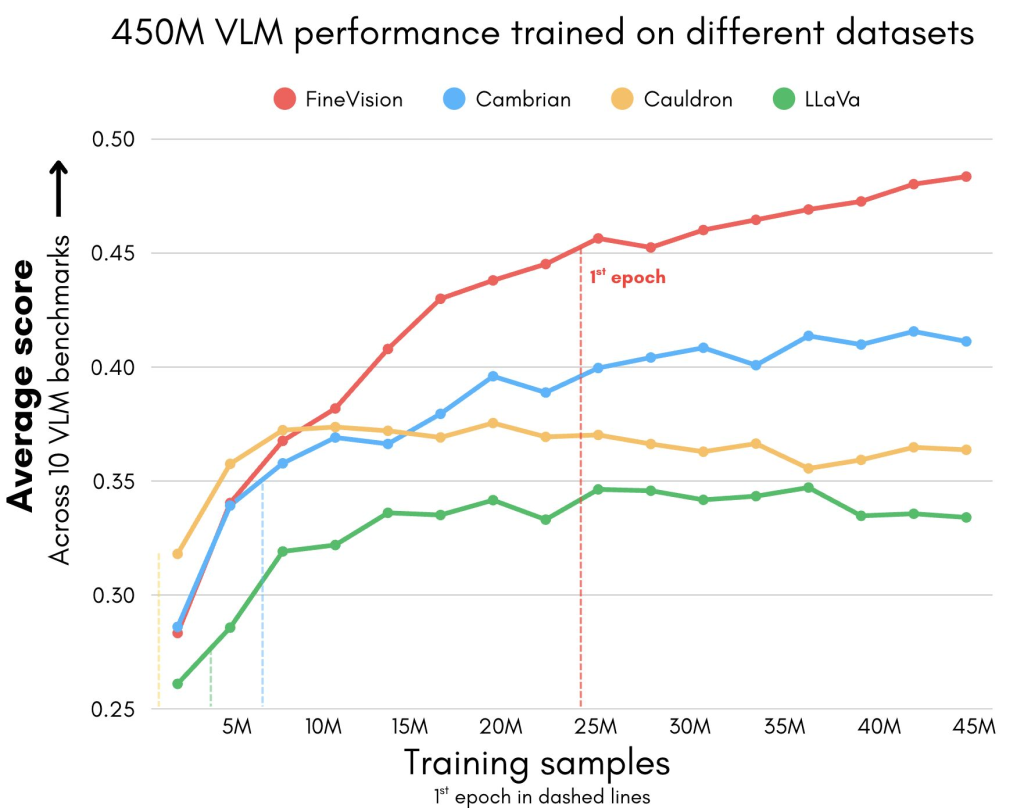

- Benchmark Gains: Across 11 widely used benchmarks (e.g., AI2D, ChartQA, DocVQA, ScienceQA, OCRBench), models trained on FineVision outperform alternatives by substantial margins: up to 46.3% over LLaVA, 40.7% over Cauldron, and 12.1% over Cambrian.

- New Skill Domains: FineVision introduces data for emerging tasks such as GUI navigation, pointing, and counting, extending VLM capabilities beyond conventional captioning and VQA.

How Was FineVision Built?

The curation pipeline followed a three-step process:

- Collection and Augmentation: Over 200 publicly available image-text datasets were gathered. Data with a missing modality (e.g., text-only data) was reformatted into QA pairs, and underrepresented domains, such as GUI data, were supplemented through targeted collection.

- Cleaning:
  - Removed oversized QA pairs (>8192 tokens).
  - Resized large images to a maximum of 2048 px while preserving aspect ratio.
  - Discarded corrupted samples.

- Quality Rating: Using Qwen3-32B and Qwen2.5-VL-32B-Instruct as judges, every QA pair was rated on four axes:
  - Text Formatting Quality
  - Question-Answer Relevance
  - Visual Dependency
  - Image-Question Correspondence
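The Cleaning step can be sketched as a per-sample filter. This is an illustration under stated assumptions. Only the 8192-token limit and the 2048 px cap come from the article. The whitespace token counter is a placeholder for the real tokenizer, which is not named here. The sample layout is hypothetical, and "corrupted" is approximated as an image whose size cannot be read.

```python
from typing import Optional

MAX_TOKENS = 8192   # QA pairs longer than this are dropped (from the article)
MAX_SIDE_PX = 2048  # longest image side after resizing (from the article)


def count_tokens(text: str) -> int:
    # Placeholder assumption: whitespace split stands in for a real tokenizer
    return len(text.split())


def resized_dims(width: int, height: int, max_side: int = MAX_SIDE_PX) -> tuple[int, int]:
    """Scale (width, height) down so the longer side is at most max_side,
    preserving aspect ratio. Images already within the limit are unchanged."""
    longest = max(width, height)
    if longest <= max_side:
        return width, height
    scale = max_side / longest
    return max(1, round(width * scale)), max(1, round(height * scale))


def clean_sample(sample: dict) -> Optional[dict]:
    """Return a cleaned copy of a hypothetical sample, or None to discard it.
    Assumed layout: {"image_size": (w, h) or None, "turns": [(question, answer), ...]}"""
    size = sample.get("image_size")
    if size is None or min(size) <= 0:
        return None  # unreadable image metadata is treated as a corrupted sample
    turns = [
        (q, a) for q, a in sample["turns"]
        if count_tokens(q) + count_tokens(a) <= MAX_TOKENS
    ]
    if not turns:
        return None  # nothing usable left after dropping oversized QA pairs
    return {"image_size": resized_dims(*size), "turns": turns}


cleaned = clean_sample({"image_size": (4096, 3072), "turns": [("What is shown?", "A cat.")]})
print(cleaned["image_size"])  # (2048, 1536)
```

A real pipeline would resize the pixels themselves (for example with an image library). Computing target dimensions separately keeps this sketch dependency-free.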

These ratings make selective training mixtures possible. Even so, ablations show that training on the full dataset, lower-rated samples included, yields the best performance.
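A selective mixture of this kind amounts to thresholding on the four scores. The sketch below makes several assumptions. Each QA pair (one per sample here, for simplicity) carries integer ratings on a 1-5 scale under hypothetical keys named after the four axes. The real column names and scale should be checked on the dataset card. A threshold of 1 keeps everything, which matches the configuration the ablations favored.

```python
# Hypothetical keys for the four rating axes described in the article
AXES = ("formatting", "relevance", "visual_dependency", "image_correspondence")


def passes(ratings: dict, min_score: int) -> bool:
    # A QA pair is kept only if every axis meets the threshold
    return all(ratings[axis] >= min_score for axis in AXES)


def build_mixture(samples: list, min_score: int = 1) -> list:
    """Keep samples whose ratings all meet min_score.
    On an assumed 1-5 scale, min_score=1 keeps every sample."""
    return [s for s in samples if passes(s["ratings"], min_score)]


samples = [
    {"id": "a", "ratings": {"formatting": 5, "relevance": 4, "visual_dependency": 5, "image_correspondence": 4}},
    {"id": "b", "ratings": {"formatting": 3, "relevance": 3, "visual_dependency": 4, "image_correspondence": 5}},
    {"id": "c", "ratings": {"formatting": 4, "relevance": 5, "visual_dependency": 1, "image_correspondence": 3}},
]
for threshold in (1, 3, 4):
    print(threshold, [s["id"] for s in build_mixture(samples, threshold)])
```

With these toy ratings, thresholds 1, 3 and 4 keep three, two and one samples respectively. Every stricter threshold trades data volume for rated quality, and the ablations suggest volume wins.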

Comparative Analysis: FineVision vs. Existing Open Datasets

| Dataset | Images | Samples | Turns | Tokens | Benchmark Leakage | Perf. Drop After Deduplication |
|---|---|---|---|---|---|---|
| Cauldron | 2.0M | 1.8M | 27.8M | 0.3B | 3.05% | -2.39% |
| LLaVA-Vision | 2.5M | 3.9M | 9.1M | 1.0B | 2.15% | -2.72% |
| Cambrian-7M | 5.4M | 7.0M | 12.2M | 0.8B | 2.29% | -2.78% |
| FineVision | 17.3M | 24.3M | 88.9M | 9.5B | 1.02% | -1.45% |

FineVision is not only the largest of these datasets but also the least contaminated, with just 1% overlap with benchmark test sets. It also shows the smallest performance drop once overlapping samples are removed. Together, these results indicate minimal data leakage and make its evaluation results more trustworthy.
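The article does not say how overlap with benchmark test sets was measured. The sketch below therefore uses a deliberately simple stand-in: exact SHA-256 matching of image bytes. It shows what a leakage percentage means and how deduplication removes the overlapping samples. Exact hashing misses re-encoded or resized copies that perceptual hashes or image-embedding search would catch.

```python
import hashlib


def fingerprint(image_bytes: bytes) -> str:
    # Exact-match fingerprint; near-duplicate detection would need
    # perceptual hashes or image embeddings instead
    return hashlib.sha256(image_bytes).hexdigest()


def leakage_rate(train_images: list, test_images: list) -> float:
    """Fraction of training images whose fingerprint also occurs in the test set."""
    test_hashes = {fingerprint(img) for img in test_images}
    hits = sum(fingerprint(img) in test_hashes for img in train_images)
    return hits / len(train_images) if train_images else 0.0


def deduplicate(train_images: list, test_images: list) -> list:
    """Drop training images that collide with any test image."""
    test_hashes = {fingerprint(img) for img in test_images}
    return [img for img in train_images if fingerprint(img) not in test_hashes]


# Toy byte strings stand in for encoded image files
train = [b"img-1", b"img-2", b"img-3", b"img-4"]
test = [b"img-3", b"img-9"]
print(f"{leakage_rate(train, test):.2%}")  # 25.00%
print(len(deduplicate(train, test)))       # 3
```

The "Perf. Drop After Deduplication" column reflects retraining on the deduplicated data and re-running the benchmarks. A smaller drop suggests that less of the original score depended on test-set overlap.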

Performance Insights

- Model Setup: Ablations were conducted with nanoVLM (460M parameters), which combines SmolLM2-360M-Instruct as the language backbone with SigLIP2-Base-512 as the vision encoder.

- Training Efficiency: On 32 NVIDIA H100 GPUs, one full epoch (12k steps) takes ~20 hours.

- Performance Trends:
  - FineVision models improve steadily with exposure to diverse data, overtaking the baselines after ~12k steps.
  - Deduplication experiments confirm FineVision's low leakage compared to Cauldron, LLaVA, and Cambrian.
  - Multilingual subsets yield slight performance gains even when the language backbone is monolingual, suggesting that data diversity outweighs strict alignment.
  - Attempts at multi-stage training (two or 2.5 stages) did not yield consistent benefits, reinforcing that scale and diversity matter more than training heuristics.
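The training-efficiency figures support a quick back-of-envelope check. The sketch uses only numbers from this article (32 H100 GPUs, ~20 hours, 12k steps, 24.3M samples). It assumes every step consumes an equal share of the epoch and ignores any sequence packing, so the results are rough averages rather than the actual batch configuration.

```python
GPUS = 32
EPOCH_HOURS = 20.0
STEPS_PER_EPOCH = 12_000
SAMPLES = 24_300_000

gpu_hours = GPUS * EPOCH_HOURS                         # compute for one epoch
seconds_per_step = EPOCH_HOURS * 3600 / STEPS_PER_EPOCH
samples_per_step = SAMPLES / STEPS_PER_EPOCH           # average, assuming equal-sized steps

print(f"{gpu_hours:.0f} GPU-hours per epoch")         # 640 GPU-hours per epoch
print(f"{seconds_per_step:.1f} s per step")           # 6.0 s per step
print(f"~{samples_per_step:,.0f} samples per step")   # ~2,025 samples per step
```

By the same arithmetic, the ~12k-step point where FineVision overtakes the baselines corresponds to roughly one pass over the data.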

Why Does FineVision Set a New Standard?

- +20% Average Performance Boost: Outperforms the other open datasets compared (Cauldron, LLaVA-Vision, Cambrian-7M) across 10+ benchmarks.

- Unprecedented Scale: 17M+ images, 24M+ samples, and nearly 10B answer tokens.

- Skill Expansion: Includes GUI navigation, counting, pointing, and document reasoning.

- Lowest Data Leakage: 1% contamination, compared to 2–3% in other datasets.

- Fully Open Source: Available on the Hugging Face Hub for immediate use via the `datasets` library.
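A minimal sketch of previewing the data with the `datasets` library follows. Several parts are assumptions. The Hub repository id `HuggingFaceM4/FineVision` should be verified on the dataset page. The sketch also assumes each subset is a separate config exposing a `train` split. The helper name `preview_finevision` is hypothetical. Streaming mode iterates over remote shards instead of downloading the full ~5 TB.

```python
from itertools import islice

REPO_ID = "HuggingFaceM4/FineVision"  # assumed Hub repo id; verify on the dataset page


def preview_finevision(num_rows: int = 2) -> None:
    """Hypothetical helper: list the available subsets and print the
    column names of the first few rows of one subset."""
    # Imported lazily so this module loads even without `datasets` installed
    from datasets import get_dataset_config_names, load_dataset

    configs = get_dataset_config_names(REPO_ID)
    print(f"{len(configs)} subsets, e.g. {configs[:5]}")

    # streaming=True yields rows lazily rather than downloading every shard
    ds = load_dataset(REPO_ID, configs[0], split="train", streaming=True)
    for row in islice(ds, num_rows):
        print(sorted(row.keys()))
```

Calling `preview_finevision()` requires network access and `pip install datasets`. Printing the column names first is safer than hard-coding field names, because the exact schema should be confirmed on the dataset card.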

Conclusion

FineVision marks a major advance in open multimodal datasets. Its large scale, systematic curation, and transparent quality assessments create a reproducible and extensible foundation for training state-of-the-art Vision-Language Models. By reducing dependence on proprietary sources, it enables researchers and developers to build competitive systems and accelerate progress in areas such as document analysis, visual reasoning, and agentic multimodal tasks.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform receives over 2 million monthly views, illustrating its popularity among audiences.