LLMs are no longer restricted to a question-answer format. They now form the basis of intelligent applications that help with real-world problems in real time. In that context, Kimi K2 arrives as a multi-purpose LLM that is immensely popular among AI users worldwide. While everyone knows about its powerful agentic capabilities, not many are sure how it performs through the API. Here, we test Kimi K2 in a real-world production scenario, through an API-based workflow, to evaluate whether it lives up to its promise of a great LLM.


What’s Kimi K2?

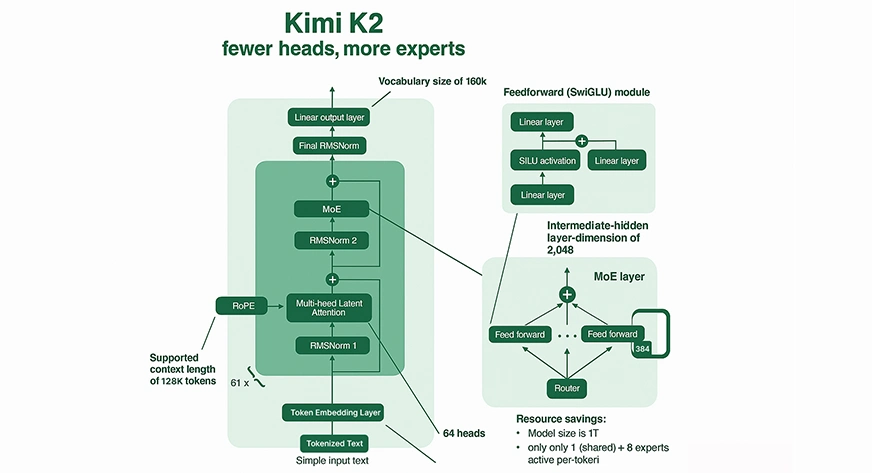

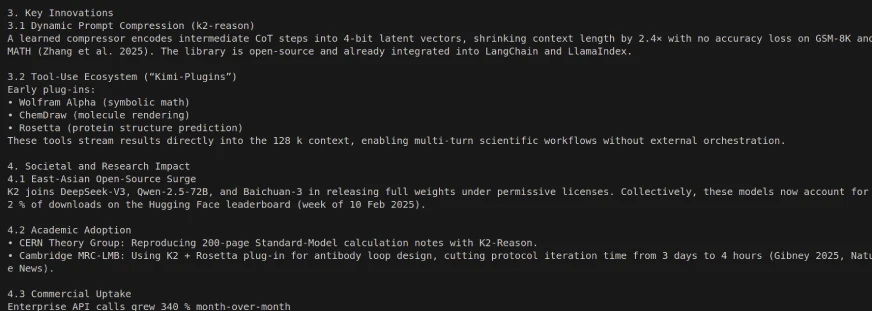

Kimi K2 is a state-of-the-art open-source large language model built by Moonshot AI. It employs a Mixture-of-Experts (MoE) architecture and has 1 trillion total parameters (32 billion activated per token). Kimi K2 specifically targets forward-looking use cases for advanced agentic intelligence. It can not only generate and understand natural language but also autonomously solve complex problems, use tools, and complete multi-step tasks across a broad range of domains. We covered its benchmarks, performance, and access points in detail in an earlier article: Kimi K2, the best open-source agentic model.
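As a quick sanity check on those figures, the share of weights that actually runs for each token follows directly from the two parameter counts quoted above. Both counts are rounded, so treat the result as approximate:

```python
TOTAL_PARAMS = 1_000_000_000_000  # 1 trillion total parameters (rounded, as quoted above)
ACTIVE_PARAMS = 32_000_000_000    # 32 billion parameters activated per token

# Fraction of the network that participates in each token's forward pass
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS
print(f"Active per token: {active_fraction:.1%}")  # Active per token: 3.2%
```

In other words, per-token compute scales with roughly 32B parameters, while all 1T parameters still have to be stored somewhere.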

Model Variants

There are two variants of Kimi K2:

- Kimi-K2-Base: The bare-bones foundation model, a great starting point for researchers and developers who want full control over fine-tuning and custom solutions.

- Kimi-K2-Instruct: The post-trained model, best suited as a drop-in, general-purpose chat and agentic model. It is a reflex-grade model with no deep thinking.

Mixture-of-Experts (MoE) Mechanism

Fractional Computation: Kimi K2 does not activate all of its parameters for each input. Instead, it routes every token to 8 of its 384 specialized "experts" (plus one shared expert), which significantly reduces compute per inference compared with dense models of comparable size.

Expert Specialization: Each expert within the MoE focuses on different knowledge domains or reasoning patterns, leading to rich and efficient outputs.

Sparse Routing: Kimi K2 uses learned gating to select the relevant experts for each token, which makes both huge capacity and computationally feasible inference possible.
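The routing described above can be sketched in a few lines of NumPy. This is a toy illustration, not Moonshot AI's implementation: the softmax over the selected experts is one common gating choice (Kimi K2's actual router may score experts differently), and `moe_forward`, its arguments, and the tiny sizes used for testing are hypothetical.

```python
import numpy as np


def moe_forward(x, gate_w, experts, shared_expert, top_k=8):
    """Sparse MoE layer: each token uses its top_k routed experts plus a shared expert.

    x: (tokens, d_model); gate_w: (d_model, n_experts);
    experts: list of callables, each mapping (n, d_model) -> (n, d_model).
    """
    logits = x @ gate_w                                    # router score per (token, expert)
    top_idx = np.argsort(logits, axis=-1)[:, -top_k:]      # top_k expert ids per token
    top_logits = np.take_along_axis(logits, top_idx, axis=-1)
    # Softmax over the selected experts only, so each token's weights sum to 1
    weights = np.exp(top_logits - top_logits.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)

    out = np.array(shared_expert(x), dtype=float)          # shared expert runs on every token
    for e in np.unique(top_idx):
        token_ids, slot = np.nonzero(top_idx == e)         # tokens that selected expert e
        # Only the selected tokens pass through expert e: this is the compute saving
        out[token_ids] += weights[token_ids, slot][:, None] * experts[e](x[token_ids])
    return out
```

With Kimi K2's published figures you would pass 384 expert callables and `top_k=8`; each token then runs through 9 expert networks (8 routed plus the shared one) instead of all 385.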

Attention and Context

Huge Context Window: Kimi K2 has a context length of up to 128,000 tokens. It can process extremely long documents or codebases in a single pass, with a context window far larger than that of most legacy LLMs.
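Because the prompt and the generated answer share the same window, it helps to check a long document against the 128,000-token budget before sending it. The sketch below assumes roughly four characters per token, a crude figure for English text; exact counts require Kimi K2's own tokenizer. The helper names are hypothetical.

```python
import math

CONTEXT_LENGTH = 128_000  # Kimi K2's maximum context window, in tokens


def rough_token_count(text: str, chars_per_token: float = 4.0) -> int:
    """Crude estimate assuming ~4 characters per token; use the real tokenizer for exact counts."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(text: str, max_output_tokens: int = 1_000,
                    context_length: int = CONTEXT_LENGTH) -> bool:
    """True if the estimated prompt plus the reserved output budget fits in the window."""
    return rough_token_count(text) + max_output_tokens <= context_length
```

Reserving room for `max_output_tokens` matters because a prompt that fills the whole window leaves no space for the reply.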

Complex Attention: The model has 64 attention heads per layer, enabling it to track and leverage complicated relationships and dependencies across token sequences of up to 128,000 tokens.
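To make "attention heads" concrete, here is generic causal multi-head self-attention in NumPy. It shows how the hidden dimension is split across heads that each attend independently. Kimi K2's production attention layer differs in detail, so read this as a textbook sketch; the function name and arguments are hypothetical.

```python
import numpy as np


def multi_head_attention(x, w_q, w_k, w_v, w_o, n_heads):
    """Plain causal multi-head self-attention.

    x: (seq, d_model); every weight matrix: (d_model, d_model); d_model % n_heads == 0.
    """
    seq, d_model = x.shape
    d_head = d_model // n_heads

    def split(t):  # (seq, d_model) -> (n_heads, seq, d_head)
        return t.reshape(seq, n_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ w_q), split(x @ w_k), split(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)   # (n_heads, seq, seq)
    mask = np.triu(np.ones((seq, seq), dtype=bool), k=1)  # hide future tokens
    scores = np.where(mask, -np.inf, scores)
    scores -= scores.max(axis=-1, keepdims=True)          # numerically stable softmax
    probs = np.exp(scores)
    probs /= probs.sum(axis=-1, keepdims=True)
    heads = probs @ v                                     # (n_heads, seq, d_head)
    # Concatenate the heads back into d_model and apply the output projection
    return heads.transpose(1, 0, 2).reshape(seq, d_model) @ w_o
```

Passing `n_heads=64` mirrors the head count quoted above, provided `d_model` is divisible by 64.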

Training Innovations

MuonClip Optimizer: To allow stable training at this scale, Moonshot AI developed a new optimizer called MuonClip. It bounds the size of the attention logits by rescaling the query and key weight matrices after each update, avoiding the extreme instability (i.e., exploding values) common in large-scale models.
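The clipping idea can be sketched for a single attention head: measure the largest query-key logit on a batch and, if it exceeds a threshold `tau`, scale both projection matrices by sqrt(tau / max_logit) so the largest logit lands exactly at `tau`. This is a simplified illustration under stated assumptions: it omits the Muon update itself, works on one head with plain matrices, and uses `tau=100.0` only as an illustrative default. The function name is hypothetical.

```python
import numpy as np


def qk_clip(w_q, w_k, x, tau=100.0):
    """Simplified QK-Clip step for one attention head.

    If the largest query-key logit on batch x exceeds tau, shrink both
    projections by sqrt(tau / max_logit); every logit then scales by tau / max_logit.
    """
    q, k = x @ w_q, x @ w_k
    max_logit = np.max(q @ k.T)        # largest pre-softmax attention logit
    if max_logit > tau:
        gamma = tau / max_logit
        w_q = w_q * np.sqrt(gamma)     # split the correction evenly between W_q and W_k
        w_k = w_k * np.sqrt(gamma)
    return w_q, w_k
```

Applying the square root to each matrix keeps queries and keys on a similar scale instead of shrinking only one side.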

Data Scale: Kimi K2 was pre-trained on 15.5 trillion tokens, which builds the model's knowledge and its ability to generalize.
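To get a feel for what 15.5 trillion tokens means in compute, a common rule of thumb from the scaling-law literature (not a figure published by Moonshot AI) puts training cost at about 6 FLOPs per active parameter per token. Applied to the numbers above, it gives a rough order of magnitude only; it ignores attention cost and any later training stages:

```python
def training_flops(active_params: float, tokens: float) -> float:
    """Rule-of-thumb training compute: ~6 FLOPs per active parameter per token."""
    return 6.0 * active_params * tokens


# 32B active parameters, 15.5T pre-training tokens
print(f"{training_flops(32e9, 15.5e12):.3e} FLOPs")
```

Using active rather than total parameters is what makes this estimate appropriate for an MoE model.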

How to Access Kimi K2

As mentioned, Kimi K2 can be accessed in two hosted ways:

Web/App Interface: Kimi can be used directly from the official web chat.

API: Kimi K2 can be integrated into your code using either the Together AI API or Moonshot's API, both of which support agentic workflows and tool use.

Steps to Obtain an API Key

To run Kimi K2 through an API, you will need an API key. Here is how to get one:

Moonshot API:

- Sign up or log in to the Moonshot AI Developer Console.

- Go to the "API Keys" section.

- Click "Create API Key," provide a name and project (or leave the defaults), then save your key for later use.

Together AI API:

- Register or log in at Together AI.

- Locate the "API Keys" area in your dashboard.

- Generate a new key and record it for later use.
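Both providers expose OpenAI-compatible chat endpoints, so a single client can target either one once you have a key. The base URLs, model IDs, and environment-variable names below are assumptions for illustration; confirm them in each provider's documentation before use.

```python
import os

# Assumed endpoints and model IDs; verify them against each provider's documentation.
PROVIDERS = {
    "moonshot": {
        "base_url": "https://api.moonshot.ai/v1",
        "model": "kimi-k2-0711-preview",
        "key_env": "MOONSHOT_API_KEY",
    },
    "together": {
        "base_url": "https://api.together.xyz/v1",
        "model": "moonshotai/Kimi-K2-Instruct",
        "key_env": "TOGETHER_API_KEY",
    },
}


def build_request(provider: str, prompt: str, temperature: float = 0.3) -> dict:
    """Assemble chat-completion arguments for one provider (no network access)."""
    cfg = PROVIDERS[provider]
    return {
        "model": cfg["model"],
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


def ask(provider: str, prompt: str) -> str:
    """Send one prompt through the OpenAI-compatible client and return the reply text."""
    from openai import OpenAI  # imported here so build_request works without the package

    cfg = PROVIDERS[provider]
    client = OpenAI(api_key=os.environ[cfg["key_env"]], base_url=cfg["base_url"])
    response = client.chat.completions.create(**build_request(provider, prompt))
    return response.choices[0].message.content
```

For example, after exporting `MOONSHOT_API_KEY`, call `ask("moonshot", "Explain MoE routing in one sentence.")`. Keeping `build_request` separate from the network call makes the payload easy to inspect or test offline.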

Local Installation

To run the model locally, download the weights from Hugging Face or GitHub and serve them with vLLM, TensorRT-LLM, or SGLang by following these steps.

Step 1: Create a Python Environment

Using Conda:

```bash
conda create -n kimi-k2 python=3.10 -y
conda activate kimi-k2
```

Using venv:

```bash
python3 -m venv kimi-k2
source kimi-k2/bin/activate
```

Step 2: Install Required Libraries

For all methods:

```bash
pip install torch transformers huggingface_hub
```

For vLLM:

```bash
pip install vllm
```

For TensorRT-LLM:

Follow the official TensorRT-LLM installation documentation (requires PyTorch >= 2.2 and CUDA 12.x; not pip-installable on all systems).

For SGLang:

```bash
pip install sglang
```

Step 3: Download Model Weights

From Hugging Face:

With git-lfs:

```bash
git lfs install
git clone https://huggingface.co/moonshotai/Kimi-K2-Instruct
```

Or using huggingface_hub:

```python
from huggingface_hub import snapshot_download

# Download every file in the repository into ./Kimi-K2-Instruct
snapshot_download(
    repo_id="moonshotai/Kimi-K2-Instruct",
    local_dir="./Kimi-K2-Instruct",
)
```

Step 4: Verify Your Environment

To make sure CUDA, PyTorch, and dependencies are ready:

```python
import torch
import transformers

print(f"CUDA available: {torch.cuda.is_available()}")
print(f"CUDA devices: {torch.cuda.device_count()}")
print(f"CUDA version: {torch.version.cuda}")
print(f"Transformers version: {transformers.__version__}")
```

Step 5: Run Kimi K2 With Your Preferred Backend

With vLLM:

```bash
python -m vllm.entrypoints.openai.api_server \
  --model ./Kimi-K2-Instruct \
  --trust-remote-code \
  --swap-space 512 \
  --tensor-parallel-size 2 \
  --dtype float16
```

Adjust --tensor-parallel-size and --dtype to match your hardware; two GPUs are only a placeholder and provide far too little memory for a 1-trillion-parameter model. Swap in quantized weights if you are using INT8 or 4-bit variants.
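Before choosing `--tensor-parallel-size` and `--dtype`, a back-of-the-envelope estimate of weight memory helps. The sketch below counts weights only (no KV cache, activations, or framework overhead) and treats 1 GB as 10^9 bytes; the function name is hypothetical.

```python
def weight_memory_gb(params: float, bytes_per_param: float, tp_size: int = 1) -> float:
    """Approximate weight memory per GPU in GB, assuming weights split evenly across tp_size GPUs."""
    return params * bytes_per_param / tp_size / 1e9


# 1 trillion parameters at common precisions, split across the 2 GPUs used above
for label, nbytes in [("fp16/bf16", 2), ("int8/fp8", 1), ("4-bit", 0.5)]:
    print(f"{label}: {weight_memory_gb(1e12, nbytes, tp_size=2):,.0f} GB per GPU")
```

Even at 4 bits, this works out to about 250 GB of weights per GPU with two GPUs, which is why practical deployments spread the model across many more cards.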

Hands-on with Kimi K2

In this exercise, we look at how large language models like Kimi K2 work in practice through real API calls. The objective is to test how effective it is on the go and whether it delivers strong performance.

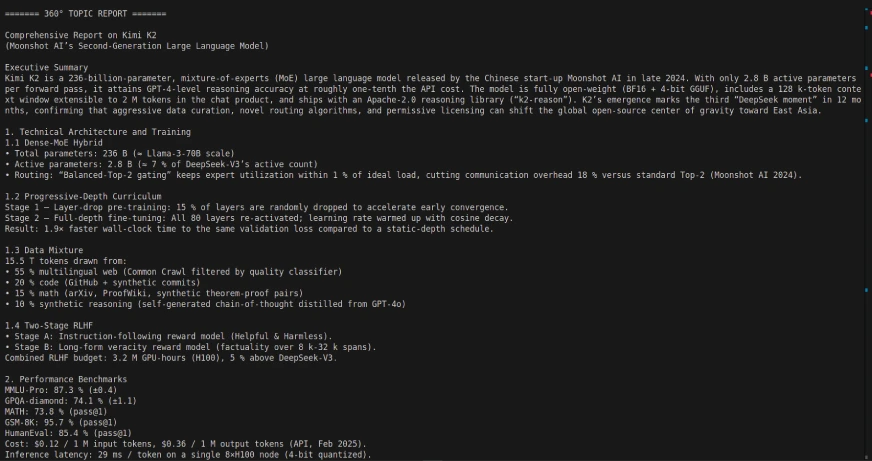

Task 1: Creating a 360° Report Generator using LangGraph and Kimi K2

In this task, we create a 360-degree report generator using the LangGraph framework and the Kimi K2 LLM. The application shows how agentic workflows can be orchestrated to retrieve, process, and summarize information automatically through API interactions.

Code Link: https://github.com/sjsoumil/Tutorials/blob/main/kimi_k2_hands_on.py

Code Output:

Using Kimi K2 with LangGraph enables powerful, autonomous, multi-step agentic workflows, since Kimi K2 is designed to break down multi-step tasks such as database querying, reporting, and document processing on its own, using tool and API integrations. Just temper your expectations for some of the response times.

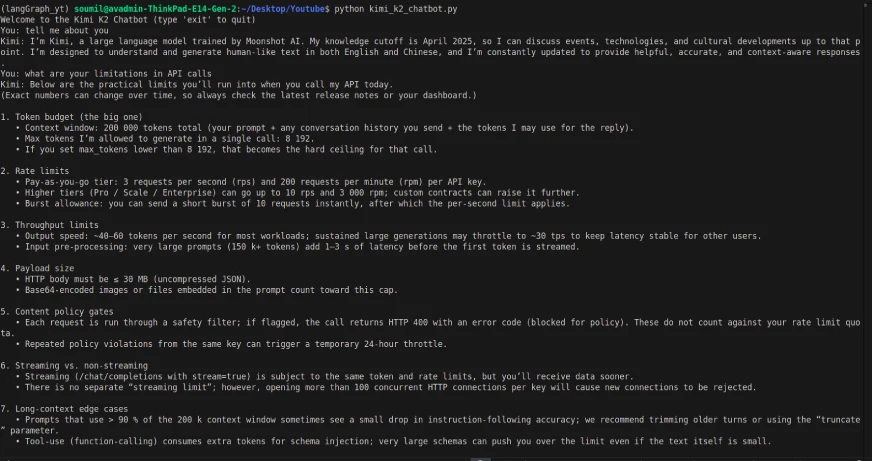
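The linked script is not reproduced here, but the plan, research, and summarize flow that such a report generator follows can be sketched without any framework. In LangGraph, each of these functions would become a node in a graph; here they are simply chained. The function names, prompts, and the `llm` callable are hypothetical stand-ins, not the author's code.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for an LLM call: takes a prompt, returns text.
LLM = Callable[[str], str]


def plan(llm: LLM, topic: str) -> List[str]:
    """Ask the model for sub-questions, one per line, and strip list markers."""
    raw = llm(f"List the key sub-questions for a 360-degree report on: {topic}")
    return [line.strip("- ").strip() for line in raw.splitlines() if line.strip()]


def research(llm: LLM, questions: List[str]) -> Dict[str, str]:
    """Answer each sub-question independently."""
    return {q: llm(f"Answer concisely: {q}") for q in questions}


def summarize(llm: LLM, topic: str, notes: Dict[str, str]) -> str:
    """Merge the partial answers into one report."""
    joined = "\n".join(f"{q}: {a}" for q, a in notes.items())
    return llm(f"Write a 360-degree report on {topic} using these notes:\n{joined}")


def run_report(llm: LLM, topic: str) -> str:
    """Plan -> research -> summarize: the linear shape a LangGraph graph would encode."""
    questions = plan(llm, topic)
    notes = research(llm, questions)
    return summarize(llm, topic, notes)
```

To drive it with Kimi K2, pass an adapter such as `lambda p: kimi_k2_chat([{"role": "user", "content": p}])`, using the chatbot function from Task 2.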

Task 2: Creating a simple chatbot using Kimi K2

Code:

```python
import os

from dotenv import load_dotenv
from openai import OpenAI

# Read OPENROUTER_API_KEY from a local .env file
load_dotenv()

OPENROUTER_API_KEY = os.getenv("OPENROUTER_API_KEY")
if not OPENROUTER_API_KEY:
    raise EnvironmentError("Please set your OPENROUTER_API_KEY in your .env file.")

# OpenRouter exposes an OpenAI-compatible endpoint, so the official client works as-is
client = OpenAI(
    api_key=OPENROUTER_API_KEY,
    base_url="https://openrouter.ai/api/v1",
)


def kimi_k2_chat(messages, model="moonshotai/kimi-k2:free", temperature=0.3, max_tokens=1000):
    """Send the full message history to Kimi K2 and return the reply text."""
    response = client.chat.completions.create(
        model=model,
        messages=messages,
        temperature=temperature,
        max_tokens=max_tokens,
    )
    return response.choices[0].message.content


# Conversation loop
if __name__ == "__main__":
    history = []
    print("Welcome to the Kimi K2 Chatbot (type 'exit' to quit)")
    while True:
        user_input = input("You: ")
        if user_input.strip().lower() == "exit":
            break
        history.append({"role": "user", "content": user_input})
        reply = kimi_k2_chat(history)
        print("Kimi:", reply)
        # Keep the assistant turn so the next request carries the whole conversation
        history.append({"role": "assistant", "content": reply})
```

Output:

Although the Kimi web interface accepts images and files, the API calls only supported text-based input and output (and text input had a delay). So the interface and the API behave a little differently.
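To put numbers on that delay, each API call can be wrapped in a timer. Here is a minimal sketch using Python's monotonic `time.perf_counter` clock; `timed_call` is a hypothetical helper name.

```python
import time
from typing import Any, Callable, Tuple


def timed_call(fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Tuple[Any, float]:
    """Run fn(*args, **kwargs) and return (result, elapsed wall-clock seconds)."""
    start = time.perf_counter()  # monotonic, high-resolution clock
    result = fn(*args, **kwargs)
    elapsed = time.perf_counter() - start
    return result, elapsed
```

For example, use `reply, secs = timed_call(kimi_k2_chat, history)` inside the chat loop and print `secs` next to the reply to log per-turn latency.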

My review after the hands-on

Kimi K2 is an open-source large language model, which means it is free to use, a big plus for developers and researchers. For this exercise, I accessed Kimi K2 with an OpenRouter API key. While I had previously used the model through the easy-to-use web interface, I preferred the API for its extra flexibility and to build a custom agentic workflow in LangGraph.

While testing the chatbot, I experienced noticeably delayed response times on the API calls, and the model cannot yet support multimodal capabilities (e.g., image or file processing) through the API the way it can in the interface. Regardless, the model worked well with LangGraph, which allowed me to design a complete pipeline for generating dynamic 360° reports.

While it was not earth-shattering, it illustrates how quickly open-source models are catching up to proprietary leaders such as OpenAI and Gemini, and models like Kimi K2 will keep closing the gap. It delivers impressive performance and flexibility for a free model, and it shows that the bar keeps rising for open-source LLMs, including on multimodal capabilities.

Conclusion

Kimi K2 is an excellent option in the open-source LLM landscape, especially for agentic workflows and ease of integration. While we ran into a few limitations, such as slower response times via the API and a lack of multimodal support, it offers a great starting point for building intelligent real-world applications. Plus, not having to pay for these capabilities is a big perk for developers, researchers, and start-ups. As the ecosystem evolves and matures, expect models like Kimi K2 to gain advanced capabilities quickly as they close the gap with proprietary models. Overall, if you are considering open-source LLMs for production use, Kimi K2 is an option well worth your time and experimentation.

Frequently asked questions

A. Kimi K2 is Moonshot AI's next-generation Mixture-of-Experts (MoE) large language model with 1 trillion total parameters (32 billion activated parameters per token). It is designed for agentic tasks, advanced reasoning, code generation, and tool use.

– Advanced code generation and debugging

– Automated agentic task execution

– Reasoning through and solving complex, multi-step problems

– Data analysis and visualization

– Planning, research assistance, and content creation

– Architecture: Mixture-of-Experts Transformer

– Total Parameters: 1T (trillion)

– Activated Parameters: 32B (billion) per token

– Context Length: Up to 128,000 tokens

– Specialization: Tool use, agentic workflows, coding, long-sequence processing

– API Access: Available from Moonshot AI's API console (and also supported by Together AI and OpenRouter)

– Local Deployment: Possible, but typically requires powerful hardware (effective use requires multiple high-end GPUs)

– Model Variants: Released as "Kimi-K2-Base" (for customization and fine-tuning) and "Kimi-K2-Instruct" (for general-purpose chat and agentic interactions)

A. Kimi K2 often matches or exceeds leading open-source models (for example, DeepSeek V3 and Qwen 2.5). It is competitive with proprietary models on benchmarks for coding, reasoning, and agentic tasks. It is also remarkably efficient and low-cost compared with other models of similar or smaller scale!
