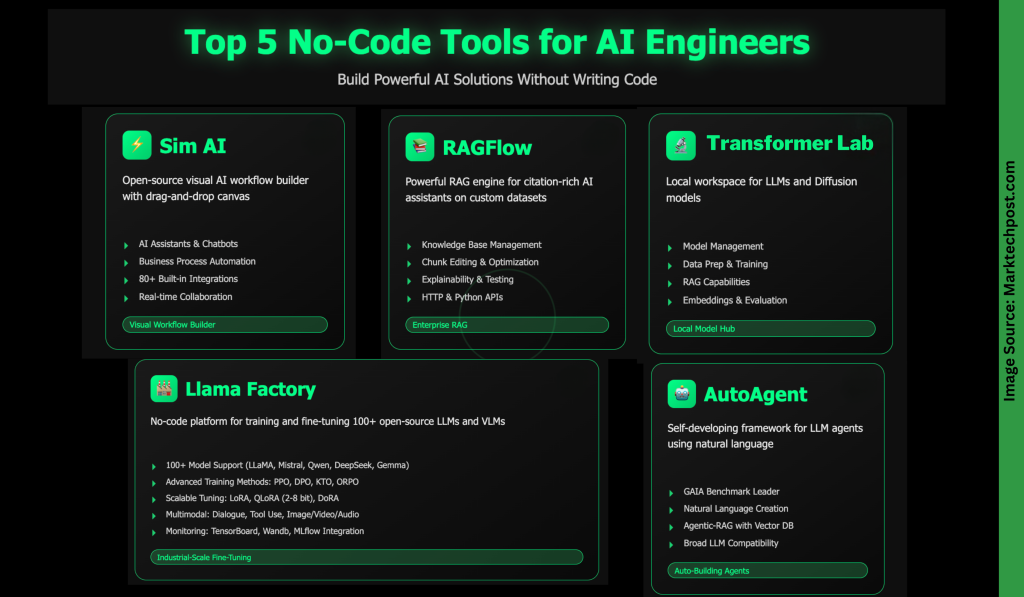

In today's AI-driven world, no-code tools are transforming how people create and deploy intelligent applications. They empower anyone, regardless of coding expertise, to build solutions quickly and efficiently. From developing enterprise-grade RAG systems to designing multi-agent workflows or fine-tuning hundreds of LLMs, these platforms dramatically reduce development time and effort. In this article, we'll explore five powerful no-code tools that make building AI solutions faster and more accessible than ever.

Sim AI is an open-source platform for visually building and deploying AI agent workflows, with no coding required. Using its drag-and-drop canvas, you can connect AI models, APIs, databases, and business tools to create:

- AI Assistants & Chatbots: Agents that search the web, access calendars, send emails, and interact with business apps.

- Business Process Automation: Streamline tasks such as data entry, report creation, customer support, and content generation.

- Data Processing & Analysis: Extract insights, analyze datasets, create reports, and sync data across systems.

- API Integration Workflows: Orchestrate complex logic, unify services, and manage event-driven automation.

Key features:

- Visual canvas with "smart blocks" (AI, API, logic, output).

- Multiple triggers (chat, REST API, webhooks, schedulers, Slack/GitHub events).

- Real-time team collaboration with permissions control.

- 80+ built-in integrations (AI models, communication tools, productivity apps, dev platforms, search services, and databases).

- MCP support for custom integrations.

Deployment options:

- Cloud-hosted (managed infrastructure with scaling & monitoring).

- Self-hosted (via Docker, with local model support for data privacy).
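To picture what a canvas of connected blocks does at run time, here is a minimal sketch in plain Python. It is not Sim AI's API or internal design; the block functions, the `run_workflow` helper, and the order table are all hypothetical, chosen only to show a trigger payload flowing through an API block, a logic block, and an output block.

```python
from typing import Any, Callable, Dict, List

# Hypothetical block type: takes the workflow state and returns an updated copy.
Block = Callable[[Dict[str, Any]], Dict[str, Any]]


def api_block(state: Dict[str, Any]) -> Dict[str, Any]:
    # Stand-in for an external API call: looks up an order in a hard-coded table.
    orders = {"A17": "shipped", "B42": "processing"}
    return {**state, "status": orders.get(state["order_id"], "unknown")}


def logic_block(state: Dict[str, Any]) -> Dict[str, Any]:
    # Branching logic: flag the request for a human when the lookup failed.
    return {**state, "escalate": state["status"] == "unknown"}


def output_block(state: Dict[str, Any]) -> Dict[str, Any]:
    # Output block: format the reply that would go back to the trigger source.
    if state["escalate"]:
        reply = f"Order {state['order_id']} not found; routing to support."
    else:
        reply = f"Order {state['order_id']} is {state['status']}."
    return {**state, "reply": reply}


def run_workflow(blocks: List[Block], trigger_payload: Dict[str, Any]) -> Dict[str, Any]:
    # Run the blocks in order, passing each block's output to the next one.
    state = dict(trigger_payload)
    for block in blocks:
        state = block(state)
    return state


workflow = [api_block, logic_block, output_block]
result = run_workflow(workflow, {"order_id": "A17"})  # payload as a webhook might send it
print(result["reply"])
```

A visual builder replaces the hand-written list with lines drawn between blocks, but the idea of a payload passing from one step to the next is the same.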

RAGFlow is a powerful retrieval-augmented generation (RAG) engine that helps you build grounded, citation-rich AI assistants on top of your own datasets. It runs on x86 CPUs or NVIDIA GPUs (with optional ARM builds) and provides full or slim Docker images for quick deployment. After spinning up a local server, you can connect an LLM, via API or local runtimes like Ollama, to handle chat, embedding, or image-to-text tasks. RAGFlow supports most popular language models and allows you to set defaults or customize models for each assistant.

Key capabilities include:

- Knowledge base management: Upload and parse files (PDF, Word, CSV, images, slides, and more) into datasets, select an embedding model, and organize content for efficient retrieval.

- Chunk editing & optimization: Inspect parsed chunks, add keywords, or manually adjust content to improve search accuracy.

- AI chat assistants: Create chats linked to one or multiple knowledge bases, configure fallback responses, and fine-tune prompts or model settings.

- Explainability & testing: Use built-in tools to validate retrieval quality, monitor performance, and view real-time citations.

- Integration & extensibility: Leverage HTTP and Python APIs for app integration, with an optional sandbox for safe code execution inside chats.
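The retrieve-then-cite idea behind a grounded assistant can be sketched in a few lines. This is a toy illustration, not RAGFlow's implementation or API: it uses word-count vectors and cosine similarity in place of a real embedding model, and the chunks, file names, and `retrieve` helper are made up for the example.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    # Lowercase and split into alphanumeric tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words vectors.
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Hypothetical parsed chunks, each tagged with the document it came from.
chunks = [
    {"source": "handbook.pdf", "text": "Employees accrue 20 days of paid vacation per year."},
    {"source": "handbook.pdf", "text": "Expense reports are due by the fifth of each month."},
    {"source": "it-policy.docx", "text": "Laptops must use full disk encryption."},
]


def retrieve(question: str, chunks: list, top_k: int = 2) -> list:
    # Score every chunk against the question and keep the best non-zero matches.
    q = Counter(tokenize(question))
    scored = [(cosine(q, Counter(tokenize(c["text"]))), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(score, c) for score, c in scored[:top_k] if score > 0]


hits = retrieve("How many vacation days do employees get?", chunks)
for score, chunk in hits:
    # The source tag is what lets the assistant cite where an answer came from.
    print(f"[{chunk['source']}] {chunk['text']} (score={score:.2f})")
```

Only the vacation chunk shares words with the question, so it is the only hit returned. Editing a chunk's text or adding keywords to it changes these scores, which is why chunk-level tuning affects search accuracy.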

Transformer Lab is a free, open-source workspace for Large Language Models (LLMs) and Diffusion models, designed to run on your local machine, whether that's a GPU, TPU, or Apple M-series Mac, or in the cloud. It enables you to download, chat with, and evaluate LLMs, generate images using Diffusion models, and compute embeddings, all from one flexible environment.

Key capabilities include:

- Model management: Download and interact with LLMs, or generate images using state-of-the-art Diffusion models.

- Data preparation & training: Create datasets, fine-tune, or train models, including support for RLHF and preference tuning.

- Retrieval-augmented generation (RAG): Use your own documents to power intelligent, grounded conversations.

- Embeddings & evaluation: Calculate embeddings and assess model performance across different inference engines.

- Extensibility & community: Build plugins, contribute to the core application, and collaborate via the active Discord community.

LLaMA-Factory is a powerful no-code platform for training and fine-tuning open-source Large Language Models (LLMs) and Vision-Language Models (VLMs). It supports over 100 models, multimodal fine-tuning, advanced optimization algorithms, and scalable resource configurations. Designed for researchers and practitioners, it offers extensive tools for pre-training, supervised fine-tuning, reward modeling, and preference-optimization methods like PPO and DPO, along with easy experiment tracking and faster inference.

Key highlights include:

- Broad model support: Works with LLaMA, Mistral, Qwen, DeepSeek, Gemma, ChatGLM, Phi, Yi, Mixtral-MoE, and many more.

- Training methods: Supports continuous pre-training, multimodal SFT, reward modeling, PPO, DPO, KTO, ORPO, and more.

- Scalable tuning options: Full-tuning, freeze-tuning, LoRA, QLoRA (2–8 bit), OFT, DoRA, and other resource-efficient methods.

- Advanced algorithms & optimizations: Includes GaLore, BAdam, APOLLO, Muon, FlashAttention-2, RoPE scaling, NEFTune, rsLoRA, and others.

- Tasks & modalities: Handles dialogue, tool use, image/video/audio understanding, visual grounding, and more.

- Monitoring & inference: Integrates with LlamaBoard, TensorBoard, Wandb, MLflow, and SwanLab, plus offers fast inference via OpenAI-style APIs, Gradio UI, or CLI with vLLM/SGLang workers.

- Flexible infrastructure: Compatible with PyTorch, Hugging Face Transformers, DeepSpeed, and BitsAndBytes, and supports both CPU and GPU setups with memory-efficient quantization.
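To see why LoRA counts as a resource-efficient option next to full tuning, a quick back-of-the-envelope calculation helps. LoRA freezes the original weight matrix and trains two small low-rank matrices instead. The sketch below is plain arithmetic and does not use LLaMA-Factory; the layer size and rank are assumed values picked for illustration.

```python
def full_params(d_in: int, d_out: int) -> int:
    # Full fine-tuning updates every entry of the d_out x d_in weight matrix.
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    # LoRA trains two small matrices: A (rank x d_in) and B (d_out x rank).
    return rank * (d_in + d_out)


d_in = d_out = 4096  # assumed projection size, for illustration only
rank = 8             # assumed LoRA rank

full = full_params(d_in, d_out)
lora = lora_params(d_in, d_out, rank)

print(f"full fine-tuning: {full:,} trainable weights")
print(f"LoRA (r={rank}):     {lora:,} trainable weights")
print(f"fraction trained: {lora / full:.4%}")
```

For this one layer, LoRA trains 65,536 weights instead of 16,777,216, which is 1/256 of the total. QLoRA goes further by also storing the frozen base weights in a quantized format.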

AutoAgent is a fully automated, self-developing framework that lets you create and deploy LLM-powered agents using natural language alone. Designed to simplify complex workflows, it enables you to build, customize, and run intelligent tools and assistants without writing a single line of code.

Key features include:

- High performance: Achieves top-tier results on the GAIA benchmark, rivaling advanced deep research agents.

- Easy agent & workflow creation: Build tools, agents, and workflows through simple natural language prompts, with no coding required.

- Agentic-RAG with native vector database: Comes with a self-managing vector database, offering superior retrieval compared to traditional solutions like LangChain.

- Broad LLM compatibility: Integrates seamlessly with leading model providers and runtimes such as OpenAI, Anthropic, DeepSeek, vLLM, Grok, Hugging Face, and more.

- Flexible interaction modes: Supports both function-calling and ReAct-style reasoning for versatile use cases.

- Lightweight & extensible: A dynamic personal AI assistant that's easy to customize and extend while remaining resource-efficient.
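The difference between the two interaction modes mentioned above comes down to how a tool request leaves the model. The sketch below is a toy comparison, not AutoAgent's API: the `TOOLS` registry, both runner functions, and the `Action: name[args]` text format are assumptions, and hand-written strings stand in for real model output.

```python
import json
import re

# Hypothetical tool registry shared by both modes.
TOOLS = {"add": lambda a, b: a + b}


def run_function_call(message: str):
    # Function-calling mode: the model emits a structured JSON tool call.
    call = json.loads(message)
    return TOOLS[call["name"]](**call["arguments"])


# Assumed text convention for ReAct-style actions: "Action: tool[arg, arg]".
ACTION = re.compile(r"Action: (\w+)\[(.*)\]")


def run_react_step(message: str):
    # ReAct mode: the model writes free text, and the action is parsed out of it.
    match = ACTION.search(message)
    if match is None:
        return None  # no action requested in this step
    name, raw_args = match.groups()
    args = [int(x) for x in raw_args.split(",")]
    return TOOLS[name](*args)


# Hand-written stand-ins for what a model might produce in each mode.
structured = '{"name": "add", "arguments": {"a": 2, "b": 3}}'
react_text = "Thought: I need the sum of 2 and 3.\nAction: add[2, 3]"

print(run_function_call(structured))
print(run_react_step(react_text))
```

Both calls reach the same tool and return the same result. Function calling depends on the model supporting structured output, while the ReAct style works with any model that can follow a text format, which is one reason a framework might support both.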