(Gorodenkoff/Shutterstock)

In the first two parts of this series, we looked at how AI’s growth is now constrained by power: not chips, not models, but the ability to feed electricity to massive compute clusters. We explored how companies are turning to fusion startups, nuclear deals, and even building their own energy supply just to stay ahead. AI can’t keep scaling unless the energy does too.

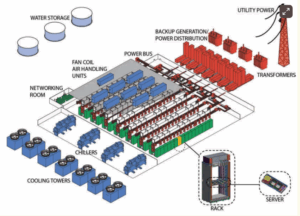

However, even if you get the power, that’s only the start. It still has to land somewhere. That somewhere is the data center. Most of the older data centers weren’t built for this, which is why their cooling systems aren’t cutting it. The layout, the grid connection, and the way heat moves through the building all have to keep up with the changing demands of the AI era. In Part 3, we look at what’s changing (or what should change) inside these sites: immersion tanks, smarter coordination with the grid, and the quiet redesign that’s now necessary to keep AI moving forward.

Why Traditional Data Centers Are Starting to Break

The surge in AI workloads is physically overwhelming the buildings meant to support it. Traditional data centers were designed for general-purpose computing, with power densities around 7 to 8 kilowatts per rack, maybe 15 on the high end. However, AI clusters running on next-gen chips like NVIDIA’s GB200 are blowing past those numbers. Racks now regularly draw 30 kilowatts or more, and some configurations are climbing toward 100 kilowatts.

According to McKinsey, the rapid increase in power density has created a mismatch between infrastructure capabilities and AI compute requirements. Grid connections that were once more than sufficient are now strained. Cooling systems, especially traditional air-based setups, can’t remove heat fast enough to keep up with the thermal load.

In many cases, the physical layout of the building itself becomes a problem, whether it’s the weight limits on the floor or the spacing between racks. Even basic power conversion and distribution systems within legacy data centers often aren’t rated for the voltages and current levels needed to support AI racks.

As Alex Stoewer, CEO of Greenlight Data Centers, told BigDATAwire, “Given this level of density is new, very few existing data centers had the power distribution or liquid cooling in place when these chips hit the market. New development or material retrofits were required for anyone who wanted to run these new chips.”

That’s where the infrastructure gap really opened up. Many legacy facilities simply couldn’t make the leap in time. Even when grid power is available, delays in interconnection approvals and permitting can slow retrofits to a crawl. Goldman Sachs now describes this transition as a shift toward “hyper-dense computational environments,” where even airflow and rack layout must be redesigned from the ground up.

The Cooling Problem Is Bigger Than You Think

If you walk into a data center built just a few years ago and try to run today’s AI workloads at full intensity, cooling is often the first thing that starts to give. It doesn’t fail all at once. It breaks down in small but compounding ways. Airflow gets tight. Power usage spikes. Reliability slips. And all of this adds up to a broken system.

Traditional air systems were never built for this kind of heat. Once rack power climbs above 30 or 40 kilowatts, the energy needed just to move and chill that air becomes its own problem. McKinsey puts the ceiling for air-cooled systems at around 50 kilowatts per rack. But today’s AI clusters are already going far beyond that. Some are hitting 80 or even 100 kilowatts. That level of heat disrupts the entire balance of the facility.
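To get a feel for why air runs out of headroom, here is a back-of-the-envelope sketch based on the standard heat-transport relation (heat removed = air density × specific heat × airflow × temperature rise). The air properties and the 12 K inlet-to-outlet temperature rise are illustrative assumptions, not figures from McKinsey or any operator, and real facilities will differ.

```python
# Rough sketch: airflow needed to carry a rack's heat away using air alone.
# Assumed values (not from the article): air density, specific heat, and a
# 12 K temperature rise between rack inlet and outlet.

AIR_DENSITY_KG_M3 = 1.2            # approximate, near sea level at about 20 C
AIR_SPECIFIC_HEAT_J_KG_K = 1005.0  # approximate specific heat of dry air
M3S_TO_CFM = 2118.88               # cubic metres/second -> cubic feet/minute


def required_airflow_m3s(rack_kw: float, delta_t_k: float = 12.0) -> float:
    """Volumetric airflow (m^3/s) needed to remove rack_kw of heat."""
    if rack_kw < 0 or delta_t_k <= 0:
        raise ValueError("rack_kw must be >= 0 and delta_t_k must be > 0")
    heat_watts = rack_kw * 1000.0
    return heat_watts / (AIR_DENSITY_KG_M3 * AIR_SPECIFIC_HEAT_J_KG_K * delta_t_k)


if __name__ == "__main__":
    # Rack densities mentioned in the article, from legacy to AI-era.
    for kw in (8, 30, 50, 100):
        flow = required_airflow_m3s(kw)
        print(f"{kw:>3} kW rack: {flow:5.2f} m^3/s (~{flow * M3S_TO_CFM:,.0f} CFM)")
```

Under these assumptions the required airflow scales linearly with rack power, so a 100-kilowatt rack needs 12.5 times the air of an 8-kilowatt rack pushed through roughly the same footprint. Fan energy typically rises faster than airflow, which is one reason moving the air becomes a problem of its own.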

This is why more operators are turning to immersion and liquid cooling. These systems pull heat directly from the source, using fluid instead of air. Some setups submerge servers entirely in nonconductive liquid. Others run coolant straight to the chips. Both offer better thermal performance and far greater efficiency at scale. In some cases, operators are even reusing that heat to power nearby buildings or industrial systems.

However, this shift isn’t as simple as one might think. Liquid cooling demands new hardware, plumbing, and ongoing support, so it requires space and careful planning. Still, as densities rise, staying with air isn’t just inefficient; it sets a hard limit on how far data centers can scale. As operators realize there’s no way to air-tune their way out of 100-kilowatt racks, other solutions must emerge, and they have.

The Case for Immersion Cooling

For a long time, immersion cooling felt like overengineering. It was interesting in theory, but not something most operators seriously considered. That’s changed. The closer facilities get to the thermal ceiling of air and basic liquid systems, the more immersion starts looking like the only real option left.

Instead of trying to force more air through hotter racks, immersion takes a different route. Servers go straight into nonconductive liquid, which pulls the heat off passively. Some systems even use fluids that boil and recondense inside a closed tank, carrying heat out with almost no moving parts. It’s quieter, denser, and often more stable under full load.

While the benefits are clear, deploying immersion still takes planning. The tanks require physical space, and the fluids come with upfront costs. Still, compared to redesigning an entire air-cooled facility or throttling workloads to stay within limits, immersion is starting to look like the more straightforward path. For many operators, it’s not an experiment anymore. It has to be the next step.

From Compute Hubs to Energy Nodes

Immersion cooling may solve the heat, but what about the timing? When can you actually pull that much power from the grid? That’s where the next bottleneck is forming, and it’s forcing a shift in how hyperscalers operate.

Google has already signed formal demand-response agreements with regional utilities like the TVA. These agreements go beyond reducing total consumption; they shape when and where that power gets used. AI workloads, especially training jobs, have built-in flexibility.

With the right software stack, these jobs can migrate across facilities or delay execution by hours. That delay becomes a tool. It’s a way to avoid grid congestion, absorb excess renewables, or maintain uptime when systems are tight.
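As a rough illustration of how a deferrable job might be placed, the sketch below picks the start hour that minimizes total grid stress while still finishing before a deadline. It is a toy model, not a description of Google’s or any utility’s actual system: the `TrainingJob` fields, the `grid_stress` scores, and `best_start_hour` are all hypothetical names invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingJob:
    """Hypothetical deferrable job; all fields are illustrative."""
    name: str
    duration_hours: int   # contiguous hours the job needs to run
    deadline_hour: int    # the job must finish by the start of this hour


def best_start_hour(job: TrainingJob, grid_stress: list[float]) -> int:
    """Return the start hour with the lowest total grid stress.

    grid_stress[h] is an assumed per-hour score (higher means a tighter
    grid). Ties resolve to the earliest qualifying start hour.
    """
    horizon = min(job.deadline_hour, len(grid_stress))
    latest_start = horizon - job.duration_hours
    if job.duration_hours <= 0 or latest_start < 0:
        raise ValueError("job cannot fit before its deadline")

    def window_cost(start: int) -> float:
        return sum(grid_stress[start:start + job.duration_hours])

    return min(range(latest_start + 1), key=window_cost)


if __name__ == "__main__":
    # Made-up hourly stress scores: tight early, relaxed mid-window.
    stress = [0.9, 0.8, 0.4, 0.2, 0.3, 0.7, 0.9, 0.6]
    job = TrainingJob(name="nightly-finetune", duration_hours=3, deadline_hour=8)
    print(f"Start {job.name} at hour {best_start_hour(job, stress)}")
```

A production scheduler would also have to weigh checkpointing cost, migration between sites, and contractual curtailment signals. The point here is only that a few hours of slack turns timing into a variable the operator can optimize.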

It’s not just Google. Microsoft has been testing energy-matching models across its data centers, including scheduling jobs to align with clean energy availability. The Rocky Mountain Institute projects that aligning data centers with grid dynamics could unlock gigawatts of otherwise stranded capacity.

Make no mistake: these aren’t sustainability gestures. They’re survival strategies. Grid queues are growing. Permitting timelines are slipping. Interconnect caps are becoming real limits on AI infrastructure. The facilities that thrive won’t just be well-cooled; they’ll be grid-smart, contract-flexible, and built to respond. So, from compute hubs to energy nodes, it’s no longer just about how much power you need. It’s about how well you can dance with the system delivering it.

Designing for AI Means Rethinking Everything

You can’t design around AI the way data centers used to handle general compute. The loads are heavier, the heat is higher, and the pace is relentless. You start with racks that pull more power than entire server rooms did a decade ago, and everything around them has to adapt.

New builds now work from the inside out. Engineers start with workload profiles, then shape airflow, cooling paths, cable runs, and even structural supports based on what those clusters will actually demand. In some cases, different types of jobs get their own electrical zones. That means separate cooling loops, shorter-throw cabling, and dedicated switchgear, with multiple systems all working under the same roof.

Power delivery is changing, too. In a conversation with BigDATAwire, David Beach, Market Segment Manager at Anderson Power, explained, “Equipment is taking advantage of much higher voltages and simultaneously increasing current to achieve the rack densities that are necessary. This is also necessitating the development of components and infrastructure to properly carry that power.”

This shift isn’t just about staying efficient. It’s about staying viable. Data centers that aren’t built with heat reuse, expansion room, and flexible electrical design won’t hold up long. The demands aren’t slowing down. The infrastructure has to meet them head-on.

What This Infrastructure Shift Means Going Forward

We know that hardware alone doesn’t move the needle anymore. The real advantage comes from bringing it online quickly, without getting bogged down by power, permits, and other obstacles. That’s where the cracks are beginning to open.

Site selection has become a high-stakes filter. A cheap piece of land isn’t enough. What you need is utility capacity, local support, and room to grow without months of negotiating. Funded projects are hitting walls, even ones with exceptional resources.

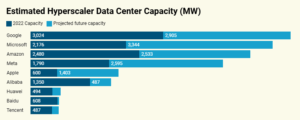

The ones that have been pulling ahead began early. Microsoft is already working on multi-campus builds that can handle gigawatt loads. Google is pairing facility growth with flexible energy contracts and nearby renewables. Amazon is redesigning its electrical systems and working with zoning authorities before permits even go live.

The pressure now is steady, and any delays will ripple through everything. If you lose a window, you lose training cycles. The rate at which models are developed doesn’t wait for the infrastructure to keep up. What was once back-end planning is now a front-line strategy, and data center builders are the ones defining what happens next. As we move forward, AI performance won’t just be measured in FLOPs or latency. It will come down to who could build when it really mattered.

Related Items

New GenAI System Built to Accelerate HPC Operations Data Analytics

Bloomberg Finds AI Data Centers Fueling America’s Energy Bill Crisis

OpenAI Aims to Dominate the AI Grid With 5 New Data Centers