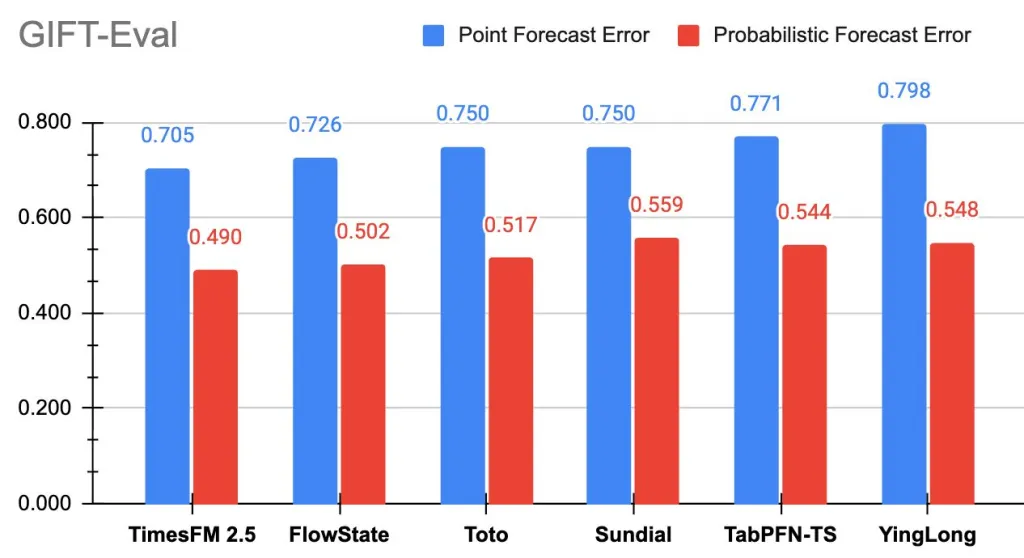

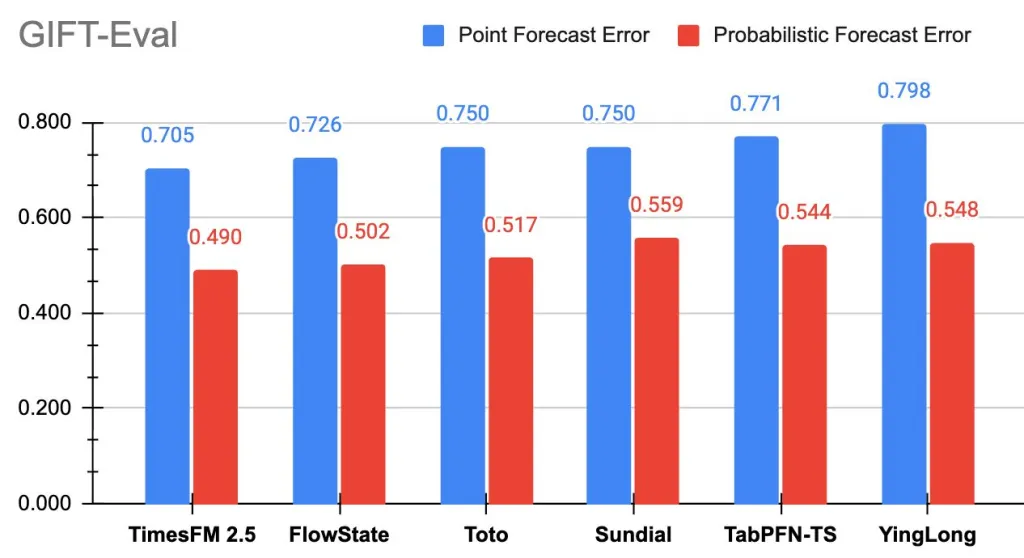

Google Research has released TimesFM-2.5, a 200M-parameter, decoder-only time-series foundation model with a 16K context length and native support for probabilistic forecasting. The new checkpoint is live on Hugging Face. On GIFT-Eval, TimesFM-2.5 now tops the leaderboard on both accuracy metrics (MASE and CRPS) among zero-shot foundation models.
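MASE (mean absolute scaled error) divides a forecast's mean absolute error by the in-sample mean absolute error of a seasonal-naive forecast, so a value below 1 means the model beats that simple baseline. The sketch below is a minimal pure-Python version for a single series, assuming a known season length `m`. The function name is hypothetical, and GIFT-Eval's own implementation may differ in details such as how scores are aggregated across series.

```python
def mase(history, actual, forecast, m=1):
    """Mean absolute scaled error for one series (illustrative sketch).

    history:  in-sample observations used to compute the naive scale
    actual:   true future values
    forecast: predicted future values (same length as actual)
    m:        season length for the seasonal-naive scale (1 = plain naive)
    """
    if len(actual) != len(forecast) or not actual:
        raise ValueError("actual and forecast must be non-empty and equal length")
    if len(history) <= m:
        raise ValueError("history must be longer than the season length m")
    # Scale: mean absolute error of the seasonal-naive rule y[t] ~ y[t - m]
    naive_errors = [abs(history[t] - history[t - m]) for t in range(m, len(history))]
    scale = sum(naive_errors) / len(naive_errors)
    if scale == 0:
        raise ValueError("history is constant at lag m; MASE is undefined")
    # Numerator: mean absolute error of the forecast over the horizon
    mae = sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)
    return mae / scale
```

For example, `mase([10, 12, 14, 16], [18, 20], [17, 21])` returns `0.5`: the naive one-step error on the history is 2, while the forecast is off by 1 on average, so it halves the baseline's error.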

What Is Time-Series Forecasting?

Time-series forecasting is the practice of analyzing sequential data points collected over time to identify patterns and predict future values. It underpins critical applications across industries, including forecasting product demand in retail, monitoring weather and precipitation trends, and optimizing large-scale systems such as supply chains and energy grids. By capturing temporal dependencies and seasonal variations, time-series forecasting enables data-driven decision-making in dynamic environments.
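To make "capturing seasonal variations" concrete, here is the simplest seasonal forecaster, seasonal naive, which predicts each future step by copying the value observed one season earlier. It is a common baseline (and the reference that MASE scales against), not a description of how TimesFM works; the function name and signature are hypothetical.

```python
def seasonal_naive_forecast(history, horizon, season_length):
    """Forecast by repeating the last observed seasonal cycle (baseline sketch)."""
    if season_length <= 0 or len(history) < season_length:
        raise ValueError("need at least one full season of history")
    last_cycle = history[-season_length:]
    # Step h (0-based) reuses the value from the same phase of the last cycle
    return [last_cycle[h % season_length] for h in range(horizon)]
```

With a three-step cycle, `seasonal_naive_forecast([5, 7, 9, 5, 7, 9], 4, 3)` returns `[5, 7, 9, 5]`. A learned model earns its keep by beating this kind of baseline when the pattern drifts, breaks, or mixes several seasonalities.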

What changed in TimesFM-2.5 vs. v2.0?

- Parameters: 200M (down from 500M in 2.0).

- Max context: 16,384 points (up from 2,048).

- Quantiles: Optional 30M-parameter quantile head for continuous quantile forecasts up to a 1K horizon.

- Inputs: No “frequency” indicator required; new inference flags (flip invariance, positivity inference, quantile-crossing fix).

- Roadmap: Upcoming Flax implementation for faster inference; covariate support slated to return; documentation being expanded.
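The release only names the three inference flags; it does not say how TimesFM implements them. The sketch below shows one plausible, generic interpretation of each as a post-processing step around an arbitrary forecaster. The function names, the toy `model` callable, and the exact rules (averaging f(x) with -f(-x), clipping at zero, sorting quantiles) are our assumptions, not TimesFM's code or API.

```python
def flip_invariant(model, history):
    """Average f(x) and -f(-x) so the point forecast respects sign flips."""
    direct = model(history)
    flipped = model([-v for v in history])
    return [(d - f) / 2 for d, f in zip(direct, flipped)]


def enforce_positivity(history, forecast):
    """If the history never goes negative, clip the forecast at zero."""
    if all(v >= 0 for v in history):
        return [max(0.0, v) for v in forecast]
    return list(forecast)


def fix_quantile_crossing(quantile_rows):
    """Sort each horizon step's quantiles so that, e.g., q10 <= q50 <= q90."""
    return [sorted(row) for row in quantile_rows]
```

As a usage example, a biased toy model `lambda xs: [xs[-1] + 1.0] * 2` gives `[3.0, 3.0]` under `flip_invariant` for history `[1.0, 2.0, 3.0]`: the constant +1 drift cancels out. `enforce_positivity([0.0, 2.0, 1.0], [0.5, -0.3])` returns `[0.5, 0.0]`, and `fix_quantile_crossing([[1.0, 0.8, 1.2]])` returns `[[0.8, 1.0, 1.2]]`.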

Why does a longer context matter?

With 16K historical points, a single forward pass can capture multi-seasonal structure, regime breaks, and low-frequency components without tiling or hierarchical stitching. In practice, that reduces pre-processing heuristics and improves stability in domains where the context is much longer than the horizon (context >> horizon), such as energy load and retail demand. The longer context is a core design change explicitly noted for 2.5.
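A quick back-of-the-envelope check shows what the jump from 2,048 to 16,384 points buys: the sketch counts how many complete seasonal cycles fit into one context window. The season lengths are illustrative assumptions (for instance, a 365-day year with no leap days), not values taken from TimesFM.

```python
# Illustrative season lengths, in observations, for common frequency/cycle pairs.
SEASONS = {
    "hourly data, daily cycle": 24,
    "hourly data, weekly cycle": 24 * 7,
    "hourly data, yearly cycle": 24 * 365,
    "daily data, yearly cycle": 365,
}


def cycles_in_context(season_length, context_length):
    """Number of complete seasonal cycles visible in one context window."""
    return context_length // season_length


for name, m in SEASONS.items():
    old = cycles_in_context(m, 2_048)
    new = cycles_in_context(m, 16_384)
    print(f"{name:28s} 2.0: {old:4d} cycles   2.5: {new:4d} cycles")
```

For hourly data with yearly seasonality (8,760 points per cycle), a 2,048-point window cannot hold even one full year, whereas 16,384 points cover one full year plus most of a second (16,384 / 8,760 ≈ 1.87).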

What’s the research context?

TimesFM’s core thesis, a single decoder-only foundation model for forecasting, was introduced in the ICML 2024 paper and on Google’s research blog. GIFT-Eval (from Salesforce) emerged to standardize evaluation across domains, frequencies, horizon lengths, and univariate/multivariate regimes, with a public leaderboard hosted on Hugging Face.
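A decoder-only forecaster can reach long horizons autoregressively: it predicts a chunk of future values, appends that chunk to its context as if it had been observed, and predicts again. The loop below is a generic sketch of that rollout pattern. `predict_chunk` is a hypothetical stand-in for a trained network (here a toy that extends the last slope), and the chunk length of 4 is made up for illustration; neither reflects TimesFM's actual patch sizes or API.

```python
from typing import Callable, List


def autoregressive_forecast(
    history: List[float],
    horizon: int,
    predict_chunk: Callable[[List[float]], List[float]],
    max_context: int,
) -> List[float]:
    """Roll a chunk predictor forward until `horizon` values are produced."""
    context = list(history)
    forecast: List[float] = []
    while len(forecast) < horizon:
        # Only the most recent `max_context` points are fed to the model.
        chunk = predict_chunk(context[-max_context:])
        if not chunk:
            raise ValueError("predict_chunk returned no values")
        forecast.extend(chunk)
        # Feed the predictions back in as if they were observations.
        context.extend(chunk)
    return forecast[:horizon]


def toy_chunk_predictor(context: List[float], chunk_len: int = 4) -> List[float]:
    """Hypothetical stand-in for a model: continue the last step's slope."""
    slope = context[-1] - context[-2] if len(context) > 1 else 0.0
    return [context[-1] + slope * (i + 1) for i in range(chunk_len)]
```

For example, `autoregressive_forecast([1.0, 2.0, 3.0], 6, toy_chunk_predictor, max_context=16_384)` needs two rollout steps and returns `[4.0, 5.0, 6.0, 7.0, 8.0, 9.0]`. The fewer rollout steps a model needs, the less its own prediction errors get fed back into the context.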

Key Takeaways

- Smaller, Faster Model: TimesFM-2.5 runs with 200M parameters (less than half the size of 2.0) while improving accuracy.

- Longer Context: Supports a 16K input length, enabling forecasts with deeper historical coverage.

- Benchmark Leader: Now ranks #1 among zero-shot foundation models on GIFT-Eval for both MASE (point accuracy) and CRPS (probabilistic accuracy).

- Production-Ready: Its efficient design and quantile forecasting support make it suitable for real-world deployments across industries.

- Broad Availability: The model is live on Hugging Face.
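CRPS scores an entire predictive distribution rather than a single point. It equals twice the pinball (quantile) loss integrated over all quantile levels, so when a model emits quantiles it can be approximated by averaging over a finite grid of levels. The sketch below uses levels 0.1 to 0.9 and no normalization; GIFT-Eval reports a normalized, quantile-based variant, and its exact grid and scaling are assumptions that are not reproduced here. Function names are hypothetical.

```python
def pinball_loss(y, q_pred, q):
    """Quantile (pinball) loss of predicting q_pred as the q-quantile of y."""
    diff = y - q_pred
    return max(q * diff, (q - 1) * diff)


def approx_crps(actual, quantile_forecasts, levels):
    """Approximate CRPS as 2 * mean pinball loss over a grid of quantile levels.

    actual:             true values, one per horizon step
    quantile_forecasts: per step, predicted values for each level in `levels`
    levels:             quantile levels, e.g. [0.1, 0.2, ..., 0.9]
    """
    if len(actual) != len(quantile_forecasts) or not actual:
        raise ValueError("need one row of quantile predictions per target value")
    total = 0.0
    for y, preds in zip(actual, quantile_forecasts):
        if len(preds) != len(levels):
            raise ValueError("each row must have one prediction per level")
        for q, z in zip(levels, preds):
            total += 2 * pinball_loss(y, z, q)
    return total / (len(actual) * len(levels))
```

As a sanity check, if every quantile is set to the same value on a symmetric grid such as `[i / 10 for i in range(1, 10)]`, the score collapses to the absolute error. For example, predicting 7.0 at all levels for a true value of 10.0 scores about 3.0. This is why CRPS is often described as a probabilistic generalization of MAE.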

Summary

TimesFM-2.5 shows that foundation models for forecasting are moving past proof of concept into practical, production-ready tools. By cutting the parameter count from 500M to 200M while extending the context length and leading GIFT-Eval on both point and probabilistic accuracy, it marks a step change in efficiency and capability. With Hugging Face access already live and BigQuery/Model Garden integration on the way, the model is positioned to accelerate adoption of zero-shot time-series forecasting in real-world pipelines.

Check out the Model card (HF), Repo, Benchmark and Paper.