Google has recently launched its most intelligent model yet, one that can create, reason, and understand across multiple modalities. Google Gemini 3 Pro is not just an incremental update; it is a major step forward in AI capability. With cutting-edge reasoning, multimodal understanding, and agentic capabilities, this model is poised to change the way developers build intelligent applications. And with the new Gemini 3 Pro API, developers can now create smarter, more dynamic systems than ever before.

If you are building complex AI workflows, working with multimodal data, or creating agentic systems that handle multi-step tasks on their own, this guide will teach you everything you need to know about using Gemini 3 Pro through its API.

What Makes Gemini 3 Pro Special?

Before getting lost in the technical details, let's look at why developers are so excited about this model. Google Gemini 3 Pro, which has been in development for some time, has now reached the very top of the AI benchmark leaderboard with an impressive Elo rating of 1501. It was not designed for raw performance alone, though: the whole package is oriented toward a great developer experience.

The main features are:

- Advanced reasoning: The model can now solve intricate, multi-step problems with sophisticated thinking.

- Massive context window: A 1 million token input context lets you feed in entire codebases, full-length books, or long video content.

- Multimodal mastery: Text, images, video, PDFs, and code can all be processed together seamlessly.

- Agentic workflows: Run multi-step tasks in which the model orchestrates, checks, and adjusts its own actions.

- Dynamic thinking: Depending on the situation, the model either works through the problem step by step or simply gives the answer.

You can learn more about the Gemini 3 Pro model and its features in the following article: Gemini 3 Pro

Getting Started: Your First Gemini 3 Pro API Call

Step 1: Get Your API Key

Go to Google AI Studio and sign in with your Google account. Click the profile icon in the top-right corner, then choose the "Get API key" option. If this is your first time, select "Create API key in new project"; otherwise, import an existing one. Copy the API key right away, because you won't be able to see it again.

Step 2: Install the SDK

Choose your preferred language and install the official SDK using the following commands:

Python:

```bash
pip install google-genai
```

Node.js/JavaScript:

```bash
npm install @google/genai
```

Step 3: Set Your Environment Variable

Store your API key securely in an environment variable:

```bash
export GEMINI_API_KEY="your-api-key-here"
```

Gemini 3 Pro API Pricing

The Gemini 3 Pro API uses a pay-as-you-go model: your costs are calculated primarily from the number of tokens consumed by both your input (prompts) and the model's output (responses).

The key determinant of the pricing tier is the context length of your request. Longer contexts, which let the model process more information at once (such as large documents or long conversation histories), are billed at a higher rate.

The following rates apply to the gemini-3-pro-preview model via the Gemini API and are measured per one million tokens (1M).

| Feature | Free Tier | Paid Tier (per 1M tokens, USD) |
|---|---|---|
| Input price | Free (limited daily usage) | $2.00 for prompts ≤ 200k tokens; $4.00 for prompts > 200k tokens |
| Output price (including thinking tokens) | Free (limited daily usage) | $12.00 for prompts ≤ 200k tokens; $18.00 for prompts > 200k tokens |
| Context caching price | Not available | $0.20–$0.40 per 1M tokens (depends on prompt size); $4.50 per 1M tokens per hour (storage price) |
| Grounding with Google Search | Not available | 1,500 RPD (free); coming soon: $14 per 1,000 search queries |
| Grounding with Google Maps | Not available | Not available |
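To see how the two tiers combine, here is a rough cost estimator built only from the paid-tier rates in the table above. It is a sketch under simplifying assumptions: prompt length alone decides the tier (the higher input and output rates apply once the prompt exceeds 200k tokens), thinking tokens are counted as output, and caching and grounding charges are ignored. The name `estimate_request_cost` is hypothetical and not part of any SDK.

```python
# Paid-tier rates for gemini-3-pro-preview in USD per 1M tokens (from the pricing table)
TIER_THRESHOLD = 200_000
RATES = {
    "standard": {"input": 2.00, "output": 12.00},     # prompts <= 200k tokens
    "long_context": {"input": 4.00, "output": 18.00},  # prompts > 200k tokens
}


def estimate_request_cost(prompt_tokens: int, output_tokens: int) -> float:
    """Rough USD cost of one request; output_tokens should include thinking tokens."""
    tier = "standard" if prompt_tokens <= TIER_THRESHOLD else "long_context"
    rates = RATES[tier]
    cost = (prompt_tokens * rates["input"] + output_tokens * rates["output"]) / 1_000_000
    return round(cost, 6)


if __name__ == "__main__":
    # 100k-token prompt, 10k output tokens: 0.1 * $2 + 0.01 * $12
    print(estimate_request_cost(100_000, 10_000))
    # A 300k-token prompt moves both rates into the long-context tier
    print(estimate_request_cost(300_000, 10_000))
```

The first call works out to $0.32 and the second to $1.38, which shows how crossing the 200k boundary roughly quadruples the bill even though the prompt only tripled.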

Understanding Gemini 3 Pro's New Parameters

The API introduces several new parameters, the most notable being the thinking level parameter, which gives you fine-grained control over how much the model reasons.

The Thinking Level Parameter

This new parameter is very likely the most significant one. You no longer have to wonder how much the model will "think"; you control it explicitly:

- thinking_level: "low": For basic tasks such as classification, Q&A, or chatting. Latency is minimal and costs are lower, which makes it ideal for high-throughput applications.

- thinking_level: "high" (default): For complex reasoning tasks. The model takes longer, but the output is more carefully reasoned. Use it for problem-solving and analysis.

Tip: Don't use thinking_level together with the older thinking_budget parameter; they are not compatible and will result in a 400 error.
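Under the hood, the thinking level is just another field in the request's generation config. The sketch below builds a raw REST request body as a plain dict and refuses to combine the two thinking parameters, mirroring the 400 error described in the tip. The camelCase field names (`generationConfig`, `thinkingConfig`, `thinkingLevel`, `thinkingBudget`) reflect our understanding of the Gemini REST API and should be checked against the current API reference; `build_request_body` is a hypothetical helper.

```python
import json
from typing import Optional

VALID_THINKING_LEVELS = ("low", "high")


def build_request_body(
    prompt: str,
    thinking_level: Optional[str] = None,
    thinking_budget: Optional[int] = None,
) -> dict:
    """Build a generateContent request body (field names are assumptions)."""
    if thinking_level is not None and thinking_budget is not None:
        # The API rejects this combination with a 400 error, so fail early
        raise ValueError("Use either thinking_level or thinking_budget, not both")

    thinking_config = {}
    if thinking_level is not None:
        if thinking_level not in VALID_THINKING_LEVELS:
            raise ValueError(f"thinking_level must be one of {VALID_THINKING_LEVELS}")
        thinking_config["thinkingLevel"] = thinking_level
    elif thinking_budget is not None:
        thinking_config["thinkingBudget"] = thinking_budget

    body = {"contents": [{"parts": [{"text": prompt}]}]}
    if thinking_config:
        # Leaving thinkingConfig out keeps the model on its default level ("high")
        body["generationConfig"] = {"thinkingConfig": thinking_config}
    return body


if __name__ == "__main__":
    # A quick classification task only needs low thinking
    body = build_request_body("Is this review positive? 'Great battery life.'", thinking_level="low")
    print(json.dumps(body, indent=2))
```

Validating locally is a small design choice: it turns a round trip that ends in a 400 error into an immediate exception with a readable message.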

Media Resolution Control

When analyzing images, PDF documents, or videos, you can now adjust how many tokens the model spends on visual input:

- Images: media_resolution_high for the best quality (1120 tokens/image).

- PDFs: media_resolution_medium for document understanding (560 tokens).

- Videos: media_resolution_low for action recognition (70 tokens/frame) and media_resolution_high for text-heavy content (280 tokens/frame).

This puts the trade-off between quality and token usage in your hands.
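Because each setting has a roughly fixed per-item cost, you can budget visual tokens before sending a request. The sketch below hard-codes only the figures listed above and multiplies them by the number of images, PDF pages, or video frames. Treating the PDF figure as per page is our assumption, and both the lookup table and `estimate_media_tokens` are hypothetical.

```python
# Approximate tokens per item for the media type / resolution pairs listed above
TOKENS_PER_ITEM = {
    ("image", "high"): 1120,  # per image
    ("pdf", "medium"): 560,   # assumed to be per page
    ("video", "low"): 70,     # per frame
    ("video", "high"): 280,   # per frame
}


def estimate_media_tokens(media_type: str, resolution: str, count: int) -> int:
    """Estimate visual input tokens for `count` images, PDF pages, or video frames."""
    key = (media_type, resolution)
    if key not in TOKENS_PER_ITEM:
        raise KeyError(f"No token figure for {media_type!r} at {resolution!r} resolution")
    return TOKENS_PER_ITEM[key] * count


if __name__ == "__main__":
    frames = 600  # e.g. a 10-minute video, assuming 1 frame is sampled per second
    low = estimate_media_tokens("video", "low", frames)
    high = estimate_media_tokens("video", "high", frames)
    print(f"low: {low} tokens, high: {high} tokens ({high // low}x more)")
```

For that hypothetical 600-frame video, low resolution costs 42,000 tokens and high resolution 168,000, so reserving high resolution for text-heavy footage saves a factor of four.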

Temperature Settings

Here is something you may find intriguing: you can simply keep the temperature at its default of 1.0. Unlike earlier models, which often benefited from temperature tuning, Gemini 3's reasoning is optimized around this default setting. Lowering the temperature can cause unexpected looping or degrade performance on more complex tasks.

Build With Me: Hands-On Examples of Gemini 3 Pro API

Demo 1: Building a Smart Code Analyzer

Let's build something for a real-world use case. We'll create a system that analyzes code, identifies issues, and suggests improvements using Gemini 3 Pro's advanced reasoning.

Python Implementation

```python
import os

from google import genai

# Initialize the Gemini API client with your API key.
# Here it is read from the GEMINI_API_KEY environment variable set in Step 3;
# you could also pass the key string directly (not recommended).
client = genai.Client(api_key=os.environ["GEMINI_API_KEY"])


def analyze_code(code_snippet: str) -> str:
    """
    Analyzes code for issues and suggests improvements using Gemini 3 Pro.

    Args:
        code_snippet: The code to analyze

    Returns:
        Analysis and improvement suggestions from the model
    """
    prompt = f"""Analyze this code for issues, inefficiencies, and potential improvements.

Provide:
1. Issues found (bugs, logic errors, security concerns)
2. Performance optimizations
3. Code quality improvements
4. Best practices violations
5. Refactored version with explanations

Code to analyze:
{code_snippet}

Be direct and concise in your analysis."""

    # thinking_level is left at its default ("high"), which suits code review
    response = client.models.generate_content(
        model="gemini-3-pro-preview",
        contents=prompt,
    )
    return response.text


# Example usage: deliberately flawed sample code for the model to review
sample_code = """
def calculate_total(items):
    total = 0
    for i in range(len(items)):
        total = total + items[i]['price'] * items[i]['quantity']
    return total

def get_user_data(user_id):
    import requests
    response = requests.get(f"http://api.example.com/user/{user_id}")
    data = response.json()
    return data
"""

print("=" * 60)
print("GEMINI 3 PRO - SMART CODE ANALYZER")
print("=" * 60)
print("\nAnalyzing code...\n")

# Run the analysis
analysis = analyze_code(sample_code)
print(analysis)
print("\n" + "=" * 60)
```

Output:

What's Happening Here?

- We rely on the default thinking_level ("high"), since code review involves some heavy reasoning.

- The prompt is brief and to the point: Gemini 3 responds more effectively to direct prompts than to elaborate prompt engineering.

- The model reviews the code with its full reasoning capacity and returns a refactored version along with significant revisions and insightful analysis.

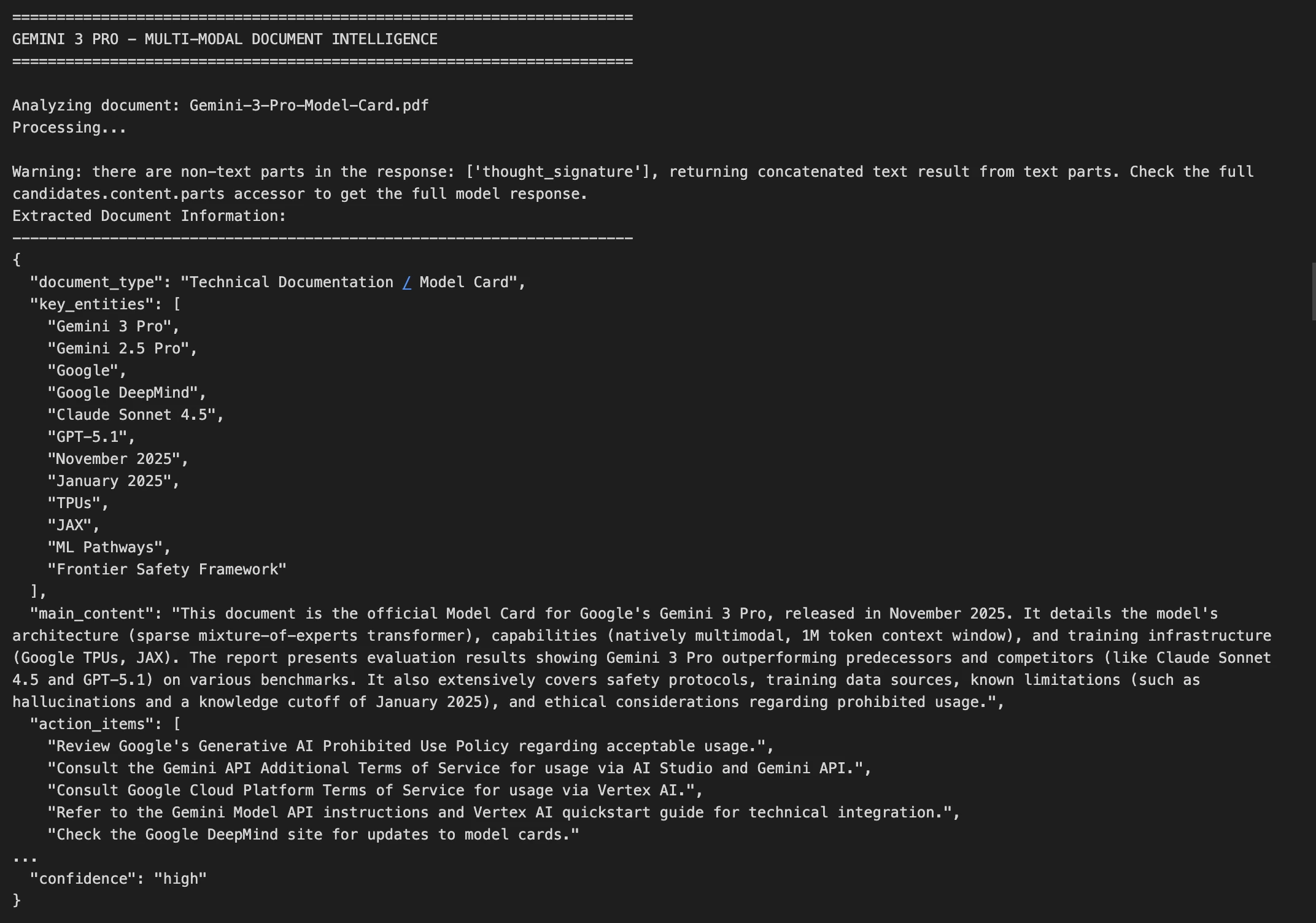

Demo 2: Multi-Modal Document Intelligence

Now let's take on a more complex use case: analyzing an image of a document and extracting structured information.

Python Implementation

```python
import json
import os

from google import genai
from google.genai import types

# Initialize the Gemini API client (key read from the GEMINI_API_KEY environment variable)
client = genai.Client(api_key=os.environ["GEMINI_API_KEY"])


def analyze_document_image(image_path: str) -> dict:
    """
    Analyzes a document image and extracts key information.
    Handles images (JPEG, PNG, GIF, WebP) and PDFs.

    Args:
        image_path: Path to the document file

    Returns:
        Dictionary containing the extracted document information
    """
    # Read the raw file bytes; the SDK takes care of encoding them for transport
    with open(image_path, "rb") as doc_file:
        doc_bytes = doc_file.read()

    # Determine the MIME type from the file extension (default: JPEG)
    extension = os.path.splitext(image_path)[1].lower()
    mime_type = {
        ".png": "image/png",
        ".pdf": "application/pdf",
        ".gif": "image/gif",
        ".webp": "image/webp",
    }.get(extension, "image/jpeg")

    prompt = """Extract and structure all information from this document image.
Return the data as JSON with these fields:
- document_type: What type of document is this?
- key_entities: List of important names, dates, amounts, etc.
- main_content: Brief summary of the document's purpose
- action_items: Any tasks or deadlines mentioned
- confidence: How confident you are in the extraction (high/medium/low)
Return ONLY valid JSON, no markdown formatting."""

    # Call the Gemini API with multimodal content: the text prompt plus the inline file
    response = client.models.generate_content(
        model="gemini-3-pro-preview",
        contents=[prompt, types.Part.from_bytes(data=doc_bytes, mime_type=mime_type)],
        config=types.GenerateContentConfig(
            # High media resolution keeps small text legible for OCR
            media_resolution=types.MediaResolution.MEDIA_RESOLUTION_HIGH,
            # Ask the API to return JSON directly
            response_mime_type="application/json",
        ),
    )

    # Parse the JSON response, falling back to the raw text if parsing fails
    try:
        return json.loads(response.text)
    except json.JSONDecodeError:
        return {"error": "Failed to parse response", "raw": response.text}


# Example usage with a sample document
print("=" * 70)
print("GEMINI 3 PRO - MULTI-MODAL DOCUMENT INTELLIGENCE")
print("=" * 70)

document_path = "Gemini-3-Pro-Model-Card.pdf"  # Change this to your actual document path

try:
    print(f"\nAnalyzing document: {document_path}")
    print("Processing...\n")
    document_info = analyze_document_image(document_path)
    print("Extracted Document Information:")
    print("-" * 70)
    print(json.dumps(document_info, indent=2))
except FileNotFoundError:
    print(f"Error: Document file '{document_path}' not found.")
    print("Please provide a valid path to a document image (JPG, PNG, PDF, etc.)")
    print("\nExample:")
    print('  document_info = analyze_document_image("invoice.pdf")')
    print('  document_info = analyze_document_image("contract.png")')
    print('  document_info = analyze_document_image("receipt.jpg")')
except Exception as e:
    print(f"Error processing document: {e}")

print("\n" + "=" * 70)
```

Output:

Key Techniques Here:

- Image processing: The document's bytes are sent inline (base64-encoded) together with their MIME type.

- Maximum quality: For text documents, media_resolution_high gives the best OCR quality.

- Structured output: We ask for JSON, which is easy to connect to other systems.

- Error handling: If JSON parsing fails, the function returns the raw response instead of crashing.
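Even when the prompt asks for raw JSON, a model can occasionally wrap its answer in a Markdown code fence or leave out a field. The stdlib-only sketch below shows one way to harden the parsing step: strip an optional fence, parse, and check the fields the Demo 2 prompt requests. `parse_extraction` is a hypothetical helper that is independent of the SDK; you would call it on `response.text` in place of the bare `json.loads`.

```python
import json
import re

# Fields requested by the Demo 2 prompt
REQUIRED_FIELDS = ("document_type", "key_entities", "main_content", "action_items", "confidence")
VALID_CONFIDENCE = ("high", "medium", "low")

# Matches a response wrapped entirely in a ```json ... ``` (or bare ```) fence
FENCE_RE = re.compile(r"^\s*```(?:json)?\s*\n(.*?)\n\s*```\s*$", re.DOTALL)


def parse_extraction(raw_text: str) -> dict:
    """Parse a model response into a dict, adding 'warnings' for missing or odd fields."""
    # Strip an optional Markdown code fence around the JSON
    match = FENCE_RE.match(raw_text)
    text = match.group(1) if match else raw_text

    try:
        data = json.loads(text)
    except json.JSONDecodeError as exc:
        return {"error": f"Failed to parse response: {exc.msg}", "raw": raw_text}
    if not isinstance(data, dict):
        return {"error": "Expected a JSON object", "raw": raw_text}

    # Flag problems instead of failing, so partial extractions are still usable
    warnings = [f"missing field: {name}" for name in REQUIRED_FIELDS if name not in data]
    if "confidence" in data and data["confidence"] not in VALID_CONFIDENCE:
        warnings.append("confidence is not high/medium/low")
    if warnings:
        data["warnings"] = warnings
    return data
```

Returning warnings rather than raising keeps the same "never crash on bad output" behavior as the demo while making incomplete extractions easy to spot downstream.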

Advanced Features: Beyond Basic Prompting

Thought Signatures: Maintaining Reasoning Context

Gemini 3 Pro introduces a feature called thought signatures: encrypted representations of the model's internal reasoning. These signatures preserve reasoning context across API calls when you use function calling or multi-turn conversations.

If you use the official Python or Node.js SDKs, thought signatures are handled automatically and invisibly. If you make raw API calls, however, you must send each thoughtSignature back exactly as you received it.
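For raw REST calls, the simplest way to honor this rule is to append the model's reply to the conversation history exactly as received, rather than rebuilding it from the text alone. The sketch below assumes the REST response shape `candidates[0].content`, whose parts may carry a `thoughtSignature` field; the response in the example is a made-up placeholder, and `append_user_turn` / `append_model_turn` are hypothetical helpers.

```python
import copy
import json


def append_user_turn(history: list, text: str) -> None:
    """Add a user message to the running `contents` list."""
    history.append({"role": "user", "parts": [{"text": text}]})


def append_model_turn(history: list, response_json: dict) -> None:
    """Add the model's reply verbatim, keeping any thoughtSignature fields intact."""
    content = response_json["candidates"][0]["content"]
    # Deep-copy instead of rebuilding the parts, so every field (including
    # thoughtSignature) is sent back exactly as it was received.
    history.append(copy.deepcopy(content))


if __name__ == "__main__":
    history = []
    append_user_turn(history, "What's the weather in Paris?")
    # Placeholder response in the assumed REST shape; the signature is not real
    placeholder_response = {
        "candidates": [{
            "content": {
                "role": "model",
                "parts": [{
                    "functionCall": {"name": "get_weather", "args": {"city": "Paris"}},
                    "thoughtSignature": "PLACEHOLDER_SIGNATURE",
                }],
            }
        }]
    }
    append_model_turn(history, placeholder_response)
    # The next request body sends the whole history back, signature included
    print(json.dumps({"contents": history}, indent=2))
```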

Context Caching for Cost Optimization

Do you plan on sending similar requests multiple times? Take advantage of context caching, which requires a prompt of at least 2,048 tokens, to save money on subsequent requests. It is a great fit whenever you are processing a batch of documents and can reuse the same system prompt across them.
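To get a feel for the savings, the back-of-the-envelope calculation below compares re-sending a shared prefix on every request with caching it, using the paid-tier rates from the pricing table for prompts ≤ 200k tokens ($2.00 per 1M input tokens, $0.20 per 1M cached tokens, $4.50 per 1M tokens per hour of storage). It is a rough sketch: it ignores output tokens (identical in both scenarios) and any one-time cost of creating the cache, and `compare_caching_cost` is a hypothetical name.

```python
INPUT_RATE = 2.00    # USD per 1M regular input tokens (prompts <= 200k)
CACHED_RATE = 0.20   # USD per 1M cached tokens read (prompts <= 200k)
STORAGE_RATE = 4.50  # USD per 1M cached tokens per hour of storage


def compare_caching_cost(prefix_tokens: int, unique_tokens: int,
                         num_requests: int, hours_cached: float) -> tuple:
    """Return (cost_without_cache, cost_with_cache) in USD, input side only."""
    per_million = 1_000_000
    without_cache = num_requests * (prefix_tokens + unique_tokens) * INPUT_RATE / per_million
    with_cache = (
        num_requests * prefix_tokens * CACHED_RATE / per_million     # cached prefix reads
        + num_requests * unique_tokens * INPUT_RATE / per_million    # uncached remainder
        + prefix_tokens * STORAGE_RATE * hours_cached / per_million  # storage fee
    )
    return round(without_cache, 4), round(with_cache, 4)


if __name__ == "__main__":
    # 100 requests sharing a 50k-token document, each adding 1k tokens of its own,
    # with the cache kept alive for one hour
    print(compare_caching_cost(50_000, 1_000, 100, 1.0))
```

In this illustrative scenario the input bill drops from $10.20 to about $1.43, and the storage fee ($0.225 for the hour) is a small fraction of what the cache saves.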

Batch API: Process at Scale

For workloads that aren't time-sensitive, the Batch API can cut costs by 50%. It is ideal when you have lots of documents to process or want to run a large analysis overnight.
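Batch jobs are typically submitted as a file of independent requests. The sketch below writes one JSON object per line, pairing a caller-chosen `key` with a `request` body in the same shape as a regular generateContent call; this JSONL layout reflects our understanding of the Gemini Batch API input format and should be confirmed against the official docs before uploading. Uploading and submitting the file are out of scope here, and `write_batch_file` is a hypothetical helper.

```python
import json
from pathlib import Path


def write_batch_file(prompts: dict, path: str) -> int:
    """Write {key: prompt} pairs as JSONL batch requests; returns the number of lines."""
    lines = []
    for key, prompt in prompts.items():
        # Each request mirrors a normal generateContent body
        request = {"contents": [{"parts": [{"text": prompt}]}]}
        lines.append(json.dumps({"key": key, "request": request}))
    Path(path).write_text("\n".join(lines) + "\n", encoding="utf-8")
    return len(lines)


if __name__ == "__main__":
    docs = {
        "doc-001": "Summarize this quarterly report in three bullet points.",
        "doc-002": "List every deadline mentioned in this contract.",
    }
    count = write_batch_file(docs, "batch_requests.jsonl")
    print(f"Wrote {count} requests to batch_requests.jsonl")
```

Giving every request an explicit key lets you match each result back to its input document when the job finishes.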

Conclusion

Google Gemini 3 Pro marks an inflection point in what's possible with AI APIs. The combination of advanced reasoning, massive context windows, and affordable pricing means you can now build systems that were previously impractical.

Start small: build a chatbot, analyze some documents, or automate a routine task. Then, as you get comfortable with the API, explore more complex scenarios like autonomous agents, code generation, and multimodal analysis.

Frequently Asked Questions

A. Its advanced reasoning, multimodal support, and massive context window enable smarter and more capable applications.

A. Set thinking_level to low for fast, simple tasks or to high for complex analysis.

A. Use context caching or the Batch API to reuse prompts and run workloads efficiently.
