Large language models (LLMs) frequently generate “hallucinations”: confident yet incorrect outputs that appear plausible. Despite improvements in training methods and architectures, hallucinations persist. New research from OpenAI offers a rigorous explanation: hallucinations stem from the statistical properties of supervised versus self-supervised learning, and their persistence is reinforced by misaligned evaluation benchmarks.

What Makes Hallucinations Statistically Inevitable?

The research team explains hallucinations as errors inherent to generative modeling. Even with perfectly clean training data, the cross-entropy objective used in pretraining introduces statistical pressures that produce errors.

The research team reduces the problem to a supervised binary classification task called Is-It-Valid (IIV): deciding whether a model’s output is valid or erroneous. They prove that the generative error rate of an LLM is at least roughly twice its IIV misclassification rate. In other words, hallucinations arise for the same reasons misclassifications appear in supervised learning: epistemic uncertainty, poor models, distribution shift, or noisy data.
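
Informally, the reduction can be summarized by the inequality below, where err is the fraction of generated outputs that are invalid and err_iiv is the misclassification rate of an IIV classifier derived from the model (roughly, by thresholding the probability the model assigns to each output). This is a simplified reading: the paper’s formal theorem carries additional correction terms that are not reproduced here.

```latex
\mathrm{err} \;\gtrsim\; 2 \cdot \mathrm{err}_{\mathrm{iiv}}
```

For instance, if the derived classifier mislabels 10% of candidate outputs, the bound suggests a generative error rate of roughly 20% or more.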

Why Do Rare Facts Trigger More Hallucinations?

One major driver is the singleton rate: the fraction of facts that appear only once in the training data. By analogy to Good–Turing missing-mass estimation, if 20% of birthday facts appear exactly once in the training data, a base model can be expected to hallucinate on at least about 20% of birthday facts. This explains why LLMs answer reliably about widely repeated facts (e.g., Einstein’s birthday) but fail on obscure or rarely mentioned ones.
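
The singleton rate can be estimated in the Good–Turing style: count the observations whose fact occurs exactly once and divide by the total number of observations. The sketch below does this on an invented toy corpus of birthday mentions; the function name `singleton_rate` and the data are hypothetical, and a real corpus would first need fact extraction, which this ignores.

```python
from collections import Counter
from typing import List


def singleton_rate(observations: List[str]) -> float:
    """Good-Turing style estimate: (# observations of items seen exactly once) / (# observations)."""
    if not observations:
        return 0.0
    counts = Counter(observations)
    n1 = sum(1 for c in counts.values() if c == 1)  # items observed exactly once
    return n1 / len(observations)


# Invented toy corpus: each entry is one mention of a person's birthday.
corpus = ["einstein"] * 6 + ["curie"] * 2 + ["obscure_a", "obscure_b"]
rate = singleton_rate(corpus)
print(f"singleton rate: {rate:.2f}")
```

Here 2 of the 10 mentions are singletons, so the estimate is 0.2; under the argument above, that would correspond to hallucinating on at least roughly 20% of such facts.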

Can Poor Model Families Lead to Hallucinations?

Yes. Hallucinations also emerge when the model class cannot adequately represent a pattern. Classic examples include n-gram models producing ungrammatical sentences, or modern tokenized models miscounting letters because characters are hidden inside subword tokens. These representational limits cause systematic errors even when the data itself is sufficient.
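
A toy example shows why letter counting is awkward for a subword model. The vocabulary and greedy longest-match tokenizer below are hypothetical simplifications (production tokenizers such as BPE learn their vocabularies from data); the point is that the model receives token IDs rather than characters, so counting letters requires knowing how each token is spelled.

```python
from typing import List

# Hypothetical toy vocabulary, chosen only for illustration.
VOCAB: List[str] = ["D", "EEP", "SEE", "K"]
TOKEN_ID = {tok: i for i, tok in enumerate(VOCAB)}


def tokenize(text: str) -> List[str]:
    """Greedy longest-match segmentation of text over VOCAB."""
    tokens, i = [], 0
    while i < len(text):
        match = max((t for t in VOCAB if text.startswith(t, i)), key=len, default=None)
        if match is None:
            raise ValueError(f"cannot tokenize at position {i}: {text[i:]!r}")
        tokens.append(match)
        i += len(match)
    return tokens


word = "DEEPSEEK"
tokens = tokenize(word)
ids = [TOKEN_ID[t] for t in tokens]  # what the model actually sees
print(tokens, ids)

# Counting "E" from ids alone requires recalling each token's spelling.
letter_count = sum(VOCAB[i].count("E") for i in ids)
print(letter_count)
```

The characters are recoverable only through the lookup `VOCAB[i]`; a model that has not reliably learned each token’s spelling has no direct way to see them.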

Why Doesn’t Post-Training Eliminate Hallucinations?

Post-training methods such as RLHF (reinforcement learning from human feedback), DPO (direct preference optimization), and RLAIF (reinforcement learning from AI feedback) reduce some errors, especially harmful or conspiratorial outputs. But overconfident hallucinations remain because evaluation incentives are misaligned.

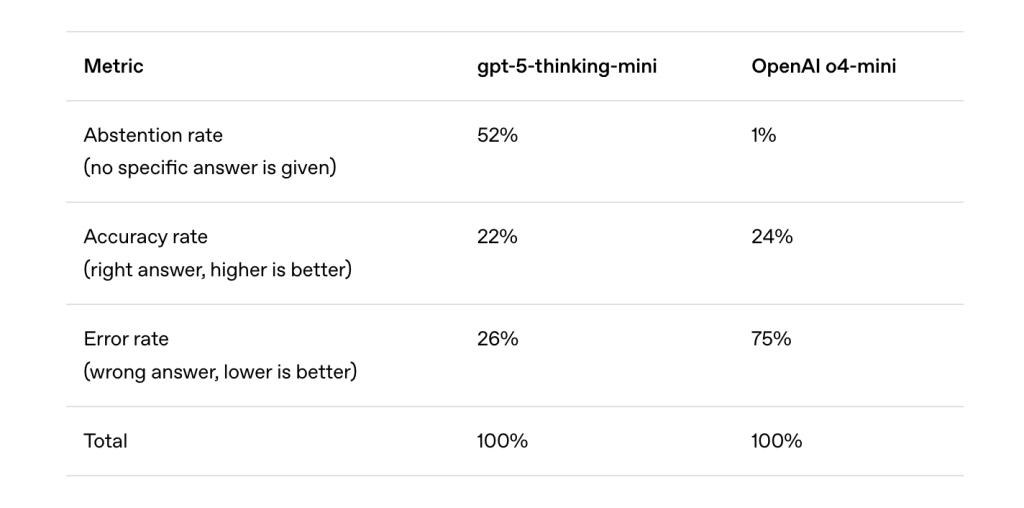

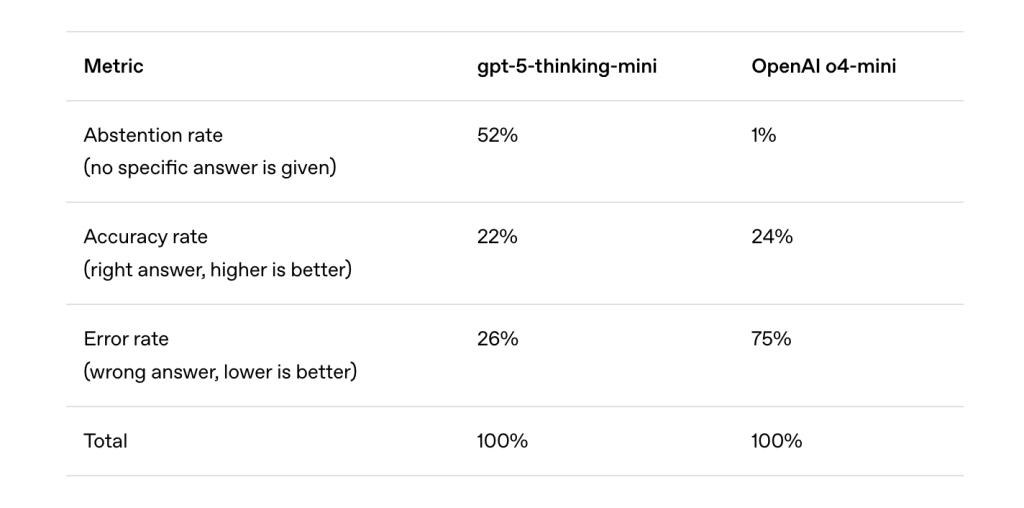

Like students guessing on multiple-choice exams, LLMs are rewarded for bluffing when uncertain. Most benchmarks, such as MMLU, GPQA, and SWE-bench, apply binary scoring: correct answers get credit, abstentions (“I don’t know”) get none, and incorrect answers are penalized no more harshly than abstentions. Under this scheme, guessing maximizes benchmark scores, even though it fosters hallucinations.
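
The incentive can be made concrete with a small expected-value calculation. The sketch below uses a hypothetical scoring model of our own, not code from the paper or any benchmark: a correct answer scores 1, an abstention 0, and a wrong answer loses `wrong_penalty` points, which is 0 under binary grading.

```python
def expected_score(p_correct: float, abstain: bool, wrong_penalty: float = 0.0) -> float:
    """Expected points for one question.

    Hypothetical scoring model: correct = +1, abstain = 0, wrong = -wrong_penalty.
    Binary grading corresponds to wrong_penalty = 0.
    """
    if not 0.0 <= p_correct <= 1.0:
        raise ValueError("p_correct must be a probability")
    if abstain:
        return 0.0
    return p_correct - (1.0 - p_correct) * wrong_penalty


# Under binary grading, even a 10%-confident guess beats saying "I don't know".
for p in (0.1, 0.5, 0.9):
    guess = expected_score(p, abstain=False)
    idk = expected_score(p, abstain=True)
    print(f"confidence={p:.1f}  guess={guess:.2f}  abstain={idk:.2f}")
```

With a zero penalty the guessing score equals the confidence p, which is never below the abstention score of 0, so abstaining never wins; only a positive penalty changes that.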

How Do Leaderboards Reinforce Hallucinations?

A review of popular benchmarks shows that most use binary grading with no partial credit for uncertainty. As a result, models that honestly express uncertainty score worse than those that always guess. This creates systemic pressure on developers to optimize models for confident answers rather than calibrated ones.
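
A toy leaderboard comparison illustrates the effect. Assume, hypothetically, that two models face the same four questions with the same per-question confidence that their best answer is correct; `cautious` abstains below 50% confidence, while `guesser` always answers. The confidences are invented for illustration, not benchmark data.

```python
from typing import Callable, List, Tuple

# Invented per-question confidences that the model's best answer is correct.
CONFIDENCES: List[float] = [0.9, 0.8, 0.3, 0.2]


def always_guess(p: float) -> bool:
    return True


def abstain_below_half(p: float) -> bool:
    return p >= 0.5


def evaluate(policy: Callable[[float], bool], confidences: List[float]) -> Tuple[float, float]:
    """Return (expected binary-graded score, expected number of wrong answers)."""
    score = sum(p for p in confidences if policy(p))
    errors = sum(1.0 - p for p in confidences if policy(p))
    return score, errors


for name, policy in [("guesser", always_guess), ("cautious", abstain_below_half)]:
    score, errors = evaluate(policy, CONFIDENCES)
    print(f"{name:8s}  expected score={score:.1f}  expected errors={errors:.1f}")
```

The guesser tops the binary leaderboard (about 2.2 versus 1.7) while making roughly six times as many expected errors (about 1.8 versus 0.3).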

What Changes Could Reduce Hallucinations?

The research team argues that fixing hallucinations requires socio-technical change, not just new evaluation suites. They propose explicit confidence targets: benchmarks should clearly state the penalty for wrong answers and the credit given to abstentions.

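For example: “Answer only if you are more than 75% confident. Mistakes lose 3 points; correct answers earn 1; ‘I don’t know’ earns 0.” The penalty follows the rule of t/(1 − t) points for a confidence target t, so at t = 0.75 a wrong answer costs 3 points and answering has a positive expected score only when confidence exceeds 75%.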
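
The rule can be checked numerically. Answering with confidence p yields an expected score of `p - (1 - p) * k` for a penalty of k points, which is positive exactly when `p > k / (1 + k)`; choosing `k = t / (1 - t)` makes the target t the break-even point. The helper names below are hypothetical, for illustration only.

```python
def penalty_for_target(t: float) -> float:
    """Points deducted per wrong answer so that t is the break-even confidence."""
    if not 0.0 < t < 1.0:
        raise ValueError("target t must lie strictly between 0 and 1")
    return t / (1.0 - t)


def break_even_confidence(penalty: float) -> float:
    """Confidence above which answering beats abstaining (+1 correct, 0 abstain)."""
    return penalty / (1.0 + penalty)


def should_answer(p: float, t: float) -> bool:
    """Answer iff the expected score p - (1 - p) * penalty is positive."""
    k = penalty_for_target(t)
    return p - (1.0 - p) * k > 0.0


print(penalty_for_target(0.75))  # 3.0
print(break_even_confidence(2.0))
print(should_answer(0.8, 0.75), should_answer(0.7, 0.75))  # True False
```

Under this rule, a target of 0.5 gives a 1-point penalty and 0.9 gives a 9-point penalty, while a 2-point penalty corresponds to a break-even confidence of 2/3 rather than 75%.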

This design mirrors real-world exams such as earlier SAT and GRE formats, where wrong guesses carried penalties. It encourages behavioral calibration: models abstain when their confidence is below the threshold, producing fewer overconfident hallucinations while still optimizing for benchmark performance.
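
Rerunning a toy comparison under a penalized scheme shows the incentive flipping. This sketch assumes a 75% confidence target with the corresponding 3-point penalty (`t / (1 - t)`) and invented per-question confidences; a model that abstains below the target now outscores one that always answers.

```python
from typing import List

CONFIDENCES: List[float] = [0.9, 0.8, 0.3, 0.2]  # invented, for illustration only
TARGET = 0.75
PENALTY = TARGET / (1.0 - TARGET)  # 3 points per wrong answer


def expected_total(confidences: List[float], answers: List[bool], penalty: float) -> float:
    """Expected score: +1 if correct, -penalty if wrong, 0 when abstaining."""
    return sum(p - (1.0 - p) * penalty for p, a in zip(confidences, answers) if a)


always = [True] * len(CONFIDENCES)
calibrated = [p > TARGET for p in CONFIDENCES]  # abstain below the target

print(f"always answer: {expected_total(CONFIDENCES, always, PENALTY):+.1f}")
print(f"calibrated:    {expected_total(CONFIDENCES, calibrated, PENALTY):+.1f}")
```

With a penalty of 0 (binary grading) the same two policies score about 2.2 and 1.7, so the ranking reverses purely because of how the benchmark is scored.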

What Are the Broader Implications?

This work reframes hallucinations as predictable outcomes of training objectives and evaluation misalignment rather than inexplicable quirks. The findings highlight:

- Pretraining inevitability: Hallucinations parallel misclassification errors in supervised learning.

- Post-training reinforcement: Binary grading schemes incentivize guessing.

- Evaluation reform: Adjusting mainstream benchmarks to reward expressions of uncertainty can realign incentives and improve trustworthiness.

By connecting hallucinations to established learning theory, the research demystifies their origin and suggests practical mitigation strategies that shift responsibility from model architectures to evaluation design.

Check out the paper and technical details.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.