Most people build machine learning models in an experimental or research setting, which is fine for exploration. The real value only shows up once you deploy a model inside an actual application: for instance, a web application requesting predictions from your model, or a backend service making real-time decisions based on your trained model. For that, you need a simple, reliable way to expose your trained machine learning model as a web service, that is, an API.

FastAPI is an ideal choice for this job.

What is FastAPI?

FastAPI is a Python web framework designed to help developers build RESTful APIs. It is fast, simple, and includes many features by default, such as automatic generation of API documentation. FastAPI also works well with Python’s existing data processing libraries, which makes it a good fit for machine learning projects.

The key advantages of using FastAPI are:

- Fast Performance: FastAPI is one of the fastest available web frameworks for Python, because it is built on two popular libraries: Starlette and Pydantic.

- Easy Development and Maintenance: Writing clean APIs with FastAPI requires minimal code, because the framework handles validation, serialization, and input checks automatically.

- Built-In API Documentation: Every API built with FastAPI automatically includes a Swagger interface at the /docs URL, which lets users test their API endpoints directly from a web browser.

- Ideal for Machine Learning Models: With FastAPI, you can define the input schema for your machine learning model, expose an endpoint for predictions, and point the application at the saved model file so it can be loaded into memory at startup. FastAPI takes care of most of that backend work, which is why it has become very popular among developers who deploy machine learning models.

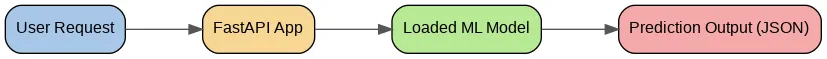

This figure shows how a prediction request flows through the system: a user sends data to the FastAPI application, which loads the trained machine learning model and runs inference. The model produces a prediction, and the API returns the result in JSON format.

Deploying an ML Model with FastAPI: Hands-On Tutorial

Below is a fully hands-on guide to building a web API for a machine learning model. Before that, let’s look at the folder structure.

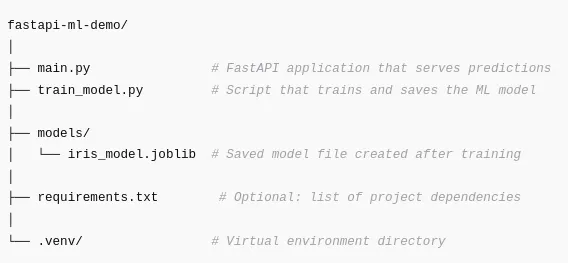

Folder Structure

The folder structure organizes the project files into a simple layout, which makes it easy to see where each part of the application belongs.

Now, let’s see what each part does:

- main.py: Runs FastAPI, loads the trained model, and exposes the prediction endpoint.

- train_model.py: Creates and saves the machine learning model that FastAPI will load.

- models/: Stores trained model artifacts. The training script creates this folder if it doesn’t already exist.

- requirements.txt: Not required, but recommended so others can install everything with one command.

- .venv/: Contains your virtual environment to keep dependencies isolated.
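As a rough sketch, the layout above can be created with a few lines of standard-library Python. The helper name `scaffold_project`, the directory name `iris-api`, and the choice to create empty placeholder files are assumptions made for illustration. The `.venv/` folder is left out because `python -m venv` creates it.

```python
import tempfile
from pathlib import Path
from typing import List

# Folders and files named in the folder structure above.
PROJECT_DIRS = ["models"]
PROJECT_FILES = ["main.py", "train_model.py", "requirements.txt"]


def scaffold_project(root: Path) -> List[Path]:
    """Create the project skeleton under root and return its paths.

    Hypothetical helper: files that already exist are left untouched.
    """
    paths = []
    root.mkdir(parents=True, exist_ok=True)
    for name in PROJECT_DIRS:
        path = root / name
        path.mkdir(exist_ok=True)
        paths.append(path)
    for name in PROJECT_FILES:
        path = root / name
        path.touch(exist_ok=True)  # creates an empty file if it is missing
        paths.append(path)
    return paths


# Try it in a throwaway directory so no real project is touched.
with tempfile.TemporaryDirectory() as tmp:
    created = scaffold_project(Path(tmp) / "iris-api")
    names = sorted(path.name for path in created)

print(names)  # ['main.py', 'models', 'requirements.txt', 'train_model.py']
```

To use it for real, pass the directory where you want the project to live instead of a temporary one.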

Step 1: Project setup

1.1 Create Your Project Directory

Create the project directory that will hold all the code, files, and resources for this project.

1.2 Create a virtual environment

A virtual environment isolates your project’s dependencies from other projects on your computer.

    python -m venv .venv

Activate it:

Windows

    .venv\Scripts\activate

macOS/Linux

    source .venv/bin/activate

Once the environment is active, you should see “(.venv)” at the start of your terminal prompt.
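If the prompt prefix is hidden by your shell theme, you can also check from Python. This is a minimal sketch that relies on the standard `sys.prefix` and `sys.base_prefix` attributes; the function name `in_virtualenv` is just an illustrative choice.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the interpreter is running inside a virtual environment."""
    # Inside a venv, sys.prefix points at the environment, while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


status = "active" if in_virtualenv() else "not active"
print(f"Virtual environment is {status}: {sys.prefix}")
```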

1.3 Install required dependencies

Below is the list of Python libraries we will use for our FastAPI web server:

- FastAPI (the main framework for building web APIs)

- Uvicorn (the ASGI web server for hosting FastAPI applications)

- Scikit-learn (used to train the model)

- Pydantic (for automatic input validation)

- Joblib (for saving and loading ML models)

Install them:

    pip install fastapi uvicorn scikit-learn pydantic joblib

Step 2: Train and save a simple ML model

For this demonstration, we will train a classifier on the classic Iris dataset and save the model to disk. The saved model will then be loaded into our FastAPI web application.

To train and save the model, create a file called train_model.py:

    # train_model.py
    from sklearn.datasets import load_iris
    from sklearn.model_selection import train_test_split
    from sklearn.ensemble import RandomForestClassifier
    import joblib
    from pathlib import Path

    # Folder and file where the trained model artifact is stored
    MODEL_PATH = Path("models")
    MODEL_PATH.mkdir(exist_ok=True)
    MODEL_FILE = MODEL_PATH / "iris_model.joblib"


    def train_and_save_model():
        iris = load_iris()
        X = iris.data
        y = iris.target

        # Hold out 20% of the data for testing, keeping class proportions equal
        X_train, X_test, y_train, y_test = train_test_split(
            X, y, test_size=0.2, random_state=42, stratify=y
        )

        clf = RandomForestClassifier(
            n_estimators=100,
            random_state=42
        )
        clf.fit(X_train, y_train)

        accuracy = clf.score(X_test, y_test)
        print(f"Test accuracy: {accuracy:.3f}")

        # Save the model together with the class and feature names
        joblib.dump(
            {
                "model": clf,
                "target_names": iris.target_names,
                "feature_names": iris.feature_names,
            },
            MODEL_FILE,
        )
        print(f"Saved model to {MODEL_FILE.resolve()}")


    if __name__ == "__main__":
        train_and_save_model()

Install joblib if needed:

    pip install joblib

Run the script:

    python train_model.py

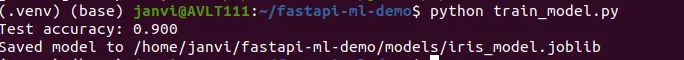

Once the model has been trained successfully, you should see the accuracy printed to the terminal. A new model file is also created, which FastAPI will load.

Step 3: Create a FastAPI app that serves your model’s predictions

In this step, we will create an API that will:

- Load the trained machine learning model on startup

- Define an input schema that validates the data sent to the API

- Expose a /predict endpoint that returns the model’s predictions

Create main.py:

    # main.py
    from fastapi import FastAPI
    from pydantic import BaseModel, Field
    from typing import List
    import joblib
    from pathlib import Path

    MODEL_FILE = Path("models/iris_model.joblib")


    # Input schema: the four Iris measurements
    class IrisFeatures(BaseModel):
        sepal_length: float = Field(..., example=5.1)
        sepal_width: float = Field(..., example=3.5)
        petal_length: float = Field(..., example=1.4)
        petal_width: float = Field(..., example=0.2)


    # Output schema returned by /predict
    class PredictionResult(BaseModel):
        predicted_class: str
        predicted_class_index: int
        probabilities: List[float]


    app = FastAPI(
        title="Iris Classifier API",
        description="A simple FastAPI service that serves an Iris classification model.",
        version="1.0.0",
    )

    # Filled in once at startup by load_model()
    model = None
    target_names = None
    feature_names = None


    @app.on_event("startup")
    def load_model():
        global model, target_names, feature_names
        if not MODEL_FILE.exists():
            raise RuntimeError(
                f"Model file not found at {MODEL_FILE}. "
                f"Run train_model.py first."
            )
        artifact = joblib.load(MODEL_FILE)
        model = artifact["model"]
        target_names = artifact["target_names"]
        feature_names = artifact["feature_names"]
        print("Model loaded successfully.")

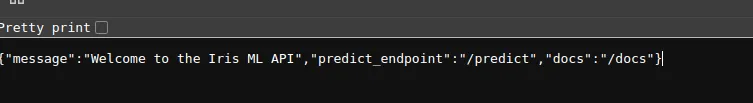

    @app.get("/")
    def root():
        return {
            "message": "Welcome to the Iris ML API",
            "predict_endpoint": "/predict",
            "docs": "/docs",
        }


    @app.post("/predict", response_model=PredictionResult)
    def predict(features: IrisFeatures):
        if model is None:
            raise RuntimeError("Model is not loaded.")

        # scikit-learn expects a 2D input: one row per sample
        X = [[
            features.sepal_length,
            features.sepal_width,
            features.petal_length,
            features.petal_width,
        ]]
        proba = model.predict_proba(X)[0]
        class_index = int(proba.argmax())
        class_name = str(target_names[class_index])

        return PredictionResult(
            predicted_class=class_name,
            predicted_class_index=class_index,
            probabilities=proba.tolist(),
        )

This file contains all the code needed to serve the machine learning model as a web app.
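To make the last few lines of the prediction function concrete, here is a plain-Python sketch of how a probability vector becomes a class label. It mirrors the `proba.argmax()` call followed by the `target_names` lookup, without needing NumPy. The helper name `pick_class` and the sample probabilities are made up for illustration and are not real model output.

```python
from typing import Sequence, Tuple

# Class labels of the Iris dataset, in the order scikit-learn stores them.
TARGET_NAMES = ["setosa", "versicolor", "virginica"]


def pick_class(probabilities: Sequence[float], target_names: Sequence[str]) -> Tuple[int, str]:
    """Return (index, name) of the most probable class.

    Ties resolve to the lowest index, which matches numpy.argmax.
    """
    if len(probabilities) != len(target_names):
        raise ValueError("need exactly one probability per class")
    class_index = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return class_index, target_names[class_index]


# Illustrative probabilities, not real model output.
index, name = pick_class([0.02, 0.91, 0.07], TARGET_NAMES)
print(index, name)  # 1 versicolor
```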

Step 4: Running and testing the API locally

4.1 Start the server

Run:

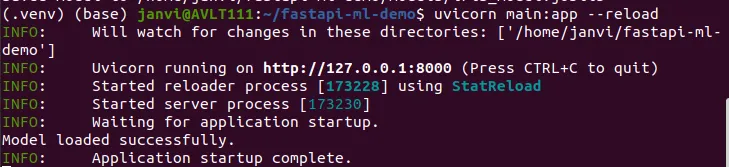

    uvicorn main:app --reload

The app begins at: http://127.0.0.1:8000/

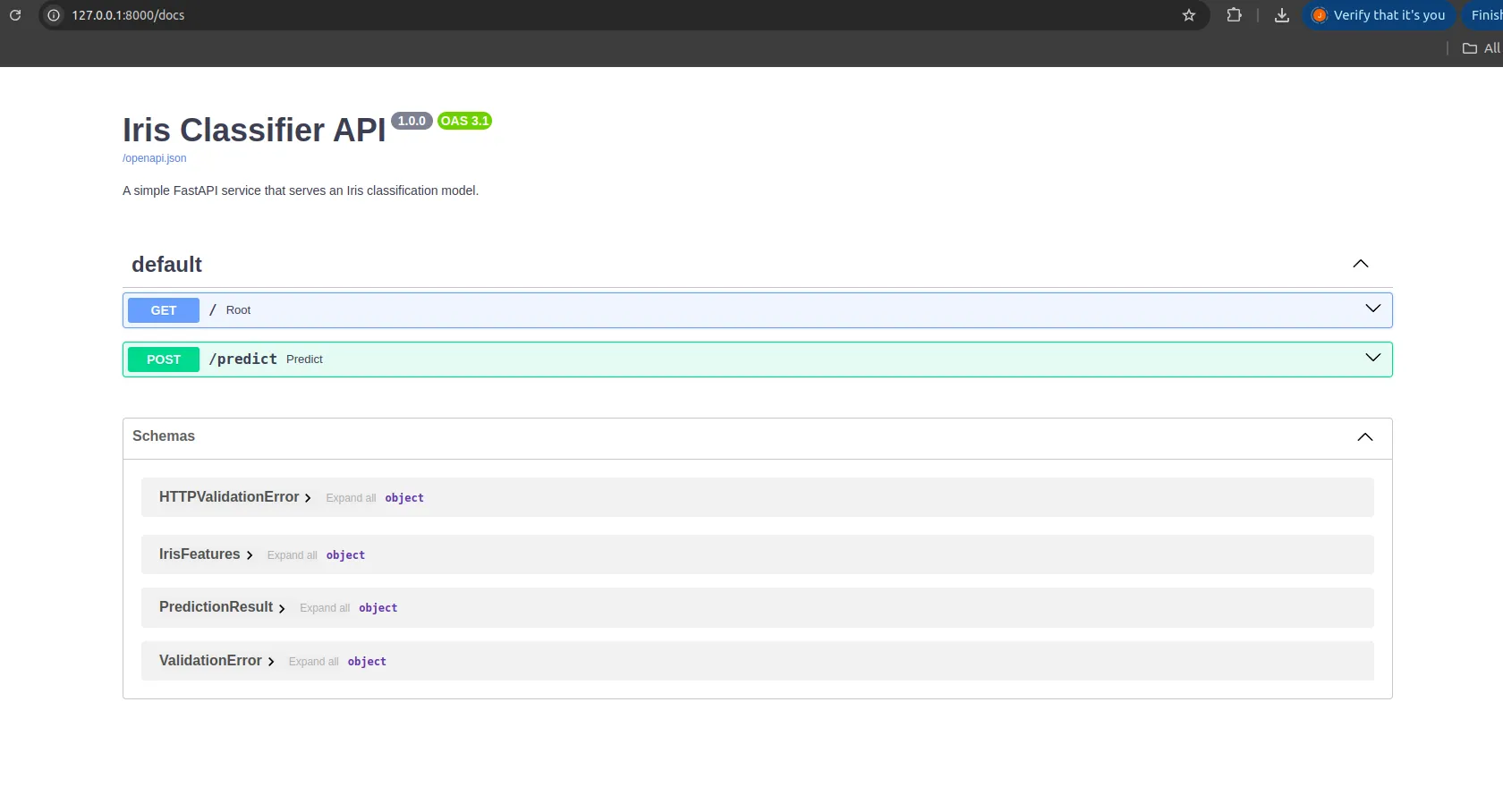

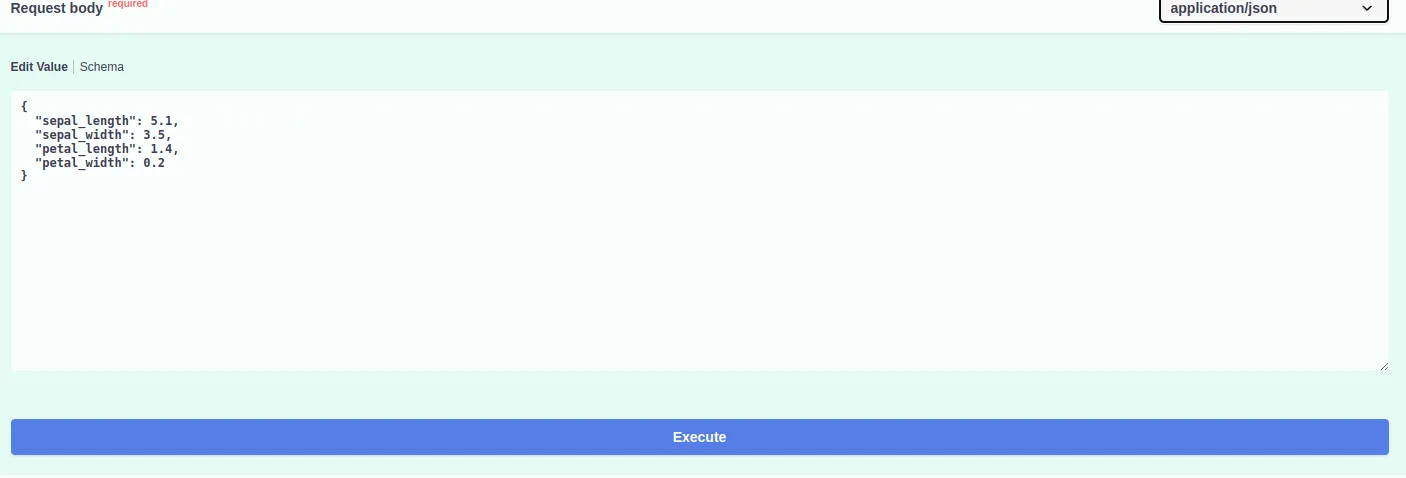

4.2 Testing the API using the interactive documentation provided by FastAPI

FastAPI provides built-in Swagger documentation at: http://127.0.0.1:8000/docs

There you will find:

- A GET endpoint: /

- A POST endpoint: /predict

Try the /predict endpoint by clicking “Try it out” and entering:

    {
      "sepal_length": 5.1,
      "sepal_width": 3.5,
      "petal_length": 1.4,
      "petal_width": 0.2
    }

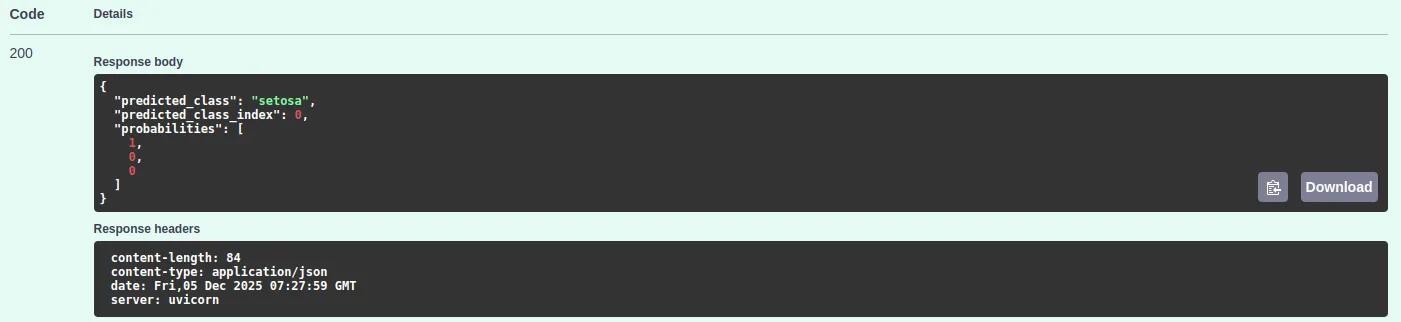

You’ll get a prediction like:

    {
      "predicted_class": "setosa",
      "predicted_class_index": 0,
      "probabilities": [1.0, 0.0, 0.0]
    }

Your ML model is now running as an API on your local machine.
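The Swagger page is convenient for manual checks, but other programs will call the endpoint over HTTP. Below is a minimal client sketch that uses only the standard library. It assumes the server is reachable at the local address shown earlier; `build_predict_request` and `request_prediction` are illustrative helper names, not part of FastAPI.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8000"  # local address used in this tutorial


def build_predict_request(features: dict, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a POST request for /predict with a JSON body."""
    body = json.dumps(features).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/predict",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def request_prediction(features: dict, base_url: str = BASE_URL, timeout: float = 10.0) -> dict:
    """Send the request and decode the JSON response (needs a running server)."""
    request = build_predict_request(features, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


sample = {
    "sepal_length": 5.1,
    "sepal_width": 3.5,
    "petal_length": 1.4,
    "petal_width": 0.2,
}
request = build_predict_request(sample)
print(request.get_method(), request.full_url)
# With the server running, call request_prediction(sample) to get the JSON result.
```

Splitting the request construction from the network call keeps the payload easy to inspect before anything is sent.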

Deploy to the Cloud

Once your FastAPI application works on your local machine, you can deploy it to the cloud so that it is accessible from anywhere. You don’t need to set up containers for this; a few services make it quite easy.

Deploy on Render

Render is one of the fastest ways to put a FastAPI app online.

- Push your project to GitHub.

- Create a new Web Service on Render.

- Set the build command:

    pip install -r requirements.txt

- Set the start command:

    uvicorn main:app --host 0.0.0.0 --port 10000

Render will install your packages, start your app, and give you a public link. Anyone with the link can then send requests to your model.
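Hosting platforms often tell an app which port to listen on through an environment variable rather than a hard-coded number. The sketch below assumes a variable named `PORT` (check your platform's documentation for the actual name) and falls back to the 10000 used in the start command above. `resolve_port` and `uvicorn_command` are hypothetical helper names.

```python
import os
from typing import List, Mapping


def resolve_port(env: Mapping[str, str] = os.environ, default: int = 10000) -> int:
    """Read the port from the PORT variable, falling back to a default."""
    raw = env.get("PORT", "").strip()
    if not raw:
        return default
    port = int(raw)  # raises ValueError on non-numeric input
    if not 1 <= port <= 65535:
        raise ValueError(f"PORT out of range: {port}")
    return port


def uvicorn_command(port: int, app: str = "main:app") -> List[str]:
    """Build the start command shown above as an argument list."""
    return ["uvicorn", app, "--host", "0.0.0.0", "--port", str(port)]


print(" ".join(uvicorn_command(resolve_port({"PORT": "8080"}))))
# uvicorn main:app --host 0.0.0.0 --port 8080
```

The resulting list can be passed to `subprocess.run` from a small launcher script if you prefer not to hard-code the port in the start command.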

Deploy to GitHub Codespaces

If you only want a simple online environment without the extra setup, Codespaces can run your FastAPI app.

- Open your repository in Codespaces.

- Install your dependencies.

- Launch the application:

    uvicorn main:app --host 0.0.0.0 --port 8000

Codespaces forwards the port, so you can open the link directly in your browser. This is handy for testing or for sharing a quick demo.

Deploy on AWS EC2

You can use an EC2 instance if you want to be in control of your own server.

- Launch a small EC2 instance.

- Install Python and pip.

- Clone your project.

- Install the requirements:

    pip install -r requirements.txt

- Start the API:

    uvicorn main:app --host 0.0.0.0 --port 8000

Make sure port 8000 is open in your EC2 security group settings. Your API will then be available at the machine’s public IP address.
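If requests to the instance hang or fail, a quick reachability check helps tell a closed port apart from an application error. This is a minimal sketch using the standard `socket` module; the function name `is_port_open` is illustrative, and the local host below is only a placeholder for your instance's public IP address.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, or DNS failure
        return False


# Replace the host with your instance's public IP address.
print(is_port_open("127.0.0.1", 8000))
```

A result of False from your own machine while the server is running on the instance usually points at the firewall or security rules rather than at the FastAPI code.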

Common Errors and Fixes

Here are a few issues you may run into while building or running the project, along with how to fix them.

Model file not found

This usually means the training script was never run. Run:

    python train_model.py

Then check that the model file appears inside the models/ folder.
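The same check can be scripted. The sketch below assumes the artifact path used by the training script and reports whether the file is missing, empty, or present; `describe_model_file` is a hypothetical helper name.

```python
from pathlib import Path

MODEL_FILE = Path("models") / "iris_model.joblib"  # path used by train_model.py


def describe_model_file(model_file: Path = MODEL_FILE) -> str:
    """Return a one-line status message for the saved model file."""
    if not model_file.exists():
        return f"Missing: {model_file} (run train_model.py first)"
    size = model_file.stat().st_size
    if size == 0:
        return f"Empty: {model_file} (re-run train_model.py)"
    return f"OK: {model_file} ({size} bytes)"


print(describe_model_file())
```

Run it from the project root, since the path is relative to the current working directory.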

Missing libraries

If you see messages about missing modules, make sure your virtual environment is active:

    source .venv/bin/activate

Then reinstall the required libraries:

    pip install fastapi uvicorn scikit-learn pydantic joblib

Uvicorn reload issue

Some commands found online use the wrong kind of dash (an en dash instead of two hyphens).

If this fails:

    uvicorn main:app –reload

Use this instead:

    uvicorn main:app --reload

Browser can’t call the API

If you see CORS errors when a frontend calls the API, add this block to your FastAPI app:

    from fastapi.middleware.cors import CORSMiddleware

    # Allows any origin, method, and header; list specific origins to tighten this
    app.add_middleware(
        CORSMiddleware,
        allow_origins=["*"],
        allow_methods=["*"],
        allow_headers=["*"],
    )

Input shape errors

Scikit-learn expects the input as a list of lists, with one inner list per sample. Make sure your data is shaped like this:

    X = [[
        features.sepal_length,
        features.sepal_width,
        features.petal_length,
        features.petal_width,
    ]]

This avoids most shape-related errors.
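As a small sketch of the same idea outside the endpoint, the helper below turns one sample given as a dictionary into the 2D shape that scikit-learn expects, using the column order of the Iris dataset. The name `to_feature_matrix` is hypothetical, and the explicit check for missing keys is an addition for illustration.

```python
from typing import Dict, List

# Column order of the Iris features the model was trained on.
FEATURE_ORDER = ["sepal_length", "sepal_width", "petal_length", "petal_width"]


def to_feature_matrix(features: Dict[str, float]) -> List[List[float]]:
    """Turn one sample into a 2D list of shape (1, 4)."""
    missing = [name for name in FEATURE_ORDER if name not in features]
    if missing:
        raise ValueError(f"missing features: {missing}")
    row = [float(features[name]) for name in FEATURE_ORDER]
    return [row]  # the outer list holds samples, the inner list holds features


X = to_feature_matrix(
    {"sepal_length": 5.1, "sepal_width": 3.5, "petal_length": 1.4, "petal_width": 0.2}
)
print(X)  # [[5.1, 3.5, 1.4, 0.2]]
```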

Conclusion

Deploying a machine learning model should be simple. With FastAPI, you can create an API that is easy to read and understand in just a few lines of code. FastAPI takes care of much of the setup, validation, and documentation for you, which leaves you free to focus on your model. This approach helps you move from testing and development to real-world use. Whether you are building prototypes, demos, or production services, FastAPI lets you share your models and deploy them quickly and easily.

Frequently Asked Questions

A. It loads your model at startup, validates inputs automatically, exposes clean prediction endpoints, and gives you built-in interactive docs. That keeps your deployment code simple while the framework handles most of the plumbing.

A. The API loads a saved model file on startup.

A. FastAPI ships with Swagger docs at /docs. You can open it in a browser, fill in sample inputs for /predict, and submit a request to see real outputs from your model.
