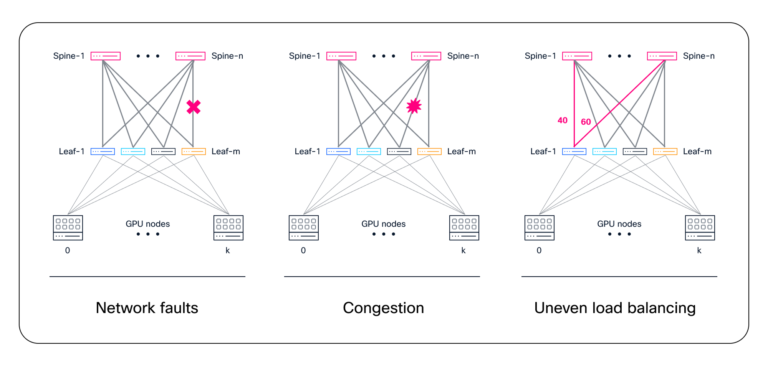

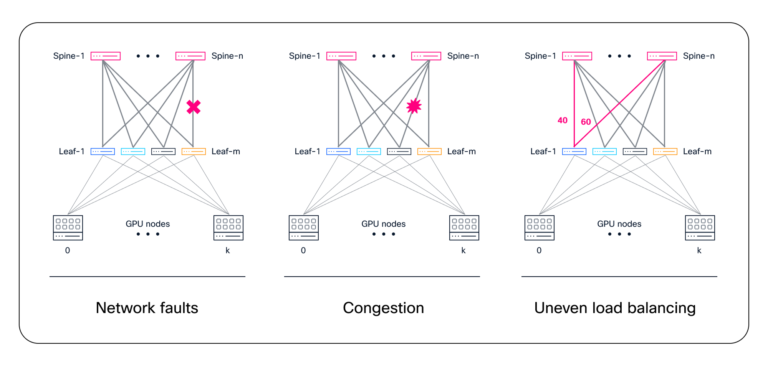

As data centers scale up, scale out, and scale across to meet the demands of artificial intelligence (AI) and high-performance computing (HPC) workloads, networks face growing challenges. Increasing network failures, fabric congestion, and uneven load balancing are becoming critical pain points, threatening both performance and reliability. These issues drive up tail latency and create bottlenecks, undermining the efficiency of large-scale distributed environments.

To address these challenges, the Ultra Ethernet Consortium (UEC) was formed in 2023, spearheading a new, high-performance Ethernet stack designed for these demanding environments. At its core is a scalable congestion control model optimized for microsecond-level latency and the complex, high-volume traffic of AI and HPC. As a UEC steering member, Cisco plays a pivotal role in shaping the foundational technologies driving next-generation Ethernet.

Boosting reliability and efficiency at every layer

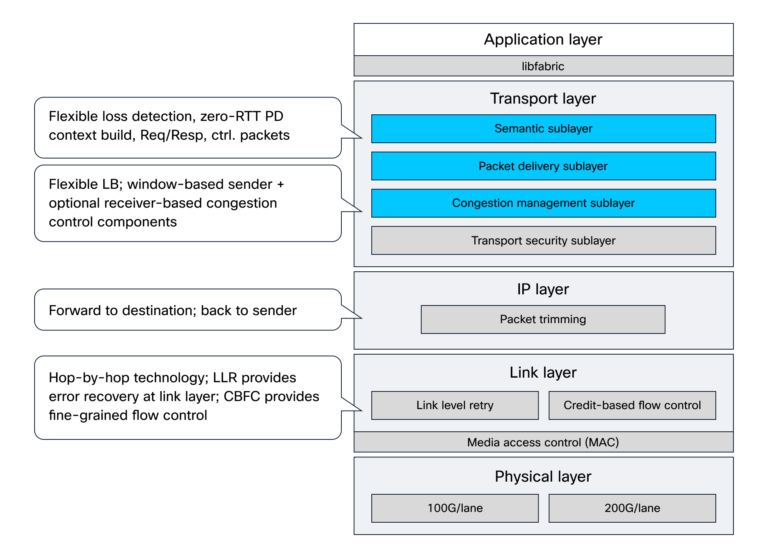

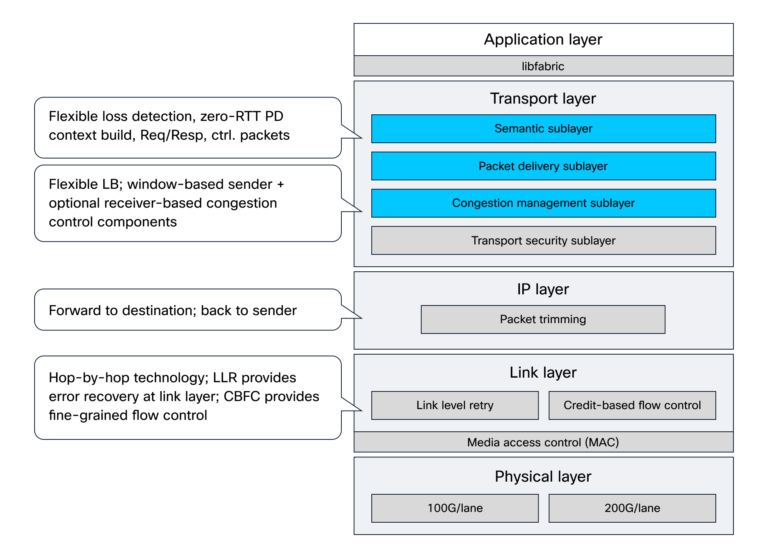

This blog explores some of the latest and emerging UEC innovations across the Ultra Ethernet (UE) network stack, from link layer retry (LLR) and credit-based flow control (CBFC) at the link layer to packet trimming at the IP layer and packet spraying and advanced telemetry features at the transport layer.

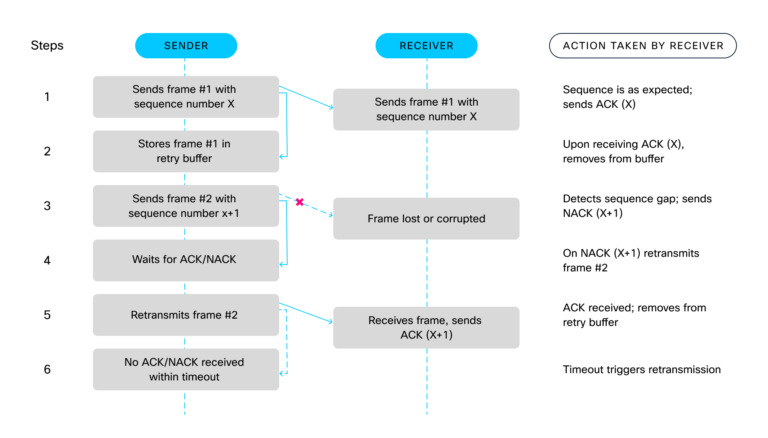

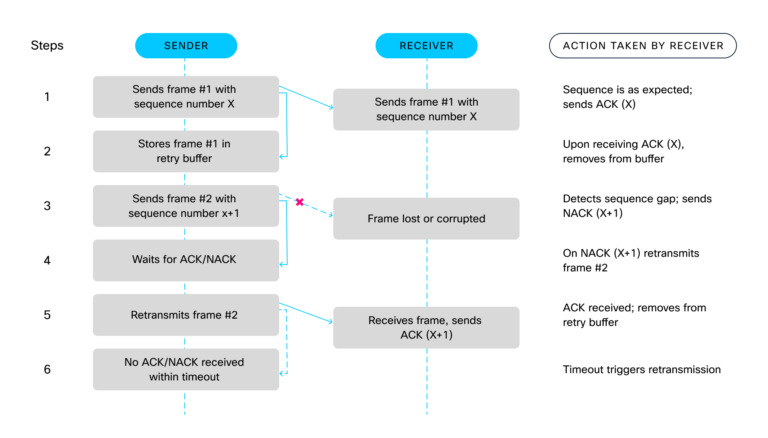

Reliability of link layer retry

LLR operates at the link layer and is designed to enhance reliability on sensitive network links. These links are often vulnerable to minor disruptions, such as intermittent faults or link failures, which can degrade performance and increase tail latency. LLR provides a hop-by-hop retransmission mechanism where packets are buffered at the sender until acknowledged by the receiver. Lost or corrupted packets are selectively retransmitted at the link layer, avoiding higher-level protocol involvement and reducing tail latency.
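To make the buffer-until-acknowledged idea concrete, here is a minimal Python sketch of a hop-by-hop sender. It is a toy model, not the UEC frame format: the class name `LlrSender`, the per-frame sequence numbers, and the `on_ack`/`on_nack` callbacks are all assumptions made for illustration.

```python
class LlrSender:
    """Toy hop-by-hop sender: keeps each frame until the link peer acknowledges it.

    Hypothetical model for illustration only; not the UEC LLR wire protocol.
    """

    def __init__(self):
        self.next_seq = 0
        self.replay = {}  # seq -> frame, still awaiting acknowledgment

    def send(self, frame):
        # Buffer the frame before it goes on the wire so it can be replayed.
        seq = self.next_seq
        self.replay[seq] = frame
        self.next_seq += 1
        return seq, frame

    def on_ack(self, seq):
        # The peer received this frame intact, so the copy can be released.
        self.replay.pop(seq, None)

    def on_nack(self, seq):
        # The peer reported this frame as lost or corrupted: resend only that
        # frame from the local buffer, without involving higher layers.
        return seq, self.replay[seq]


sender = LlrSender()
for frame in (b"a", b"b", b"c"):
    sender.send(frame)
sender.on_ack(0)               # frame 0 arrived, buffer entry released
resent = sender.on_nack(1)     # frame 1 was corrupted, replay it on this hop
```

The point of the sketch is that recovery stays local to one link: the endpoints never see the loss, which is why tail latency improves.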

Advanced flow control

Priority flow control (PFC) enables lossless Layer 2 transmission by pausing traffic when buffers fill, but it requires large headroom, reacts slowly, and adds configuration overhead.

CBFC improves upon these shortcomings with a proactive credit system: senders only transmit when receivers confirm available buffer space. Credits are efficiently tracked with cyclic counters and exchanged via lightweight updates, ensuring data is only sent when it can be received. This prevents drops, reduces buffer requirements, and maintains a lossless fabric with better efficiency and simpler configuration, making it ideal for AI networking.
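The credit accounting can be sketched in a few lines of Python. This is a simplified model under stated assumptions: the 16-bit counter width (`MOD`), the "cell" unit, and the names `CreditSender`, `try_send`, and `on_credit_update` are hypothetical and are not taken from the UEC specification.

```python
MOD = 1 << 16  # assumed counter width; counters wrap around (cyclic)


class CreditSender:
    """Toy credit-based sender that never exceeds the receiver's buffer."""

    def __init__(self, buffer_cells):
        if not 0 < buffer_cells < MOD:
            raise ValueError("buffer must be smaller than the counter range")
        self.buffer_cells = buffer_cells  # receiver buffer size, in cells
        self.sent = 0    # cyclic count of cells transmitted
        self.freed = 0   # cyclic count of cells the receiver has drained

    def available(self):
        # Modular subtraction stays correct even after the counters wrap.
        in_flight = (self.sent - self.freed) % MOD
        return self.buffer_cells - in_flight

    def try_send(self, cells):
        if cells > self.available():
            return False  # hold the data: the receiver could not store it
        self.sent = (self.sent + cells) % MOD
        return True

    def on_credit_update(self, freed_counter):
        # Lightweight update: the receiver only reports its cyclic counter.
        self.freed = freed_counter % MOD


link = CreditSender(buffer_cells=4)
link.try_send(3)            # accepted, 1 cell of credit left
link.try_send(2)            # refused, would overrun the receiver
link.on_credit_update(3)    # receiver drained 3 cells
link.try_send(2)            # accepted now
```

Because the sender holds data rather than relying on a pause frame arriving in time, the receiver does not need PFC-style headroom for traffic already in flight.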

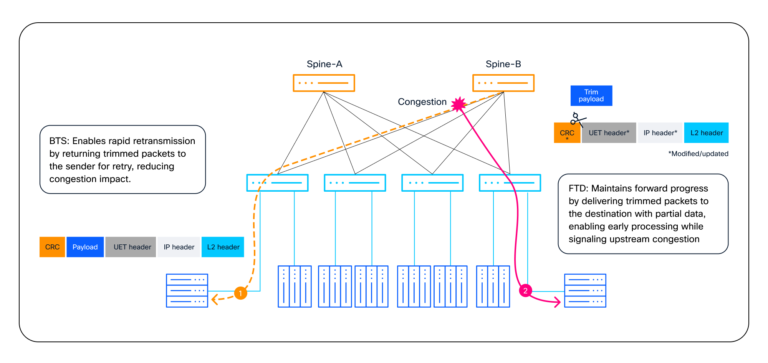

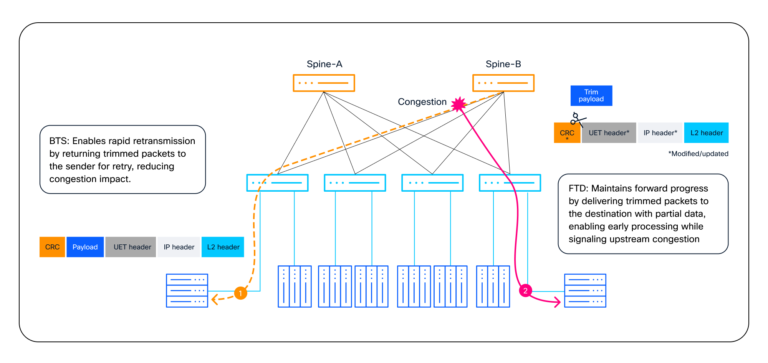

Smarter congestion recovery

Packet trimming operates at the IP layer and enables smarter congestion recovery by retaining packet headers while discarding the payload. When switches detect congestion, they trim the packet and either return the header to the sender (back-to-sender [BTS]) or forward it to the destination (forward-to-destination [FTD]). This mechanism reduces unnecessary retransmissions of entire packets, easing congestion and improving tail latency.

- FTD mode allows the destination to immediately detect incomplete packets and initiate targeted recovery, such as requesting only missing data. The trimmed packet is typically just a few dozen bytes and contains essential control information to inform the receiver of the loss. This enables faster convergence and low-latency retransmissions.

- BTS mode sends a trimmed notification back to the source, allowing it to detect congestion on that specific transmission and proactively retransmit without waiting for a timeout.

Both methods enable graceful recovery without timeouts or loss by using retransmit scheduling that paces retries and, if needed, shifts them to alternate equal-cost multi-paths (ECMPs).
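The two trimming modes differ only in where the header-only packet is sent. The Python sketch below illustrates that decision; the `Packet` fields, the `trimmed` flag, and the `trim` function are assumptions for illustration and do not reflect the actual header layout.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    seq: int
    payload: bytes
    trimmed: bool = False  # hypothetical marker set by the trimming switch


def trim(pkt, mode):
    """Drop the payload, keep the header, and pick who gets notified.

    Returns (next_destination, header_only_packet).
    """
    header_only = replace(pkt, payload=b"", trimmed=True)
    if mode == "FTD":
        # Forward-to-destination: the receiver learns which data is missing.
        return pkt.dst, header_only
    if mode == "BTS":
        # Back-to-sender: the source learns this transmission hit congestion.
        return pkt.src, header_only
    raise ValueError(f"unknown trimming mode: {mode}")


congested = Packet(src="hostA", dst="hostB", seq=7, payload=b"x" * 4096)
notify_ftd, stub_ftd = trim(congested, "FTD")
notify_bts, stub_bts = trim(congested, "BTS")
```

In both cases the sequence number survives the trim, which is what lets the endpoint request or resend exactly the missing data instead of waiting for a timeout.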

Flexible load balancing

Traditional ECMP load balancing assigns each flow to a fixed path using hash-based port selection, but it lacks path control and can cause collisions. Flexible load balancing with packet spraying builds on ECMP: UE introduces an entropy value (EV) field that gives endpoints per-packet control over path selection.

By varying the EV, packet spraying dynamically distributes packets across ECMPs, preventing persistent collisions and improving bandwidth utilization. This reduces traffic polarization, improves load balancing, and fully uses network bandwidth over time. UE allows in-order delivery when needed by fixing the EV, while still supporting adaptive spraying for other flows.
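A short Python sketch shows why the EV gives endpoints this control. It assumes a switch that hashes the source, destination, and EV to choose an uplink; the function name `ecmp_port`, the key layout, and the use of CRC32 as the hash are illustrative assumptions, since real switches use their own hash functions and fields.

```python
import zlib


def ecmp_port(src, dst, ev, num_paths):
    """Pick an equal-cost path from a hash of the flow identity plus the EV."""
    key = f"{src}|{dst}|{ev}".encode()
    return zlib.crc32(key) % num_paths


NUM_PATHS = 4

# Fixed EV: every packet of the flow hashes to the same path (in-order delivery).
pinned = {ecmp_port("hostA", "hostB", 42, NUM_PATHS) for _ in range(64)}

# Varying EV per packet: the same flow is sprayed over several paths.
sprayed = {ecmp_port("hostA", "hostB", ev, NUM_PATHS) for ev in range(64)}
```

Here `pinned` contains a single path, while `sprayed` covers more than one. The switch logic is unchanged from classic ECMP; only the endpoint's choice of EV differs.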

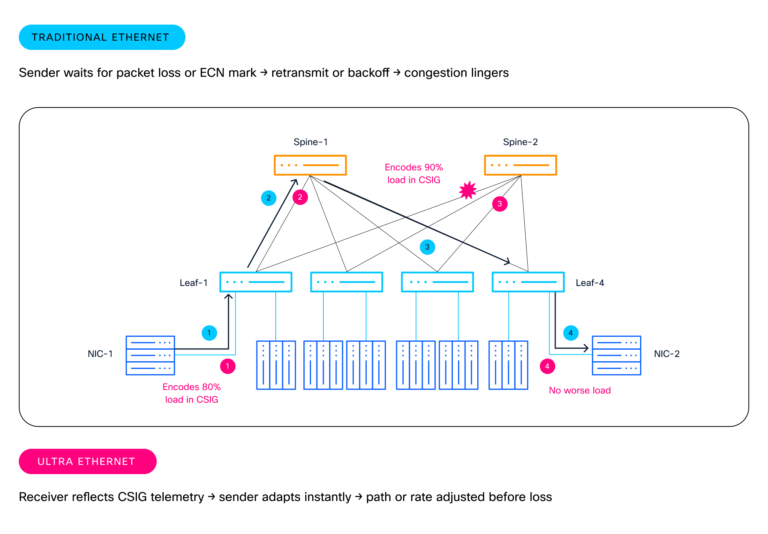

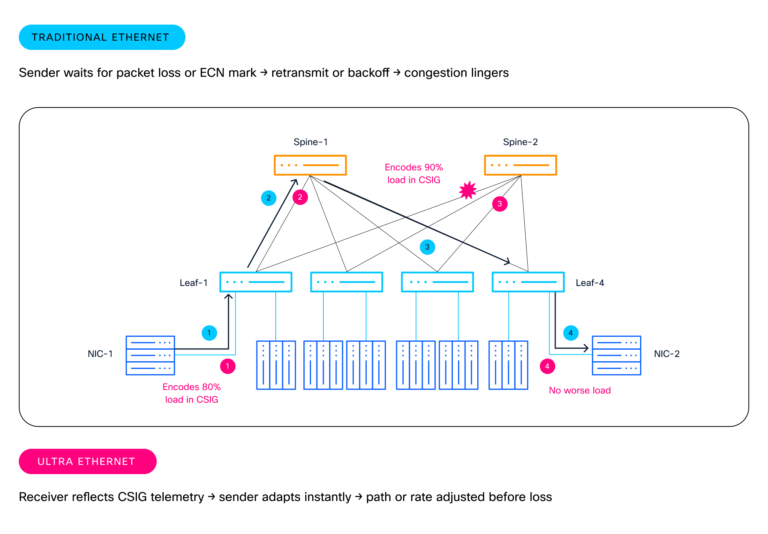

Real-time congestion management

Congestion management in the UE transport layer combines advanced congestion control with fine-grained telemetry and fast response mechanisms. Unlike traditional Ethernet, which relies on reactive signals such as explicit congestion notification (ECN) or packet drops that provide limited visibility into the location and severity of congestion, UEC adds embedded real-time in-band metrics directly into packet headers through congestion signaling (CSIG).

CSIG implements a compare-and-replace model, allowing each device along the path to update the packet with more severe congestion information without increasing the header size. The receiving network interface card (NIC) then reflects this information back to the sender, allowing end hosts to perform adaptive rate control, path selection, and load balancing earlier and with greater accuracy.

UE fabric supports CSIG-tagged packets for congestion management. As the packets traverse the network, each switch updates the CSIG tag if it detects worsening congestion, tracking available bandwidth, utilization, and per-hop delay. Heavily utilized links are immediately encoded in the tag, and the receiver reflects this congestion map back to the sender. Within a single round-trip time (RTT), the sender knows which links are congested and by how much, enabling proactive rate adjustment and alternate path selection.
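The compare-and-replace step can be sketched as follows. This Python model tracks a single metric (link utilization) for simplicity; the `CsigTag` fields, the hop names, and the `traverse` helper are assumptions for illustration, not the CSIG tag format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CsigTag:
    utilization: float  # worst link utilization seen so far (0.0 to 1.0)
    hop_id: str         # hop that reported it


def compare_and_replace(tag, hop_id, utilization):
    """Overwrite the tag only if this hop is more congested than the record.

    The tag is replaced, never extended, so the header size stays constant.
    """
    if utilization > tag.utilization:
        return CsigTag(utilization, hop_id)
    return tag


def traverse(path):
    """Carry a tag across a list of (hop_id, utilization) pairs."""
    tag = CsigTag(0.0, "")
    for hop_id, utilization in path:
        tag = compare_and_replace(tag, hop_id, utilization)
    return tag


# The receiver would reflect this tag to the sender within one RTT.
worst = traverse([("leaf1", 0.30), ("spine2", 0.90), ("leaf4", 0.50)])
```

After the traversal, `worst` identifies `spine2` at 0.90 utilization, which is the information the sender needs to lower its rate or choose a path that avoids that hop.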

Cisco’s leadership in the future of Ultra Ethernet

Cisco is leading the evolution of UE standards, driving critical innovations for AI and machine learning (ML) networking as AI workload demands skyrocket. As UE specifications advance, Cisco remains at the forefront and ensures customers can adopt UE features such as congestion control, intelligent load balancing, and next-gen transport features.

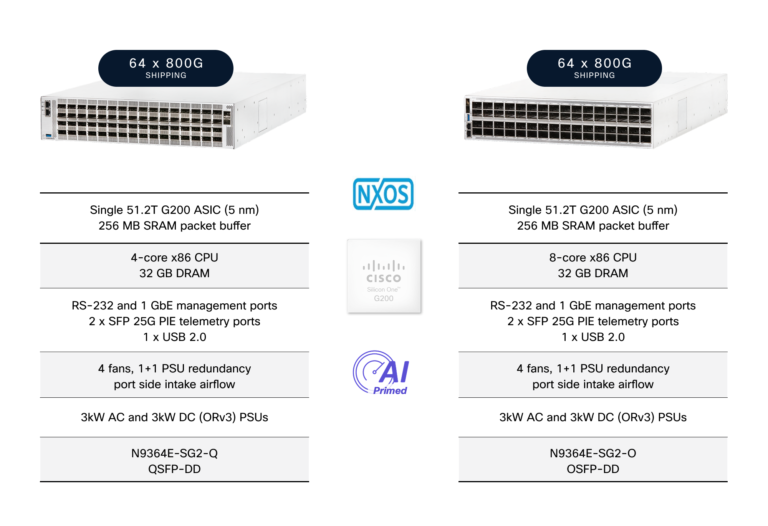

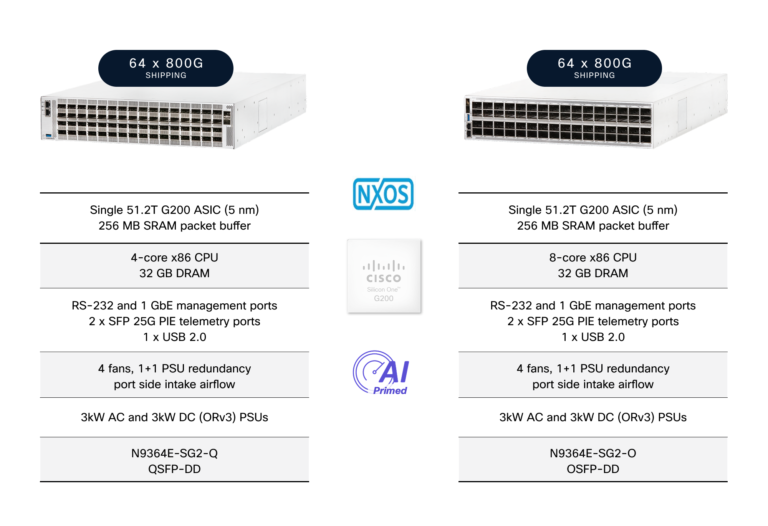

Future-ready networking with Cisco Nexus 9000 Series Switches

Cisco Nexus 9000 Series Switches are engineered to deliver advanced Ethernet capabilities for the next-generation AI infrastructure. They streamline Day-0 deployments and optimize operations from Day 1 with seamless integration and upgradability. With Nexus 9000 switches, organizations can unlock the full potential of high-performance, flexible, and future-proof AI networking.

Enabling scalable AI infrastructure

As AI and HPC workloads redefine data center networking, the UEC’s innovations, powered by Cisco’s leadership, enable data centers to scale with confidence; meet tomorrow’s challenges; and deliver reliable, high-performance infrastructure for the AI era.

Additional Resources: