The huge investments in AI lately are starting to look like a bubble. Yes, of course, there will be a correction. But after technology bubbles, there's often something useful left over that can be an economic powerhouse for decades to come.

In the 1800s, railroads were built all around the world, creating lasting infrastructure that enabled huge economic growth. People forget that many California rail lines were built with narrow-gauge track to support the gold-mining bubble. Narrow-gauge rail was the cheapest way to build a track up a mountain to extract ore. The CAPEX of building the tracks mattered more than the poor OPEX of running trains slowly down the mountain. But these railroads were left as obsolete relics when the world of transportation moved on…they used the wrong standard and were located in the wrong places for modern use.

Similarly, in the 1990s, we experienced another huge infrastructure buildout with fiber construction across the US. The dot-com bubble burst in 2000-01, but the fiber remained in the ground. It forms the backbone of our "information superhighway" today. We've made several upgrades to the transceivers, but the glass fiber is the same fiber that was put in the ground 30 years ago.

In both cases, the "edge" of the railroad and fiber networks was discarded after a short time and replaced by something else. But the central backbone remained. The route of the Transcontinental Railroad, completed at Promontory Summit, remains a key trade route today. The fiber bundles between San Francisco and Chicago are heavily used as well.

Consider the AI boom that's happening now. Everyone knows that it's a bubble…but the players continue to push forward, each hoping that its piece will be the lasting infrastructure that remains after the bubble bursts.

Think about this: the GPU itself is like a train car, or like a fiber-optic transceiver. It will be useful for a few years and then be upgraded to something better. The GPU will not be the lasting infrastructure…but the physical infrastructure will be reused. The data center, the hydro or geothermal power plant, and the connecting fiber will be the equivalent of railroad tracks or fiber-optic cable…assets that create a lasting economic platform. Again, it's the centralized infrastructure that will remain, because it's very hard to predict exactly what will be needed at the edge.

Looking at AI applications, the most critical latency-sensitive and security-sensitive computing is happening on the device:

- We're running AI models on our phones;

- New cars have huge computing horsepower to optimize their physical horsepower; and

- AI/AR glasses are becoming much more appealing to consumers.

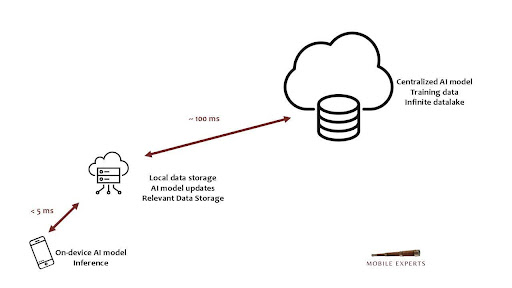

These applications run inference locally on the device, supported by gigantic AI models in centralized data centers thousands of miles away. That leaves us with very little "edge computing" in the middle. As we lay out in detail in a new report released this week, there's a role for telcos in "Sovereign AI" or "Sovereign Edge Computing" to satisfy the desire for national control over computing workloads. But the nature of today's applications simply doesn't require neighborhood-level edge computing at low latency. There is investment at the national level and in the device, but a desert in between.

Issues will change.

One thing that's likely to change: AI models will need updates from huge pools of training data. Devices won't be able to store all of the data needed, so the smaller models running on a device will need faster and faster access to data from a more central data lake and AI model.

That's when the 5G/6G vision for the mobile network will finally happen. It's not happening yet…we can all see that there's no revenue for low-latency GPU-as-a-service today, so telcos spending $15-20K on an ARC computer for every cell site would be a waste. But as the models improve, the inference engine on the device will need richer inputs. We won't be willing to make AI models wait 100 ms or more for every data fetch…so this will create a need for something at the edge of the network.
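To put the 100 ms figure in perspective, here is a rough back-of-the-envelope sketch of fiber propagation delay alone. It assumes light in glass travels at roughly two-thirds of its vacuum speed (about 200 km per millisecond) and uses hypothetical straight-line distances; real paths are longer, and radio access, routing, queuing, and model processing all add to the total.

```python
# Back-of-the-envelope sketch: fiber propagation delay only.
# Ignores radio access, routing, queuing, and model compute time,
# all of which add to the real end-to-end latency.

# Assumption: light in glass fiber travels at roughly 2/3 of c,
# i.e. about 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def round_trip_ms(distance_km: float) -> float:
    """Round-trip propagation delay over a straight fiber path, in milliseconds."""
    return 2 * distance_km / FIBER_KM_PER_MS


# Hypothetical distances, chosen only for illustration.
scenarios = {
    "central data center (~4,800 km, about 3,000 miles)": 4800,
    "regional site (~800 km)": 800,
    "metro edge site (~50 km)": 50,
}

for name, km in scenarios.items():
    print(f"{name}: {round_trip_ms(km):.1f} ms round trip")
```

Under these assumptions, the cross-country round trip uses about 48 ms, roughly half of a 100 ms budget, before any compute or network overhead, while a metro edge site stays around half a millisecond.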

Will this take 5 years? 10 years? I don't know yet.

What I can say is that wireless follows a pattern. First, a business develops hundreds of billions in revenue over wires, and then we take the wires away. It happened for voice, then for email, then for the Internet, and finally for video. We can now do anything on a wireless device that was once done over wires.

Why would we think that AI will develop any differently? It seems clear that the AI business model and ecosystem will develop around huge, trillion-dollar centralized models, loosely coupled with smaller models on handheld devices. When that market gets big enough, applications will start to require something between the central AI model and the handset…some resource in the dead zone between the two extremes.

I believe the Nvidia/Nokia partnership could be crucial by 2035. I don't think it's wise for Nokia to bet its entire mobile division on the ARC platform in the near term. But it could lead to the right strategic product in the long run.