Google’s default search results list 10 organic listings per page. But adding &num=100 to the search result URL would show 100 listings, not 10. It was one of Google’s many specialized search “operators,” until now.
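For readers unfamiliar with URL parameters, here is a minimal sketch of how num=100 was appended to a search URL. The helper name `google_search_url` and the sample query are hypothetical, chosen only for illustration, and per this article Google no longer honors the parameter.

```python
from urllib.parse import urlencode


def google_search_url(query, num=None):
    """Build a Google search URL, optionally with the (now unsupported) num parameter."""
    params = {"q": query}
    if num is not None:
        # Historically, num=100 asked for 100 listings on one page instead of 10.
        params["num"] = num
    return "https://www.google.com/search?" + urlencode(params)


# Hypothetical query, for illustration only.
url = google_search_url("running shoes", num=100)
print(url)  # https://www.google.com/search?q=running+shoes&num=100
```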

This week, Google dropped support for the &num=100 parameter. It’s a telling move. Many search professionals speculate the aim is to restrict AI bots that use the parameter to perform so-called fan-out searches. The collateral damage falls on search engine ranking tools, which have long used the parameter to scrape results for keywords. Many of these tools no longer function, at least for now.

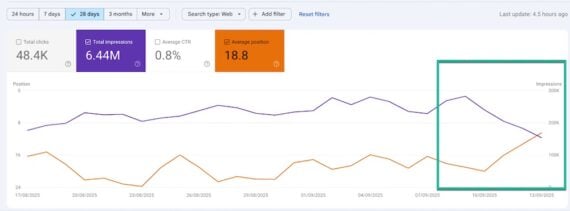

Surprisingly, the move affected Performance data in Search Console. Most site owners now see improvements in average position and declines in the number of impressions.


Google has offered no explanation. Presumably the changes in Performance data reflect that Search Console had been counting searches by bots, not just humans. That’s the unexpected takeaway: Search Console data is at least partially dependent on bots.

In other words, the lost “Impressions” were URLs as shown to bot scrapers, not human searchers. The “Average Position” metric is closely tied to “Impressions,” as Search Console records the topmost position of a URL as seen by searchers. Impressions now decline where the “searchers” were bots.
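The link between the two metrics can be sketched with a small worked example. All numbers below are hypothetical, and the calculation assumes average position is a simple impression-weighted mean, which is a simplification of whatever Search Console does internally.

```python
def summarize(impressions):
    """Return (total impressions, average position) for (position, count) pairs."""
    total = sum(count for _, count in impressions)
    avg_position = sum(pos * count for pos, count in impressions) / total
    return total, avg_position


# Hypothetical data: humans see the URL at position 3, while bots loading
# 100 results per page also "see" it at position 45 for another query.
human_views = [(3, 200)]
bot_views = [(45, 100)]

before = summarize(human_views + bot_views)  # bots counted
after = summarize(human_views)               # bots no longer reach position 45

print(before)  # (300, 17.0)
print(after)   # (200, 3.0)
```

In this sketch, removing the bot views cuts impressions by a third while average position moves from 17 to 3. A numerically lower position is a better ranking, even though the URL itself did not move.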

Thus organic performance data in Search Console now reflects more human impressions and fewer bots. The data better represents actual consumers viewing the listings.

The data remains skewed for top-ranking URLs because page 1 of search results is still accessible to bots, though I know of no way to quantify bot searches versus those of humans.

Adios Rank Tracking?

Search result scrapers require much computing time and energy. Third-party tools will likely raise their prices as, from now on, their bots must “click” to the next page nine times to reach 100 listings.
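The arithmetic behind the nine extra “clicks” is simple. The sketch below assumes 10 listings per page, as described at the top of this article; the function name `requests_needed` is made up for illustration.

```python
import math


def requests_needed(target_listings, per_page=10):
    """Number of result pages a scraper must load to collect target_listings."""
    return math.ceil(target_listings / per_page)


pages = requests_needed(100)   # 10 pages at 10 listings each
extra_clicks = pages - 1       # the first page needs no "next" click

print(pages, extra_clicks)                 # 10 9
print(requests_needed(100, per_page=100))  # 1, the old num=100 behavior
```

Under these assumptions, a scraper that once made a single request per keyword now makes ten, which is why tracking the top 100 positions becomes roughly ten times as expensive in requests.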

Tim Soulo, CMO of Ahrefs, a top SEO platform, hinted today on LinkedIn that the tool would likely report rankings on only the first two pages to remain financially sustainable.

So the future of SEO rank tracking is unclear. Tracking organic search positions will likely become more expensive and produce fewer results (only the top two pages).

What to Do?

- Wait for the Performance section in Search Console to stabilize.

- Consider SEO platforms that integrate with Search Console. For example, SEO Testing allows customers to import and archive the Performance data and annotate industry updates (such as Google’s &num=100 move) for traffic or rankings impact.

To be sure, rank tracking is becoming obsolete. But monitoring organic search positions remains essential for keyword gap analysis and content ideas, among other SEO tasks.