Deep learning architecture refers to the design and arrangement of neural networks that enable machines to learn from data and make intelligent decisions. Inspired by the structure of the human brain, these architectures consist of many layers of interconnected nodes, with each successive layer building more abstract representations. As data passes through these layers, the network learns to recognize patterns, extract features, and perform tasks such as classification, prediction, or generation. Deep learning architectures have brought about a paradigm shift in image recognition, natural language processing, and autonomous systems. They give computers a degree of precision and adaptability in interpreting inputs that was once the domain of human intelligence.

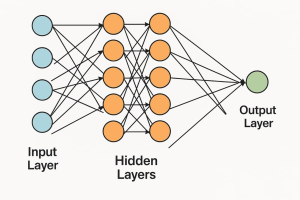

Deep Learning Architecture Diagram:

Diagram Explanation:

This illustration shows a feedforward network, a simple deep learning model in which data travels from input to output in one direction only. It begins with an input layer, where each node might represent one feature, fully connected to the nodes in the next hidden layer. The hidden layers (two layers of five nodes each) transform the data using weights and activation functions, and every node in one layer connects to every node in the next. This dense connectivity helps the network learn complex patterns. The output layer, fully connected to the last hidden layer, produces the final prediction, using a sigmoid for binary classification or a softmax for multi-class classification. The arrows represent weights, which are adjusted during training to minimize the cost function.
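The forward pass through a network like the one in the diagram can be sketched in plain Python. This is a minimal sketch, not a reference implementation. The parts taken from the description above are the two hidden layers of five nodes, full connectivity, and the sigmoid or softmax output. The following are assumptions for illustration: three input features, ReLU in the hidden layers, random initial weights, and the helper names `dense`, `init_layer` and `forward`. Training, meaning the weight updates that minimize the cost function, is not shown.

```python
import math
import random


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def softmax(logits):
    """Turn raw scores into class probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def init_layer(n_in, n_out, rng):
    """Random weights (one row of n_in weights per output node) and zero biases."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(inputs, weights, biases):
    """Fully connected layer: each output node sums every input times its weight."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def forward(x, hidden_layers, output_layer, multiclass=False):
    """Feedforward pass: data moves from input to output in one direction only."""
    h = x
    for weights, biases in hidden_layers:
        h = [relu(z) for z in dense(h, weights, biases)]
    logits = dense(h, *output_layer)
    if multiclass:
        return softmax(logits)
    return [sigmoid(z) for z in logits]


rng = random.Random(0)
# Assumed sizes: 3 input features -> 5 hidden -> 5 hidden.
hidden = [init_layer(3, 5, rng), init_layer(5, 5, rng)]
binary_head = init_layer(5, 1, rng)  # one sigmoid output for binary classification
multi_head = init_layer(5, 4, rng)   # four softmax outputs for a hypothetical 4-class problem

x = [0.2, -1.0, 0.5]
p_binary = forward(x, hidden, binary_head)[0]
p_multi = forward(x, hidden, multi_head, multiclass=True)
```

The sigmoid head yields a single probability for the positive class. The softmax head yields one probability per class, and those probabilities sum to 1.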

Types of Deep Learning Architecture:

- Feedforward Neural Networks (FNNs)

The simplest type of neural network, used for classification and regression. Information flows in one direction, from input to output, and FNNs form the basis for more complex architectures.

- Convolutional Neural Networks (CNNs)

CNNs process image data by applying convolutional layers that detect spatial features. Because they capture local patterns, they are widely used in image classification, object detection, and medical image analysis.
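The core operation of a convolutional layer slides a small kernel over the image and computes a weighted sum at each position. The sketch below makes several simplifying assumptions: stride 1, no padding, a single channel, and a hand-picked kernel. A real CNN learns its kernel values during training, and most deep learning libraries implement this as cross-correlation, as done here.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation over a single channel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A 4x4 image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked vertical-edge detector (an assumption; a CNN would learn this).
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, kernel)
```

Every row of `feature_map` is `[0, 2, 0]`. The kernel responds only where the edge sits, which is the kind of local pattern the paragraph above refers to.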

- Recurrent Neural Networks (RNNs)

RNNs are well suited to sequential data such as time series or text. Their loops carry information, or state, from earlier computations forward in memory, which proves useful in speech recognition and language modeling.
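The recurrent loop can be illustrated with a minimal Elman-style RNN, where h_t = tanh(W_xh x_t + W_hh h_{t-1} + b). This is a sketch only: the weights are hypothetical values rather than trained ones, and the helper names (`rnn_step`, `run_rnn`) are introduced here for illustration.

```python
import math


def matvec(matrix, vector):
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def rnn_step(x, h_prev, w_xh, w_hh, b):
    """One Elman RNN step: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b)."""
    return [
        math.tanh(xi + hi + bi)
        for xi, hi, bi in zip(matvec(w_xh, x), matvec(w_hh, h_prev), b)
    ]


def run_rnn(sequence, w_xh, w_hh, b):
    """Process a sequence step by step, feeding each hidden state into the next step."""
    h = [0.0] * len(b)  # initial state
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b)  # the recurrent loop
        states.append(h)
    return states


# Hypothetical weights: 1 input feature, 2 hidden units.
w_xh = [[0.5], [-0.3]]
w_hh = [[0.1, 0.2], [0.0, 0.4]]
b = [0.0, 0.0]

# A single input pulse followed by zeros.
states = run_rnn([[1.0], [0.0], [0.0]], w_xh, w_hh, b)
```

The hidden states at the second and third steps are nonzero even though those inputs are zero, because the state carries information about the earlier pulse. With these small weights that influence shrinks at each step.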

- Long Short-Term Memory Networks (LSTMs)

LSTMs, a type of RNN, can learn long-term dependencies because they use gates to control the flow of information through the cell. Their main uses include machine translation, music generation, and text prediction.
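The gating idea can be shown with a single LSTM cell using the standard forget, input and output gates plus a candidate value. For brevity, this sketch assumes a scalar input and a hidden size of 1. The parameter values are hypothetical and were chosen so that the forget gate stays close to 1.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h, c, p):
    """One LSTM step with scalar input and state (hidden size 1 for brevity)."""
    f = sigmoid(p["wf"] * x + p["uf"] * h + p["bf"])    # forget gate: how much of c to keep
    i = sigmoid(p["wi"] * x + p["ui"] * h + p["bi"])    # input gate: how much new info to write
    o = sigmoid(p["wo"] * x + p["uo"] * h + p["bo"])    # output gate: how much of c to expose
    g = math.tanh(p["wg"] * x + p["ug"] * h + p["bg"])  # candidate cell value
    c = f * c + i * g
    h = o * math.tanh(c)
    return h, c


# Hypothetical parameters: a large forget-gate bias keeps f near 0.993.
params = {"wf": 0.0, "uf": 0.0, "bf": 5.0,
          "wi": 1.0, "ui": 0.0, "bi": 0.0,
          "wo": 0.0, "uo": 0.0, "bo": 5.0,
          "wg": 1.0, "ug": 0.0, "bg": 0.0}

h, c = 0.0, 0.0
for x in [1.0] + [0.0] * 20:  # one input pulse, then 20 empty steps
    h, c = lstm_step(x, h, c, params)
```

After the pulse is written, the cell value is about 0.56. Twenty steps later it is still close to 0.49, because the forget gate lets the cell hold information across many steps.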

- Variational Autoencoders (VAEs)

By adding probabilistic elements, a VAE extends the traditional autoencoder and can therefore generate new data samples. VAEs are used for generative modeling of images and text.
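The probabilistic part of a VAE can be sketched with the commonly used diagonal-Gaussian latent space. The encoder outputs a mean and a log-variance. A latent code is then sampled with the reparameterization trick, and a KL-divergence term pulls the latent distribution toward a standard normal. The encoder and decoder networks are omitted, and the numbers below are hypothetical encoder outputs.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), so z stays differentiable in mu and sigma."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


rng = random.Random(0)
# Hypothetical encoder output for one input: a 2-D latent Gaussian.
mu, log_var = [0.5, -1.0], [0.0, -0.5]
z = reparameterize(mu, log_var, rng)    # latent code that would be fed to the decoder
kl = kl_to_standard_normal(mu, log_var)  # regularizer added to the reconstruction loss

# Generating new data: sample from the prior N(0, I) and pass it to the (omitted) decoder.
z_new = [rng.gauss(0.0, 1.0) for _ in range(2)]
```

Because training keeps the latent codes close to N(0, I), decoding fresh samples from that prior produces new data. This is what separates a VAE from a plain autoencoder.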

- Generative Adversarial Networks (GANs)

GANs pit two networks, a generator and a discriminator, against each other to create realistic data. They are known for producing high-quality images, deepfakes, and art.
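The adversarial setup comes down to two opposing losses computed from the discriminator's output probabilities. The sketch below assumes the widely used binary cross-entropy formulation with the non-saturating generator loss. The networks themselves are omitted, and the discriminator outputs are hypothetical values.

```python
import math

EPS = 1e-12  # guards against log(0)


def bce(p, target):
    """Binary cross-entropy for one predicted probability p and a 0/1 target."""
    return -(target * math.log(p + EPS) + (1.0 - target) * math.log(1.0 - p + EPS))


def discriminator_loss(d_real, d_fake):
    """D should output 1 for real samples and 0 for generated ones."""
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def generator_loss(d_fake):
    """Non-saturating generator loss: G wants D to output 1 on its samples."""
    return bce(d_fake, 1.0)


# Hypothetical discriminator outputs at two points in training.
early = {"d_real": 0.9, "d_fake": 0.1}  # D easily spots fakes
later = {"d_real": 0.6, "d_fake": 0.5}  # G's samples now fool D about half the time

for name, s in [("early", early), ("later", later)]:
    print(name,
          "D loss:", round(discriminator_loss(s["d_real"], s["d_fake"]), 3),
          "G loss:", round(generator_loss(s["d_fake"]), 3))
```

As the generator improves, its loss falls and the discriminator's loss rises. Training alternates gradient steps on these two objectives.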

- Transformers

Transformers use self-attention to process sequences in parallel, which makes them excellent models for natural language processing. Models such as BERT, GPT, and T5 use the Transformer as their backbone.
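Self-attention can be sketched as single-head scaled dot-product attention, softmax(QKᵀ/√d_k)V. This is a simplified sketch. The identity projection matrices are an assumption chosen for readability (real models learn W_q, W_k and W_v), and multi-head attention, masking and positional encodings are left out.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over x (n tokens x d_model)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    out, weights = [], []
    # Each position is computed independently of the others, which is why
    # real implementations compute all positions at once as matrix products.
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        alpha = softmax(scores)  # how much this token attends to every token
        weights.append(alpha)
        out.append([sum(a * vj[d] for a, vj in zip(alpha, v)) for d in range(len(v[0]))])
    return out, weights


# 3 tokens with 2-D embeddings; identity projections are an illustrative assumption.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, weights = self_attention(x, identity, identity, identity)
```

Each row of `weights` is a probability distribution over all tokens in the sequence. Every output is therefore a weighted mix of the whole sequence, with no step-by-step recurrence.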

- Graph Neural Networks (GNNs)

GNNs operate on graph-structured data, for example social networks or molecular structures. They learn representations by aggregating information from neighboring nodes, which makes them powerful for relational reasoning.
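Neighbor aggregation can be illustrated with one message-passing layer that averages neighbor features and combines the result with the node's own feature. This sketch assumes mean aggregation, a ReLU, and scalar features with hand-picked weights. Real GNN variants differ in how they aggregate and use learned weight matrices.

```python
def gnn_layer(features, adjacency, w_self, w_neigh):
    """One message-passing layer with mean aggregation over neighbors (scalar features)."""
    new = {}
    for node, h in features.items():
        neighbors = adjacency[node]
        agg = sum(features[u] for u in neighbors) / len(neighbors) if neighbors else 0.0
        new[node] = max(0.0, w_self * h + w_neigh * agg)  # ReLU
    return new


# Hypothetical 4-node path graph: a - b - c - d. Only node a starts with a signal.
adjacency = {"a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c"]}
features = {"a": 1.0, "b": 0.0, "c": 0.0, "d": 0.0}

h1 = gnn_layer(features, adjacency, w_self=0.5, w_neigh=1.0)
h2 = gnn_layer(h1, adjacency, w_self=0.5, w_neigh=1.0)
```

After one layer, the signal from `a` has reached only its direct neighbor `b`. After two layers it has also reached `c`, two hops away. Stacking layers widens each node's view of the graph.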

- Autoencoders

These are unsupervised models that learn to compress and then reconstruct data. Autoencoders are also used for dimensionality reduction, anomaly detection, and image denoising.
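The compress-then-reconstruct idea, and its use for anomaly detection, can be shown with a tiny linear autoencoder that has a one-dimensional bottleneck. The weights here are hand-picked stand-ins for trained ones, an assumption suited to data lying near the line x1 = x2. The anomaly threshold is also hypothetical.

```python
def encode(x, w_enc):
    """Compress a 2-D point to a 1-D code (the bottleneck)."""
    return sum(w * xi for w, xi in zip(w_enc, x))


def decode(z, w_dec):
    """Reconstruct a 2-D point from the 1-D code."""
    return [w * z for w in w_dec]


def reconstruction_error(x, w_enc, w_dec):
    x_hat = decode(encode(x, w_enc), w_dec)
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


# Hand-picked weights standing in for trained ones (assumption).
w_enc, w_dec = [0.5, 0.5], [1.0, 1.0]
THRESHOLD = 1.0  # hypothetical anomaly threshold

normal_point = [2.0, 2.1]
anomaly = [2.0, -2.0]
for point in (normal_point, anomaly):
    err = reconstruction_error(point, w_enc, w_dec)
    print(point, round(err, 4), "anomaly" if err > THRESHOLD else "normal")
```

Points resembling the training data reconstruct almost perfectly. Points that do not fit the learned structure produce a large reconstruction error, which can be used to flag them.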

- Deep Belief Networks (DBNs)

DBNs are networks built from multiple layers of restricted Boltzmann machines. They are used for unsupervised feature learning and for pretraining deep networks, which are then fine-tuned with supervised learning.
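Greedy layer-wise pretraining can be sketched with a stack of restricted Boltzmann machines, each trained with one-step contrastive divergence (CD-1) on the outputs of the layer below. This is an illustrative sketch rather than a tuned implementation. The layer sizes, learning rate, epoch count and toy data are all assumptions, and the supervised fine-tuning stage is not shown.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class RBM:
    """Restricted Boltzmann machine: visible and hidden units, no links within a layer."""

    def __init__(self, n_visible, n_hidden, rng):
        self.rng = rng
        self.w = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
        self.b_v = [0.0] * n_visible
        self.b_h = [0.0] * n_hidden

    def hidden_probs(self, v):
        return [sigmoid(self.b_h[j] + sum(v[i] * self.w[i][j] for i in range(len(v))))
                for j in range(len(self.b_h))]

    def visible_probs(self, h):
        return [sigmoid(self.b_v[i] + sum(h[j] * self.w[i][j] for j in range(len(h))))
                for i in range(len(self.b_v))]

    def cd1(self, v0, lr=0.1):
        """One contrastive-divergence (CD-1) update from a single training vector."""
        ph0 = self.hidden_probs(v0)
        h0 = [1.0 if self.rng.random() < p else 0.0 for p in ph0]  # sample hidden states
        v1 = self.visible_probs(h0)                                # reconstruction
        ph1 = self.hidden_probs(v1)
        for i in range(len(v0)):
            for j in range(len(ph0)):
                self.w[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
            self.b_v[i] += lr * (v0[i] - v1[i])
        for j in range(len(ph0)):
            self.b_h[j] += lr * (ph0[j] - ph1[j])


def pretrain_dbn(data, layer_sizes, epochs, rng):
    """Greedy layer-wise pretraining: each RBM learns from the activations of the one below."""
    rbms, inputs = [], data
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        rbm = RBM(n_in, n_out, rng)
        for _ in range(epochs):
            for v in inputs:
                rbm.cd1(v)
        rbms.append(rbm)
        inputs = [rbm.hidden_probs(v) for v in inputs]  # features passed up the stack
    return rbms


rng = random.Random(0)
# Toy binary data with two obvious patterns (illustrative only).
data = [[1.0, 1.0, 0.0, 0.0], [1.0, 1.0, 0.0, 0.0],
        [0.0, 0.0, 1.0, 1.0], [0.0, 0.0, 1.0, 1.0]]
rbms = pretrain_dbn(data, [4, 3, 2], epochs=50, rng=rng)

# Top-level features for the first example; supervised fine-tuning would start from here.
features = rbms[1].hidden_probs(rbms[0].hidden_probs(data[0]))
```

Each RBM is trained without labels. The stacked weights then serve as the initialization that the supervised fine-tuning step described above would adjust.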

Conclusion:

Deep learning architectures are the backbone of modern AI systems. Each type, whether a simple feedforward network or a sophisticated transformer, has distinct strengths suited to particular applications. As deep learning continues to evolve, hybrid architectures and efficient models are poised to spark breakthroughs in healthcare, autonomous systems, and generative AI.