In this tutorial, we walk through the complete implementation of an advanced AI agent system powered by Nomic Embeddings and Google's Gemini. We design the architecture from the ground up, integrating semantic memory, contextual reasoning, and multi-agent orchestration into a single intelligent framework. Using LangChain, Faiss, and LangChain-Nomic, we equip our agents with the ability to store, retrieve, and reason over information using natural language queries. The goal is to demonstrate how we can build a modular and extensible AI system that supports both analytical research and friendly conversation.

!pip install -qU langchain-nomic langchain-core langchain-community langchain-google-genai faiss-cpu numpy matplotlib

import os
import getpass
import numpy as np
from typing import List, Dict, Any, Optional
from dataclasses import dataclass
from langchain_nomic import NomicEmbeddings
from langchain_core.vectorstores import InMemoryVectorStore
from langchain_core.documents import Document
from langchain_google_genai import ChatGoogleGenerativeAI
import json

# Prompt for each API key only if it is not already set in the environment
if not os.getenv("NOMIC_API_KEY"):
    os.environ["NOMIC_API_KEY"] = getpass.getpass("Enter your Nomic API key: ")

if not os.getenv("GOOGLE_API_KEY"):
    os.environ["GOOGLE_API_KEY"] = getpass.getpass("Enter your Google API key (for Gemini): ")

We begin by installing all the required libraries, including langchain-nomic, langchain-google-genai, and faiss-cpu, to support our agent's embedding, reasoning, and vector search capabilities. Note that although faiss-cpu is installed, the code in this walkthrough stores vectors in LangChain's InMemoryVectorStore rather than in a Faiss index. We then import the necessary modules and set our Nomic and Google API keys with getpass, which hides the input as it is typed and keeps the keys out of the notebook source.
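The key-handling pattern above can be wrapped in a small helper so that it is reusable and can be exercised without a terminal. The following is a minimal sketch, not part of the original notebook: the helper name `ensure_env_key` and its `prompt_fn` parameter are hypothetical, introduced only for illustration.

```python
import getpass
import os
from typing import Callable


def ensure_env_key(name: str, prompt: str,
                   prompt_fn: Callable[[str], str] = getpass.getpass) -> bool:
    """Set os.environ[name] from a hidden prompt if it is missing.

    Returns True when the user was prompted and False when the variable
    was already set. `ensure_env_key` and `prompt_fn` are illustrative
    names, not part of any library.
    """
    if os.getenv(name):
        return False  # already configured, nothing to do
    os.environ[name] = prompt_fn(prompt)
    return True


# Typical use in a notebook (commented out so the sketch never blocks on input):
# ensure_env_key("NOMIC_API_KEY", "Enter your Nomic API key: ")
# ensure_env_key("GOOGLE_API_KEY", "Enter your Google API key (for Gemini): ")
```

Passing the prompt function as a parameter is a design choice made here so that the helper can be tested with a stub instead of real keyboard input.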

@dataclass
class AgentMemory:
    """Agent's episodic and semantic memory"""
    episodic: List[Dict[str, Any]]
    semantic: Dict[str, Any]
    working: Dict[str, Any]


class IntelligentAgent:
    """Advanced AI Agent with Nomic Embeddings for semantic reasoning"""

    def __init__(self, agent_name: str = "AIAgent", personality: str = "helpful"):
        self.name = agent_name
        self.personality = personality

        # Embedding model used for both the knowledge base and episodic memory
        self.embeddings = NomicEmbeddings(
            model="nomic-embed-text-v1.5",
            dimensionality=384,
            inference_mode="remote"
        )

        # Gemini LLM used to generate the final responses
        self.llm = ChatGoogleGenerativeAI(
            model="gemini-1.5-flash",
            temperature=0.7,
            max_tokens=512
        )

        self.memory = AgentMemory(
            episodic=[],
            semantic={},
            working={}
        )

        self.knowledge_base = None
        self.vector_store = None

        self.capabilities = {
            "reasoning": True,
            "memory_retrieval": True,
            "knowledge_search": True,
            "context_awareness": True,
            "learning": True
        }

        print(f"🤖 {self.name} initialized with Nomic embeddings + Gemini LLM")

    def add_knowledge(self, documents: List[str], metadata: Optional[List[Dict]] = None):
        """Add knowledge to agent's semantic memory"""
        if metadata is None:
            metadata = [{"source": f"doc_{i}"} for i in range(len(documents))]

        docs = [Document(page_content=doc, metadata=meta)
                for doc, meta in zip(documents, metadata)]

        # Create the vector store on first use, then append to it afterwards
        if self.vector_store is None:
            self.vector_store = InMemoryVectorStore.from_documents(docs, self.embeddings)
        else:
            self.vector_store.add_documents(docs)

        print(f"📚 Added {len(documents)} documents to knowledge base")

    def remember_interaction(self, user_input: str, agent_response: str, context: Optional[Dict] = None):
        """Store interaction in episodic memory"""
        memory_entry = {
            # Sequence index of the interaction, not a wall-clock time
            "timestamp": len(self.memory.episodic),
            "user_input": user_input,
            "agent_response": agent_response,
            "context": context or {},
            "embedding": self.embeddings.embed_query(f"{user_input} {agent_response}")
        }
        self.memory.episodic.append(memory_entry)

    def retrieve_similar_memories(self, query: str, k: int = 3) -> List[Dict]:
        """Retrieve similar past interactions"""
        if not self.memory.episodic:
            return []

        query_embedding = self.embeddings.embed_query(query)
        similarities = []

        # Score every stored interaction against the query by dot product
        for memory in self.memory.episodic:
            similarity = np.dot(query_embedding, memory["embedding"])
            similarities.append((similarity, memory))

        similarities.sort(reverse=True, key=lambda x: x[0])
        return [mem for _, mem in similarities[:k]]

    def search_knowledge(self, query: str, k: int = 3) -> List[Document]:
        """Search knowledge base for relevant information"""
        if self.vector_store is None:
            return []
        return self.vector_store.similarity_search(query, k=k)

    def reason_and_respond(self, user_input: str) -> str:
        """Main reasoning pipeline with context integration"""
        similar_memories = self.retrieve_similar_memories(user_input, k=2)
        relevant_docs = self.search_knowledge(user_input, k=3)

        context = {
            "similar_memories": similar_memories,
            "relevant_knowledge": [doc.page_content for doc in relevant_docs],
            "working_memory": self.memory.working
        }

        response = self._generate_contextual_response(user_input, context)

        self.remember_interaction(user_input, response, context)

        self.memory.working["last_query"] = user_input
        self.memory.working["last_response"] = response

        return response

    def _generate_contextual_response(self, query: str, context: Dict) -> str:
        """Generate response using Gemini LLM with context"""
        context_info = ""

        if context["relevant_knowledge"]:
            context_info += f"Relevant Knowledge: {' '.join(context['relevant_knowledge'][:2])}\n"

        if context["similar_memories"]:
            memory = context["similar_memories"][0]
            context_info += f"Similar Past Interaction: User asked '{memory['user_input']}', I responded '{memory['agent_response'][:100]}...'\n"

        prompt = f"""You are {self.name}, an AI agent with personality: {self.personality}.

Context Information:
{context_info}

User Query: {query}

Please provide a helpful response based on the context. Keep it concise (under 150 words) and maintain your personality."""

        try:
            response = self.llm.invoke(prompt)
            return response.content.strip()
        except Exception:
            # If the LLM call fails, fall back to retrieved knowledge or memory
            if context["relevant_knowledge"]:
                knowledge_summary = " ".join(context["relevant_knowledge"][:2])
                return f"Based on my knowledge: {knowledge_summary[:200]}..."
            elif context["similar_memories"]:
                last_memory = context["similar_memories"][0]
                return f"I recall a similar question. Previously: {last_memory['agent_response'][:150]}..."
            else:
                return "I need more information to provide a comprehensive answer."

We define the core structure of our intelligent agent by creating a memory system that mimics episodic and semantic recall. We integrate Nomic embeddings for semantic understanding and use the Gemini LLM to generate contextual, personality-driven responses. Each call to reason_and_respond retrieves the two most similar past interactions and the three most relevant documents, passes them to Gemini as context, and then stores the new exchange in episodic memory so later queries can draw on it. If the LLM call fails, the agent falls back to a response built directly from the retrieved knowledge or memory.
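The episodic retrieval step described above can be tried without any API keys. The sketch below is an illustration under stated assumptions, not the tutorial's implementation: `toy_embed` is a hypothetical bag-of-words stand-in for the Nomic embedding model, and `EpisodicMemory` is an illustrative class name. It also normalizes vectors explicitly, because a dot product equals cosine similarity only when both vectors have unit length; the tutorial code ranks by the raw dot product.

```python
import math
from collections import Counter
from typing import Dict, List


def toy_embed(text: str) -> Dict[str, float]:
    """Stand-in for a real embedding model: a unit-length bag-of-words vector."""
    counts = Counter(text.lower().split())
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {word: c / norm for word, c in counts.items()} if norm else {}


def dot(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Dot product of two sparse vectors (cosine similarity for unit vectors)."""
    return sum(value * b.get(word, 0.0) for word, value in a.items())


class EpisodicMemory:
    """Stores past interactions and ranks them by similarity to a query."""

    def __init__(self) -> None:
        self.entries: List[dict] = []

    def remember(self, user_input: str, agent_response: str) -> None:
        # Embed the question and the answer together, as the agent does
        self.entries.append({
            "user_input": user_input,
            "agent_response": agent_response,
            "embedding": toy_embed(f"{user_input} {agent_response}"),
        })

    def recall(self, query: str, k: int = 3) -> List[dict]:
        # Rank all stored entries by similarity and keep the top k
        query_vec = toy_embed(query)
        ranked = sorted(self.entries,
                        key=lambda e: dot(query_vec, e["embedding"]),
                        reverse=True)
        return ranked[:k]


memory = EpisodicMemory()
memory.remember("what is deep learning", "neural networks with many layers")
memory.remember("what is robotics", "machines that act in the physical world")
top = memory.recall("tell me about neural networks", k=1)
print(top[0]["user_input"])  # prints: what is deep learning
```

The linear scan over every stored entry matches the approach in the agent class and is fine for a handful of interactions; a longer-lived agent would likely want an indexed store instead.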

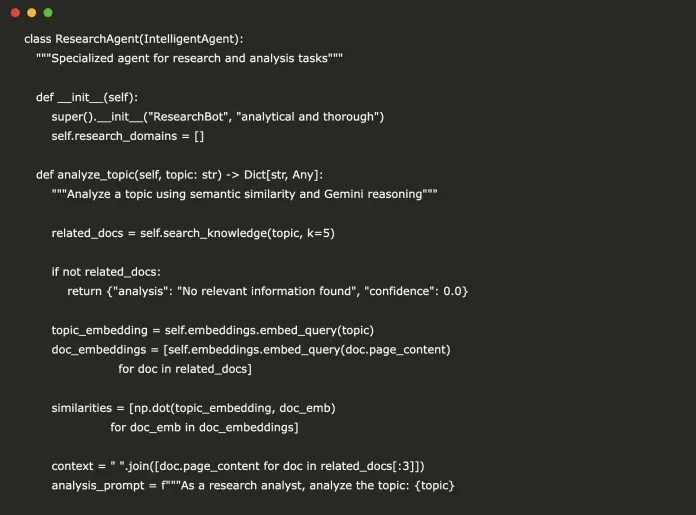

class ResearchAgent(IntelligentAgent):
    """Specialized agent for research and analysis tasks"""

    def __init__(self):
        super().__init__("ResearchBot", "analytical and thorough")
        self.research_domains = []

    def analyze_topic(self, topic: str) -> Dict[str, Any]:
        """Analyze a topic using semantic similarity and Gemini reasoning"""
        related_docs = self.search_knowledge(topic, k=5)

        if not related_docs:
            return {"analysis": "No relevant information found", "confidence": 0.0}

        # Score each retrieved document against the topic by dot product
        topic_embedding = self.embeddings.embed_query(topic)
        doc_embeddings = [self.embeddings.embed_query(doc.page_content)
                          for doc in related_docs]

        similarities = [np.dot(topic_embedding, doc_emb)
                        for doc_emb in doc_embeddings]

        context = " ".join([doc.page_content for doc in related_docs[:3]])

        analysis_prompt = f"""As a research analyst, analyze the topic: {topic}

Available information:
{context}

Provide a structured analysis including:
1. Key insights (2-3 points)
2. Confidence level assessment
3. Research gaps or limitations
4. Practical implications

Keep response under 200 words."""

        try:
            gemini_analysis = self.llm.invoke(analysis_prompt)
            detailed_analysis = gemini_analysis.content.strip()
        except Exception:
            # Fall back to a plain summary line if the LLM call fails
            detailed_analysis = f"Analysis of {topic} based on available documents with {len(related_docs)} relevant sources."

        analysis = {
            "topic": topic,
            "related_documents": len(related_docs),
            "max_similarity": max(similarities),
            "avg_similarity": np.mean(similarities),
            "key_insights": [doc.page_content[:100] + "..." for doc in related_docs[:3]],
            # Confidence is simply the highest topic-document similarity
            "confidence": max(similarities),
            "detailed_analysis": detailed_analysis
        }

        return analysis


class ConversationalAgent(IntelligentAgent):
    """Agent optimized for natural conversations"""

    def __init__(self):
        super().__init__("ChatBot", "friendly and engaging")
        self.conversation_history = []

    def maintain_conversation_context(self, user_input: str) -> str:
        """Maintain conversation flow with context awareness"""
        self.conversation_history.append({"role": "user", "content": user_input})

        # The last three messages (user and assistant alike) form the query
        recent_context = " ".join([msg["content"] for msg in self.conversation_history[-3:]])
        response = self.reason_and_respond(recent_context)

        self.conversation_history.append({"role": "assistant", "content": response})

        return response

We extend our intelligent agent into two specialized versions: a ResearchAgent for structured topic analysis and a ConversationalAgent for natural dialogue. The research agent retrieves up to five related documents, scores them against the topic by embedding similarity, and asks Gemini for a structured analysis; the reported confidence is the highest of those similarity scores. The conversational agent keeps a running history and builds each query from the three most recent messages, so its replies stay consistent with the preceding turns. This modular design allows us to tailor AI behaviors to meet specific user needs.
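The history window used by the conversational agent can be isolated and inspected on its own. The following is a minimal sketch under stated assumptions: `WindowedConversation` and `echo_backend` are hypothetical names, and the backend is a stub that records what it receives in place of the real reasoning pipeline.

```python
from typing import Callable, Dict, List


class WindowedConversation:
    """Keeps a message history and sends only the most recent messages on."""

    def __init__(self, respond: Callable[[str], str], window: int = 3) -> None:
        self.respond = respond  # stand-in for the agent's reasoning pipeline
        self.window = window
        self.history: List[Dict[str, str]] = []

    def send(self, user_input: str) -> str:
        self.history.append({"role": "user", "content": user_input})
        # Join the last `window` messages, regardless of who wrote them
        recent = " ".join(m["content"] for m in self.history[-self.window:])
        reply = self.respond(recent)
        self.history.append({"role": "assistant", "content": reply})
        return reply


seen: List[str] = []  # every context string the stub backend receives


def echo_backend(context: str) -> str:
    """Stub backend: records the context and returns a numbered reply."""
    seen.append(context)
    return f"reply-{len(seen)}"


chat = WindowedConversation(echo_backend, window=3)
chat.send("hello")
chat.send("how are you")
print(seen[1])  # prints: hello reply-1 how are you
```

As the output shows, the window mixes earlier assistant replies into the text that is embedded and searched on the next turn. Whether that helps or hurts retrieval depends on the use case; filtering the window to user messages only would be one alternative.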

def demonstrate_agent_capabilities():
    """Comprehensive demonstration of agent capabilities"""

    print("🎯 Creating and testing AI agents...")

    research_agent = ResearchAgent()
    chat_agent = ConversationalAgent()

    knowledge_documents = [
        "Artificial intelligence is transforming industries through automation and intelligent decision-making systems.",
        "Machine learning algorithms require large datasets to identify patterns and make predictions.",
        "Natural language processing enables computers to understand and generate human language.",
        "Computer vision allows machines to interpret and analyze visual information from images and videos.",
        "Robotics combines AI with physical systems to create autonomous machines.",
        "Deep learning uses neural networks with multiple layers to solve complex problems.",
        "Reinforcement learning teaches agents to make decisions through trial and error.",
        "Quantum computing promises to solve certain problems exponentially faster than classical computers."
    ]

    # Both agents share the same knowledge base
    research_agent.add_knowledge(knowledge_documents)
    chat_agent.add_knowledge(knowledge_documents)

    print("\n🔬 Testing Research Agent...")

    topics = ["machine learning", "robotics", "quantum computing"]

    for topic in topics:
        analysis = research_agent.analyze_topic(topic)
        print(f"\n📊 Analysis of '{topic}':")
        print(f" Confidence: {analysis['confidence']:.3f}")
        print(f" Related docs: {analysis['related_documents']}")
        print(f" Detailed Analysis: {analysis.get('detailed_analysis', 'N/A')[:200]}...")
        print(f" Key insight: {analysis['key_insights'][0] if analysis['key_insights'] else 'None'}")

    print("\n💬 Testing Conversational Agent...")

    conversation_inputs = [
        "Tell me about artificial intelligence",
        "How does machine learning work?",
        "What's the difference between AI and machine learning?",
        "Can you explain neural networks?"
    ]

    for user_input in conversation_inputs:
        response = chat_agent.maintain_conversation_context(user_input)
        print(f"\n👤 User: {user_input}")
        print(f"🤖 Agent: {response}")

    print("\n🧠 Memory Analysis...")
    print(f"Research Agent memories: {len(research_agent.memory.episodic)}")
    print(f"Chat Agent memories: {len(chat_agent.memory.episodic)}")

    similar_memories = chat_agent.retrieve_similar_memories("artificial intelligence", k=2)

    if similar_memories:
        print("\n🔍 Similar memory found:")
        print(f" Query: {similar_memories[0]['user_input']}")
        print(f" Response: {similar_memories[0]['agent_response'][:100]}...")

We run a comprehensive demonstration of our AI agents by loading a shared knowledge base and evaluating both research and conversational tasks. We test the ResearchAgent's ability to generate analyses on three topics and exercise the ConversationalAgent across a four-turn conversation. Finally, we inspect each agent's episodic memory and query the chat agent's memory for "artificial intelligence" to confirm that past interactions are stored and can be retrieved.

class MultiAgentSystem:
    """Orchestrate multiple specialized agents"""

    def __init__(self):
        self.agents = {
            "research": ResearchAgent(),
            "chat": ConversationalAgent()
        }

        # Separate, smaller embedding model instance used only for routing
        self.coordinator_embeddings = NomicEmbeddings(model="nomic-embed-text-v1.5", dimensionality=256)

    def route_query(self, query: str) -> str:
        """Route query to most appropriate agent"""
        # Same routing logic as route_query_with_confidence, without the score
        best_agent, _ = self.route_query_with_confidence(query)
        return best_agent

    def process_query(self, query: str) -> Dict[str, Any]:
        """Process query through appropriate agent"""
        selected_agent, confidence = self.route_query_with_confidence(query)
        agent = self.agents[selected_agent]

        if selected_agent == "research":
            if "analyze" in query.lower() or "research" in query.lower():
                # Note: str.replace is case-sensitive, so a capitalized
                # "Analyze" or "Research" stays in the topic string
                topic = query.replace("analyze", "").replace("research", "").strip()
                result = agent.analyze_topic(topic)
                response = f"Research Analysis: {result.get('detailed_analysis', str(result))}"
            else:
                response = agent.reason_and_respond(query)
        else:
            response = agent.maintain_conversation_context(query)

        return {
            "query": query,
            "selected_agent": selected_agent,
            "response": response,
            "confidence": confidence
        }

    def route_query_with_confidence(self, query: str) -> tuple[str, float]:
        """Route query to most appropriate agent and return confidence"""
        agent_descriptions = {
            "research": "analysis, research, data, statistics, technical information",
            "chat": "conversation, questions, general discussion, casual talk"
        }

        query_embedding = self.coordinator_embeddings.embed_query(query)
        best_agent = "chat"  # default when no description scores above zero
        best_similarity = 0.0

        for agent_name, description in agent_descriptions.items():
            desc_embedding = self.coordinator_embeddings.embed_query(description)
            similarity = np.dot(query_embedding, desc_embedding)

            if similarity > best_similarity:
                best_similarity = similarity
                best_agent = agent_name

        return best_agent, best_similarity

We built a multi-agent system that routes queries to either the research or conversational agent based on semantic similarity. The coordinator embeds the user query and a short keyword description of each agent's specialty using Nomic embeddings, then picks the agent whose description scores highest, defaulting to the chat agent if no score exceeds zero. This architecture allows us to add further specialized agents by registering a new description and handler, without changing the routing logic.
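The routing rule described above (embed the query, embed each agent description, pick the highest score, fall back to a default) can be sketched without any API calls. This is an illustration under stated assumptions, not the tutorial's implementation: `toy_embed` is a hypothetical bag-of-words stand-in for the Nomic model, and `route` and `AGENT_DESCRIPTIONS` are illustrative names.

```python
import math
from collections import Counter
from typing import Dict, Tuple

# Keyword descriptions of each agent's specialty (commas dropped for the toy embedding)
AGENT_DESCRIPTIONS = {
    "research": "analysis research data statistics technical information",
    "chat": "conversation questions general discussion casual talk",
}


def toy_embed(text: str) -> Dict[str, float]:
    """Stand-in for a real embedding model: a unit-length bag-of-words vector."""
    counts = Counter(text.lower().split())
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {word: c / norm for word, c in counts.items()} if norm else {}


def dot(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Dot product of two sparse vectors (cosine similarity for unit vectors)."""
    return sum(value * b.get(word, 0.0) for word, value in a.items())


def route(query: str, default: str = "chat") -> Tuple[str, float]:
    """Return the best-matching agent name and its similarity score."""
    query_vec = toy_embed(query)
    best_agent, best_score = default, 0.0
    for name, description in AGENT_DESCRIPTIONS.items():
        score = dot(query_vec, toy_embed(description))
        if score > best_score:  # strict comparison keeps the default on ties at zero
            best_agent, best_score = name, score
    return best_agent, best_score


print(route("research on data statistics")[0])  # prints: research
print(route("how are you today")[0])            # prints: chat
```

With a word-overlap embedding, the second query matches neither description and so takes the default route. A real embedding model generally returns a nonzero similarity for every description, so in practice the default matters less than the relative ordering of the scores.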

if __name__ == "__main__":
    print("\n🚀 Advanced AI Agent System with Nomic Embeddings + Gemini LLM")
    print("=" * 70)
    print("💡 Note: This uses Google's Gemini 1.5 Flash (free tier) for reasoning")
    print("📚 Get your free Google API key at: https://makersuite.google.com/app/apikey")
    print("🎯 Get your Nomic API key at: https://atlas.nomic.ai/")
    print("=" * 70)

    demonstrate_agent_capabilities()

    print("\n🎛️ Testing Multi-Agent System...")
    multi_system = MultiAgentSystem()

    knowledge_docs = [
        "Python is a versatile programming language used in AI development.",
        "TensorFlow and PyTorch are popular machine learning frameworks.",
        "Data preprocessing is crucial for successful machine learning projects."
    ]

    # Give every agent in the system the same knowledge base
    for agent in multi_system.agents.values():
        agent.add_knowledge(knowledge_docs)

    test_queries = [
        "Analyze the impact of AI on society",
        "How are you doing today?",
        "Research machine learning trends",
        "What's your favorite color?"
    ]

    for query in test_queries:
        result = multi_system.process_query(query)
        print(f"\n📝 Query: {query}")
        print(f"🎯 Routed to: {result['selected_agent']} agent")
        print(f"💬 Response: {result['response'][:150]}...")

    print("\n✅ Advanced AI Agent demonstration complete!")

We conclude by running a comprehensive demonstration of our AI system: initializing the agents, loading knowledge, and sending four test queries, two analytical and two conversational. We observe how the multi-agent system routes each query based on its content, showcasing the strength of our modular design. This final execution exercises the agents' reasoning, memory, and response generation end to end.

In conclusion, we now have a powerful and flexible AI agent framework that leverages Nomic embeddings for semantic understanding and the Gemini LLM for contextual response generation. We demonstrate how agents can independently manage memory, retrieve knowledge, and reason over it, while the multi-agent system ensures that user queries are routed to the most capable agent. By walking through both research-focused and conversational interactions, we showcase how this setup can serve as a foundation for building intelligent and responsive AI assistants.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.