If you’ve ever watched a game and wondered, “How do brands actually measure how often their logo shows up on screen?” you’re already asking an ACR question. Similarly, insights like:

- How many minutes did Brand X’s logo appear on the jersey?

- Did that new sponsor actually get the exposure they paid for?

- Is my logo being used in places it shouldn’t be?

are all powered by Automatic Content Recognition (ACR) technology. It looks at raw audio/video and figures out what’s in it without relying on filenames, tags, or human labels.

In this post, we’ll zoom into one very practical slice of ACR: recognizing brand logos in images or video using a fully open-source stack.

Introduction to Automatic Content Recognition

Automatic Content Recognition (ACR) is a media recognition technology (similar to facial recognition technology) capable of recognizing the contents of media without human intervention. Whether you have seen an app on your smartphone identify the song that is playing, or a streaming platform label the actors in a scene, you have experienced ACR at work. Devices using ACR capture a “fingerprint” of audio or video and compare it to a database of content. When a match is found, the system returns metadata about that content, for example, the name of a song or the identity of an actor on screen. It can also be used to recognize logos and brand marks in images or video. This article shows how to build an ACR system focused on recognizing logos in an image or video.

We’ll walk through a step-by-step logo recognition pipeline that assumes a metric-learning embedding model (e.g., a CNN/ViT trained with a contrastive, triplet, or ArcFace-style loss) to produce ℓ2-normalized vectors for logo crops, and uses Euclidean distance (L2 norm) to match new images against a gallery of brand logos. The aim is to show how a gallery of logo exemplars (imaginary logos created for this article) can serve as our reference database, and how we can automatically determine which logo appears in a new image by finding the nearest match in our embedding space.

Once the system is built, we will measure its accuracy and discuss how to select an appropriate distance threshold for effective recognition. By the end, you will understand the elements of a logo recognition ACR pipeline and be ready to test it on your own dataset of logo images or another use case.

Why Logo ACR Is a Big Deal

Logos are the visual shorthand for brands. If you can detect them reliably, you unlock a whole set of high-value use cases:

- Sponsorship & ad verification: Did the logo appear when and where the contract promised? How long was it visible? On which channels?

- Brand safety & compliance: Is your logo showing up next to content you don’t want to be associated with? Are competitors ambushing your campaign?

- Shoppable & interactive experiences: See a logo on screen → tap your phone or remote → see products, offers, or coupons in real time.

- Content search & discovery: “Show me all clips where Brand A, Brand B, and the new stadium sponsor appear together.”

At the core of all these scenarios is the same question:

Given a frame from a video, which logo(s) are in it, if any?

That’s exactly what we’ll design.

The Big Idea: From Pixels to Vectors to Matches

Modern ACR is basically a three-step magic trick:

- Look at the signal – Grab frames from the video stream.

- Turn images into vectors – Use a deep model to map each logo crop to a compact numerical vector (an embedding).

- Search in vector space – Compare that vector to a gallery of known logo vectors using a vector database or ANN library.

If a new logo crop lands close enough to a cluster of “Brand X” vectors, we call it a match. That’s it. Everything else (detectors, thresholds, and indexing) just makes this faster, more robust, and more scalable.

Logo Dataset

To build our logo recognition ACR system, we need a reference dataset of logos with known identities. For this case study, we’ll use a collection of logo images generated with AI. Although we use imaginary logos in this article, the same approach extends to real logos that you have a license to use, or to an existing research dataset. In our case, we will work with a small sample: for example, a dozen brands with 5 to 10 images per brand.

Each logo in the dataset is labeled with its brand name, which serves as the ground-truth identity.

These logos show the variability that matters for recognition: colorways (full-color, black/white, inverted), layout (horizontal vs. stacked), wordmark vs. icon-only, and background/outline treatments. In the wild, they also appear at different scales and rotations, and under blur, occlusion, varied lighting, and changing perspectives. The system has to rely on what stays similar about a logo, because the same logo can look very different from one situation to the next. We assume that the logo images have already been cropped, so that our recognition model takes only the logo region as input.

Illustration within the ACR System

For instance, contemplate that we have now in our database logos of recognized logos as Photograph A, Photograph B, Photograph C, and so on. (every of them is an imaginary brand generated utilizing AI). Every of those logos will likely be represented as a numerical encoding within the ACR system and saved.

Under, we present an instance of 1 imaginary model brand in two totally different photos from our dataset:

We’ll use a pre-trained mannequin to detect the Brand in two photos of the above similar model.

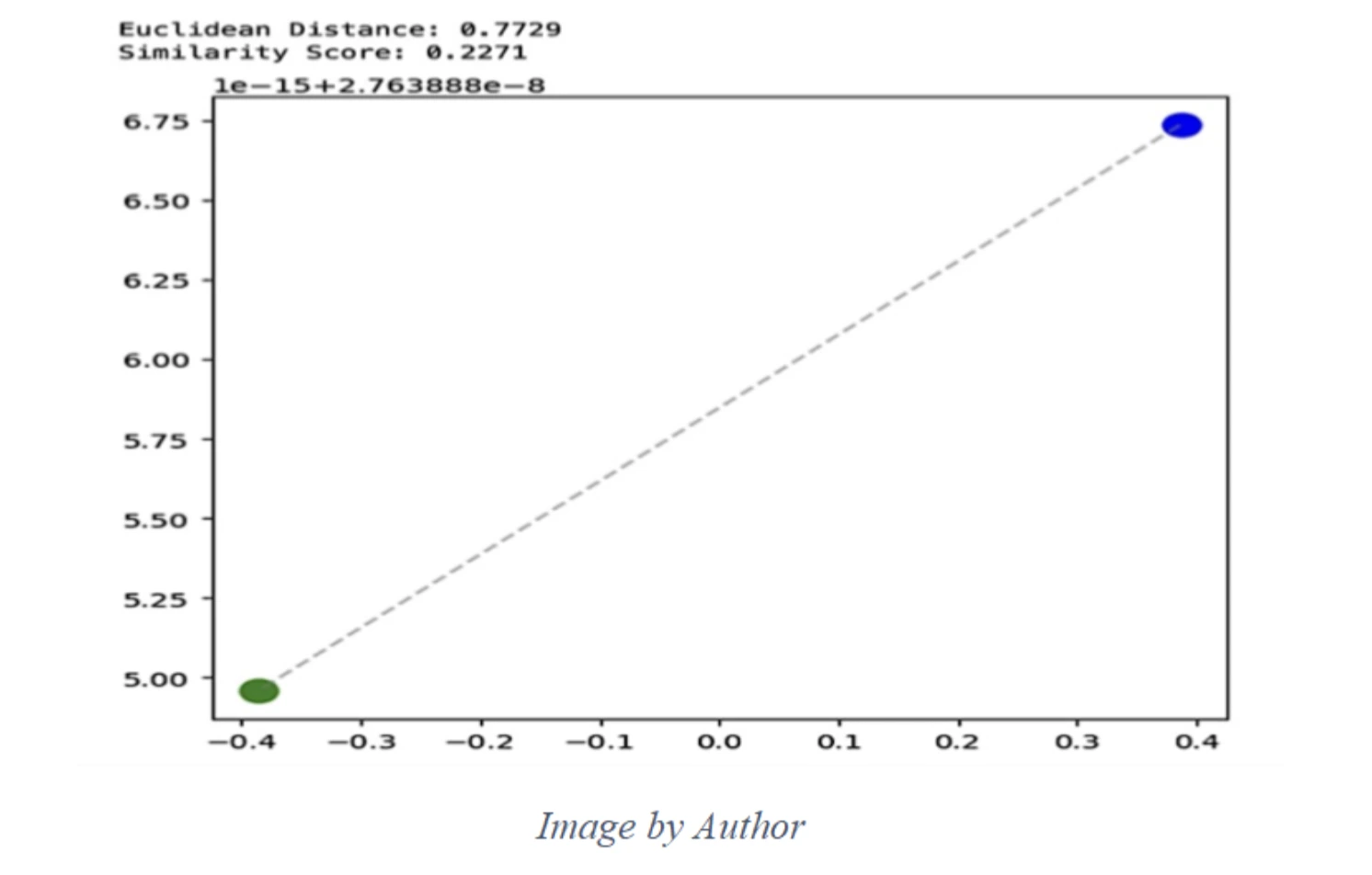

This determine is displaying two factors (inexperienced and blue), the straight-line (Euclidean) distance between them, after which a “similarity rating” that’s only a easy rework of that distance.

An Open-Source Stack for Logo ACR

In practice, many teams today use lightweight detectors such as YOLOv8-Nano and backbones like EfficientNet or Vision Transformers, all available as open-source implementations.

Core components

- Deep learning framework: PyTorch or TensorFlow/Keras; used to train and run the logo embedding model.

- Logo detector: Any open-source object detector (YOLO-style, SSD, RetinaNet, etc.) trained to find “logo-like” regions in a frame.

- Embedding model: A CNN or Vision Transformer backbone (ResNet, EfficientNet, ViT, …) with a metric-learning head that outputs unit-normalized vectors.

- Vector search engine: The FAISS library, or a vector DB like Milvus / Qdrant / Weaviate, to store millions of embeddings and answer “nearest neighbor” queries quickly.

- Logo data: Synthetic or in-house logo images, plus any public datasets that explicitly allow your intended use.

You can swap any component as long as it plays the same role in the pipeline.

Step 1: Finding Logos in the Wild

Before we can recognize a logo, we have to find it.

1. Sample frames

Processing every single frame in a 60 FPS stream is overkill. Instead:

- Sample 2 to 4 frames per second per stream.

- Treat each sampled frame as a still image to inspect.

This is usually enough for brand/sponsor analytics without breaking the compute budget.
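The sampling rule above can be sketched in a few lines. This is a minimal illustration, not part of any particular video library: `sampled_frame_indices` and its parameters are hypothetical names, and the function only decides which frame numbers to keep, leaving the actual decoding to whatever reader you use.

```python
def sampled_frame_indices(total_frames: int, source_fps: float, target_fps: float) -> list[int]:
    """Return indices of frames to keep so that roughly `target_fps` frames per second survive."""
    if source_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    # Never sample more often than the source provides frames.
    step = max(source_fps / target_fps, 1.0)
    indices = []
    position = 0.0
    while int(position) < total_frames:
        indices.append(int(position))
        position += step
    return indices


# One second of a 60 FPS stream, sampled at 3 FPS.
print(sampled_frame_indices(total_frames=60, source_fps=60.0, target_fps=3.0))  # → [0, 20, 40]
```

Keeping the step as a float (rather than rounding it once) avoids drift when the source rate is not an exact multiple of the target rate.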

2. Run a logo detector

On each sampled frame:

- Resize and normalize the image (standard pre-processing).

- Feed it into your object detector.

- Get back bounding boxes for regions that look like logos.

Each detection is:

(x_min, y_min, x_max, y_max, confidence_score)

You crop these regions out; each crop is a “logo candidate.”
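As a rough sketch of the cropping step, the snippet below cuts one detection out of a frame. It assumes the frame is a plain list of pixel rows and that `x_max` / `y_max` are exclusive pixel coordinates; both are assumptions made for illustration, and `crop_detection` is a hypothetical helper rather than a detector API.

```python
def crop_detection(image, box):
    """Cut one detection out of an image stored as a list of pixel rows."""
    x_min, y_min, x_max, y_max, _confidence = box
    height = len(image)
    width = len(image[0]) if height else 0
    # Clamp to the image bounds: detectors can return boxes that spill over the edge.
    left = max(0, int(x_min))
    top = max(0, int(y_min))
    right = min(width, int(x_max))
    bottom = min(height, int(y_max))
    return [row[left:right] for row in image[top:bottom]]


# A made-up 4x6 "image" whose pixel values encode their own (row, column) position.
frame = [[(r, c) for c in range(6)] for r in range(4)]
candidate = crop_detection(frame, (1, 1, 4, 3, 0.92))
print(len(candidate), len(candidate[0]))  # → 2 3
```

With an array library the same crop is a single slice, but the clamping step is worth keeping either way.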

3. Stabilize over time

Real-world video is messy: blur, motion, partial occlusion, multiple overlays.

Two easy tricks help:

- Temporal smoothing – combine detections across a short window (e.g., 1–2 seconds). If a logo appears in 5 consecutive frames and disappears in one, don’t panic.

- Confidence thresholds – discard detections below a minimum confidence to avoid obvious noise.

After this step, you have a stream of reasonably clean logo crops.
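Both tricks can be combined in one small function. The sketch below is one possible reading of them, a sliding-window vote over thresholded confidences; the window size, hit count, and the name `smooth_presence` are illustrative assumptions, not values from the article.

```python
from collections import deque


def smooth_presence(frame_confidences, min_confidence=0.5, window=5, min_hits=3):
    """Per-frame presence decision after confidence filtering and a sliding-window vote.

    frame_confidences: one detector confidence per sampled frame for a single logo
    (use 0.0 when the detector returned nothing for that frame).
    """
    recent = deque(maxlen=window)
    smoothed = []
    for confidence in frame_confidences:
        # Confidence threshold: weak detections count as "not seen".
        recent.append(confidence >= min_confidence)
        # Temporal smoothing: present if enough of the recent frames agree.
        smoothed.append(sum(recent) >= min_hits)
    return smoothed


# Five confident frames, one dropout, then the logo is back.
scores = [0.9, 0.8, 0.85, 0.9, 0.88, 0.0, 0.9]
print(smooth_presence(scores))
# → [False, False, True, True, True, True, True]
```

The single dropped frame does not flip the decision, while a lone noisy detection never accumulates enough hits to count as an appearance.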

Step 2: Brand Embeddings

Now that we are able to crop logos from frames, we’d like a option to examine them that’s smarter than uncooked pixels. That’s the place embeddings are available.

An embedding is only a vector of numbers (for instance, 256 or 512 values) that captures the “essence” of a brand. We practice a deep neural community in order that:

- Two photos of the identical brand map to vectors which can be shut collectively.

- Photographs of various logos map to vectors which can be far aside.

A standard option to practice that is with a metric-learning loss corresponding to ArcFace. You don’t want to recollect the method; the instinct is:

“Pull embeddings of the identical model collectively within the embedding house, and push embeddings of various manufacturers aside.”

After coaching, the community behaves like a black field:

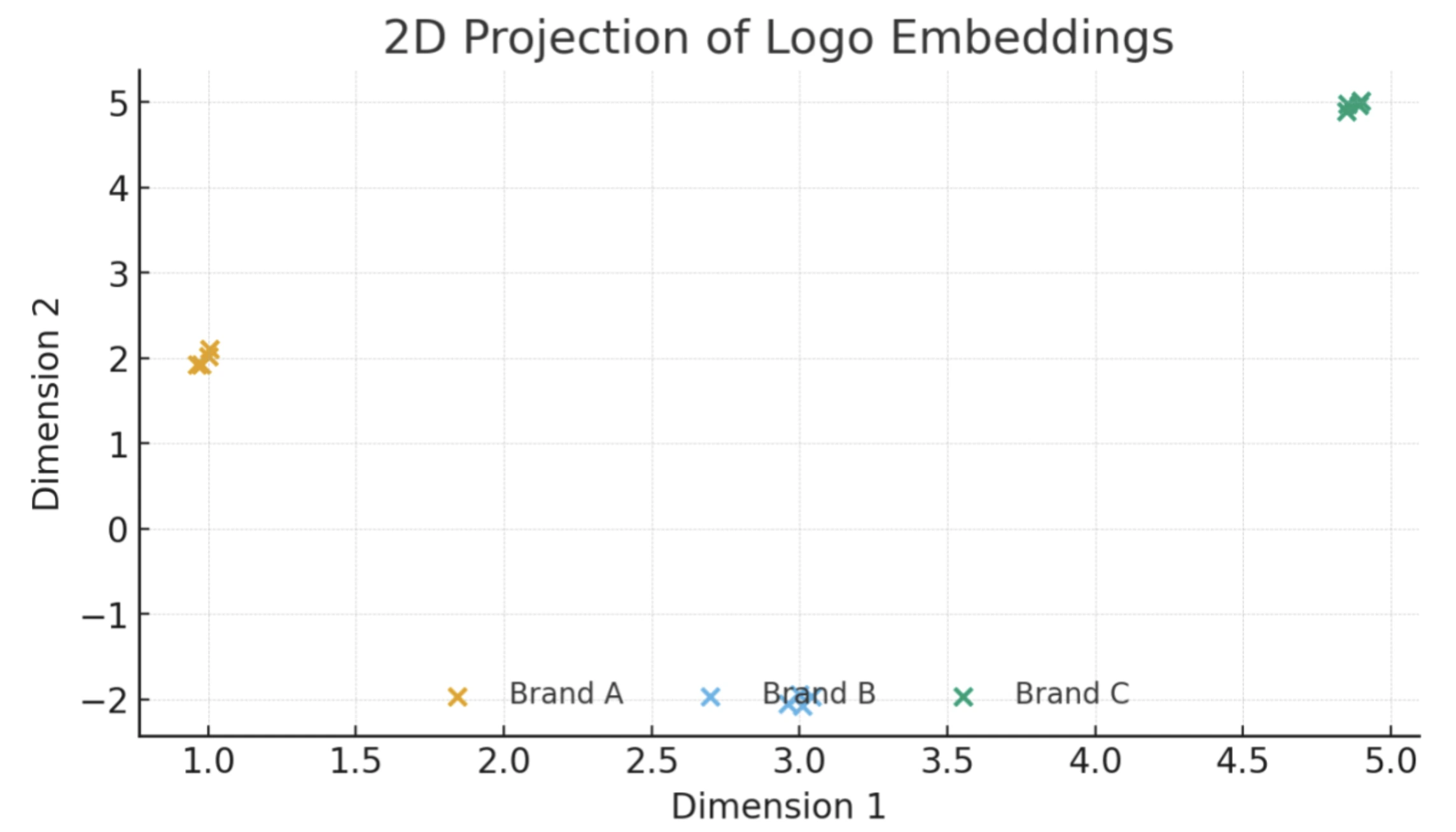

Under: Scatter plot displaying a 2D projection of brand embeddings for 3 recognized manufacturers/logos (A, B, C). Every level is one brand picture, which is embedded from the identical model cluster tightly, displaying clear separation between manufacturers within the embedding house.

We will use a brand embedding mannequin educated with the ArcFace-style (additive angular margin) loss to provide ℓ2-normalized 512-D vectors for every brand crop. There are a lot of open-source methods to construct a brand embedder. The best means is to load a normal imaginative and prescient spine (e.g., ResNet/EfficientNet/ViT) with an ArcFace-style (additive angular margin) head.
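For readers who do want to see what “additive angular margin” means, here is a simplified sketch for a single training example. It assumes the cosine similarities between the embedding and each brand's class centre are already computed, and it uses the margin and scale defaults commonly quoted for ArcFace (0.5 and 64). Production implementations also guard the case where the angle plus margin exceeds π, which this sketch does not; `arcface_loss` is an illustrative name.

```python
import math


def arcface_loss(cosines, target, margin=0.5, scale=64.0):
    """Cross-entropy over scaled cosines, with an angular margin added to the target class.

    cosines: cosine similarity between one embedding and each brand's class centre.
    target: index of the true brand.
    """
    logits = []
    for index, cosine in enumerate(cosines):
        if index == target:
            theta = math.acos(max(-1.0, min(1.0, cosine)))
            # Additive angular margin: the true class must win by an extra angle.
            cosine = math.cos(theta + margin)
        logits.append(scale * cosine)
    # Numerically stable log-sum-exp, then the negative log-probability of the target.
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(value - peak) for value in logits))
    return log_sum - logits[target]


similarities = [0.8, 0.3, 0.1]  # made-up cosines; brand 0 is the true class
print(arcface_loss(similarities, target=0, margin=0.0))  # plain softmax loss
print(arcface_loss(similarities, target=0))              # larger: the margin makes the task harder
```

Because the margin penalizes the true class during training, the network has to pull same-brand embeddings closer together than a plain softmax would require.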

Let’s look at how this works in code-like form. We’ll assume:

- embedding_model(image) takes a logo crop and returns a unit-normalized embedding vector.

- detect_logos(frame) returns a list of logo crops for each frame.

- l2_distance(a, b) computes the Euclidean distance between two embeddings.

First, we build a small embedding database for our known brands:

    embedding_model = load_embedding_model("arcface_logo_model.pt")  # PyTorch / TF model

    brand_db = {}  # dict: brand_name -> list of embedding vectors

    for brand_name in brands_list:
        examples = []
        for img_path in logo_images[brand_name]:  # paths to example images for this brand
            img = load_image(img_path)
            crop = preprocess(img)        # resize / normalize
            emb = embedding_model(crop)   # unit-normalized logo embedding
            examples.append(emb)
        brand_db[brand_name] = examples

At runtime, we recognize logos in a new frame like this:

    def recognize_logos_in_frame(frame, threshold):
        crops = detect_logos(frame)  # logo detector returns candidate crops
        results = []
        for crop in crops:
            query_emb = embedding_model(crop)
            best_brand = None
            best_dist = float("inf")
            # find the closest brand in the database
            for brand_name, emb_list in brand_db.items():
                # distance to the closest example for this brand
                dist_to_brand = min(l2_distance(query_emb, e) for e in emb_list)
                if dist_to_brand < best_dist:
                    best_dist = dist_to_brand
                    best_brand = brand_name
            # accept the nearest brand only if it is close enough; otherwise report unknown
            if best_dist < threshold:
                results.append((best_brand, best_dist))
            else:
                results.append((None, best_dist))
        return results

In a real system, you wouldn’t loop over every embedding in Python. You’d drop the same idea into a vector index such as FAISS, Milvus, or Qdrant, which are open-source engines designed to handle nearest-neighbor search over millions of embeddings efficiently. But the core logic is exactly what this pseudocode shows:

- Embed the query logo,

- Find the closest known logo in the database,

- Check if the distance is below a threshold to decide if it’s a match.

Euclidean Distance for Logo Matching

We can now express logos as numerical vectors, but how do we compare them? Embeddings have a few common similarity measures, of which Euclidean distance and cosine similarity are the most widely used. Because our logo embeddings are ℓ2-normalized (ArcFace-style), cosine similarity and Euclidean distance give the same ranking (one can be derived from the other). Our distance measure will be Euclidean distance (L2 norm).
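The link between the two measures is the identity ‖a − b‖² = 2 − 2·cos(a, b), which holds whenever a and b have unit length. The sketch below checks it numerically on two made-up vectors; the helper names are illustrative, and `unit_cosine` is only valid for inputs that are already normalized.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(value * value for value in vector))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [value / norm for value in vector]


def l2_distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def unit_cosine(a, b):
    """Cosine similarity of two unit vectors (their dot product)."""
    return sum(x * y for x, y in zip(a, b))


a = l2_normalize([3.0, 4.0, 0.0])
b = l2_normalize([1.0, 2.0, 2.0])

# Squared L2 distance and cosine similarity carry the same information on unit vectors.
print(l2_distance(a, b) ** 2, 2.0 - 2.0 * unit_cosine(a, b))
```

Since the squared distance falls exactly as the cosine rises, sorting candidates by either measure yields the same nearest neighbor.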

Euclidean distance between two feature vectors \(x\) and \(y\) (each of length \(d\), here \(d = 512\)) is defined as:

\[ \operatorname{distance}(x, y) = \sqrt{\sum_{i=1}^{d} (x_i - y_i)^2} \]

After taking the square root, this is the straight-line distance between the two points in 512-D space. A smaller distance means the points are closer, which, by how we trained the model, indicates the logos are more likely to be the same brand. If the distance is large, they are likely different brands. Using Euclidean distance on the embeddings turns matching into a nearest-neighbor search in feature space. It’s effectively a K-Nearest Neighbors approach with K=1 (find the single closest match) plus a threshold to decide if that match is confident enough.

Nearest-Neighbor Matching

Using Euclidean distance as our similarity measure is simple to implement. We calculate the distance between a query logo’s embedding and each stored brand embedding in our database, then take the minimum. The brand corresponding to that minimum distance is our best match. This method finds the nearest neighbor in embedding space; if that nearest neighbor is still fairly far away (distance larger than a threshold), we conclude the query logo is “unknown” (i.e., not one of our known brands). The threshold is important for avoiding false positives and should be tuned on validation data.

To summarize, Euclidean distance in our context means: the closer (in Euclidean distance) a query embedding is to a stored embedding, the more similar the logos, and hence the more likely they are the same brand. We’ll use this principle for matching.

Step-by-Step Model Pipeline (Logo ACR)

Let’s break down the complete pipeline of our logo detection ACR system into clear steps:

1. Data Preparation

Collect images of known brands’ logos (official artwork + “in-the-wild” photos). Organize by brand (folder per brand or (brand, image_path) list). For in-scene images, run a logo detector to crop each logo region; apply light normalization (resize, padding/letterbox, optional contrast/perspective fix).

2. Embedding Database Creation

Use a logo embedder (ArcFace-style/additive-angular-margin head on a vision backbone) to compute a 256–512D vector for every logo crop. Store as a mapping brand → [embeddings] (e.g., a Python dict or a vector index with metadata).

3. Normalization

Ensure all embeddings are ℓ2-normalized (unit length). Many models output unit vectors; if not, normalize so distance comparisons are consistent.

4. New Image / Stream Query

For each incoming image/frame, run the logo detector to get candidate boxes. For each box, crop and preprocess exactly as in training, then compute the logo embedding.

5. Distance Calculation

Compare the query embedding to the stored catalog using Euclidean (L2) or cosine distance (equivalent for unit vectors). For large catalogs or real-time streams, use an ANN index (e.g., FAISS HNSW/IVF) instead of brute force.

6. Find Nearest Match

Take the nearest neighbor in embedding space. If you keep multiple exemplars per brand, use the best score per brand (max cosine / min L2) and pick the top brand.

7. Threshold Check (Open-set)

Compare the best score to a tuned threshold.

- Score passes → recognize the logo as that brand.

- Score fails → unknown (not in catalog).

Thresholds are calibrated on validation pairs to balance false positives vs. misses; optionally apply temporal smoothing across frames.

8. Output Result

Return the brand identity, bounding box, and similarity/distance. If unknown, handle per policy (e.g., “No match in catalog” or route for review). Optionally log matches for auditing and model improvement.
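Steps 6 and 7 are easy to get subtly wrong once an index returns several hits per query, so here is a minimal sketch of that reduction. It assumes the index lookup has already produced `(brand_name, distance)` pairs; `decide_brand` and the brand names are hypothetical, chosen only for illustration.

```python
def decide_brand(hits, threshold):
    """Reduce nearest-neighbour hits to one decision.

    hits: (brand_name, distance) pairs, e.g. the top-k results of an index lookup.
    Returns (brand_name or None, best_distance).
    """
    best_per_brand = {}
    for brand_name, distance in hits:
        # Step 6: keep the closest exemplar for each brand (min L2).
        if distance < best_per_brand.get(brand_name, float("inf")):
            best_per_brand[brand_name] = distance
    if not best_per_brand:
        return None, float("inf")
    top_brand = min(best_per_brand, key=best_per_brand.get)
    top_distance = best_per_brand[top_brand]
    # Step 7: open-set check; anything at or beyond the threshold is reported as unknown.
    if top_distance >= threshold:
        return None, top_distance
    return top_brand, top_distance


hits = [("Acme", 0.9), ("Globex", 0.4), ("Acme", 0.35), ("Initech", 1.2)]
print(decide_brand(hits, threshold=0.8))  # → ('Acme', 0.35)
print(decide_brand(hits, threshold=0.3))  # → (None, 0.35)
```

Returning the distance even for unknowns keeps enough information for step 8 to log near-misses for review.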

Visualizing Similarity and Matching

The similarity scores (or distances) are often useful for visualizing how the system makes decisions. For example, given a query image, we can examine the computed distance to every candidate in the database. Ideally, the distance to the correct identity will be far lower than the others, establishing a clear separation between the nearest candidate and the rest.

The chart below illustrates an example. We had a query image of Logo C, and we computed its Euclidean distance to the embeddings of five candidate logos (LogoA through LogoE) in our database. We then plotted these distances:

In this example, the clear separation between the genuine match (LogoC) and the others makes it easy to choose a threshold. In practice, distances will vary depending on the pair of images. Two logos of the same brand might sometimes yield a somewhat higher distance, especially if the renditions are very different, and two logos of different brands can occasionally have a surprisingly low distance if they look alike. That’s why threshold tuning on a validation set is required.
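A quick way to inspect this kind of separation without a plotting library is to rank the candidates and measure the gap between the best and second-best distance. The distances below are invented purely for illustration (they are not the values behind the article's chart), and `rank_candidates` is a hypothetical helper.

```python
def rank_candidates(distances):
    """Sort candidates by distance and report the gap between the two closest."""
    ranked = sorted(distances.items(), key=lambda item: item[1])
    gap = ranked[1][1] - ranked[0][1] if len(ranked) > 1 else float("inf")
    return ranked, gap


# Made-up distances from one query to five catalog logos.
example = {"LogoA": 1.21, "LogoB": 1.08, "LogoC": 0.32, "LogoD": 1.30, "LogoE": 1.15}
ranked, gap = rank_candidates(example)

for name, distance in ranked:
    # Crude text bar chart: one '#' per 0.05 of distance.
    print(f"{name}: {distance:.2f} " + "#" * round(distance * 20))
print(f"gap between best and runner-up: {gap:.2f}")
```

A large gap suggests a confident match; a small one is a hint that the query sits between two brands and deserves a closer look.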

Accuracy and Threshold Tuning

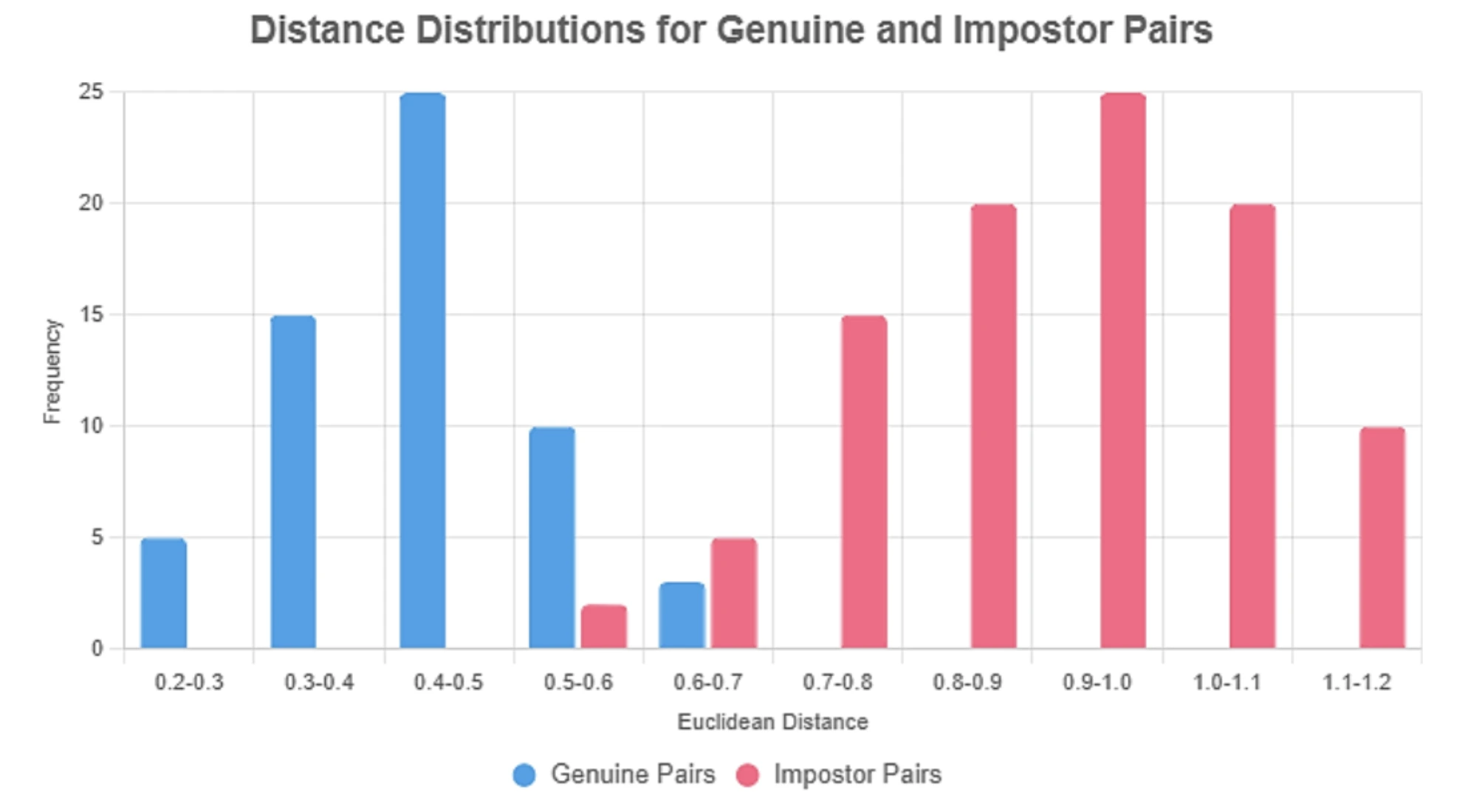

To measure system accuracy, we would run the system on a test set of logo images (whose identities are known, but which are not themselves in the database) and count the number of times the system identifies the brand correctly. We would vary the distance threshold and observe the trade-off between false positives (a logo accepted as a brand it does not belong to) and false negatives (a known logo missed because its distance exceeds the threshold). To pick a good value, a plot of the ROC curve, or simply precision/recall computed at different thresholds, is useful.
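The threshold sweep can be sketched as follows. The test records are invented for illustration, each one holding the true brand (or `None` for a crop that shows no catalogued brand), the nearest brand found, and its distance; `precision_recall` is a hypothetical helper, and recall here is measured over crops that do show a known brand.

```python
def precision_recall(predictions, threshold):
    """Precision and recall of nearest-neighbour identification at one distance threshold."""
    known = sum(1 for true_brand, _, _ in predictions if true_brand is not None)
    accepted = correct = 0
    for true_brand, nearest_brand, distance in predictions:
        if distance < threshold:  # the system accepts the nearest brand
            accepted += 1
            if nearest_brand == true_brand:
                correct += 1
    precision = correct / accepted if accepted else 1.0
    recall = correct / known if known else 1.0
    return precision, recall


# Made-up test results: (true_brand, nearest_brand, distance).
predictions = [
    ("Acme", "Acme", 0.30),
    ("Acme", "Acme", 0.55),
    ("Globex", "Globex", 0.42),
    ("Globex", "Acme", 0.95),      # look-alike: nearest neighbour is the wrong brand
    ("Initech", "Initech", 1.10),  # hard example: correct but far away
    (None, "Globex", 0.88),        # not a catalogued brand at all
]

for threshold in (0.6, 1.0, 1.2):
    precision, recall = precision_recall(predictions, threshold)
    print(f"threshold={threshold:.1f} precision={precision:.2f} recall={recall:.2f}")
```

On this toy data a loose threshold recovers the hard example but also lets in the look-alike and the unknown crop, which is the trade-off the ROC or precision/recall curve makes visible.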

How to tune the threshold (simple, repeatable):

- Build pairs.

– Genuine pairs: embeddings from the same brand (different files/angles/colors).

– Impostor pairs: embeddings from different brands (include look-alike marks, color-inverted versions).

- Score pairs. Compute Euclidean (L2) or cosine (on unit vectors, they rank identically).

- Plot histograms. You should see two distributions: same-brand distances clustered low and different-brand distances higher.

- Choose a threshold. Pick the value that best separates the two distributions for your target risk (e.g., the distance where FAR = 1%, or the argmax of F1).

- Open-set check. Add non-logo crops and unknown brands to your negatives; verify the threshold still controls false accepts.
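The recipe above can be sketched end to end on a toy validation set. The four 2-D unit vectors are invented for illustration (real embeddings would be 256-D or 512-D), and `pair_distances`, `threshold_at_far`, and `far_frr` are hypothetical helper names. The threshold rule assumes a match is accepted when its distance is strictly below the threshold.

```python
import math
from itertools import combinations


def l2_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def pair_distances(labelled_embeddings):
    """Split all pairwise distances into genuine (same brand) and impostor (different brand)."""
    genuine, impostor = [], []
    for (brand_a, emb_a), (brand_b, emb_b) in combinations(labelled_embeddings, 2):
        bucket = genuine if brand_a == brand_b else impostor
        bucket.append(l2_distance(emb_a, emb_b))
    return genuine, impostor


def threshold_at_far(impostor, target_far=0.01):
    """Largest observed distance that keeps the false-accept rate at or below target_far."""
    if not impostor:
        raise ValueError("need at least one impostor pair")
    ordered = sorted(impostor)
    allowed = int(target_far * len(ordered))  # impostor pairs we can afford to accept
    return ordered[allowed]


def far_frr(genuine, impostor, threshold):
    """False-accept rate on impostor pairs and false-reject rate on genuine pairs."""
    far = sum(d < threshold for d in impostor) / len(impostor)
    frr = sum(d >= threshold for d in genuine) / len(genuine)
    return far, frr


# Toy validation set: (brand, unit-length embedding).
validation = [
    ("Acme", [1.0, 0.0]),
    ("Acme", [0.96, 0.28]),
    ("Globex", [0.0, 1.0]),
    ("Globex", [0.28, 0.96]),
]
genuine, impostor = pair_distances(validation)
threshold = threshold_at_far(impostor, target_far=0.01)
print(threshold, far_frr(genuine, impostor, threshold))
```

For the open-set check in the last bullet, append distances from non-logo crops and unseen brands to `impostor` and confirm the false-accept rate still stays within budget.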

Below: Histogram of Euclidean distances for same-brand (genuine) vs different-brand (impostor) logo pairs. The dashed line shows the chosen threshold separating most genuine from impostor matches.

In summary, to achieve good accuracy:

- Use multiple logos per brand if possible when building the database, or use augmentation, so the model has a better chance of getting a representative embedding.

- Evaluate distances on known validation pairs to understand the range of same-brand vs different-brand distances.

- Set the threshold to balance missed recognitions vs false alarms based on those distributions. You can start with commonly used values (like 0.6 for 128-D embeddings or around 1.24 for 512-D embeddings), then adjust on your own validation data.

- Fine-tune as needed: If the system is making errors, analyze them. Are the false positives coming from specific look-alike logos? Are the false negatives coming from low-quality images? This analysis can guide adjustments (maybe a lower threshold, or adding more reference images for certain logos, etc.).

Conclusion

In this article, we built a simplified Automatic Content Recognition system for identifying brand logos in images using deep logo embeddings and Euclidean distance. We introduced ACR and its use cases, assembled an open-licensed logo dataset, and used an ArcFace-style embedding model (for logos) to convert cropped logos into a numerical representation. By comparing these embeddings with a Euclidean distance measure, the system can automatically recognize a new logo by finding the nearest match in a database of known brands. We showed how the pipeline works with code snippets and visualized how a decision threshold can be applied to improve accuracy.

Results: With a well-trained logo embedding model, even a simple nearest-neighbor approach can achieve high accuracy. The system correctly identifies known brands in query images when their embeddings fall within an appropriate distance threshold of the stored templates. We emphasized the importance of threshold tuning to balance precision and recall, a critical step in real-world deployments.

Next Steps

There are several ways to extend or improve this ACR system:

- Scaling Up: To support thousands of brands or real-time streams, replace brute-force distance checks with an efficient similarity index (e.g., FAISS or other approximate nearest neighbor methods).

- Detection & Alignment: Perform logo detection with a fast detector (e.g., YOLOv8-Nano/EfficientDet-Lite/SSD) and apply light normalization (resize, padding, optional perspective/contrast fixes) so the embedder sees consistent crops.

- Improving Accuracy: Fine-tune the embedder on your logo set and add harder augmentations (rotation, scale, occlusion, color inversion). Keep multiple exemplars per brand (color/mono/legacy marks) or use prototype averaging.

- ACR Beyond Logos: The same embedding + nearest-neighbor approach extends to product packaging, ad-creative matching, icons, and scene text snippets.

- Legal & Ethics: Respect trademark/IP, dataset licenses, and image rights. Use only assets with permission for your purpose (including commercial use). If images include people, comply with privacy/biometric laws; monitor regional/brand coverage to reduce bias.
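Of the ideas in this list, prototype averaging is the quickest to try. A minimal sketch, assuming each brand's exemplars are already unit-length vectors of equal dimension: average them and rescale the mean back to unit length so it stays comparable with the other embeddings. `brand_prototype` is an illustrative name.

```python
import math


def brand_prototype(embeddings):
    """Average a brand's exemplar embeddings and rescale the mean to unit length."""
    if not embeddings:
        raise ValueError("need at least one embedding")
    dimension = len(embeddings[0])
    mean = [sum(e[i] for e in embeddings) / len(embeddings) for i in range(dimension)]
    norm = math.sqrt(sum(value * value for value in mean))
    if norm == 0.0:
        raise ValueError("exemplars cancel out; keep them as separate entries instead")
    return [value / norm for value in mean]


# Two made-up exemplars of the same brand in a 2-D toy space.
prototype = brand_prototype([[1.0, 0.0], [0.0, 1.0]])
print(prototype)
```

One prototype per brand shrinks the index, but it can blur visually distinct variants (for example a color mark and a monochrome legacy mark), in which case keeping several exemplars per brand is the safer choice.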

Automatic Content Recognition is a powerful deep learning technology that powers many of the devices and services we use every day. By understanding and building a simple system for detection and recognition with Euclidean distance, we gain insight into how machines can “see” and identify content. From indexing logos or videos to enhancing viewer experiences, the possibilities of ACR are vast, and the approach outlined here is a foundation that can be adapted to many exciting applications.
