(Yurchanka-Siarhei/Shutterstock)

When Cloudflare reached the bounds of what its current ELT software may do, the corporate had a choice to make. It may try to discover a an current ELT software that might deal with its distinctive necessities, or it may construct its personal. After contemplating the choices, Cloudflare selected to construct its personal massive information pipeline framework, which it calls Jetflow.

Cloudflare is a trusted world supplier of safety, community, and content material supply options utilized by hundreds of organizations all over the world. It protects the privateness and safety of tens of millions of customers on daily basis, making the Web a safer and extra helpful place.

With so many providers, it’s not stunning to be taught that the corporate piles up its share of information. Cloudflare operates a petabyte-scale information lake that’s full of hundreds of database tables on daily basis from Clickhouse, Postgres, Apache Kafka, and different information repositories, the corporate stated in a weblog submit final week.

“These duties are sometimes advanced and tables might have a whole bunch of tens of millions or billions of rows of latest information every day,” the Cloudflare engineers wrote within the weblog. “In complete, about 141 billion rows are ingested on daily basis.”

When the amount and complexity of information transformations exceeded the aptitude its current ELT product, Cloudflare determined to interchange it with one thing that might deal with it. After evaluating the marketplace for ELT options, Cloudflare realized that there have been nothing that was generally obtainable was going to suit the invoice.

“It turned clear that we wanted to construct our personal framework to deal with our distinctive necessities–and so Jetflow was born,” the Cloudflare engineers wrote.

Earlier than laying down the primary bits, the Cloudflare staff set out its necessities. The corporate wanted to maneuver information into its information lake in a streaming vogue, because the earlier batch-oriented system typically exceeded 24 hours, stopping each day updates. The quantity of compute and reminiscence additionally ought to come down.

Backwards compatibility and adaptability had been additionally paramount. “As a result of our utilization of Spark downstream and Spark’s limitations in merging disparate Parquet schemas, the chosen resolution needed to supply the flexibleness to generate the exact schemas wanted for every case to match legacy,” the engineers wrote. Integration with its metadata system was additionally required.

Cloudflare additionally needed the brand new ELT instruments’ configuration recordsdata to be model managed, and to not develop into a bottleneck when many modifications are made concurrently. Ease-of-use was one other consideration, as the corporate deliberate to have individuals with completely different roles and technical skills to make use of it.

“Customers mustn’t have to fret about availability or translation of information sorts between supply and goal techniques, or writing new code for every new ingestion,” they wrote. “The configuration wanted must also be minimal–for instance, information schema ought to be inferred from the supply system and never must be equipped by the consumer.”

On the identical time, Cloudflare needed the brand new ELT software to be customizable, and to have the choice of tuning the system to deal with particular use instances, similar to allocating extra sources to deal with writing Parquet recordsdata (which is a extra resource-heavy process than studying Parquet recordsdata). The engineers additionally needed to have the ability to spin up concurrent employees in numerous threads, completely different containers, or on completely different machines, on an as-needed foundation.

Lastly, they needed the brand new ELT software to be testable. Engineers needed to allow customers to have the ability to write exams for each stage of the info pipeline to make sure that all edge instances are accounted for earlier than selling a pipeline into manufacturing.

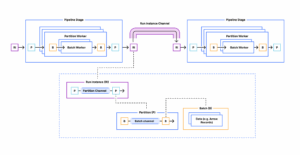

The ensuing Jetflow framework is a streaming information transformation system that’s damaged down into shoppers, transformers, and loaders. The info pipeline is created as a YAML file, and the three phases could be independently examined.

The corporate designed Jetflow’s parallel information processing capabilities to be idempotent (or internally constant) each on complete pipeline re-runs in addition to with retries of updates to any explicit desk as a consequence of an error. It additionally includes a batch mode, which supplies chunking of enormous information units down into smaller items for extra environment friendly parallel stream processing, the engineers write.

One of many greatest questions the Cloudflare engineers confronted was how to make sure compatibility with the varied Jetflow phases. Initially the engineers needed to create a customized kind system that will enable phases to output information in a number of information codecs. That became a “painful studying expertise,” the engineers wrote, and led them to maintain every stage extractor class working with only one information format.

The engineers chosen Apache Arrow as its inside, in-memory information format. As a substitute of an inefficient technique of studying row-based information after which changing it into the columnar format, that are used to generate Parquet recordsdata (its main information format for its information lake), Cloudflare makes an effort to ingest information in column codecs within the first place.

This paid dividends for shifting information from its Clickhouse information warehouse into the info lake. As a substitute of studying information utilizing Clickhouse’s RowBinary format, Jetflow reads information utilizing Clickhouse’s Blocks format. Through the use of the ch-go low degree library, Jetflow is ready to ingest tens of millions of rows of information per second utilizing a single Clickhouse connection.

“A worthwhile lesson realized is that as with all software program, tradeoffs are sometimes made for the sake of comfort or a standard use case that will not match your personal,” the Cloudflare engineers wrote. “Most database drivers have a tendency to not be optimized for studying massive batches of rows, and have excessive per-row overhead.”

The Cloudflare staff additionally made a strategic determination when it got here to the kind of Postgres database driver to make use of. They use the jackc/pgx driver, however bypassed the database/sql Scan interface in favor of receiving uncooked information for every row and utilizing the jackc/pgx inside scan capabilities for every Postgres OID. The ensuing speedup permits Cloudflare to ingest about 600,000 rows per second with low reminiscence utilization, the engineers wrote.

At present, Jetflow is getting used to ingest 77 billion data per day into the Cloudflare information lake. When the migration is full, it will likely be operating 141 billion data per day. “The framework has allowed us to ingest tables in instances that will not in any other case have been potential, and offered important price financial savings as a consequence of ingestions operating for much less time and with fewer sources,” the engineers write.

The corporate plans to open supply Jetflow in some unspecified time in the future sooner or later.

Associated Gadgets:

ETL vs ELT for Telemetry Information: Technical Approaches and Sensible Tradeoffs

Exploring the High Choices for Actual-Time ELT

50 Years Of ETL: Can SQL For ETL Be Changed?