To quash speculation that a cyberattack or BGP hijack caused the recent 1.1.1.1 Resolver service outage, Cloudflare explains in a post mortem that the incident was caused by an internal misconfiguration.

The outage occurred on July 14 and impacted most users of the service worldwide, rendering internet services unavailable in many cases.

“The root cause was an internal configuration error and not the result of an attack or a BGP hijack,” Cloudflare says in the announcement.

This statement comes after people reported on social media that the outage was caused by a BGP hijack.

Global outage unfolding

Cloudflare’s 1.1.1.1 public DNS resolver launched in 2018, promising a private and fast internet connectivity service to users worldwide.

The company explains that behind the outage was a configuration change for a future Data Localization Suite (DLS) performed on June 6, which mistakenly linked 1.1.1.1 Resolver IP prefixes to a non-production DLS service.

On July 14 at 21:48 UTC, a new update added a test location to the inactive DLS service, refreshing the network configuration globally and applying the misconfiguration.

This withdrew 1.1.1.1 Resolver prefixes from Cloudflare’s production data centers and routed them to a single offline location, making the service globally unreachable.

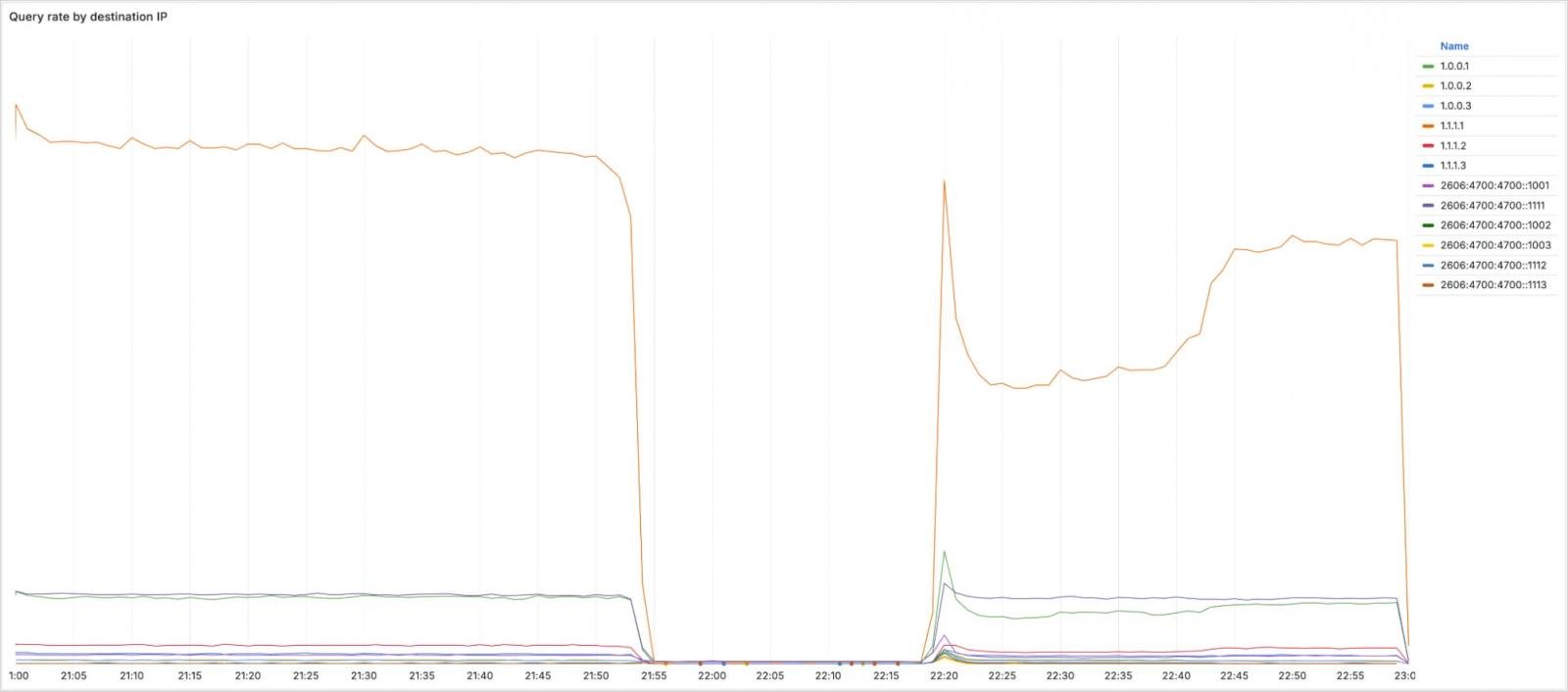

Less than four minutes later, DNS traffic to the 1.1.1.1 Resolver began to drop. By 22:01 UTC, Cloudflare detected the incident and disclosed it to the public.

The misconfiguration was reverted at 22:20 UTC, and Cloudflare began re-advertising the withdrawn BGP prefixes. Finally, full service restoration at all locations was achieved at 22:54 UTC.
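To put the timeline in perspective, the elapsed time at each milestone can be worked out from the UTC timestamps quoted above. The snippet below is only an illustrative calculation; the event labels and the `minutes_since_change` helper are names chosen for this sketch, not terms from Cloudflare's report.

```python
from datetime import datetime

# Timestamps (UTC, July 14) as quoted in the article.
# The labels are illustrative, not Cloudflare's own terminology.
EVENTS = {
    "change applied": "21:48",
    "incident detected": "22:01",
    "misconfiguration reverted": "22:20",
    "full restoration": "22:54",
}


def minutes_since_change(label: str) -> int:
    """Return whole minutes between the triggering change and the given event."""
    start = datetime.strptime(EVENTS["change applied"], "%H:%M")
    end = datetime.strptime(EVENTS[label], "%H:%M")
    return int((end - start).total_seconds() // 60)


if __name__ == "__main__":
    for label in EVENTS:
        print(f"{label}: +{minutes_since_change(label)} min")
```

By this arithmetic, detection came 13 minutes after the change, the revert 32 minutes after, and full restoration 66 minutes after.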

The incident affected multiple IP ranges, including 1.1.1.1 (primary public DNS resolver), 1.0.0.1 (secondary public DNS resolver), 2606:4700:4700::1111 and 2606:4700:4700::1001 (primary and secondary IPv6 DNS resolvers), and multiple IP ranges that support routing within Cloudflare infrastructure.

Source: Cloudflare

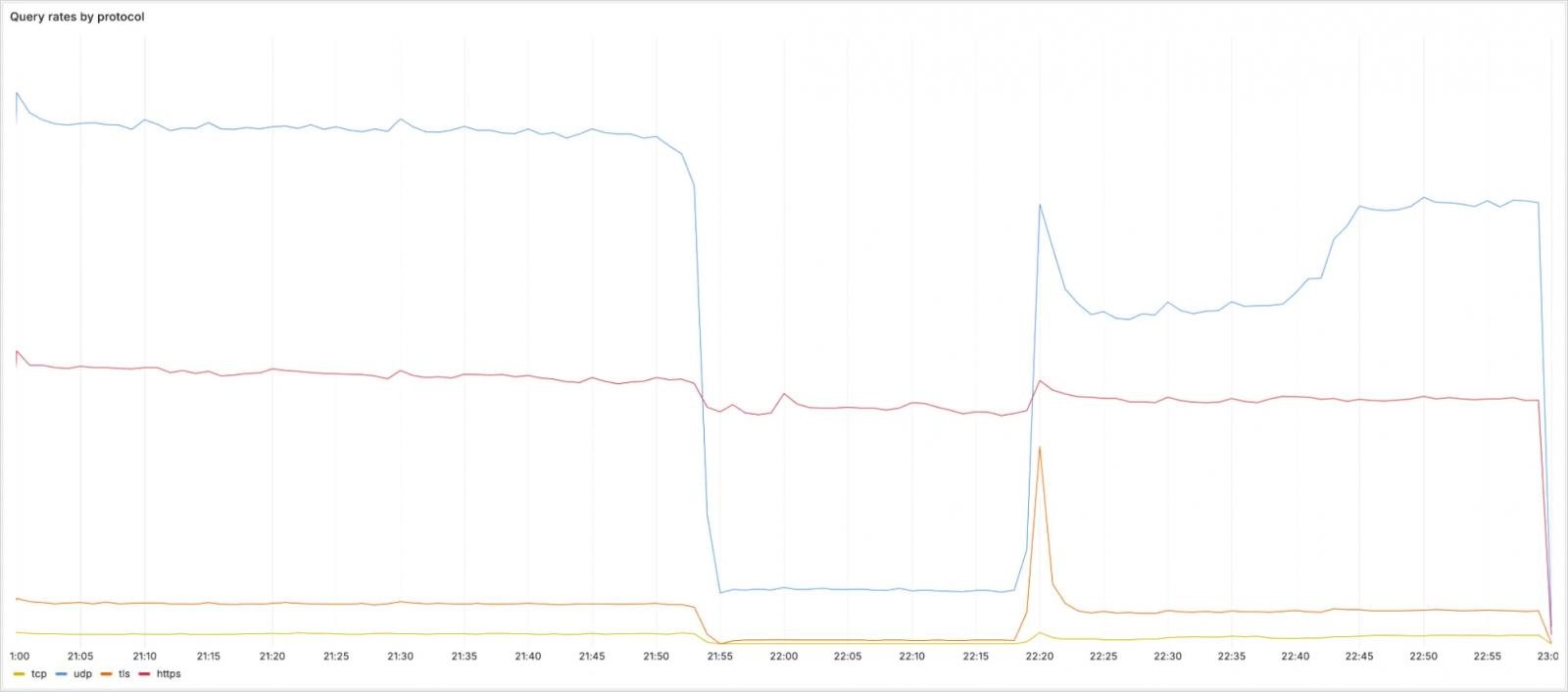

Regarding the incident’s impact on protocols, UDP, TCP, and DNS-over-TLS (DoT) queries to the above addresses saw a significant drop in volume, but DNS-over-HTTPS (DoH) traffic was largely unaffected because it follows a different routing via cloudflare-dns.com.
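The distinction comes down to whether a client addresses the resolver by one of the withdrawn IP addresses or by hostname. As a rough sketch, the check below classifies a resolver setting accordingly. The function name `targets_affected_address` and the endpoint strings are assumptions made for illustration, and only the four resolver addresses named in the article are listed, not the full set of affected ranges.

```python
import ipaddress
from urllib.parse import urlsplit

# The four resolver addresses the article names as affected.
AFFECTED = {
    ipaddress.ip_address(addr)
    for addr in (
        "1.1.1.1",
        "1.0.0.1",
        "2606:4700:4700::1111",
        "2606:4700:4700::1001",
    )
}


def targets_affected_address(endpoint: str) -> bool:
    """Return True if the endpoint (bare IP or URL) names a listed resolver IP directly."""
    # URLs carry the host inside the netloc; anything else is treated as a bare host.
    host = urlsplit(endpoint).hostname if "://" in endpoint else endpoint
    if host is None:
        return False
    try:
        return ipaddress.ip_address(host) in AFFECTED
    except ValueError:
        # Not an IP literal, so the client reaches the service by hostname.
        return False


if __name__ == "__main__":
    for endpoint in ("1.1.1.1", "https://cloudflare-dns.com/dns-query"):
        print(endpoint, targets_affected_address(endpoint))
```

Note that this only inspects the configured endpoint. A DoH client configured with an IP-literal URL would still count as targeting an affected address.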

Source: Cloudflare

Next steps

The misconfiguration could have been rejected if Cloudflare had used a system that performed progressive rollout, the internet giant admits, blaming the use of legacy systems for this failure.

For this reason, it plans to deprecate legacy systems and accelerate migration to newer configuration systems that use abstract service topologies instead of static IP bindings, allowing for gradual deployment, health monitoring at each stage, and quick rollbacks if issues arise.
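The general idea of a staged deployment with health checks and rollback can be sketched in a few lines. This is a generic illustration of the pattern, not Cloudflare's tooling: the `progressive_rollout` function, its callbacks, and the stage names are all hypothetical.

```python
from typing import Callable, List, Sequence


def progressive_rollout(
    stages: Sequence[str],
    apply: Callable[[str], None],
    healthy: Callable[[str], bool],
    rollback: Callable[[str], None],
) -> bool:
    """Apply a change stage by stage; undo every applied stage if one looks unhealthy.

    All callbacks are hypothetical hooks supplied by the caller.
    Returns True if every stage was applied and passed its health check.
    """
    applied: List[str] = []
    for stage in stages:
        apply(stage)
        applied.append(stage)
        if not healthy(stage):
            # Roll back in reverse order so the most recent stage is undone first.
            for done in reversed(applied):
                rollback(done)
            return False
    return True


if __name__ == "__main__":
    log: List[str] = []
    ok = progressive_rollout(
        stages=["canary", "one-region", "global"],  # illustrative stage names
        apply=lambda s: log.append(f"apply {s}"),
        healthy=lambda s: s != "one-region",  # simulate a failure at the second stage
        rollback=lambda s: log.append(f"rollback {s}"),
    )
    print(ok, log)
```

In this simulated run, the failure at the second stage stops the change before it reaches the "global" stage, which is the property a one-shot global refresh lacks.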

Cloudflare also points out that the misconfiguration had passed peer review and wasn’t caught due to insufficient internal documentation of service topologies and routing behavior, an area that the company also plans to improve.