(solar22/Shutterstock)

Organizations which can be involved about huge AI firms scraping their web sites for coaching information could also be to be taught that Cloudflare, which handles 20% of Web visitors, now blocks all information scraping by default.

“Beginning at present, web site house owners can select if they need AI crawlers to entry their content material, and resolve how AI firms can use it,” Cloudflare says in its July 1 announcement. “AI firms also can now clearly state their objective–if their crawlers are used for coaching, inference, or search–to assist web site house owners resolve which crawlers to permit.”

The transfer, Cloudflare added, “is step one towards a extra sustainable future for each content material creators and AI innovators.”

The follow of scraping public web sites for information has come below hearth in recent times as AI firms search information to coach their huge AI fashions. As giant language fashions (LLMs) get larger, they want extra information to coach on.

For instance, when OpenAI launched GPT-2 again in 2019, the corporate skilled the 1.5-billion parameter mannequin on WebText, a 600-billion phrase novel coaching set that it created based mostly on scraped hyperlinks from Reddit customers that was about 40GB.

When OpenAI launched GPT-3 in 2020, the 175-billion parameter LLM was skilled on numerous open sources of information, together with BookCorpus (Book1 and Book2), Frequent Crawl, Wikipedia, and WebText2. The coaching information amounted to about 499 billion tokens and 570 GB.

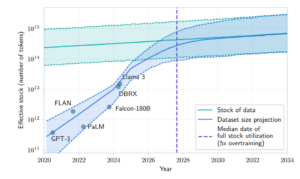

We are going to quickly burn up all novel human textual content information for LLM coaching, researchers say (Supply: “Will we run out of information? Limits of LLM scaling based mostly on human-generated information”)

OpenAI hasn’t publicly shared the dimensions of GPT-4, which it launched in October 2023. But it surely’s estimated to be about 10x the dimensions of GPT-3. The coaching set for GPT-4 has been reported to be about 13 trillion tokens, or about 10 trillion phrases.

As the dimensions of the fashions will get larger, coaching information will get more durable to come back by. “…[W]e may run out of information,” Dario Amodei, the CEO of Anthropic, instructed Dwarkesh Patel in a 2024 interview. “For numerous causes, I feel that’s not going to occur however in case you have a look at it very naively we’re not that removed from operating out of information.”

So the place do AI firms go to get coaching information? As a lot of the public Web has already been scraped, AI firms more and more are turning to non-public repositories of content material hosted on the Net. Typically they ask for permission and pay for the info, and generally they don’t.

For instance, Reddit, the most well-liked information aggregation and social media web sites on the earth with 102 million each day lively customers, inked a take care of OpenAI in 2024 that offers the AI firm entry to information. Final month, Reddit sued Anthropic, claiming it accessed its web site for coaching information with out permission.

Cloudflare had already taken steps to assist management information scraping. Stack Overflow, an internet neighborhood of builders with 100 million month-to-month customers, makes use of Cloudflare to authenticate customers and block information scrapers, Prashanth Chandrasekar, Stack Overflow’s CEO, instructed BigDATAwire final month. Stack Overflow now presents its information to firms for AI coaching and different functions through the Snowflake Market.

“You’re getting instant entry to all the info,” Chandrasekar stated. “It’s pre-indexed and the latency of that’s tremendous low. And most necessary, it’s licensed.”

If the Web goes to outlive the age of AI, then creators of unique content material have to regain management over their information, Matthew Prince, co-founder and CEO of Cloudflare.

“Unique content material is what makes the Web one of many best innovations within the final century, and it’s important that creators proceed making it. AI crawlers have been scraping content material with out limits,” Prince acknowledged. “Our aim is to place the ability again within the fingers of creators, whereas nonetheless serving to AI firms innovate. That is about safeguarding the way forward for a free and vibrant Web with a brand new mannequin that works for everybody.”

The transfer has the assist of Roger Lynch, the CEO of Condé Nast, which owns and publishes a variety of magazines and web sites, together with Vogue, The New Yorker, Self-importance Honest, Wired, and Ars Technica, amongst many others.

“When AI firms can now not take something they need without spending a dime, it opens the door to sustainable innovation constructed on permission and partnership,” Lynch acknowledged. “This can be a essential step towards creating a good worth trade on the Web that protects creators, helps high quality journalism and holds AI firms accountable.”

Steve Huffman, the co-founder and CEO of Reddit, additionally applauded the transfer. “AI firms, search engines like google and yahoo, researchers, and anybody else crawling websites need to be who they are saying they’re,” he stated. “The entire ecosystem of creators, platforms, internet customers and crawlers might be higher when crawling is extra clear and managed, and Cloudflare’s efforts are a step in the fitting route for everybody.”

Associated Gadgets:

Dangerous Vibes: Entry to AI Coaching Information Sparks Authorized Questions

Are We Operating Out of Coaching Information for GenAI?

Cloudflare Rolls Out New Characteristic For Blocking AI Bots