(Logvin art/Shutterstock)

The generative AI revolution is remaking businesses’ relationship with computers and customers. Hundreds of billions of dollars are being invested in large language models (LLMs) and agentic AI, and trillions are at stake. But GenAI has a significant problem: the tendency of LLMs to hallucinate. The question is: Is this a fatal flaw, or can we work around it?

If you’ve worked much with LLMs, you have likely experienced an AI hallucination, or what some call a confabulation. AI models make things up for a variety of reasons: erroneous, incomplete, or biased training data; ambiguous prompts; lack of true understanding; context limitations; and a tendency to overgeneralize (overfitting the model).

Sometimes, LLMs hallucinate for no good reason. Vectara CEO Amr Awadallah says LLMs are subject to the limits of data compression on text, as expressed by the Shannon Information Theorem. Because LLMs compress text beyond a certain point (12.5%), they enter what’s called the “lossy compression zone” and lose perfect recall.

That leads us to the inevitable conclusion that the tendency to fabricate isn’t a bug but a feature of these types of probabilistic systems. What do we do then?

Countering AI Hallucinations

Users have come up with various methods to control for hallucinations, or at least to counteract some of their negative impacts.

For starters, you can get better data. AI models are only as good as the data they’re trained on. Many organizations have raised concerns about bias and the quality of their data. While there are no easy fixes for improving data quality, customers that dedicate resources to better data management and governance can make a difference.

Users can also improve the quality of LLM responses by providing better prompts. The field of prompt engineering has emerged to serve this need. Users can also “ground” their LLM’s responses by supplying better context through retrieval-augmented generation (RAG) techniques.
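To make the grounding idea concrete, here is a minimal sketch of the RAG pattern: retrieve the passages most relevant to a question, then build a prompt that tells the model to answer only from them. It assumes a crude word-overlap retriever in place of the vector database and embedding model a real system would use, and the function names (`retrieve`, `build_grounded_prompt`) and sample documents are hypothetical. No LLM is called; the output is just the prompt you would send.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for a real embedding model."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents that share the most words with the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_grounded_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Prepend the retrieved passages and tell the model to stay within them."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


# Hypothetical knowledge base for illustration.
docs = [
    "Our return policy allows refunds within 30 days of purchase.",
    "The headquarters is located in Palo Alto.",
    "Support is available by chat from 9am to 5pm.",
]
print(build_grounded_prompt("What does the return policy say about refunds?", docs, k=1))
```

The "say you don't know" instruction matters as much as the retrieval: it gives the model an explicit alternative to inventing an answer when the context comes up empty.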

Instead of using a general-purpose LLM, organizations can fine-tune open source LLMs on smaller sets of domain- or industry-specific data to improve accuracy within that domain or industry. Similarly, a new generation of reasoning models, such as DeepSeek-R1 and OpenAI o1, that are trained on smaller domain-specific data sets include a feedback mechanism that lets the model explore different ways to answer a question: the so-called “reasoning” steps.

Implementing guardrails is another technique. Some organizations use a second, specially crafted AI model to interpret the results of the primary LLM. When a hallucination is detected, the second model can tweak the input or the context until the results come back clean. Similarly, keeping a human in the loop to detect when an LLM is headed off the rails can help avoid some of LLMs’ worst fabrications.
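The guardrail loop described above might look roughly like this sketch: generate an answer, have a checker judge it, adjust the prompt and retry, and hand off to a human if the answer is still flagged after a few attempts. Everything here is an assumption for illustration. `generate` and `looks_hallucinated` are hypothetical placeholders for the primary LLM and the checker model. The "tweak" just appends a stricter instruction, and the toy stand-ins at the bottom exist only so the sketch runs without any model or API.

```python
from typing import Callable


def guarded_answer(
    question: str,
    generate: Callable[[str], str],
    looks_hallucinated: Callable[[str, str], bool],
    max_attempts: int = 3,
) -> tuple[str, bool]:
    """Ask the primary model, let a checker judge the answer, and retry.

    Returns (answer, needs_human_review). `generate` and `looks_hallucinated`
    are hypothetical stand-ins for the primary LLM and the checker model.
    """
    prompt = question
    answer = ""
    for _ in range(max_attempts):
        answer = generate(prompt)
        if not looks_hallucinated(question, answer):
            return answer, False  # checker is satisfied
        # Tweak the input: this sketch just appends a stricter instruction.
        prompt = (
            question
            + "\nOnly state facts you can support; otherwise reply 'I don't know.'"
        )
    return answer, True  # still flagged: escalate to a human in the loop


# Toy stand-ins so the sketch runs without any model or API.
def toy_llm(prompt: str) -> str:
    if "Only state facts" in prompt:
        return "I don't know."
    return "The moon is made of cheese."


def toy_checker(question: str, answer: str) -> bool:
    return "cheese" in answer


print(guarded_answer("What is the moon made of?", toy_llm, toy_checker))
# ("I don't know.", False)
```

The second element of the returned tuple is the hook for the human-in-the-loop step: anything still flagged after `max_attempts` goes to a reviewer instead of the user.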

AI Hallucination Rates

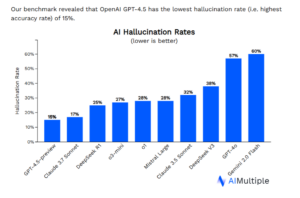

When ChatGPT first came out, its hallucination rate was around 15% to 20%. The good news is that the hallucination rate appears to be going down.

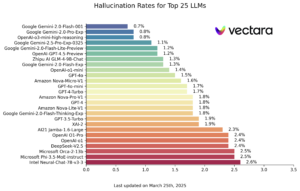

For instance, Vectara’s Hallucination Leaderboard uses the Hughes Hallucination Evaluation Model (HHEM), which scores the odds of an output being true or false on a range from 0 to 1. Vectara’s leaderboard currently shows several LLMs with hallucination rates below 1%, led by Google Gemini-2.0 Flash. That’s a big improvement from a year ago, when the leaderboard showed the top LLMs had hallucination rates of around 3% to 5%.
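Here is one way a 0-to-1 score like this can become a leaderboard-style hallucination rate: count the outputs that fall below a cutoff and divide by the total. This is a sketch under stated assumptions. The 0.5 cutoff is an assumption, as is the convention that scores near 1 mean "consistent with the source." The example scores are hypothetical and are not real leaderboard data.

```python
def hallucination_rate(scores: list[float], threshold: float = 0.5) -> float:
    """Fraction of outputs whose consistency score falls below `threshold`.

    Assumes each score lies in [0, 1], where values near 1 mean the output is
    judged consistent with its source and values near 0 mean it is not.
    """
    if not scores:
        raise ValueError("need at least one score")
    if any(not 0.0 <= s <= 1.0 for s in scores):
        raise ValueError("scores must lie in [0, 1]")
    flagged = sum(1 for s in scores if s < threshold)
    return flagged / len(scores)


# Hypothetical scores for five summaries (illustrative, not real leaderboard data).
example_scores = [0.97, 0.88, 0.12, 0.91, 0.64]
print(f"{hallucination_rate(example_scores):.0%}")  # 1 of 5 below 0.5 -> 20%
```

The cutoff is a design choice. Raising it counts more borderline outputs as hallucinations and inflates the rate, which is one reason figures from different benchmarks are hard to compare directly.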

Other hallucination measures don’t show quite the same improvement. The research arm of AIMultiple benchmarked nine LLMs on their ability to recall information from CNN articles. The top-scoring LLM was GPT-4.5 preview, with a 15% hallucination rate. Google’s Gemini-2.0 Flash came in at 60%.

“LLM hallucinations have far-reaching effects that go well beyond small errors,” AIMultiple Principal Analyst Cem Dilmegani wrote in a March 28 blog post. “Incorrect information produced by an LLM could result in legal ramifications, especially in regulated sectors such as healthcare, finance, and legal services. Organizations could be penalized severely if hallucinations caused by generative AI lead to infractions or negative consequences.”

High-Stakes AI

One company working to make AI usable for some high-stakes use cases is the search company Pearl. The company combines an AI-powered search engine with human expertise in professional services to minimize the odds that a hallucination will reach a user.

Pearl has taken steps to minimize the hallucination rate in its AI-powered search engine, which Pearl CEO Andy Kurtzig said is 22% more accurate than ChatGPT and Gemini out of the box. The company does that with the standard techniques, including multiple models and guardrails. Beyond that, Pearl has contracted with 12,000 experts in fields like medicine, law, auto repair, and pet health who can provide a quick sanity check on AI-generated answers to drive the accuracy rate up further.

“So for example, if you have a legal issue or a medical issue or an issue with your pet, you’d start with the AI, get an AI answer through our superior quality system,” Kurtzig told BigDATAwire. “And then you’d get the ability to then want to get a verification from an expert in that field, and then you can even take it one step further and have a conversation with the expert.”

Kurtzig said there are three major unresolved problems around AI: the persistent problem of AI hallucinations; mounting reputational and financial risk; and failing business models.

“Our estimate on the state of the art is roughly a 37% hallucination level in the professional services categories,” Kurtzig said. “If your doctor was 63% right, you’d be not only pissed, you’d be suing them for malpractice. That’s awful.”

Big, rich AI companies are running a real financial risk by putting out AI models that are prone to hallucinating, Kurtzig said. He cites a Florida lawsuit filed by the parents of a 14-year-old boy who killed himself when an AI chatbot suggested it.

“When you’ve got a system that’s hallucinating at any rate, and you’ve got really deep pockets on the other end of that equation…and people are using it and relying, and these LLMs are giving these highly confident answers, even when they’re completely wrong, you’re going to end up with lawsuits,” he said.

Diminishing AI Returns

Anthropic CEO Dario Amodei recently made headlines when he claimed that 90% of coding work would be done by AI within months. Kurtzig, who employs 300 developers, doesn’t see that happening anytime soon. The real productivity gains are somewhere between 10% and 20%, he said.

According to Nvidia CEO Jensen Huang, the combination of reasoning models and AI agents will herald a new era of productivity, not to mention a 100x increase in inference workloads to occupy all those Nvidia GPUs. However, while reasoning models like DeepSeek can run more efficiently than Gemini or GPT-4.5, Kurtzig doesn’t see them advancing the state of the art.

“They’re hitting diminishing returns,” Kurtzig said. “So each new percentage point of quality is really expensive. It’s a lot of GPUs. One data source I saw from Georgetown says to get another 10% improvement, it’s going to cost $1 trillion.”

Ultimately, AI may pay off, he said. But there’s going to be quite a bit of pain before we get to the other side.

“These three fundamental problems are each huge and unsolved, and they will cause us to head into the trough of disillusionment in the hype cycle,” he said. “Quality is an issue, and we’re hitting diminishing returns. Risk is a huge issue that’s just starting to emerge. There’s a real cost there in both human lives and money. And then these companies don’t make money. Almost all of them are losing money hand over fist.

“We’ve got a trough of disillusionment to get through,” he added. “There’s a beautiful plateau of productivity out on the other end, but we haven’t hit the trough of disillusionment yet.”
