Relying on a single source for critical information poses risks. This applies to large language models as well. You might receive a biased answer or a hallucination. The LLM Council addresses this by gathering diverse opinions. It uses a multi-model AI approach to improve accuracy. The tool mimics a human board meeting. It makes models debate and critique each other. Through AI peer review, the system filters out weak answers. We'll explore how AI consensus builds more reliable outputs.

What Is the LLM Council?

The LLM Council is a software project created by Andrej Karpathy. It serves as a lightweight interface for querying multiple AI models at once. The concept mimics a group of experts sitting in a room. You ask a question, and several experts give their initial thoughts. They then review each other's work. Finally, a leader synthesizes the best points into one answer.

The tool runs as a simple web application. It uses a Python backend and a React frontend. The system does not rely on one provider. Instead, it connects to an aggregation service called OpenRouter. This lets it access models from OpenAI, Google, Anthropic, and others simultaneously.
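Because OpenRouter exposes an OpenAI-compatible chat completions endpoint, a single council member's call can be sketched with only the Python standard library. This is a minimal sketch, not the project's backend code: the helper names `build_request` and `query_model` are hypothetical, and it assumes your key is available in the `OPENROUTER_API_KEY` environment variable.

```python
import json
import os
import urllib.request

# OpenRouter's OpenAI-compatible chat completions endpoint.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for one model."""
    payload = {
        "model": model,  # an OpenRouter identifier such as "openai/gpt-4o-mini"
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def query_model(model: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the request and return the text of the first choice."""
    api_key = os.environ["OPENROUTER_API_KEY"]  # assumption: key exported in the shell
    req = build_request(model, prompt, api_key)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Only the `model` string changes between providers, which is what makes it cheap to put models from different vendors side by side.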

To learn more about the LLM Council, visit the official GitHub repository.

The Three-Stage Workflow

The power of the LLM Council lies in its process. It breaks a single request into three distinct stages.

- Individual Responses (Stage 1): The user sends a prompt to the council. The system forwards this prompt to all active LLMs. Each model generates an answer independently. This prevents groupthink at the start, so you get a diverse range of perspectives.

- Peer Review (Stage 2): The system takes the responses from Stage 1 and anonymizes them. It sends these answers back to the council members. Each model critiques the anonymized responses and ranks them based on accuracy and logic. This step introduces AI peer review into the workflow. The underlying assumption is that models are often better at grading answers than at writing them.

- The Consensus (Stage 3): A designated “Chairman” model receives all the data. It sees the original answers and the critiques. The Chairman synthesizes this information, resolves conflicts, and merges the best insights. The result is a final answer based on AI consensus.
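The three stages can be sketched as a small orchestration function. This is an illustrative sketch, not Karpathy's implementation: the function name `run_council`, the prompt wording, the "Response A/B/C" labels, and the injected `ask` callable (which you would back with a real API call) are all assumptions. Injecting `ask` keeps the control flow testable without network access.

```python
from typing import Callable, Dict, List

# Hypothetical model caller: (model_id, prompt) -> response text.
Ask = Callable[[str, str], str]


def run_council(question: str, council: List[str], chairman: str, ask: Ask) -> Dict:
    # Stage 1: every council member answers the question independently.
    answers = {model: ask(model, question) for model in council}

    # Stage 2: hide model names behind neutral labels, then ask each member to review.
    labels = {model: f"Response {chr(ord('A') + i)}" for i, model in enumerate(council)}
    anonymized = "\n\n".join(f"{labels[m]}:\n{answers[m]}" for m in council)
    review_prompt = (
        f"Question: {question}\n\n{anonymized}\n\n"
        "Critique each response and rank them from best to worst."
    )
    reviews = {model: ask(model, review_prompt) for model in council}

    # Stage 3: the Chairman sees the anonymized answers plus every critique.
    critiques = "\n\n".join(
        f"Reviewer {i + 1}:\n{text}" for i, text in enumerate(reviews.values())
    )
    chairman_prompt = (
        f"Question: {question}\n\nAnswers:\n{anonymized}\n\n"
        f"Peer reviews:\n{critiques}\n\n"
        "Synthesize the best points into one final answer."
    )
    final = ask(chairman, chairman_prompt)
    return {"answers": answers, "labels": labels, "reviews": reviews, "final": final}
```

For clarity this sketch calls models one after another; a real deployment would likely issue the Stage 1 and Stage 2 calls concurrently, since calls within a stage do not depend on each other.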

Why Do Multi-Model Systems Matter?

A single model has a fixed set of biases. It relies on specific training data. If that data contains errors, the output will too. A multi-model AI system reduces this risk. It works like an ensemble in statistics: if one model makes a mistake, another may get it right.
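The ensemble intuition can be made concrete with a textbook calculation. Assuming, unrealistically, that each model is correct with the same probability p and that errors are independent, a simple majority vote over n models is correct with probability equal to the sum over k > n/2 of C(n, k) p^k (1 - p)^(n - k). The sketch below computes this. Note that the Council itself does not take a majority vote (a Chairman synthesizes the answers), and real LLMs share training data, so their errors are correlated and the actual gain is smaller.

```python
from math import comb


def majority_accuracy(n: int, p: float) -> float:
    """Probability that a strict majority of n independent voters,
    each correct with probability p, is correct."""
    if n < 1 or not 0.0 <= p <= 1.0:
        raise ValueError("need n >= 1 and 0 <= p <= 1")
    # Sum over every vote count k that forms a strict majority.
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1))


for n in (1, 3, 5):
    print(n, round(majority_accuracy(n, 0.7), 3))
```

With p = 0.7, this gives 0.7 for one model, 0.784 for three, and 0.837 for five, showing diminishing but real returns under the independence assumption.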

Complex reasoning tasks require this depth. A single model might miss a subtle nuance in a legal document; a council of models is more likely to catch it. The peer review phase acts as a filter, helping to flag hallucinations before the user sees the final result.

Research supports this ensemble approach. A 2024 study by MIT researchers on “Debating LLMs” found that models produce more accurate results when they critique each other. The LLM Council applies this idea in a practical interface.

Strategic Relevance for Developers

The LLM Council represents a shift in how we build AI applications. It treats LLMs as interchangeable components. You are not locked into one vendor. You can swap models in and out based on performance.

This architecture also helps with evaluation. You can see which model performs best in real time. It acts as a benchmark for your specific use cases. You might find that smaller, cheaper models perform well when guided by larger ones.
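If you keep the Stage 2 results across many of your own queries, tallying how often each model's answer is ranked first gives a rough, use-case-specific leaderboard. This is a hypothetical sketch: the `sessions` format (one best-to-worst list of de-anonymized model IDs per query) is an assumption rather than something the tool exports, and the model names and rankings below are invented placeholders.

```python
from collections import Counter
from typing import Iterable, List


def first_place_counts(sessions: Iterable[List[str]]) -> Counter:
    """Count how often each model was ranked first.

    Each session is a best-to-worst list of model IDs for one query;
    empty sessions (for example, failed rankings) are skipped.
    """
    return Counter(ranking[0] for ranking in sessions if ranking)


# Invented example data, not real results.
sessions = [
    ["model-b", "model-a", "model-c"],
    ["model-a", "model-b", "model-c"],
    ["model-b", "model-c", "model-a"],
]
for model, wins in first_place_counts(sessions).most_common():
    print(f"{model}: {wins}")
```

Counting first places is deliberately crude; averaging full positions over many queries would use more of the ranking information.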

Hands-On: Running the Council

You can run the LLM Council on your local machine. You need basic knowledge of the command line.

Prerequisites

You need Python and Node.js installed. You also need an API key from OpenRouter, which gives you access to the large language models.

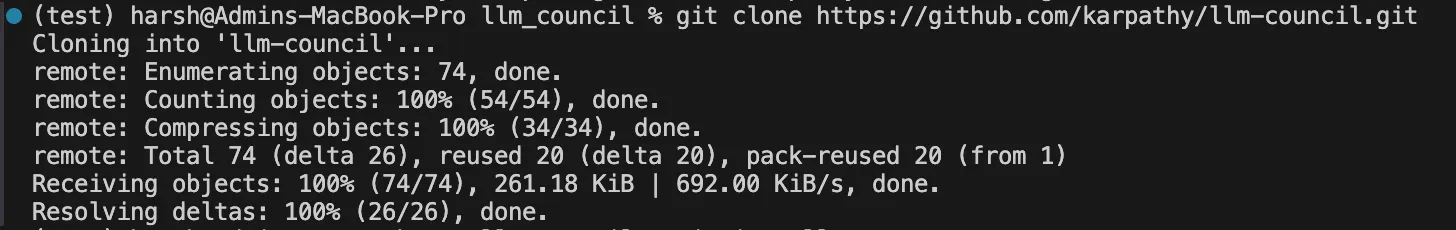
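Before cloning, a quick check that the required tools are on your `PATH` can save a failed install. This is an optional helper of our own, not part of the repository; it uses only `shutil.which` from the standard library and does not verify minimum versions.

```python
import shutil
import sys
from typing import Iterable, List


def missing_tools(tools: Iterable[str] = ("git", "node", "npm")) -> List[str]:
    """Return the names of command-line tools that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    # Report which Python interpreter is running this check.
    print("Python", sys.version.split()[0])
    missing = missing_tools()
    print("Missing:", ", ".join(missing) if missing else "nothing")
```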

Step 1: Get the Code

Clone the official repository from GitHub.

```bash
git clone https://github.com/karpathy/llm-council.git
```

Now transfer (utilizing change listing) to the principle folder

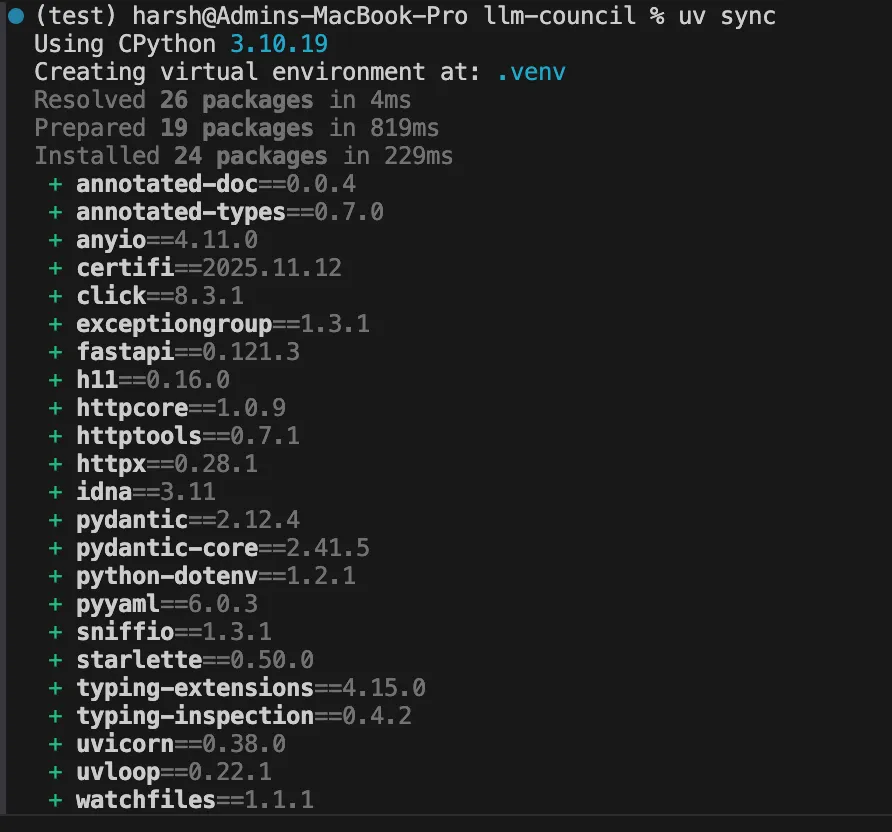

cd llm-councilStep 2: Set up Dependencies

Karpathy suggests using uv for Python package management.

```bash
pip install uv
uv sync
```

Next, install the JavaScript packages. Make sure you have npm installed on your system. If not, head over to https://nodejs.org/en/download

Now move to the frontend folder and install the npm libraries with the following commands:

```bash
cd frontend
npm install
cd ..
```

Step 3: Configure the Environment

Now, in the main folder, create a .env file and add your API key:

```
OPENROUTER_API_KEY=your_key_here
```

Inside the backend folder, you can also edit config.py to change the council members.

For example, we swapped in a mix of models, including two from OpenRouter's free tier (the identifiers ending in `:free`), to keep costs low for a few test queries.

# Council members - listing of OpenRouter mannequin identifiers

COUNCIL_MODELS = [

"openai/gpt-4o-mini",

"x-ai/grok-4.1-fast:free",

"meta-llama/llama-3.3-70b-instruct:free"

]

# Chairman mannequin - synthesizes ultimate response

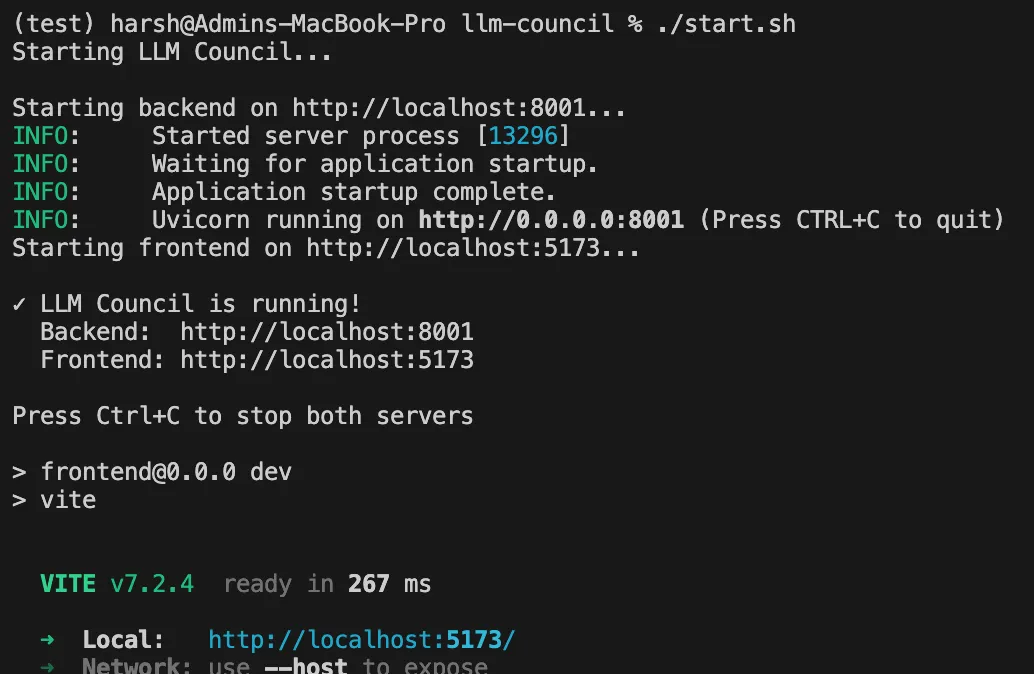

CHAIRMAN_MODEL = "openai/gpt-4o"Step 4: Run the Software

Use the provided start script:

```bash
./start.sh
```

Open your browser to the localhost address. You can now start a session with your multi-model AI team.

Testing the LLM Council

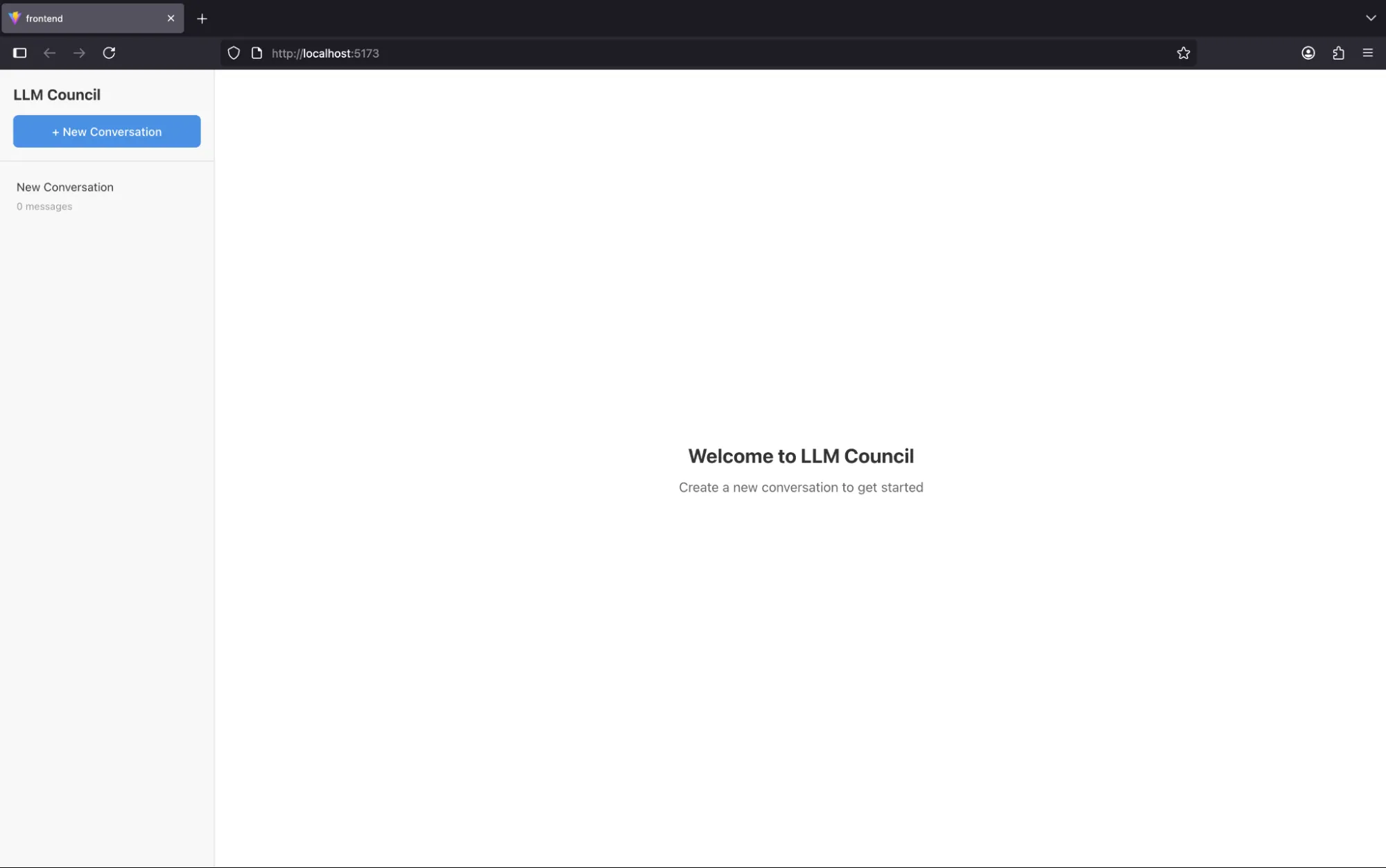

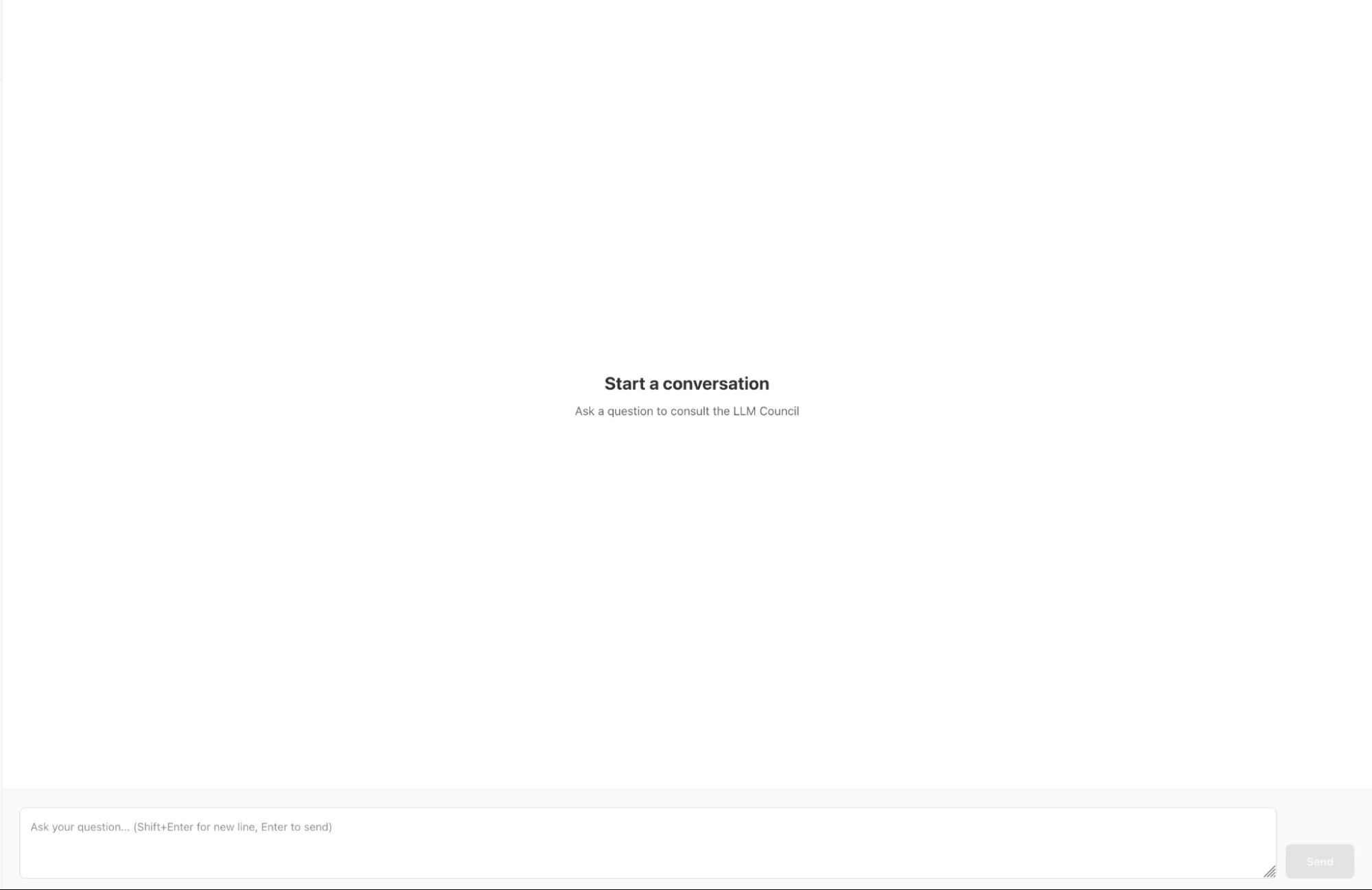

When you start the application, it looks like a chatbot.

We can start a conversation by clicking the “New Conversation” button.

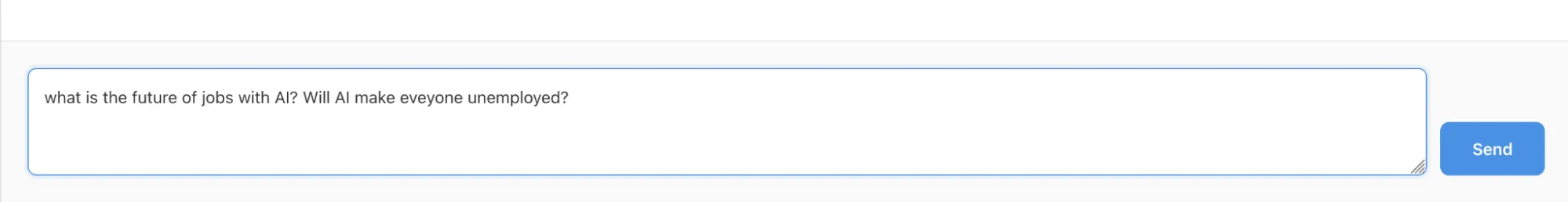

Let’s ask a simple question and hit Send.

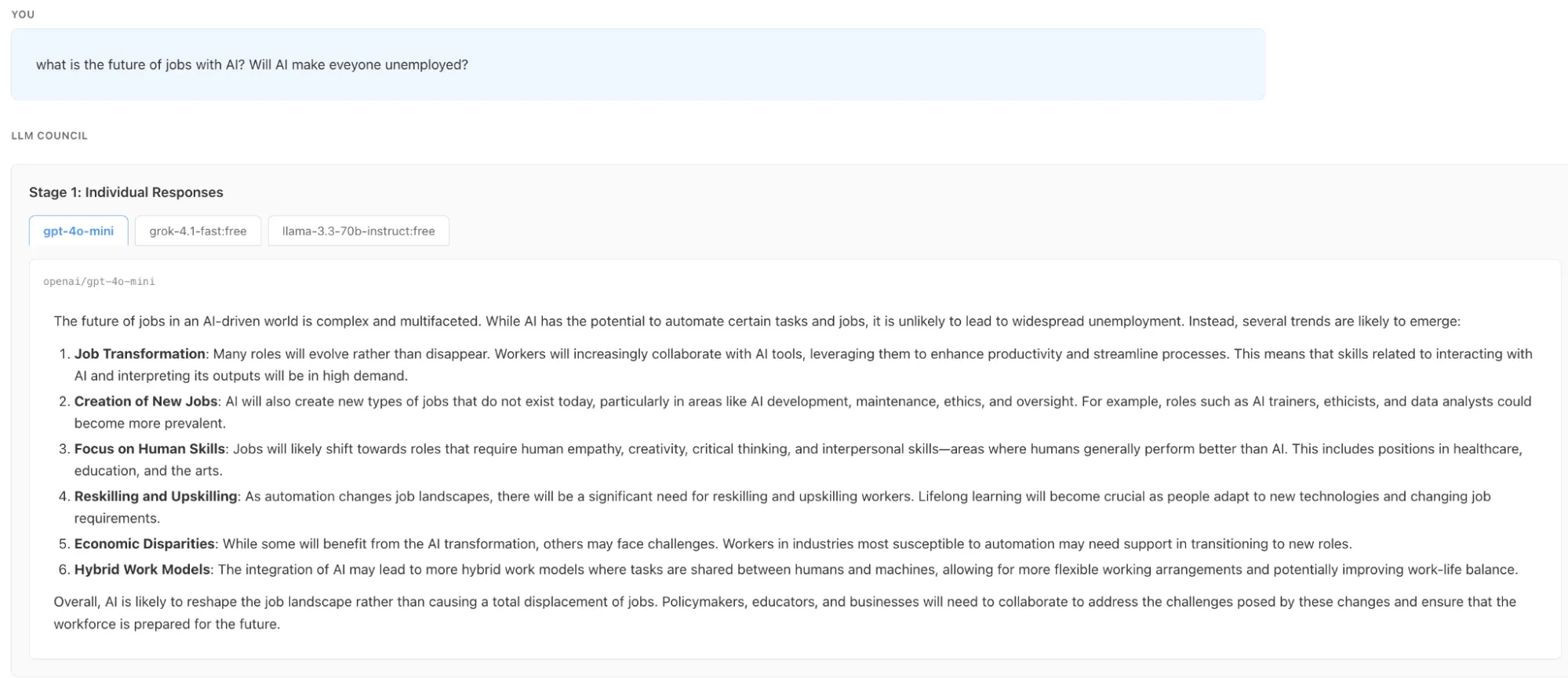

As described in the Three-Stage Workflow section, the first stage begins with individual responses.

Once the first stage is complete, all three LLMs have given their individual responses. We can view each one by clicking on the LLM's name.

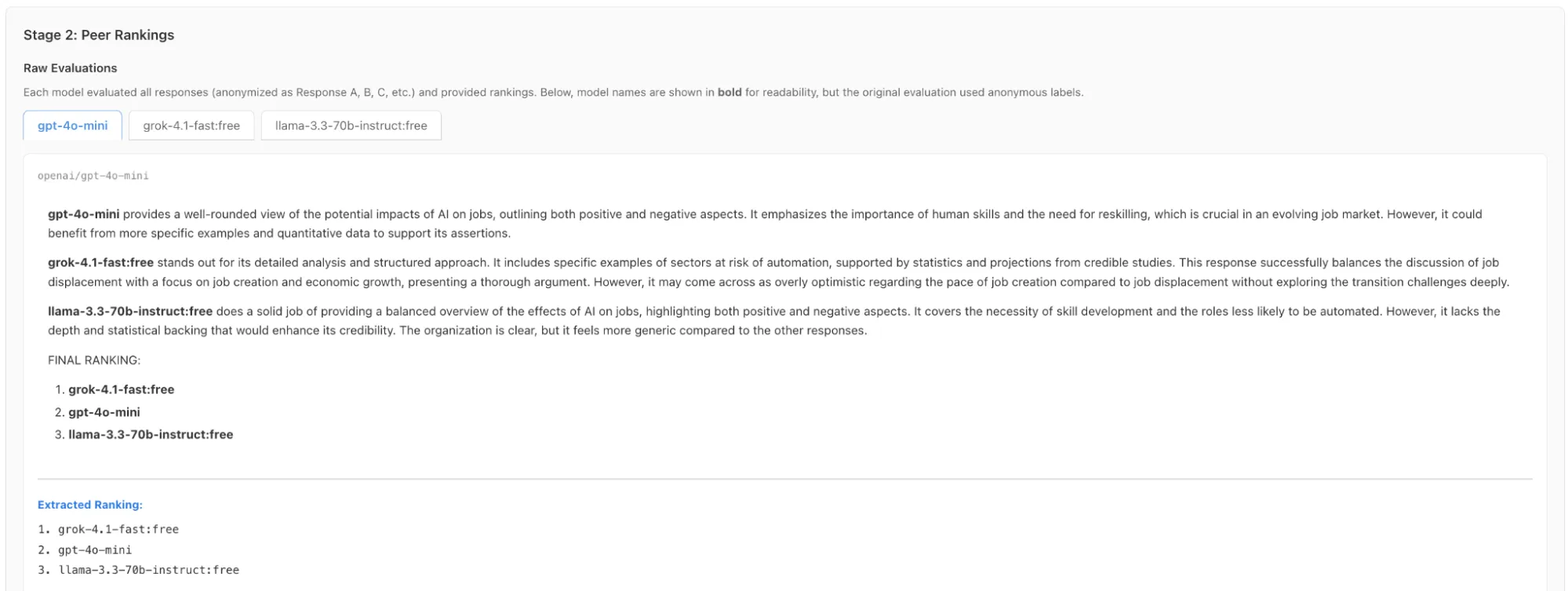

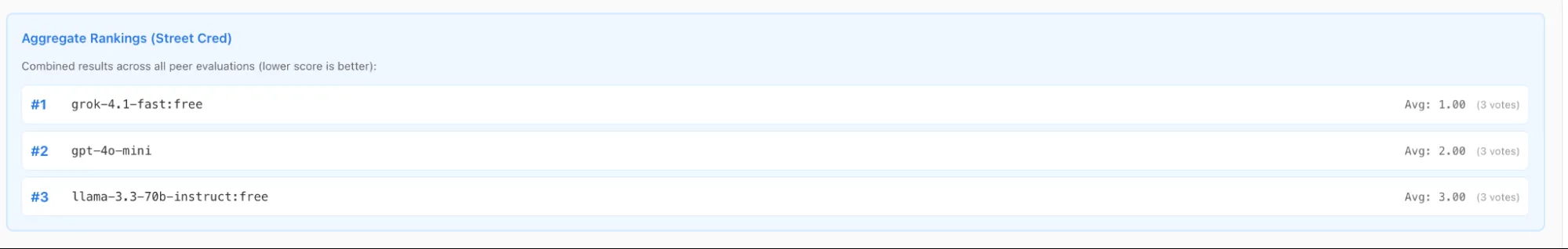

In the second stage, we can see how the LLMs ranked each other's responses without knowing who generated them.

It also shows the combined ranking across all council members.
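A combined ranking can be computed as each response's average position across reviewers. The sketch assumes every reviewer ends its critique with a `FINAL RANKING:` line followed by entries such as `1. Response B`; that format, the helper names, and the averaging rule are assumptions for illustration, so check the project's backend for how it actually parses and aggregates rankings. The `labels` mapping (model to anonymous label) is reversed only after all votes are counted, so the anonymity of Stage 2 is preserved until the end.

```python
import re
from collections import defaultdict
from typing import Dict, List, Tuple

# Matches lines such as "1. Response B" (hypothetical reviewer output format).
RANK_LINE = re.compile(r"^\s*\d+\.\s*(Response [A-Z])\s*$", re.MULTILINE)


def parse_ranking(review: str) -> List[str]:
    """Extract anonymous labels, best first, from the text after 'FINAL RANKING:'.

    Returns an empty list if the reviewer did not include that marker.
    """
    _, _, tail = review.partition("FINAL RANKING:")
    return RANK_LINE.findall(tail)


def aggregate(reviews: List[str], labels: Dict[str, str]) -> List[Tuple[str, float]]:
    """Average each model's position (1 = best) across all parsable reviews."""
    positions = defaultdict(list)
    for review in reviews:
        for pos, label in enumerate(parse_ranking(review), start=1):
            positions[label].append(pos)
    model_for = {label: model for model, label in labels.items()}
    averages = [
        (model_for[label], sum(ps) / len(ps))
        for label, ps in positions.items()
        if label in model_for
    ]
    # Lower average position means a better combined rank.
    return sorted(averages, key=lambda item: item[1])
```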

Now comes the final stage, in which the Chairman LLM synthesizes the best answer and presents it to you.

And that is how Andrej Karpathy's LLM Council works. We tested the installation by asking the Council a complex question: “What is the future of jobs with AI? Will AI make everyone unemployed?” The interface displayed the workflow in real time as models like Grok, GPT-4o mini, and Llama debated and ranked each other's predictions. Finally, the GPT-4o Chairman synthesized these diverse perspectives into a single, balanced conclusion. This experiment illustrates how an ensemble approach can help reduce bias when tackling open-ended problems.

Limitations

Even with all that the LLM Council has to offer, it has a few shortcomings:

- The LLM Council is not a commercial product. Karpathy describes it as a “weekend hack.” It lacks enterprise features: there is no user authentication or advanced security. It runs locally on your machine.

- Cost is another factor. You pay for every model you query. A single question might trigger calls to four or five models, which multiplies the cost of every interaction. It also increases latency, because you must wait for all models to finish before seeing the result.

- AI peer review has its own biases. Models tend to prefer verbose answers, so they may rank a long, vague answer higher than a short, accurate one. The consensus is only as good as the Chairman model.
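The cost multiplication is easy to estimate. Assuming every council member is called once in Stage 1 and once in Stage 2, plus one Chairman call in Stage 3, a council of N models makes 2N + 1 requests per question. The sketch turns that into a rough per-question cost; the helper names are hypothetical, the prices are placeholders to replace with current OpenRouter rates, and it ignores that Stage 2 and Stage 3 prompts are longer (and so pricier) because they include earlier answers.

```python
from typing import Dict, List


def calls_per_question(n_council: int) -> int:
    """API calls for one question: Stage 1 (n) + Stage 2 (n) + Chairman (1)."""
    return 2 * n_council + 1


def estimate_cost(council: List[str], chairman: str, cost_per_call: Dict[str, float]) -> float:
    """Rough cost of one question from an assumed average cost per call for each model."""
    stage_1_and_2 = sum(2 * cost_per_call[model] for model in council)
    return stage_1_and_2 + cost_per_call[chairman]


# Hypothetical placeholder prices in USD per call, not real OpenRouter rates.
prices = {"model-a": 0.0, "model-b": 0.0, "model-c": 0.001, "chair": 0.01}
print(calls_per_question(3))
print(round(estimate_cost(["model-a", "model-b", "model-c"], "chair", prices), 4))
```

Latency follows a similar pattern: even if the calls within a stage run in parallel, each stage needs the previous stage's output, so the wait is roughly the slowest Stage 1 answer plus the slowest Stage 2 review plus the Chairman's response.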

Conclusion

The LLM Council offers a glimpse into the future of AI interaction. It moves us away from trusting a single black box. Instead, it uses AI consensus to arrive at more reliable answers. While it is currently an experimental tool, the core concept is powerful. It suggests that AI peer review can lead to better results.

Frequently Asked Questions

Q. Is the LLM Council free to use?

A. The software code is free and open source, but you pay for the API credits the models consume through OpenRouter.

Q. Is the LLM Council ready for production use?

A. No. It is a prototype designed for experimentation and lacks the security protocols needed for enterprise production use.

Q. Which models can I use in the LLM Council?

A. You can include any model available on OpenRouter, such as GPT-4, Claude 3.5 Sonnet, Gemini Pro, or Llama 3.
