Editor’s take: In the fast-evolving world of GenAI, important advances are happening across chips, software, models, networking, and systems that combine all these elements. That’s what makes it so hard to keep up with the latest AI developments. The difficulty factor becomes even greater if you’re a vendor building these kinds of products and working not only to keep up, but to drive those advances forward. Toss in a competitor that’s virtually cornered the market – and in the process, grown into one of the world’s most valuable companies – and, well, things can appear pretty challenging.

That’s the situation AMD found itself in as it entered its latest Advancing AI event. But rather than letting these potential roadblocks deter them, AMD made it clear that they are inspired to expand their vision, their range of offerings, and the pace at which they are delivering new products.

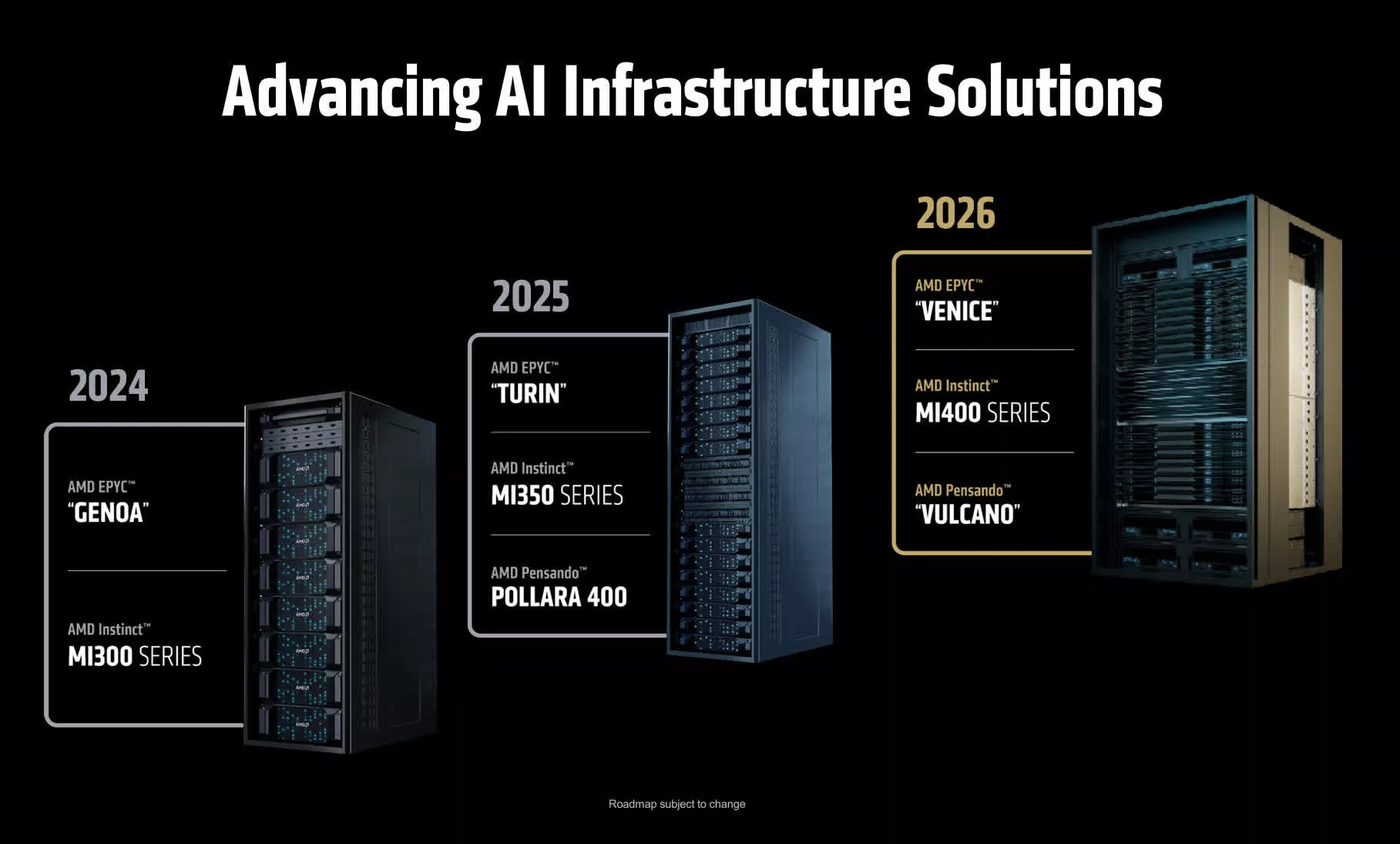

From unveiling their Instinct MI400 GPU accelerators and next-generation “Vulcano” networking chips, to version 7 of their ROCm software and the debut of a new Helios rack architecture, AMD highlighted all the key aspects of AI infrastructure and GenAI-powered solutions. In fact, one of the first takeaways from the event was how far the company’s reach now extends across all the critical parts of the AI ecosystem.

AMD Instinct roadmap

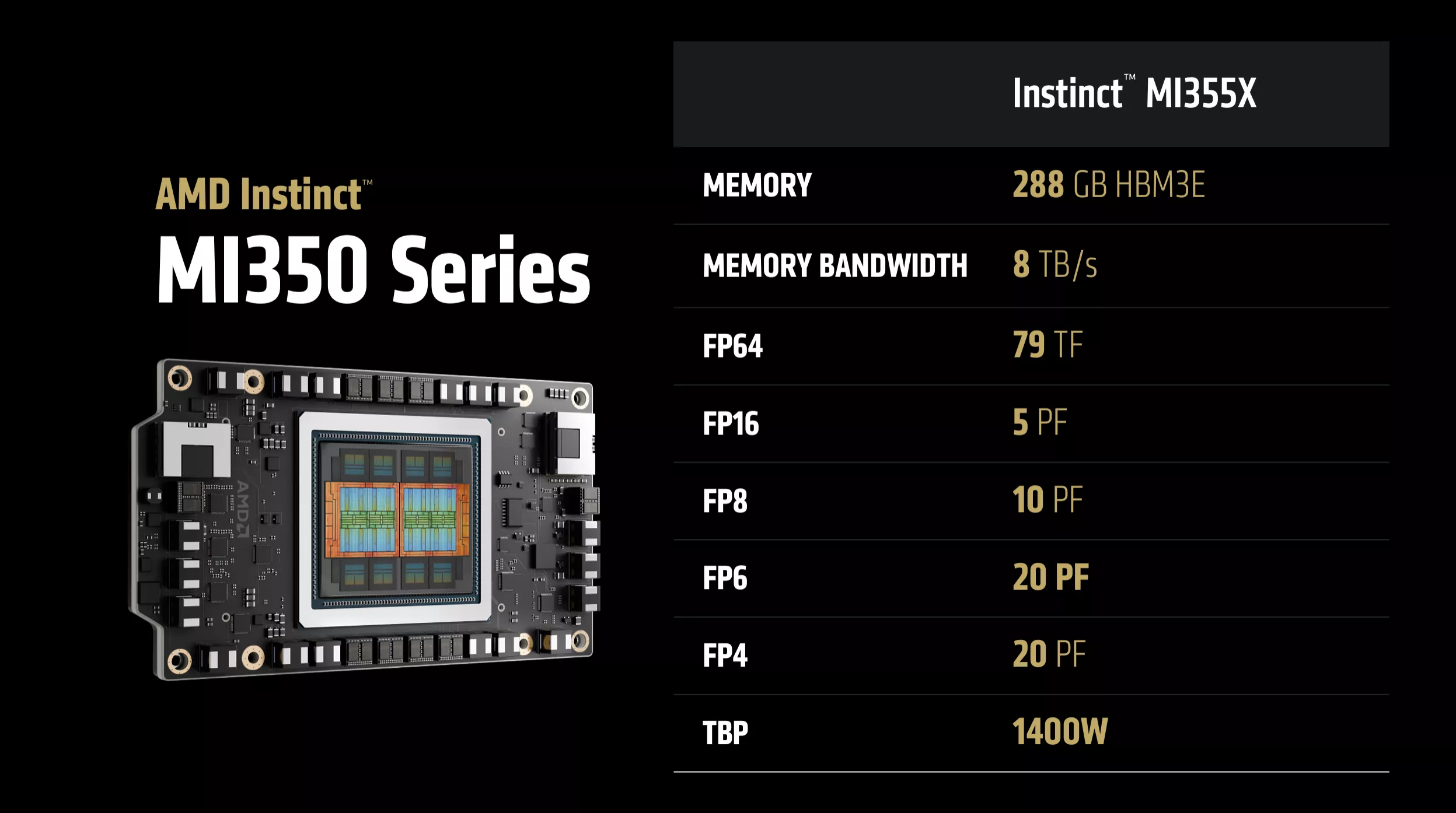

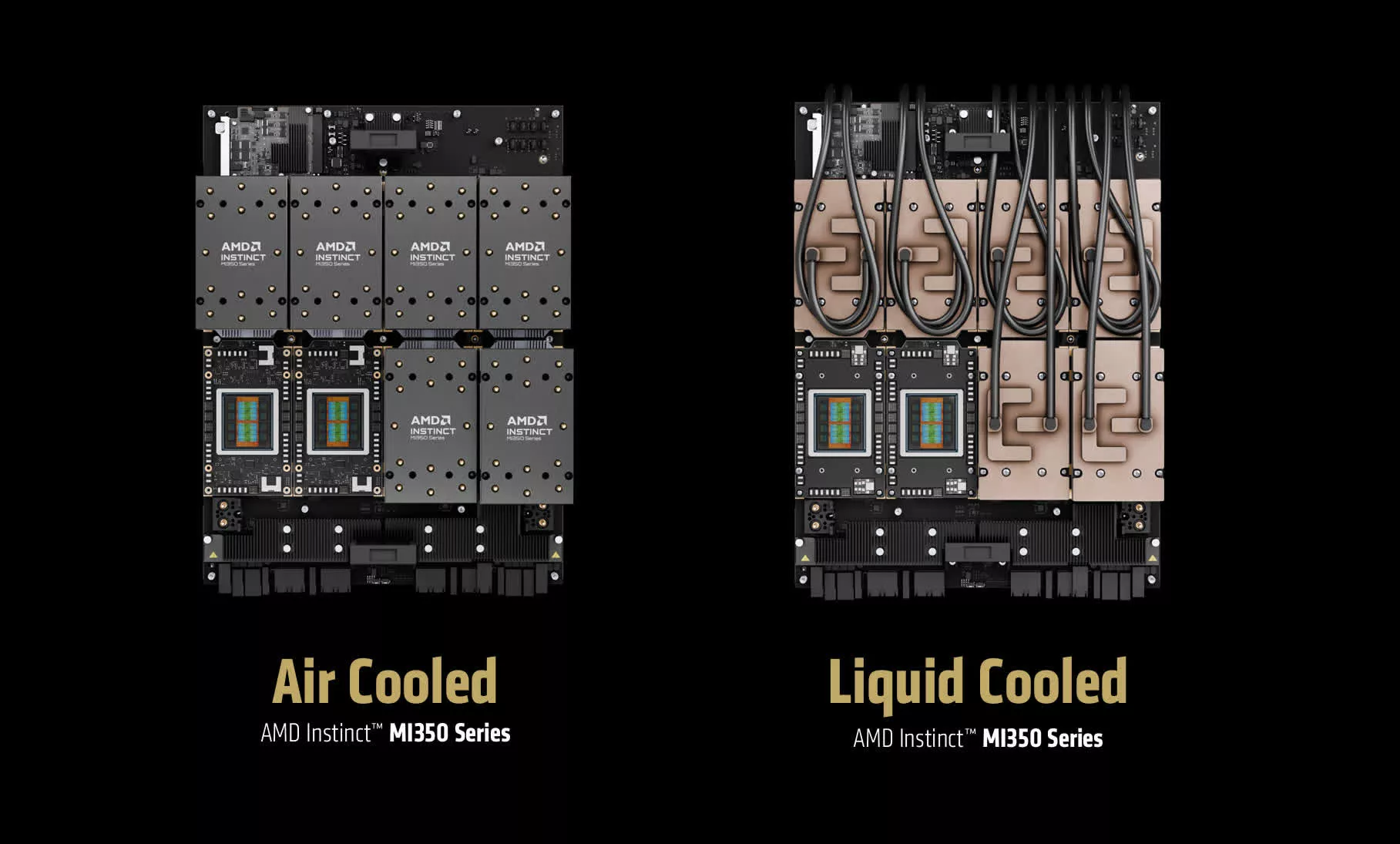

As expected, there was a great deal of focus on the official launch of the Instinct MI350 and higher-wattage, faster-performing MI355X GPU-based chips, which AMD announced last year. Both are built on a 3nm process, feature up to 288 GB of HBM3E memory, and can be used in both liquid-cooled and air-cooled designs.

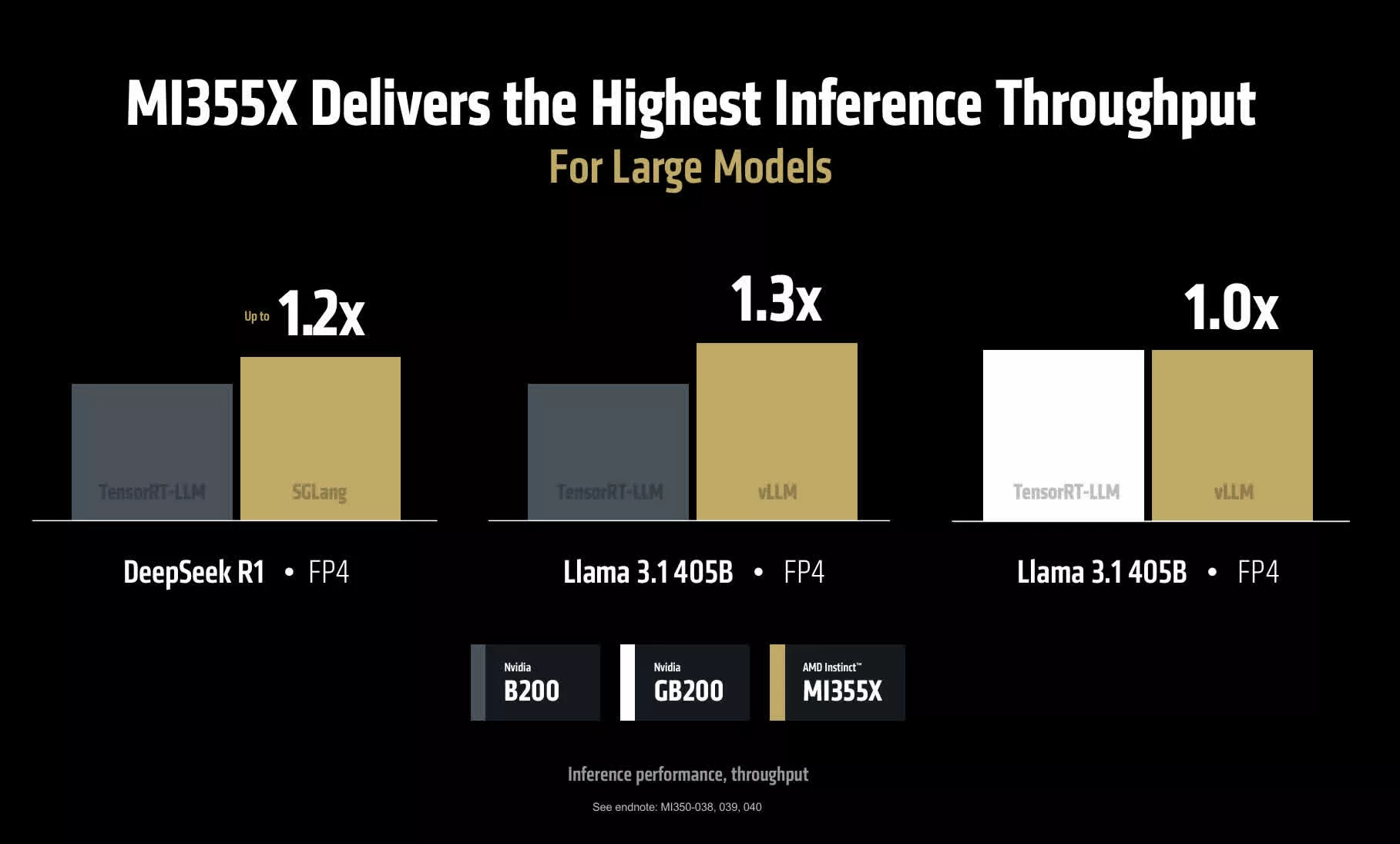

According to AMD’s testing, these chips not only match Nvidia’s Blackwell B200 performance levels, but even surpass them on certain benchmarks. In particular, AMD emphasized improvements in inferencing speed (over 3x faster than the previous generation), as well as cost per token (up to 40% more tokens per dollar vs. the B200, according to AMD).

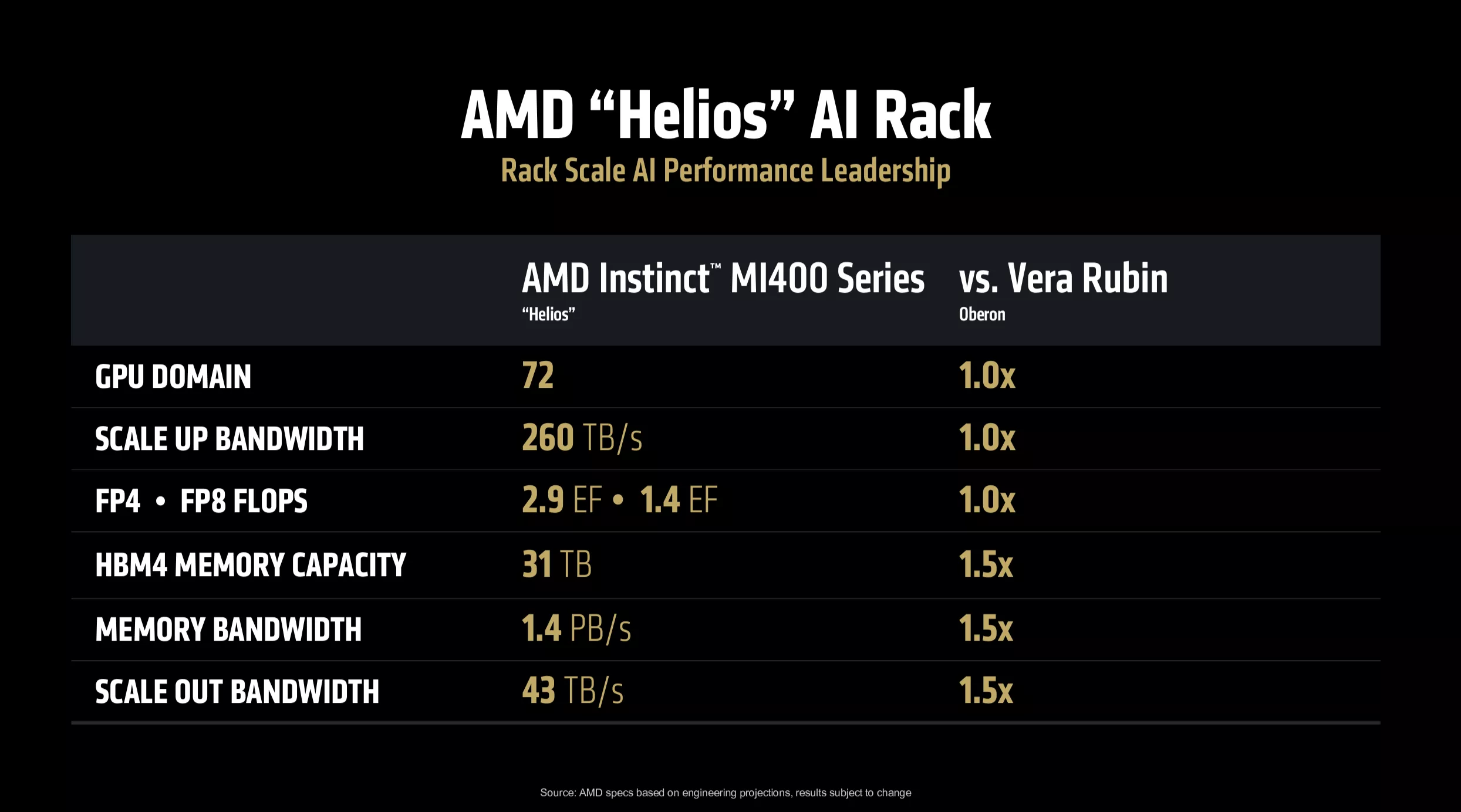

AMD also provided more details on its next-generation MI400, scheduled for release next year, and even teased the MI500 for 2027. The MI400 will offer up to 432 GB of HBM4 memory, memory bandwidth of 19.6 TB/sec, and 300 GB/sec of scale-out bandwidth – all of which will be important for both running larger models and assembling the kinds of large rack systems expected to be needed for next-generation LLMs.

Some of the more surprising announcements from the event focused on networking.

First was a discussion of AMD’s next-generation Pensando networking chip and a network interface card they’re calling the AMD Pensando Pollara 400 AI NIC, which the company claims is the industry’s first shipping AI-powered network card. AMD is part of the Ultra Ethernet Consortium and, not surprisingly, the Pollara 400 uses the Ultra Ethernet standard. It reportedly offers 20% improvements in speed and 20x more capacity to scale than competitive cards using InfiniBand technology.

As with its GPUs, AMD also announced its next-generation networking chip, codenamed “Vulcano,” designed for large AI clusters. It will offer 800 Gb/sec network speeds and up to 8x the scale-out performance for large groups of GPUs when released in 2026.

AMD also touted the new open Ultra Accelerator Link (UAL) standard for GPU-to-GPU and other chip-to-chip connections. A direct answer to Nvidia’s NVLink technology, UAL is based on AMD’s Infinity Fabric and matches the performance of Nvidia’s technology while providing more flexibility by enabling connections between any company’s GPUs and CPUs.

Putting all of these various elements together, arguably the biggest hardware news – both literally and figuratively – from the Advancing AI event was AMD’s new rack architecture designs.

Large cloud providers, neocloud operators, and even some sophisticated enterprises have been moving toward rack-based complete solutions for their AI infrastructure, so it was not surprising to see AMD make these announcements – particularly after acquiring expertise from ZT Systems, a company that designs rack computing systems, earlier this year.

Still, it was an important step to show a complete competitive offering with even more advanced capabilities against Nvidia’s NVL72 and to demonstrate how all the pieces of AMD’s silicon solutions can work together.

In addition to showing systems based on their current 2025 chip offerings, AMD also unveiled their Helios rack architecture, coming in 2026. It will leverage a complete suite of AMD chips, including next-generation Epyc CPUs (codenamed Venice), Instinct MI400 GPUs, and the Vulcano networking chip. What’s important about Helios is that it demonstrates AMD will not only be on equal footing with the next-generation Vera Rubin-based rack systems Nvidia has announced for next year, but may even surpass them.

In fact, AMD arguably took a page from the recent Nvidia playbook by offering a multi-year preview of its silicon and rack-architecture roadmaps, making it clear that they are not resting on their laurels but moving aggressively forward with critical technology developments.

Importantly, they did so while touting what they expect will be equal or better performance from these new offerings. (Of course, all of these are based on estimates of expected performance, which could – and likely will – change for both companies.) Regardless of what the final numbers prove to be, the bigger point is that AMD is clearly confident enough in its current and future product roadmaps to take on the toughest competition. That says a lot.

ROCm and software developments

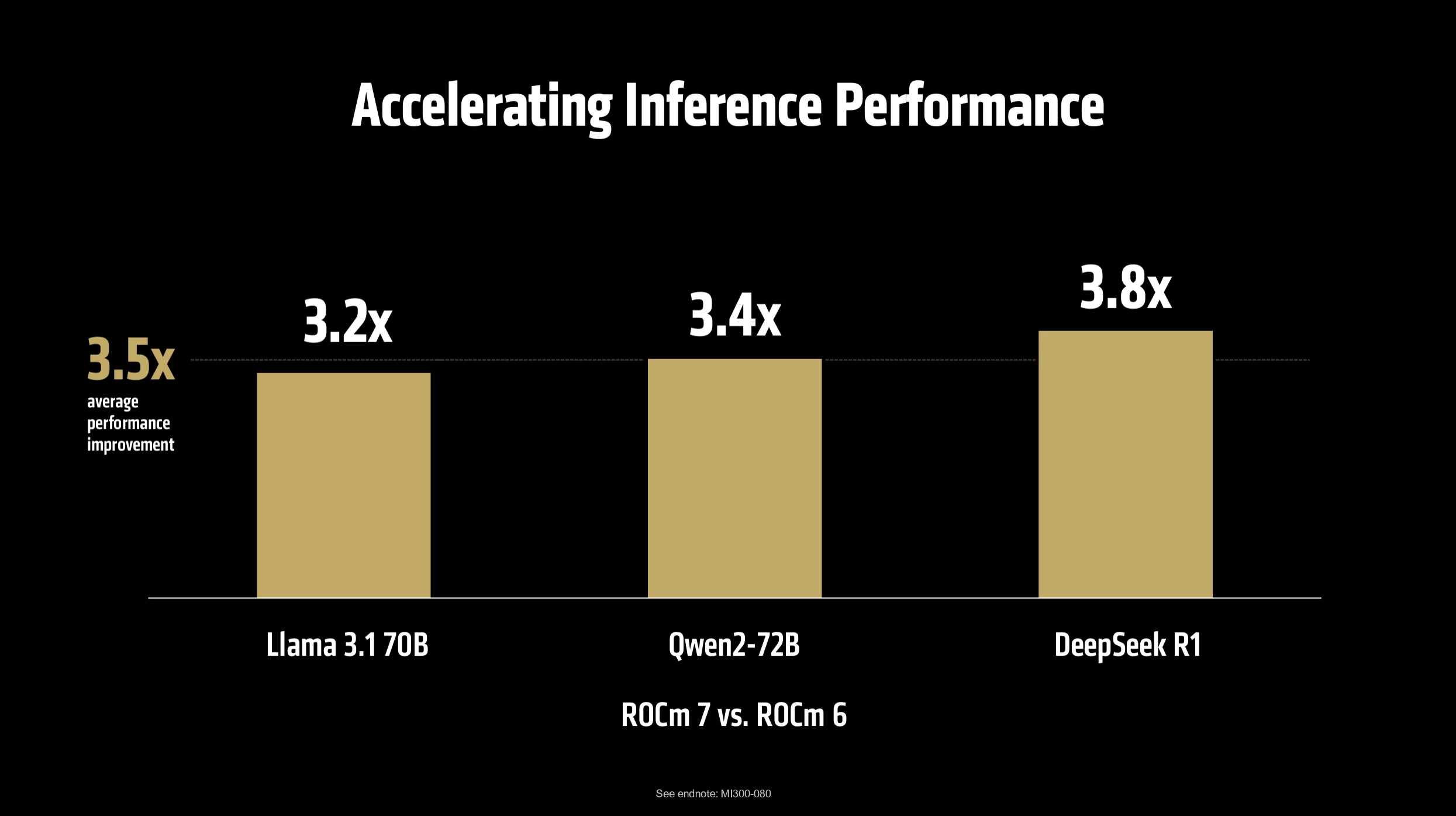

As mentioned earlier, the key software story for AMD was the release of version 7 of its open-source ROCm software stack. The company highlighted multiple performance improvements on inferencing workloads, as well as increased day-zero compatibility with many of the most popular LLMs. They also discussed ongoing work with other critical AI software frameworks and development tools. There was a particular focus on enabling enterprises to use ROCm for their own in-house development efforts through ROCm Enterprise AI.

On their own, some of these changes are modest, but collectively they show clear software momentum that AMD has been building. Strategically, this is critical, because competition against Nvidia’s CUDA software stack continues to be the biggest challenge AMD faces in convincing organizations to adopt its solutions. It will be interesting to see how AMD integrates some of its recent AI software-related acquisitions – including Lamini, Brium, and Untether AI – into its range of software offerings.

One of the more surprising bits of software news from AMD was the integration of ROCm support into Windows and the Windows ML AI software stack. This helps make Windows a more useful platform for AI developers and potentially opens up new opportunities to better leverage AMD GPUs and NPUs for on-device AI acceleration.

Speaking of developers, AMD also used the event to announce its AMD Developer Cloud for software designers, which gives them a free resource (at least initially, via free cloud credits) to access MI300-based infrastructure and build applications with ROCm-based software tools. Again, it is a small but critically important step in demonstrating how the company is working to expand its influence across the AI software development ecosystem.

Clearly, the collective actions the company is taking are starting to make an impact. AMD welcomed a broad range of customers leveraging its solutions in a big way, including OpenAI, Microsoft, Oracle Cloud, Humain, Meta, xAI, and many more.

They also talked about all their work in creating sovereign AI deployments in countries around the world. And ultimately, as the company emphasized at the start of the keynote, it’s all about continuing to build trust among its customers, partners and potential new clients.

AMD has the benefit of being an extremely strong alternative to Nvidia – one that many in the market want to see increase its presence for competitive balance. Based on what was announced at Advancing AI, it looks like AMD is moving in the right direction.

Bob O’Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on X @bobodtech.