Alibaba’s Qwen Team unveiled Qwen3-Max-Preview (Instruct), a new flagship large language model with over one trillion parameters, their largest to date. It is accessible through Qwen Chat, the Alibaba Cloud API, OpenRouter, and as the default in Hugging Face’s AnyCoder tool.

How does it fit into today’s LLM landscape?

This milestone comes at a time when the industry is trending toward smaller, more efficient models. Alibaba’s decision to move upward in scale marks a deliberate strategic choice, highlighting both its technical capabilities and its commitment to trillion-parameter research.

How large is Qwen3-Max and what are its context limits?

- Parameters: >1 trillion.

- Context window: Up to 262,144 tokens (258,048 input, 32,768 output).

- Efficiency feature: Includes context caching to speed up multi-turn sessions.
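
The two caps add up to more than the total window (258,048 + 32,768 = 290,816), so a request presumably cannot max out both at once. The following is a minimal sketch of a budget check, assuming that input and output tokens share the 262,144-token window; that sharing rule, and the helper names `fits_context` and `max_output_for`, are illustrative assumptions rather than documented API behavior.

```python
# Limits quoted in the article.
MAX_CONTEXT_TOKENS = 262_144
MAX_INPUT_TOKENS = 258_048
MAX_OUTPUT_TOKENS = 32_768


def fits_context(input_tokens: int, output_tokens: int) -> bool:
    """Return True if a request respects all three limits.

    Assumption: input and output share the total context window.
    """
    return (
        0 <= input_tokens <= MAX_INPUT_TOKENS
        and 0 <= output_tokens <= MAX_OUTPUT_TOKENS
        and input_tokens + output_tokens <= MAX_CONTEXT_TOKENS
    )


def max_output_for(input_tokens: int) -> int:
    """Largest output budget still available for a given input size."""
    if not 0 <= input_tokens <= MAX_INPUT_TOKENS:
        raise ValueError("input_tokens is outside the supported range")
    return min(MAX_OUTPUT_TOKENS, MAX_CONTEXT_TOKENS - input_tokens)


if __name__ == "__main__":
    print(max_output_for(100_000))           # full output budget is available
    print(max_output_for(MAX_INPUT_TOKENS))  # output budget shrinks at max input
```

Under this assumption, the full 32,768-token output budget is available only while the input stays at or below 229,376 tokens.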

How does Qwen3-Max perform against other models?

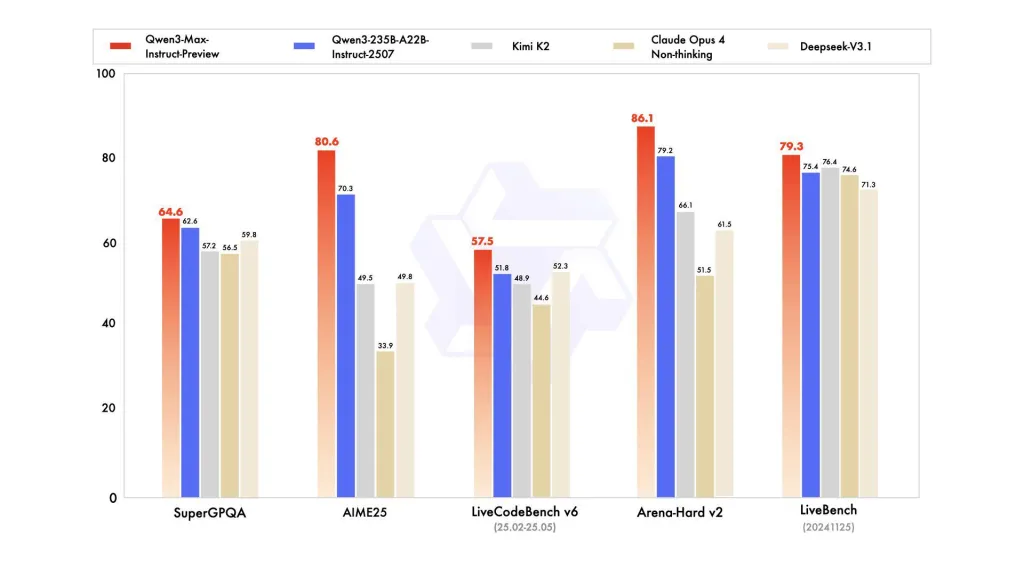

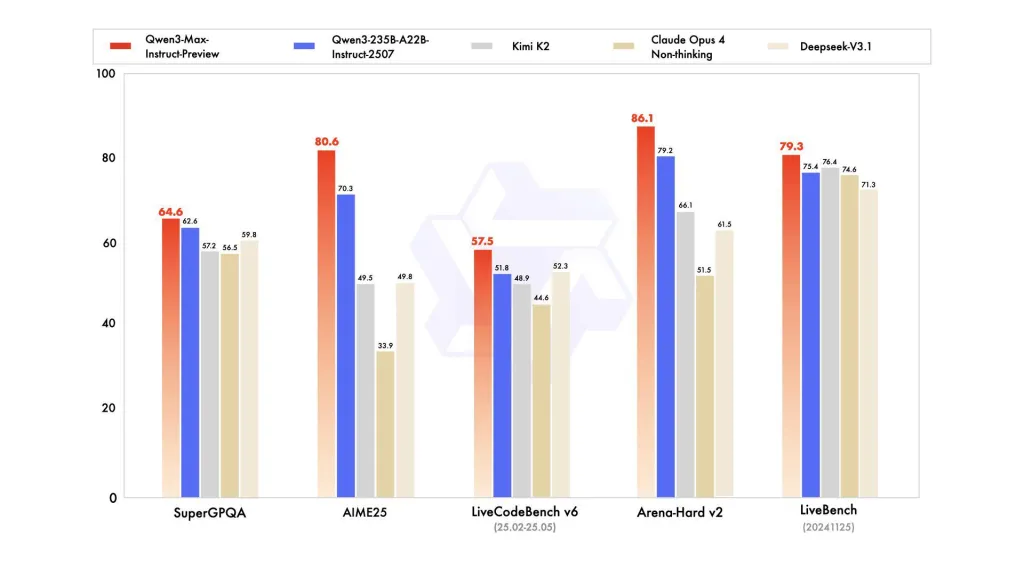

Benchmarks show it outperforms Qwen3-235B-A22B-2507 and competes strongly with Claude Opus 4, Kimi K2, and DeepSeek-V3.1 across SuperGPQA, AIME25, LiveCodeBench v6, Arena-Hard v2, and LiveBench.

What’s the pricing structure for usage?

Alibaba Cloud applies tiered token-based pricing:

- 0–32K tokens: $0.861/million input, $3.441/million output

- 32K–128K tokens: $1.434/million input, $5.735/million output

- 128K–252K tokens: $2.151/million input, $8.602/million output

The model is cost-efficient for smaller tasks, but the per-token price rises significantly for long-context workloads: rates in the top tier are roughly 2.5 times those in the lowest tier.
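
To make the tiers concrete, here is a rough cost estimator. It is a sketch under two assumptions that the article does not state: that the tier is selected by the request's input token count and its rates then apply to all tokens in that request, and that "K" means 1,024 tokens (which makes 252K equal to the 258,048-token input cap). The names `Tier`, `TIERS`, and `estimate_cost_usd` are hypothetical.

```python
from dataclasses import dataclass

K = 1024  # assumption: "K" in the tier bounds means 1,024 tokens


@dataclass(frozen=True)
class Tier:
    max_input_tokens: int    # inclusive upper bound on input tokens
    input_usd_per_m: float   # USD per million input tokens
    output_usd_per_m: float  # USD per million output tokens


# Rates as listed in the article.
TIERS = (
    Tier(32 * K, 0.861, 3.441),
    Tier(128 * K, 1.434, 5.735),
    Tier(252 * K, 2.151, 8.602),
)


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request.

    Assumption: the tier is chosen by input size and its rates apply
    to every input and output token in the request.
    """
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    for tier in TIERS:
        if input_tokens <= tier.max_input_tokens:
            total = (
                input_tokens * tier.input_usd_per_m
                + output_tokens * tier.output_usd_per_m
            )
            return total / 1_000_000
    raise ValueError("input exceeds the largest listed tier")


if __name__ == "__main__":
    print(f"{estimate_cost_usd(10_000, 1_000):.6f}")   # short prompt
    print(f"{estimate_cost_usd(200_000, 1_000):.6f}")  # long-context prompt
```

With these assumptions, a 10,000-token prompt with a 1,000-token reply costs about $0.012, while a 200,000-token prompt with the same reply costs about $0.44.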

How does the closed-source approach impact adoption?

Unlike earlier Qwen releases, this model is not open-weight. Access is restricted to APIs and partner platforms. This choice highlights Alibaba’s commercialization focus but may slow broader adoption in research and open-source communities.

Key Takeaways

- First trillion-parameter Qwen model – Qwen3-Max surpasses 1T parameters, making it Alibaba’s largest and most advanced LLM to date.

- Ultra-long context handling – Supports 262K tokens with caching, enabling extended document and session processing beyond most commercial models.

- Competitive benchmark performance – Outperforms Qwen3-235B and competes with Claude Opus 4, Kimi K2, and DeepSeek-V3.1 on reasoning, coding, and general tasks.

- Emergent reasoning despite design – Though not marketed as a reasoning model, early results show structured reasoning capabilities on complex tasks.

- Closed-source, tiered pricing model – Available via APIs with token-based pricing; economical for small tasks but costly at higher context usage, limiting accessibility.

Summary

Qwen3-Max-Preview sets a new scale benchmark in commercial LLMs. Its trillion-parameter design, 262K context length, and strong benchmark results highlight Alibaba’s technical depth. Yet the model’s closed-source release and steep tiered pricing raise questions about broader accessibility.
