Ensuring the reliability, safety, and performance of AI agents is critical. That’s where agent observability comes in.

This blog post is the third in a six-part blog series called Agent Factory, which shares best practices, design patterns, and tools to help guide you through adopting and building agentic AI.

Seeing is knowing: the power of agent observability

As agentic AI becomes more central to enterprise workflows, ensuring reliability, safety, and performance is critical. That’s where agent observability comes in. Agent observability empowers teams to:

- Detect and resolve issues early in development.

- Verify that agents uphold standards of quality, safety, and compliance.

- Optimize performance and user experience in production.

- Maintain trust and accountability in AI systems.

With the rise of complex multi-agent and multi-modal systems, observability is essential for delivering AI that is not only effective, but also transparent, safe, and aligned with organizational values. Observability empowers teams to build with confidence and scale responsibly by providing visibility into how agents behave, make decisions, and respond to real-world scenarios across their lifecycle.

What is agent observability?

Agent observability is the practice of achieving deep, actionable visibility into the internal workings, decisions, and outcomes of AI agents throughout their lifecycle, from development and testing to deployment and ongoing operation. Key aspects of agent observability include:

- Continuous monitoring: Tracking agent actions, decisions, and interactions in real time to surface anomalies, unexpected behaviors, or performance drift.

- Tracing: Capturing detailed execution flows, including how agents reason through tasks, select tools, and collaborate with other agents or services. This helps answer not just “what happened?” but “why and how did it happen?”

- Logging: Recording agent decisions, tool calls, and internal state changes to support debugging and behavior analysis in agentic AI workflows.

- Evaluation: Systematically assessing agent outputs for quality, safety, compliance, and alignment with user intent, using both automated and human-in-the-loop methods.

- Governance: Enforcing policies and standards to ensure agents operate ethically, safely, and in accordance with organizational and regulatory requirements.
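Tracing and logging work together in practice. Each agent step is recorded as a nested span with attributes, and the span tree is then flattened into readable log lines. The minimal, dependency-free sketch below illustrates this. `Span`, `Tracer`, `render`, and the tool name `lookup_order` are all hypothetical and are not an Azure AI Foundry or OpenTelemetry API. A production setup would more likely emit OpenTelemetry spans to a backend such as Application Insights.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field


@dataclass
class Span:
    """One unit of agent work, such as a reasoning step or a tool call (hypothetical structure)."""
    name: str
    trace_id: str
    attributes: dict = field(default_factory=dict)
    children: list = field(default_factory=list)
    start: float = 0.0
    end: float = 0.0

    @property
    def duration_ms(self) -> float:
        return (self.end - self.start) * 1000


class Tracer:
    """Records nested spans for a single agent run (hypothetical, not a real SDK class)."""

    def __init__(self) -> None:
        self.trace_id = uuid.uuid4().hex
        self.roots: list = []
        self._stack: list = []

    @contextmanager
    def span(self, name: str, **attributes):
        s = Span(name=name, trace_id=self.trace_id, attributes=dict(attributes))
        # Attach to the currently open span, or start a new root span.
        (self._stack[-1].children if self._stack else self.roots).append(s)
        self._stack.append(s)
        s.start = time.perf_counter()
        try:
            yield s
        finally:
            s.end = time.perf_counter()
            self._stack.pop()


def render(span: Span, depth: int = 0) -> list:
    """Flatten a span tree into indented log lines."""
    lines = [f"{'  ' * depth}{span.name} {span.attributes}"]
    for child in span.children:
        lines.extend(render(child, depth + 1))
    return lines


# Usage: trace one hypothetical agent run with a reasoning step and a tool call.
tracer = Tracer()
with tracer.span("agent_run", user_intent="refund request"):
    with tracer.span("reasoning", decision="call lookup_order"):
        pass
    with tracer.span("tool_call", tool="lookup_order", order_id="A123") as s:
        s.attributes["status"] = "ok"

for line in render(tracer.roots[0]):
    print(line)
```

Because every span shares one `trace_id` and keeps its parent/child position, the log shows what the agent did, in what order, and why (via attributes such as `decision`).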

Traditional observability vs agent observability

Traditional observability relies on three foundational pillars: metrics, logs, and traces. These provide visibility into system performance, help diagnose failures, and support root-cause analysis. They are well suited to conventional software systems, where the focus is on infrastructure health, latency, and throughput.

However, AI agents are non-deterministic and introduce new dimensions (autonomy, reasoning, and dynamic decision making) that require a more advanced observability framework. Agent observability builds on traditional methods and adds two critical components: evaluations and governance. Evaluations help teams assess how well agents resolve user intent, adhere to tasks, and use tools effectively. Agent governance helps ensure that agents operate safely, ethically, and in compliance with organizational standards.

This expanded approach enables deeper visibility into agent behavior: not just what agents do, but why and how they do it. It supports continuous monitoring across the agent lifecycle, from development to production, and is essential for building trustworthy, high-performing AI systems at scale.

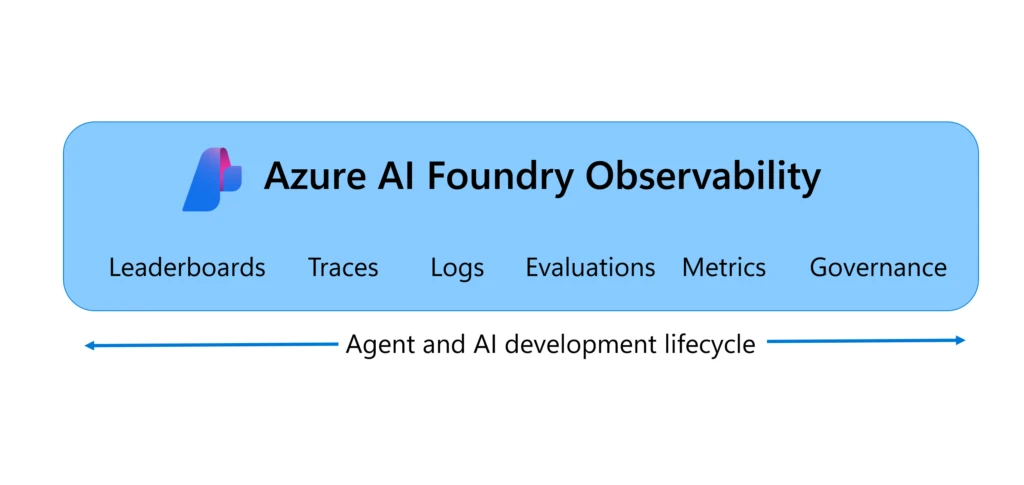

Azure AI Foundry Observability provides end-to-end agent observability

Azure AI Foundry Observability is a unified solution for evaluating, monitoring, tracing, and governing the quality, performance, and safety of your AI systems end to end in Azure AI Foundry, all built into your AI development loop. From model selection to real-time debugging, Foundry Observability capabilities empower teams to ship production-grade AI with confidence and speed. It’s observability, reimagined for the enterprise AI era.

With built-in capabilities like the Agents Playground evaluations, the Azure AI Red Teaming Agent, and Azure Monitor integration, Foundry Observability brings evaluation and safety into every step of the agent lifecycle. Teams can trace each agent flow with full execution context, simulate adversarial scenarios, and monitor live traffic with customizable dashboards. Seamless CI/CD integration enables continuous evaluation on every commit. Governance support through Microsoft Purview, Credo AI, and Saidot integrations helps enable alignment with regulatory frameworks like the EU AI Act, making it easier to build responsible, production-grade AI at scale.

Five best practices for agent observability

1. Pick the right model using benchmark-driven leaderboards

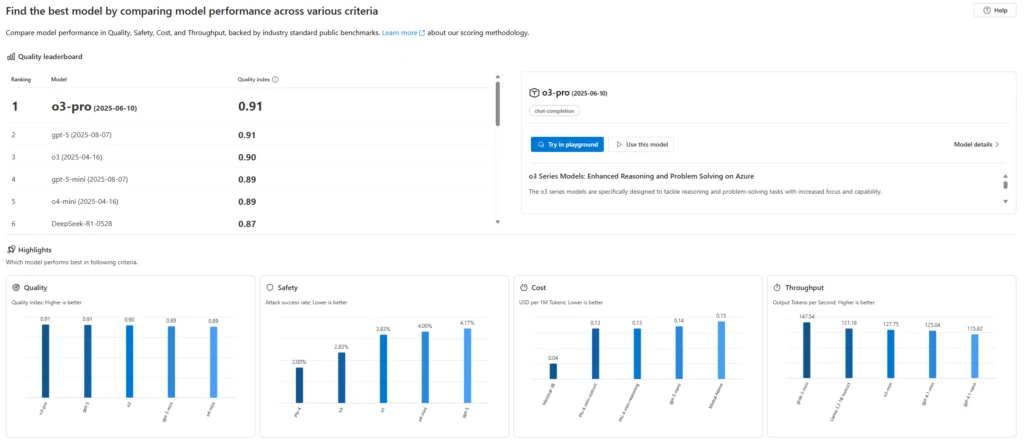

Every agent needs a model, and choosing the right model is foundational to agent success. While planning your AI agent, you need to decide which model is best for your use case in terms of safety, quality, and cost.

You can pick the best model either by evaluating models on your own data or by using Azure AI Foundry’s model leaderboards to compare foundation models out of the box by quality, cost, and performance, backed by industry benchmarks. With Foundry model leaderboards, you can find the leading models for various selection criteria and scenarios, visualize trade-offs among the criteria (e.g., quality vs cost or safety), and dive into detailed metrics to make confident, data-driven decisions.
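One common way to reason about trade-offs like quality vs cost vs safety is a Pareto frontier. A model stays on the frontier unless some other model is at least as good on every criterion and strictly better on one. The sketch below is generic and does not query Foundry’s leaderboards. The model names and scores are made-up placeholders, not real benchmark results, and the budget cutoff is a hypothetical selection rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    quality: float  # higher is better (e.g. a benchmark score)
    cost: float     # lower is better (e.g. price per 1M tokens)
    safety: float   # higher is better


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is no worse than b on every criterion and strictly better on at least one."""
    no_worse = a.quality >= b.quality and a.cost <= b.cost and a.safety >= b.safety
    strictly_better = a.quality > b.quality or a.cost < b.cost or a.safety > b.safety
    return no_worse and strictly_better


def pareto_front(candidates):
    """Keep only candidates that no other candidate dominates."""
    return [c for c in candidates if not any(dominates(o, c) for o in candidates if o is not c)]


# Placeholder numbers for illustration only.
candidates = [
    Candidate("model-a", quality=0.82, cost=10.0, safety=0.90),
    Candidate("model-b", quality=0.78, cost=2.0, safety=0.92),
    Candidate("model-c", quality=0.75, cost=3.0, safety=0.88),  # dominated by model-b
    Candidate("model-d", quality=0.85, cost=15.0, safety=0.85),
]
front = pareto_front(candidates)
print([c.name for c in front])

# Hypothetical rule: best quality on the frontier within a cost budget.
best = max((c for c in front if c.cost <= 5.0), key=lambda c: c.quality)
print(best.name)
```

The frontier narrows the field without committing to fixed weights. The final pick then depends on which constraint (budget, minimum safety score) matters most for your use case.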

Azure AI Foundry’s model leaderboards gave us the confidence to scale client solutions from experimentation to deployment. Comparing models side by side helped clients select the best fit, balancing performance, safety, and cost with confidence.

Mark Luquire, EY Global Microsoft Alliance Co-Innovation Leader, Managing Director, Ernst & Young, LLP*

2. Evaluate agents continuously in development and production

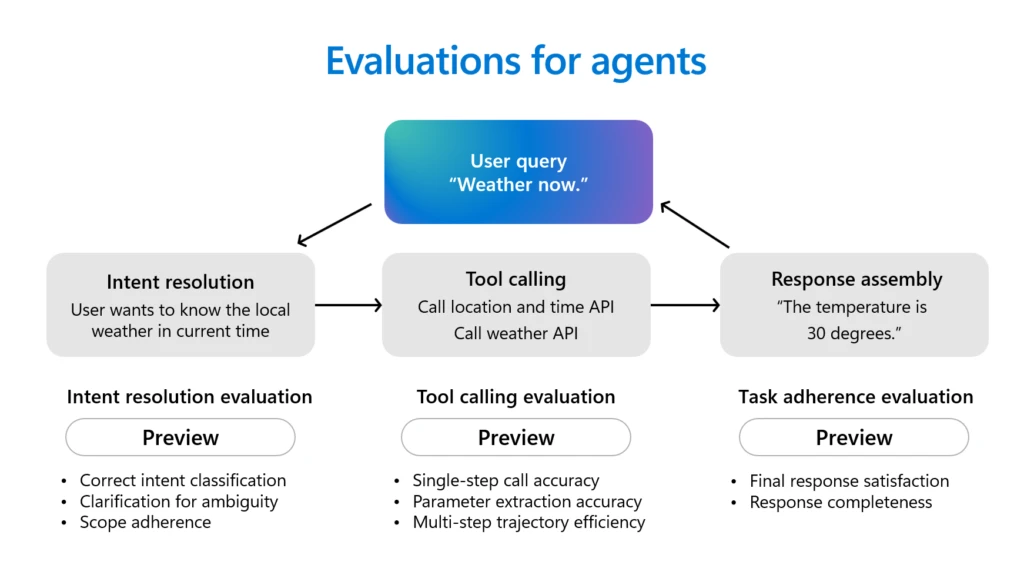

Agents are powerful productivity assistants. They can plan, make decisions, and execute actions. Agents typically first reason through user intents in conversations, then select the correct tools to call to fulfill user requests, and complete various tasks according to their instructions. Before deploying agents, it’s critical to evaluate their behavior and performance.

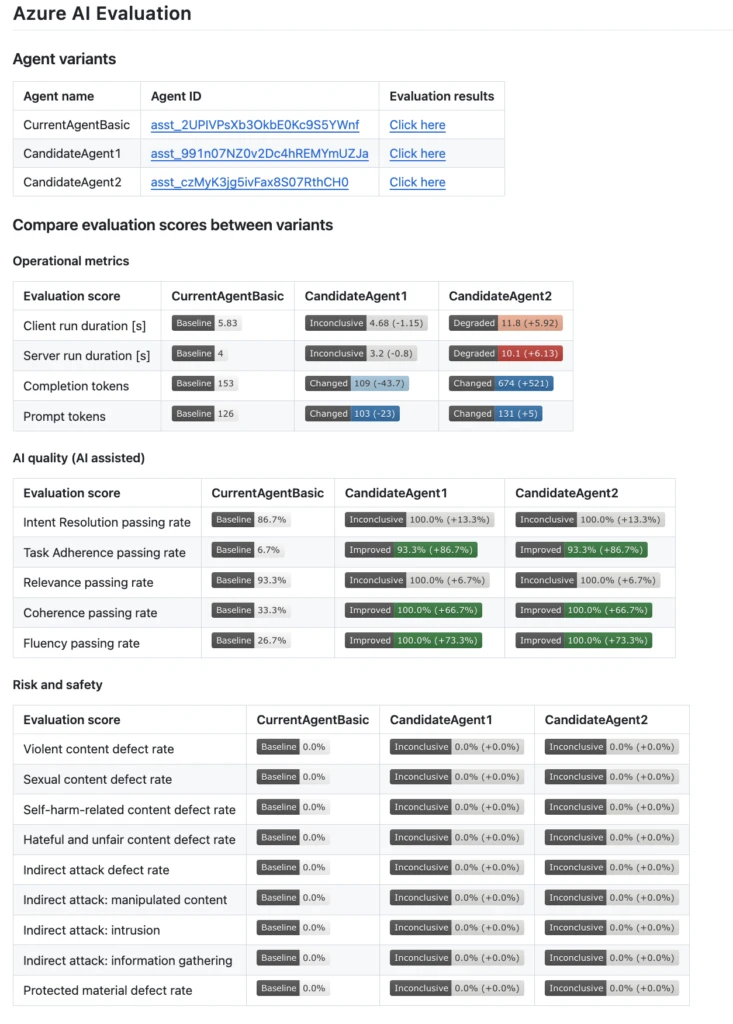

Azure AI Foundry makes agent evaluation easier with several agent evaluators supported out of the box, including Intent Resolution (how accurately the agent identifies and addresses user intentions), Task Adherence (how well the agent follows through on identified tasks), Tool Call Accuracy (how effectively the agent selects and uses tools), and Response Completeness (whether the agent’s response includes all necessary information). Beyond agent evaluators, Azure AI Foundry also provides a comprehensive suite of evaluators for broader assessments of AI quality, risk, and safety. These include quality dimensions such as relevance, coherence, and fluency, along with risk and safety checks for code vulnerabilities, violence, self-harm, sexual content, hate, unfairness, indirect attacks, and the use of protected materials. The Azure AI Foundry Agents Playground brings these evaluation and tracing tools together in one place, letting you test, debug, and improve agentic AI efficiently.
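Foundry’s Tool Call Accuracy evaluator is a built-in service feature. The deterministic sketch below is not that evaluator. It is a simplified, hypothetical stand-in that compares the tool calls an agent actually made against a reference set for one test case, matching on tool name plus arguments and ignoring order. The function name, the `{"name", "arguments"}` call format, and the tools `lookup_order`, `issue_refund`, and `send_email` are all assumptions made for illustration.

```python
from collections import Counter


def tool_call_accuracy(expected: list, actual: list) -> dict:
    """Compare expected vs. actual tool calls by (name, arguments), ignoring call order."""

    def key(call: dict):
        # Sort argument items so {"a": 1, "b": 2} and {"b": 2, "a": 1} match.
        return (call["name"], tuple(sorted(call.get("arguments", {}).items())))

    exp, act = Counter(map(key, expected)), Counter(map(key, actual))
    matched = sum((exp & act).values())  # multiset intersection
    # Precision penalizes wrong or extra calls; recall penalizes missing calls.
    precision = matched / len(actual) if actual else 1.0
    recall = matched / len(expected) if expected else 1.0
    return {"matched": matched, "precision": precision, "recall": recall}


expected = [
    {"name": "lookup_order", "arguments": {"order_id": "A123"}},
    {"name": "issue_refund", "arguments": {"order_id": "A123", "amount": 20}},
]
actual = [
    {"name": "lookup_order", "arguments": {"order_id": "A123"}},
    {"name": "issue_refund", "arguments": {"order_id": "A123", "amount": 25}},  # wrong amount
    {"name": "send_email", "arguments": {"to": "user"}},                        # unrequested call
]
result = tool_call_accuracy(expected, actual)
print(result)
```

An exact-match metric like this is cheap and reproducible, but it cannot judge whether an alternative tool choice was still reasonable. That judgment is where model-graded evaluators are typically used instead.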

The robust evaluation tools in Azure AI Foundry help our developers continuously assess the performance and accuracy of our AI models, including meeting standards for coherence, fluency, and groundedness.

3. Integrate evaluations into your CI/CD pipelines

Automated evaluations should be part of your CI/CD pipeline so that every code change is tested for quality and safety before release. This approach helps teams catch regressions early and helps ensure agents remain reliable as they evolve.

Azure AI Foundry integrates with your CI/CD workflows through GitHub Actions and Azure DevOps extensions. You can auto-evaluate agents on every commit, compare versions using built-in quality, performance, and safety metrics, and use confidence intervals and significance tests to support decisions, helping ensure that each iteration of your agent is production ready.
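Confidence intervals help a CI gate separate a real regression from noise. A common approach is a paired percentile bootstrap over per-item evaluation scores. The sketch below is generic and is not how the Foundry GitHub Actions or Azure DevOps integrations compute their statistics. The function names, the 2,000-resample default, the zero-tolerance rule, and the 50-item pass/fail data are all hypothetical.

```python
import random
from statistics import mean


def paired_bootstrap_ci(baseline, candidate, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the mean per-item difference (candidate - baseline).

    Both lists must hold scores for the SAME evaluation items, in the same order.
    """
    if len(baseline) != len(candidate) or not baseline:
        raise ValueError("need two equal-length, non-empty score lists")
    rng = random.Random(seed)  # fixed seed keeps CI runs reproducible
    n = len(baseline)
    item_diffs = [c - b for b, c in zip(baseline, candidate)]
    resampled = []
    for _ in range(n_resamples):
        # Resample evaluation items with replacement and average their differences.
        resampled.append(mean(item_diffs[rng.randrange(n)] for _ in range(n)))
    resampled.sort()
    lo = resampled[int(alpha / 2 * n_resamples)]
    hi = resampled[min(n_resamples - 1, int((1 - alpha / 2) * n_resamples))]
    return lo, hi


def regression_gate(baseline, candidate, tolerance=0.0):
    """Fail only when the whole CI lies below -tolerance, i.e. a statistically clear regression."""
    lo, hi = paired_bootstrap_ci(baseline, candidate)
    return hi >= -tolerance, (lo, hi)


# Hypothetical pass (1) / fail (0) results on the same 50 test cases.
baseline = [1] * 45 + [0] * 5
candidate = [1] * 30 + [0] * 20

passed, (lo, hi) = regression_gate(baseline, candidate)
print(f"pass={passed} CI=({lo:.2f}, {hi:.2f})")
```

In a pipeline step, exiting with a non-zero status when `passed` is False is what would block the merge. Pairing by test case removes per-item difficulty from the comparison, which typically gives tighter intervals than comparing two independent pass rates.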

We’ve integrated Azure AI Foundry evaluations directly into our GitHub Actions workflow, so every code change to our AI agents is automatically tested before deployment. This setup helps us quickly catch regressions and maintain high quality as we iterate on our models and features.

Justin Layne Hofer, Senior Software Engineer, Veeam

4. Scan for vulnerabilities with AI red teaming before production

Safety and security are non-negotiable. Before deployment, proactively test agents for security and safety risks by simulating adversarial attacks. Red teaming helps uncover vulnerabilities that could be exploited in real-world scenarios, strengthening agent robustness.

Azure AI Foundry’s AI Red Teaming Agent automates adversarial testing, measuring risk and generating readiness reports. It enables teams to simulate attacks and validate both individual agent responses and complex workflows for production readiness.
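A readiness report usually boils down to how often simulated attacks succeeded, broken out by risk category. The sketch below computes an overall and per-category attack success rate from a list of attack outcomes. The record format, the category names, and the 10% readiness threshold are hypothetical, and the code does not call the Red Teaming Agent or parse its reports.

```python
from collections import defaultdict


def attack_success_rate(results):
    """Return (overall ASR, per-category ASR).

    Each result is a dict with 'category' and 'attack_succeeded' (bool).
    """
    totals, successes = defaultdict(int), defaultdict(int)
    for r in results:
        totals[r["category"]] += 1
        successes[r["category"]] += bool(r["attack_succeeded"])
    per_category = {c: successes[c] / totals[c] for c in totals}
    overall = sum(successes.values()) / sum(totals.values()) if totals else 0.0
    return overall, per_category


# Hypothetical outcomes from a small simulated red-teaming run.
results = [
    {"category": "violence", "attack_succeeded": False},
    {"category": "violence", "attack_succeeded": True},
    {"category": "hate_unfairness", "attack_succeeded": False},
    {"category": "self_harm", "attack_succeeded": False},
]
overall, per_category = attack_success_rate(results)

# Hypothetical readiness rule: no single category may exceed 10% ASR.
ready = max(per_category.values()) <= 0.10
print(overall, per_category, ready)
```

Gating on the worst category rather than the overall rate prevents a strong result in one category from masking a weakness in another.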

Accenture is already testing the Microsoft AI Red Teaming Agent, which simulates adversarial prompts and proactively detects model and application risk posture. This tool will help validate not only individual agent responses, but also full multi-agent workflows in which cascading logic might produce unintended behavior from a single adversarial user. Red teaming lets us simulate worst-case scenarios before they ever hit production. That changes the game.

Nayanjyoti Paul, Associate Director and Chief Azure Architect for Gen AI, Accenture

5. Monitor agents in production with tracing, evaluations, and alerts

Continuous monitoring after deployment is essential to catch issues, performance drift, or regressions in real time. Using evaluations, tracing, and alerts helps maintain agent reliability and compliance throughout the agent’s lifecycle.

Azure AI Foundry Observability enables continuous monitoring of agentic AI through a unified dashboard powered by Azure Monitor Application Insights and Azure Workbooks. This dashboard provides real-time visibility into performance, quality, safety, and resource utilization. You can run continuous evaluations on live traffic, set alerts to detect drift or regressions, and trace every evaluation result for full-stack observability. With seamless navigation to Azure Monitor, you can customize dashboards, set up advanced diagnostics, and respond swiftly to incidents, helping you stay ahead of issues with precision and speed.
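A drift alert on continuous evaluations often compares a rolling average of live scores against a baseline. The sketch below shows that logic in plain Python. In Azure the equivalent would normally be configured as an Azure Monitor alert rule rather than written as code, and `DriftMonitor`, the 1-to-5 score scale, the baseline of 4.2, the 0.3 tolerance, and the window of 5 are all hypothetical values chosen for illustration.

```python
from collections import deque


class DriftMonitor:
    """Alert when the rolling mean of an online evaluation score drops below baseline - tolerance."""

    def __init__(self, baseline: float, tolerance: float, window: int) -> None:
        self.baseline = baseline
        self.tolerance = tolerance
        self.scores = deque(maxlen=window)  # keeps only the most recent `window` scores

    def observe(self, score: float) -> bool:
        """Record one evaluation score; return True if the alert condition is met."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough data for a full window yet
        rolling = sum(self.scores) / len(self.scores)
        return rolling < self.baseline - self.tolerance


# Hypothetical per-response quality scores (1-5 scale) from live traffic.
monitor = DriftMonitor(baseline=4.2, tolerance=0.3, window=5)
scores = [4.5, 4.1, 4.3, 4.0, 4.2, 3.6, 3.5, 3.4]
alerts = [monitor.observe(s) for s in scores]
print(alerts)
```

The window size trades responsiveness for stability. A short window reacts quickly but can fire on a few bad responses, while a long window smooths noise but detects drift later.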

Security is paramount for our large enterprise customers, and our collaboration with Microsoft allays any concerns. With Azure AI Foundry, we have the observability and control we want over our infrastructure and can deliver a highly secure environment to our customers.

Get started with Azure AI Foundry for end-to-end agent observability

To summarize, traditional observability includes metrics, logs, and traces. Agent observability needs metrics, traces, logs, evaluations, and governance for full visibility. Azure AI Foundry Observability is a unified solution for agent governance, evaluation, tracing, and monitoring, all built into your AI development lifecycle. With tools like the Agents Playground, smooth CI/CD integration, and governance integrations, Azure AI Foundry Observability empowers teams to ensure their AI agents are reliable, safe, and production ready. Learn more about Azure AI Foundry Observability and get full visibility into your agents today!

What’s next

In part four of the Agent Factory series, we’ll focus on how you can go from prototype to production faster with developer tools and rapid agent development.


*The views reflected in this publication are the views of the speaker and do not necessarily reflect the views of the global EY organization or its member firms.